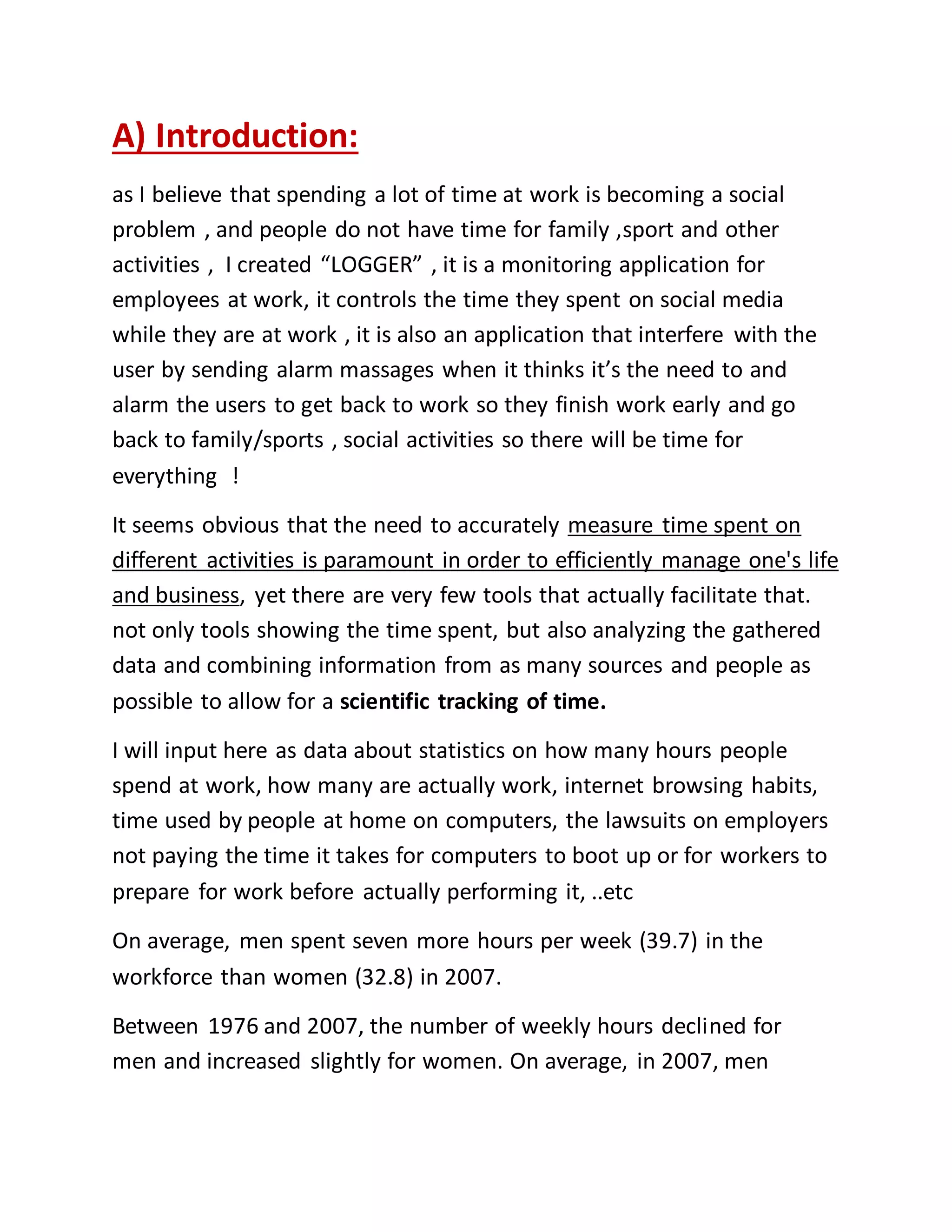

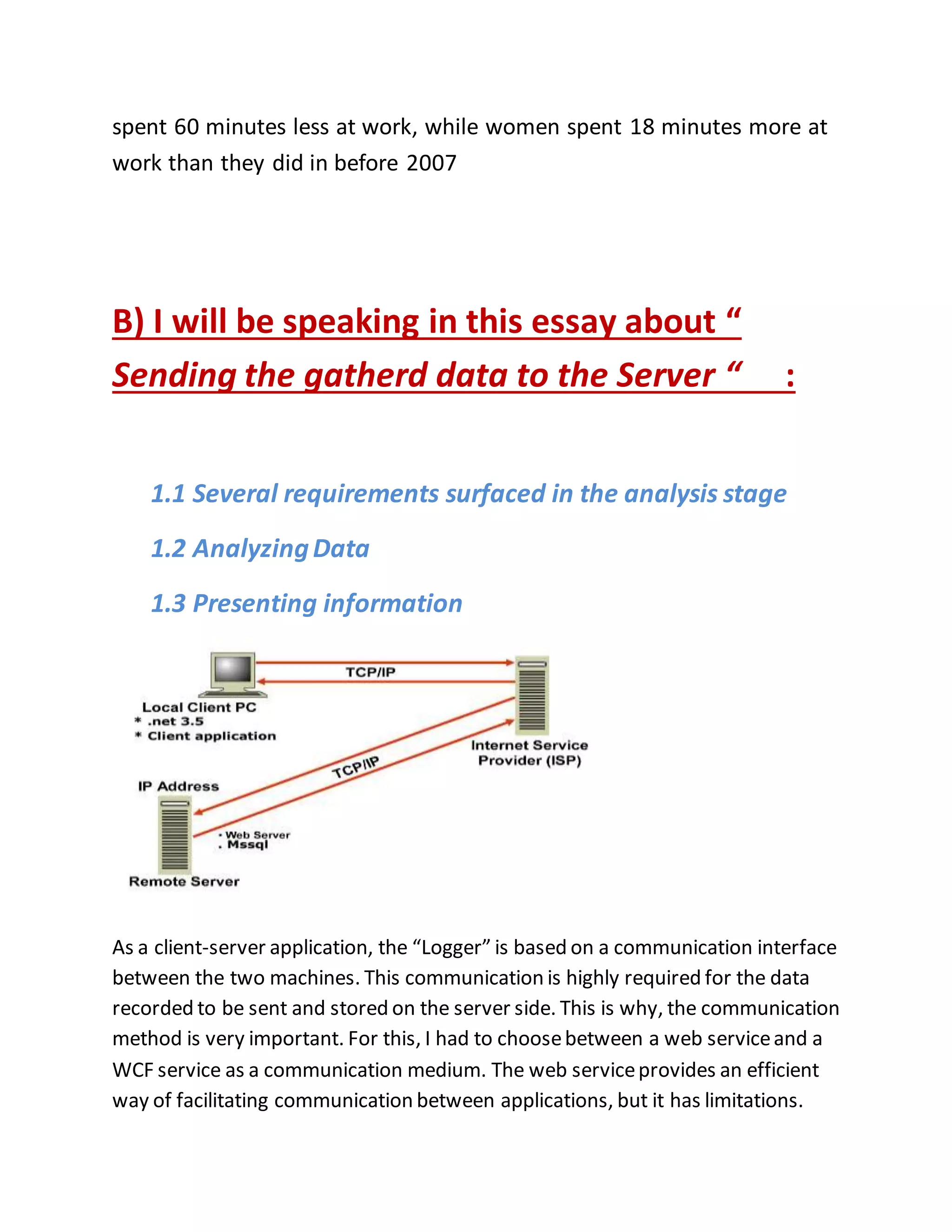

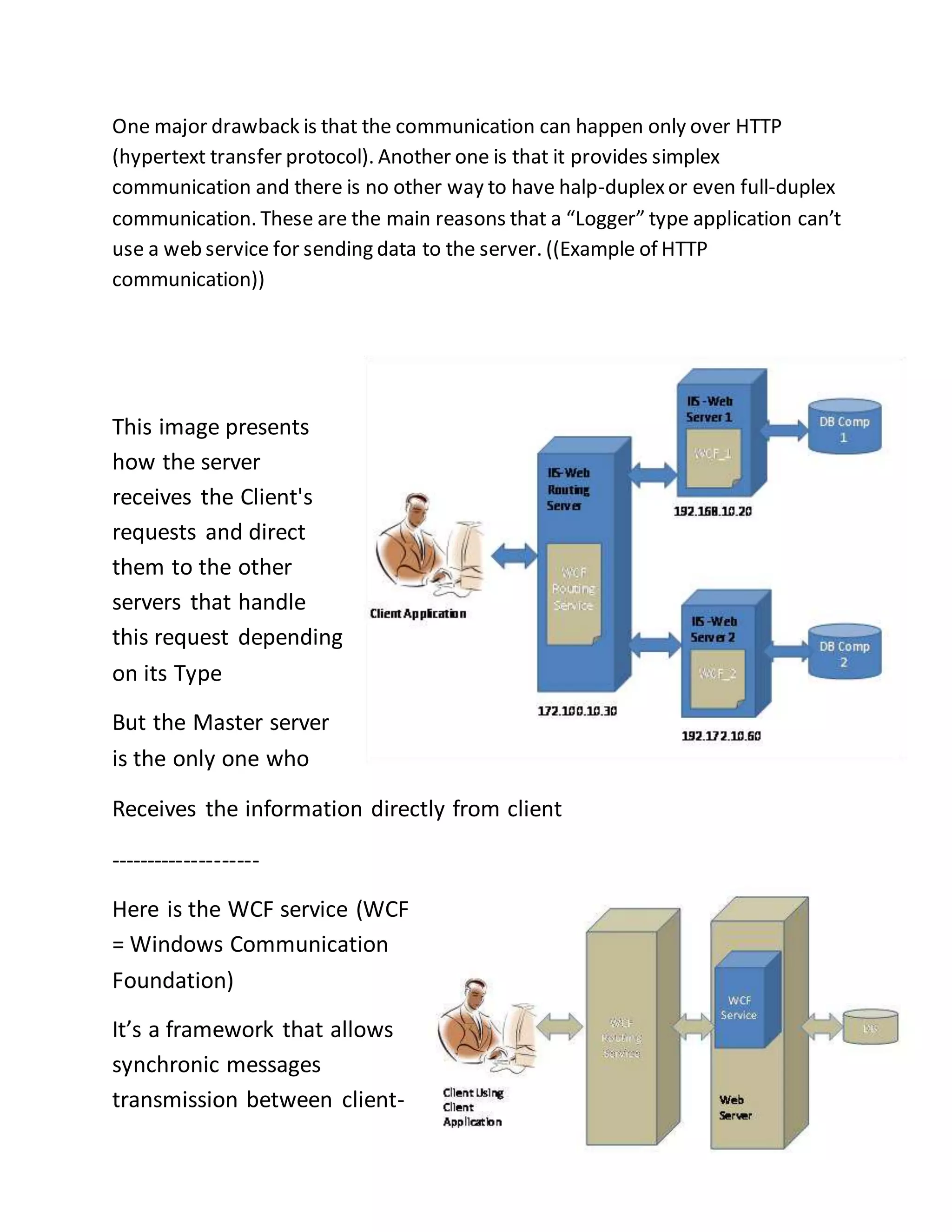

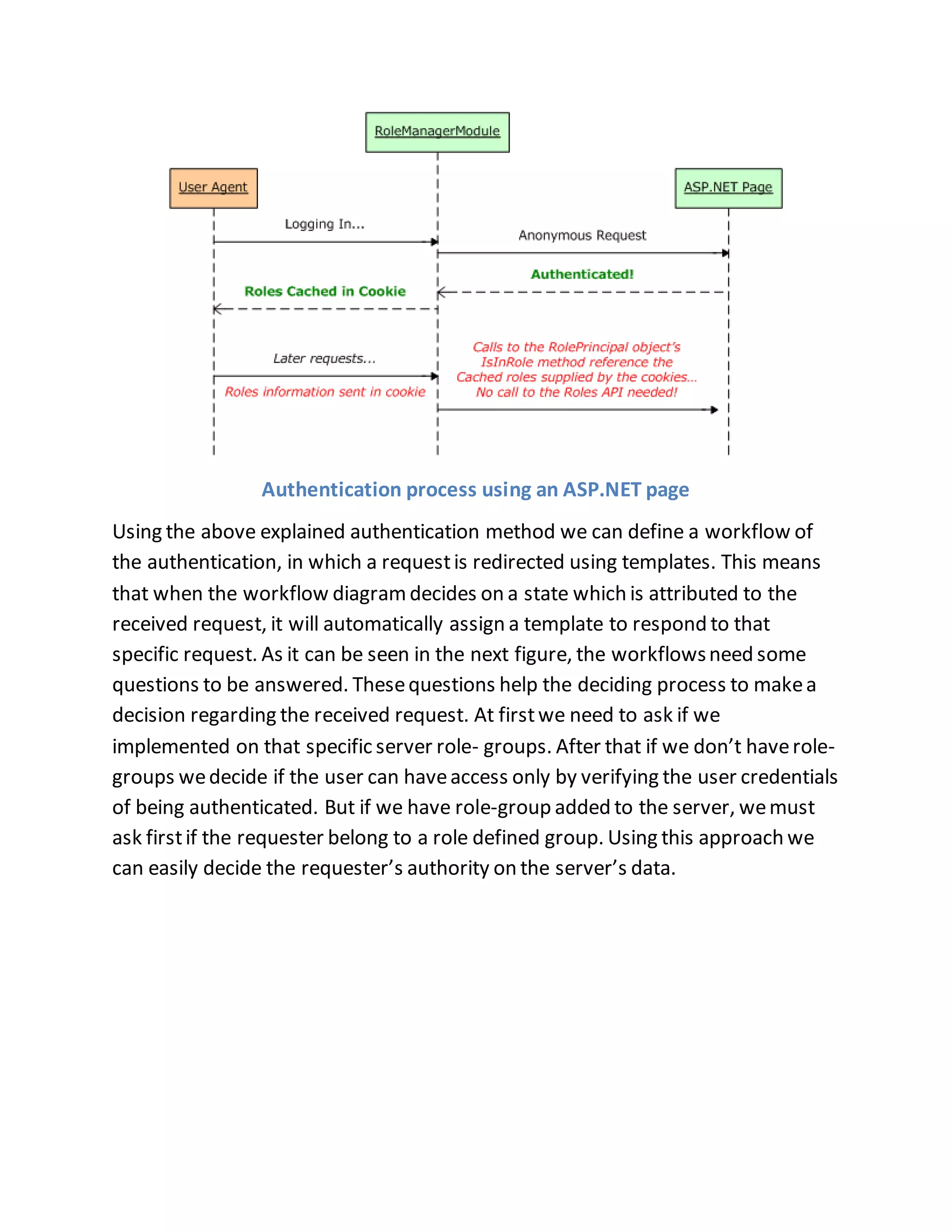

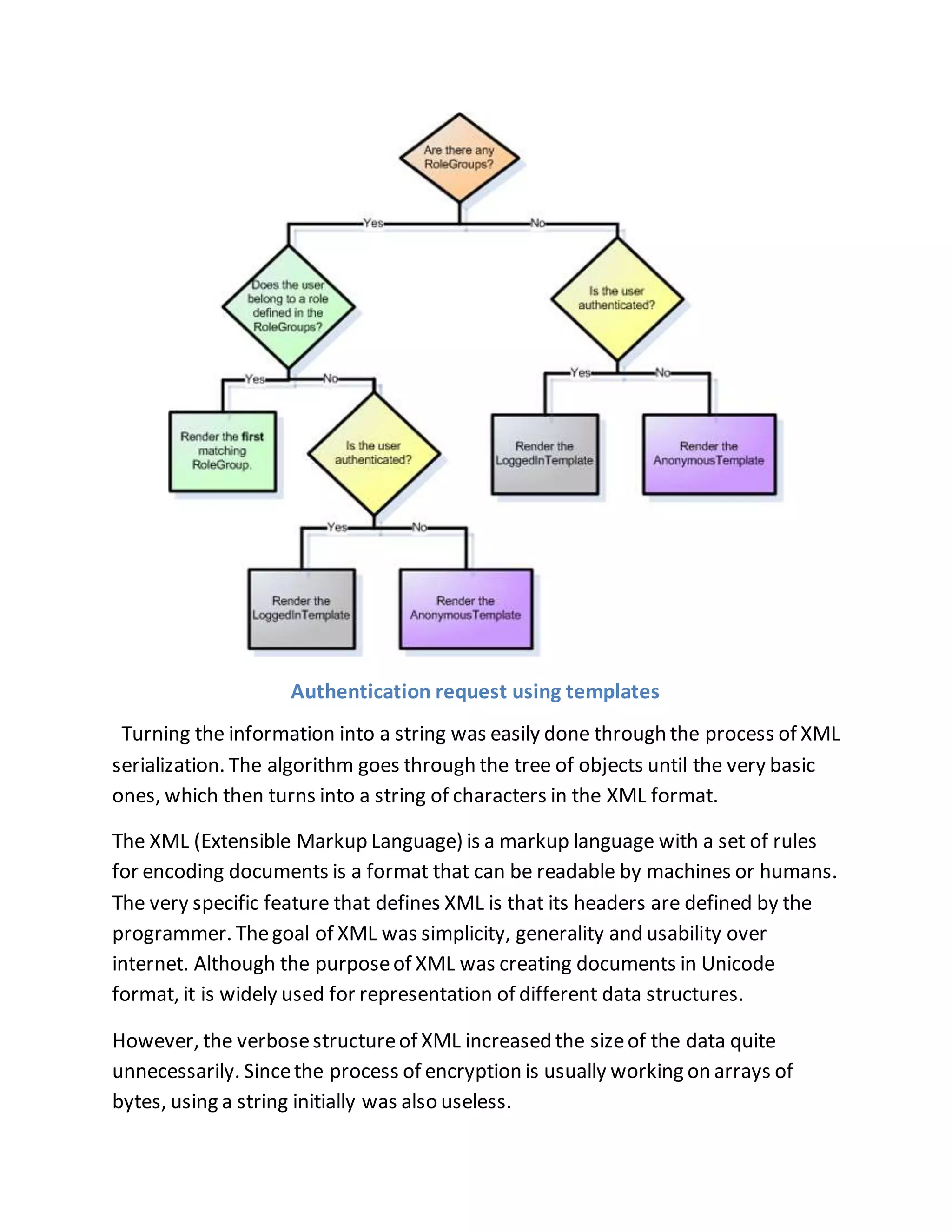

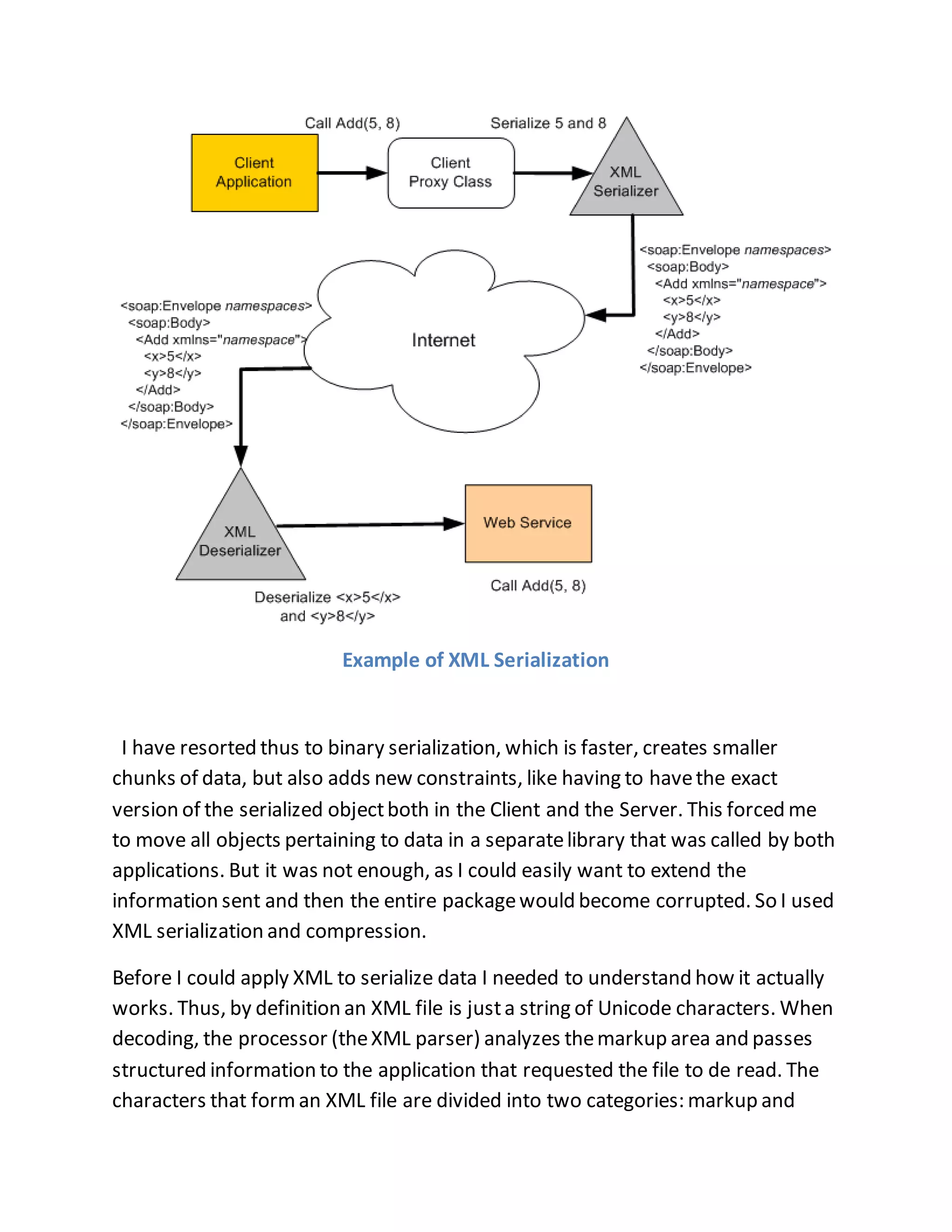

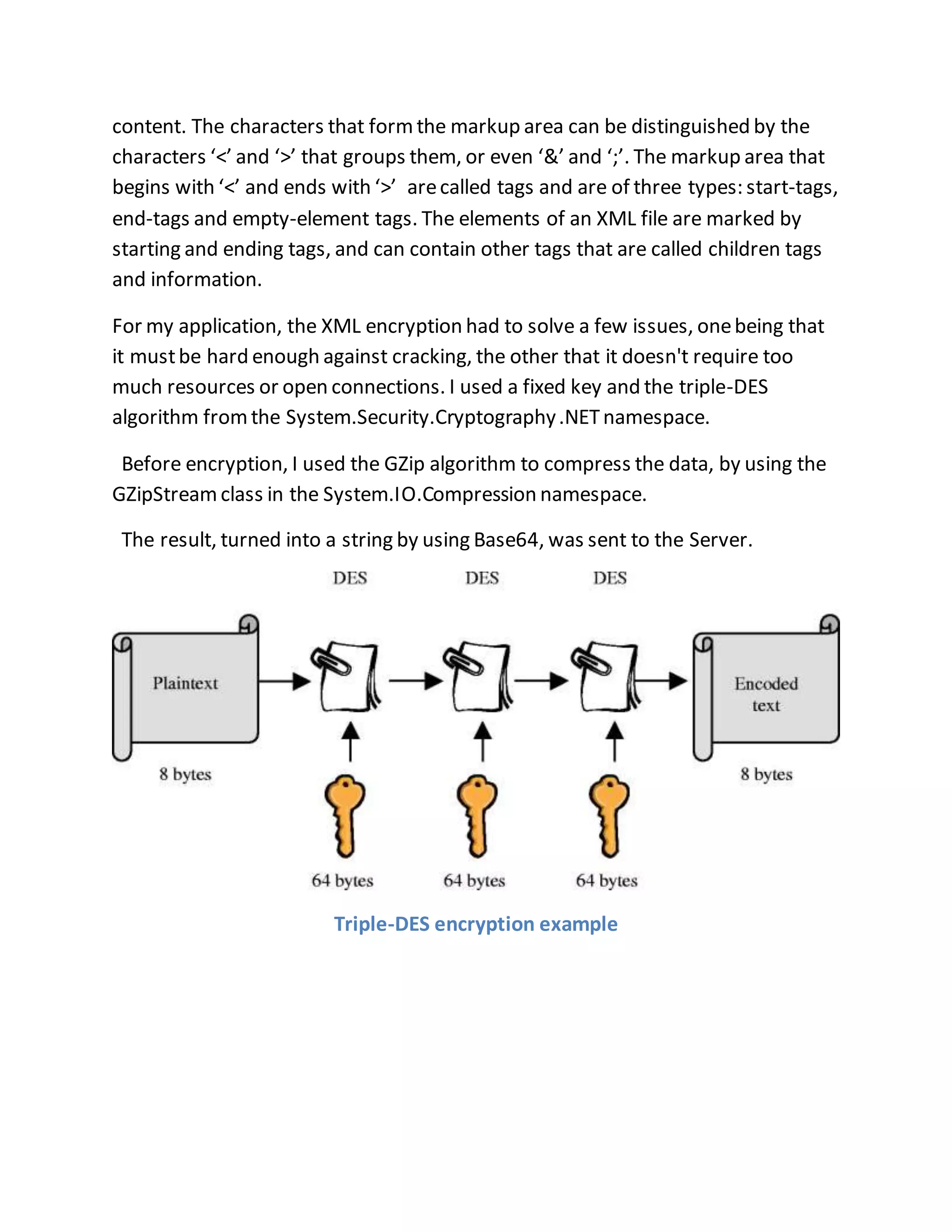

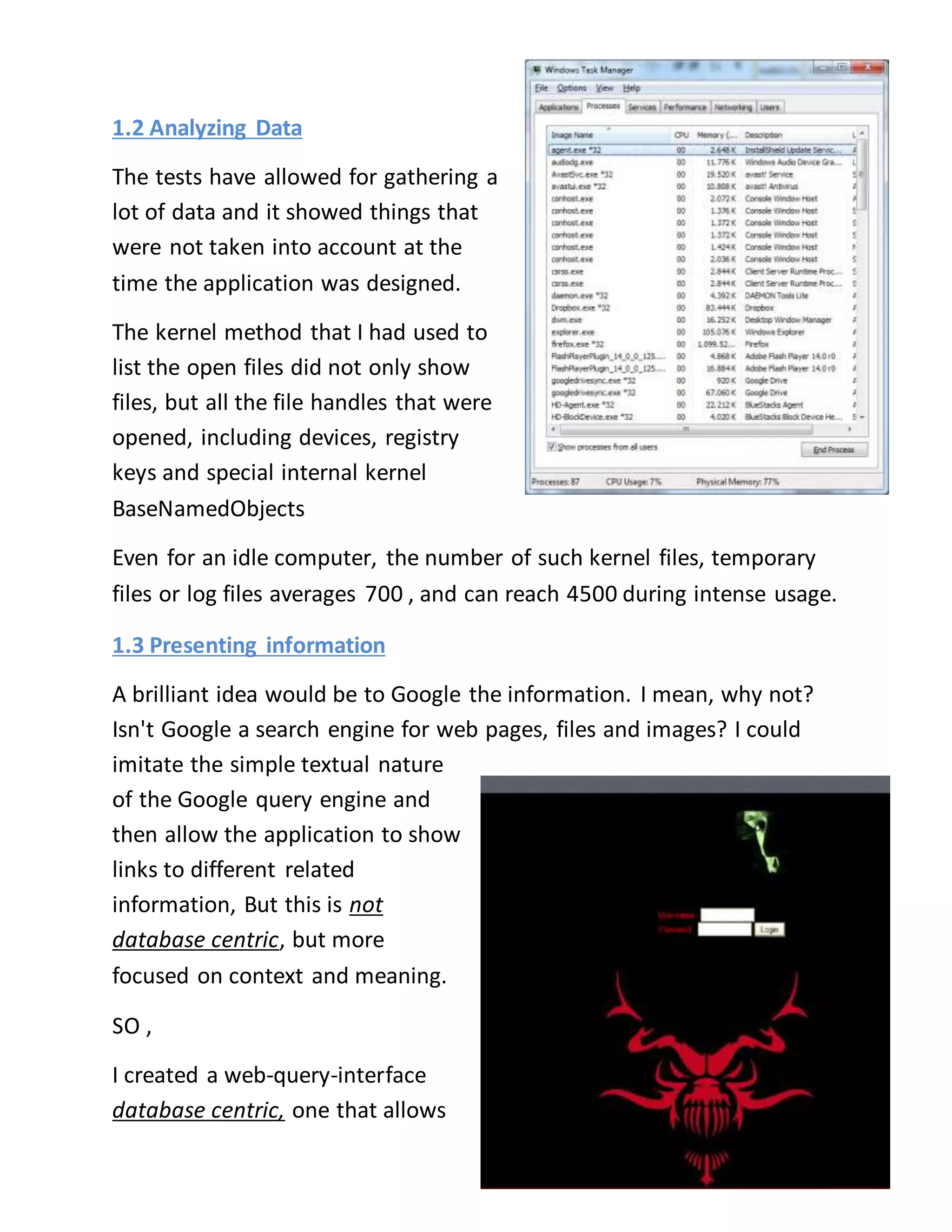

The document describes a PhD project titled "Using Log Files to Improve Work Efficiency." The project centers on a monitoring application called "logger," which tracks how much time employees spend on social media during working hours and sends them alerts to help them manage their time. The document outlines the options for sending data from the client to the server and compares traditional web services with Windows Communication Foundation (WCF) services. It settles on WCF because WCF supports duplex communication and offers flexibility in the choice of transport protocol. It also describes the data-management challenges the project encountered and explains why efficient data handling matters for overall system performance.
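The core idea behind "logger", which is to total the time recorded against social media in a log and raise an alert past some limit, can be illustrated with a minimal sketch. The summary does not give the log format, the list of tracked sites, or the alert rule, so everything below is hypothetical: the `LogEntry` record layout, the `SOCIAL_MEDIA_DOMAINS` set, and the 30-minute default limit in `should_alert` are assumptions chosen only for illustration. This sketch is not the project's actual implementation, and it leaves out the WCF client-server transport entirely.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical set of tracked domains; the project's actual list is not specified.
SOCIAL_MEDIA_DOMAINS = {"facebook.com", "twitter.com", "instagram.com"}


@dataclass
class LogEntry:
    """One hypothetical log record: the domain in focus from `start` to `end`."""
    domain: str
    start: datetime
    end: datetime


def is_social_media(domain: str) -> bool:
    """True for a tracked domain or any of its subdomains (e.g. www.facebook.com)."""
    return any(domain == d or domain.endswith("." + d) for d in SOCIAL_MEDIA_DOMAINS)


def social_media_time(entries: list[LogEntry]) -> timedelta:
    """Sum the durations of all entries whose domain counts as social media."""
    total = timedelta()
    for entry in entries:
        if is_social_media(entry.domain):
            total += entry.end - entry.start
    return total


def should_alert(entries: list[LogEntry], limit: timedelta = timedelta(minutes=30)) -> bool:
    """Hypothetical alert rule: alert once social media time exceeds `limit`."""
    return social_media_time(entries) > limit


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    log = [
        LogEntry("www.facebook.com", t0, t0 + timedelta(minutes=20)),
        LogEntry("example.org", t0 + timedelta(minutes=20), t0 + timedelta(minutes=60)),
        LogEntry("twitter.com", t0 + timedelta(minutes=60), t0 + timedelta(minutes=75)),
    ]
    print(social_media_time(log))  # 0:35:00
    print(should_alert(log))       # True
```

Subdomains are matched by suffix so that `www.facebook.com` counts toward `facebook.com` while an unrelated domain such as `notfacebook.com` does not. In a real deployment the limit and the domain list would more likely come from configuration than from constants.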