The document explains seasonal decomposition of time series data, highlighting the breakdown into trend, seasonal, and residual components for better pattern interpretation. It details methods for decomposition, provides a real-world example using airline passenger data, and compares traditional time series models like ARIMA with LSTM networks regarding their handling of long sequences. Additionally, it covers feature engineering techniques, the integration of exogenous variables, and the differences between traditional and time series cross-validation methods.

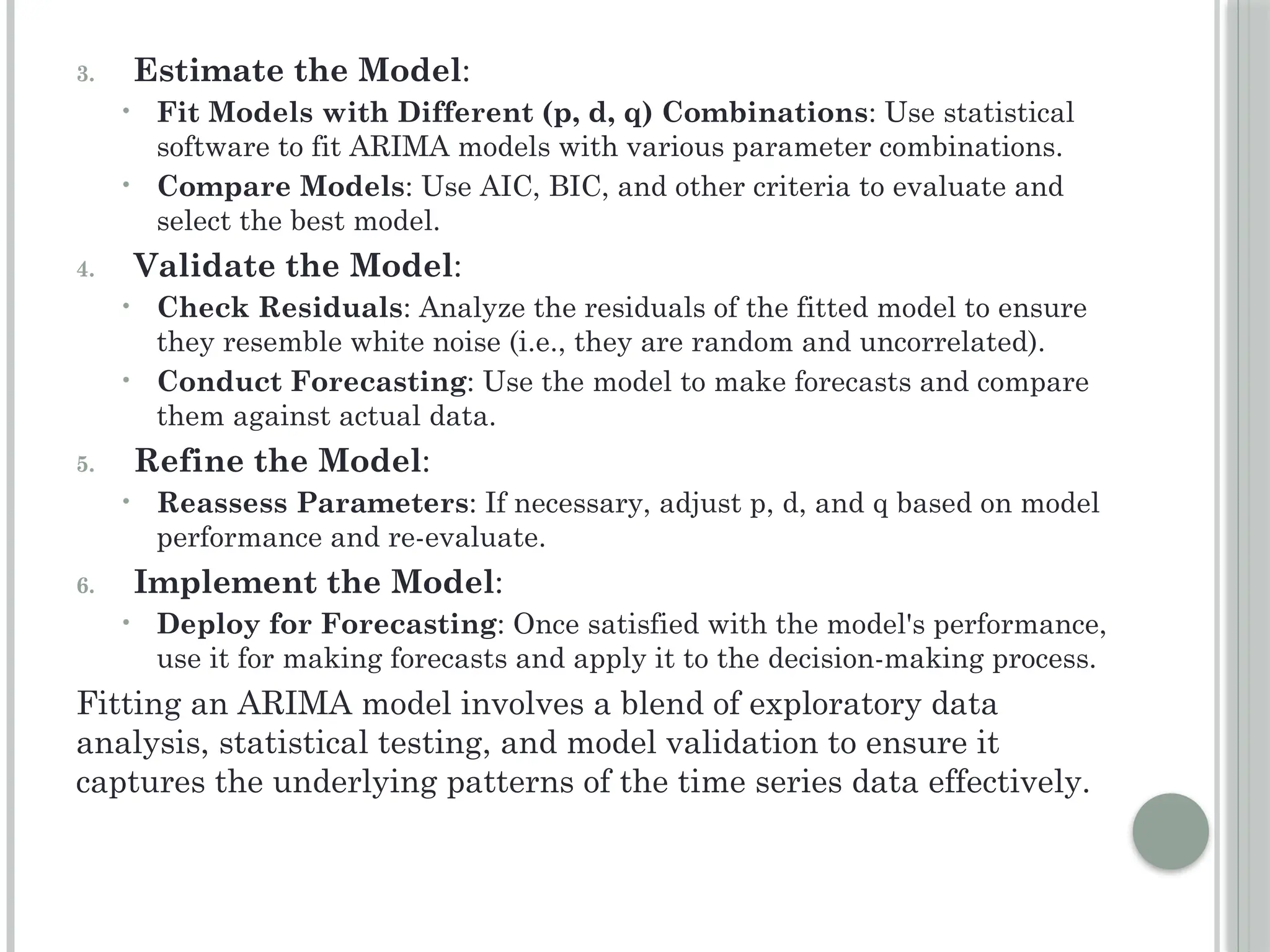

![Python Example with statsmodels

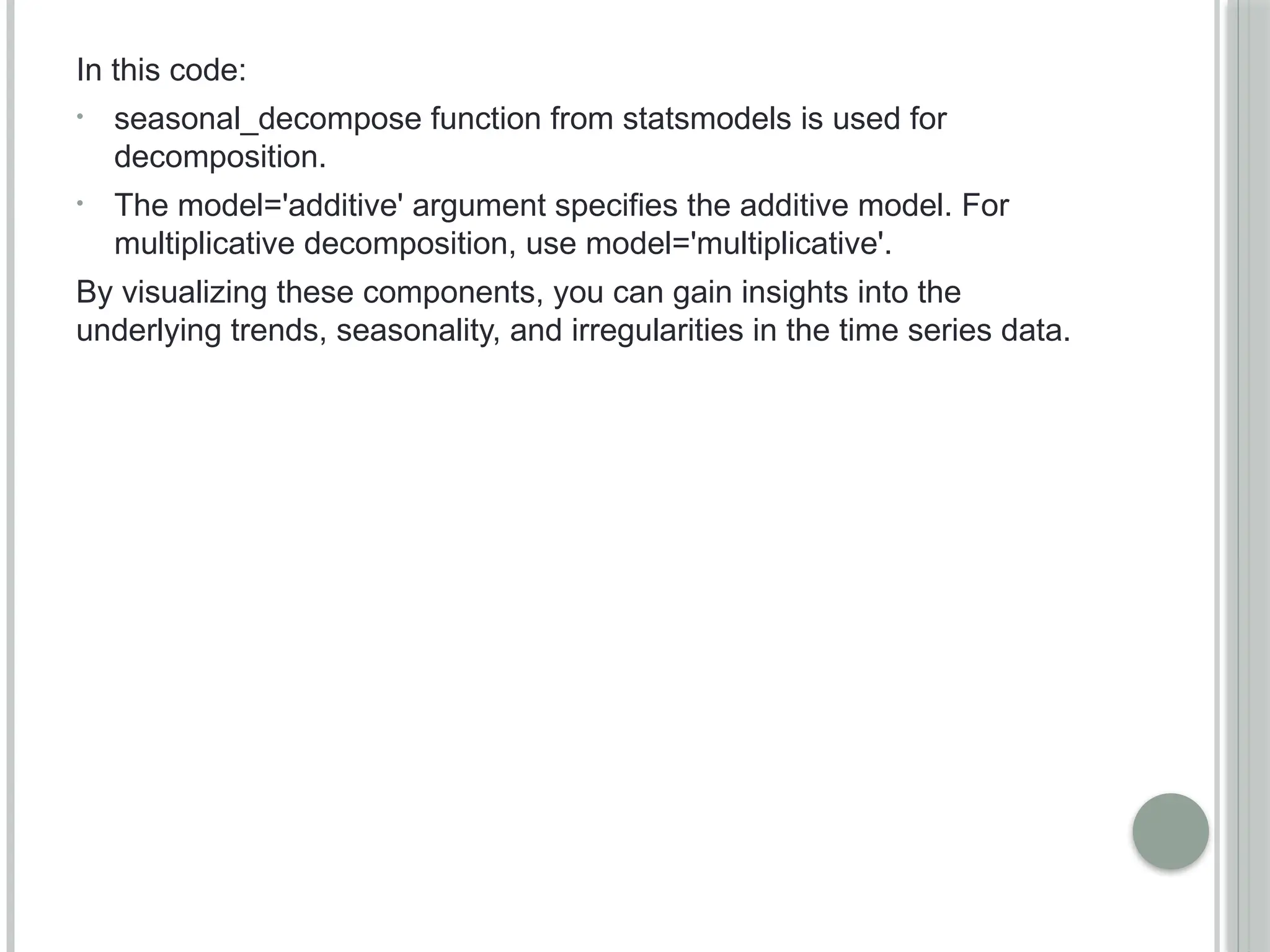

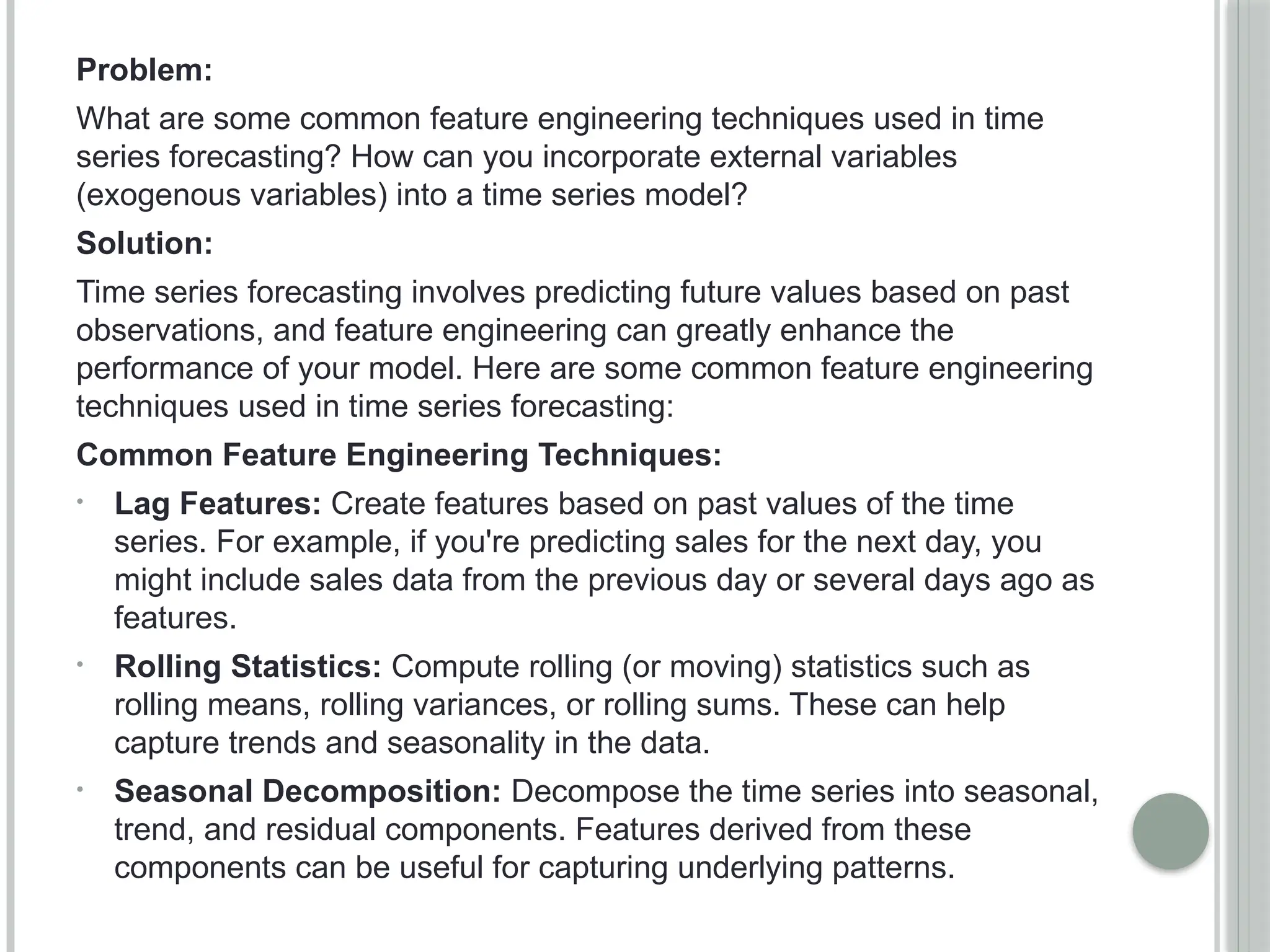

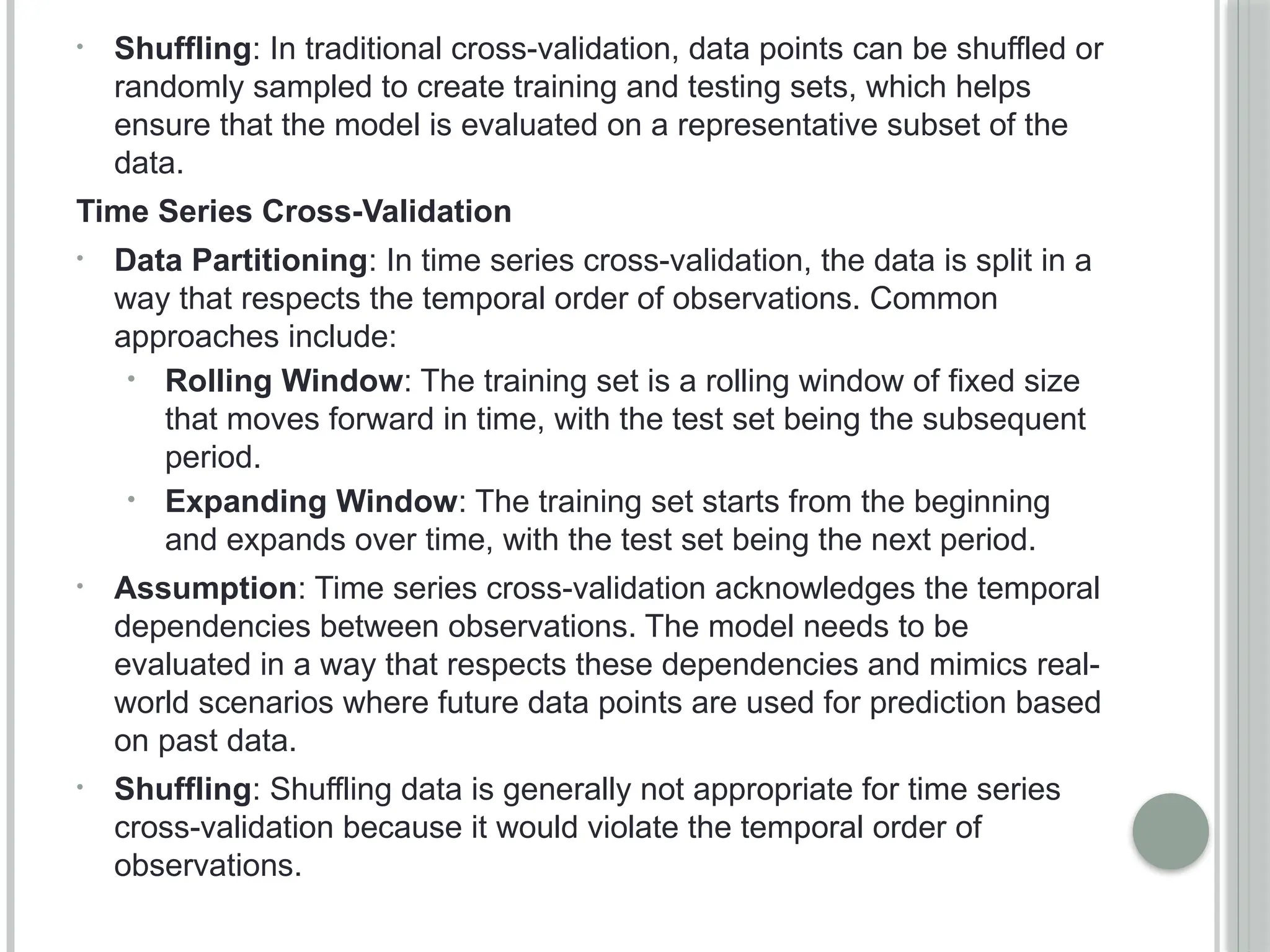

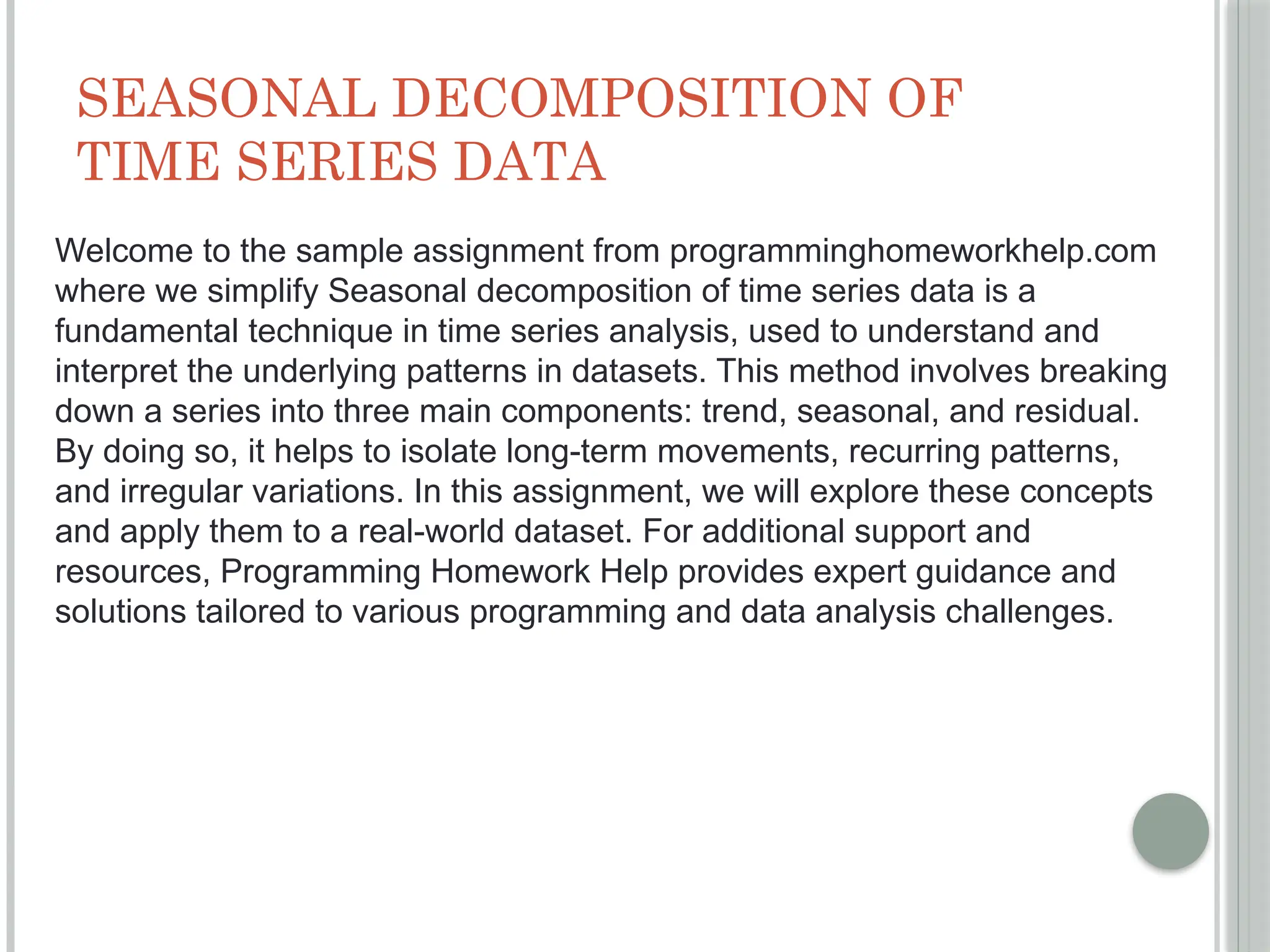

Here's a simple Python example using the statsmodels library to

decompose a time series:

import pandas as pd

import matplotlib.pyplot as plt

from statsmodels.tsa.seasonal import seasonal_decompose

# Load the dataset (example with airline passengers)

data = pd.read_csv('airline_passengers.csv', parse_dates=['Month'],

index_col='Month')

# Perform seasonal decomposition

decomposition = seasonal_decompose(data['Passengers'],

model='additive')

# Plot the decomposed components

plt.figure(figsize=(12, 8))](https://image.slidesharecdn.com/machinelearning-240809085849-ad41a623/75/Seasonal-Decomposition-of-Time-Series-Data-6-2048.jpg)

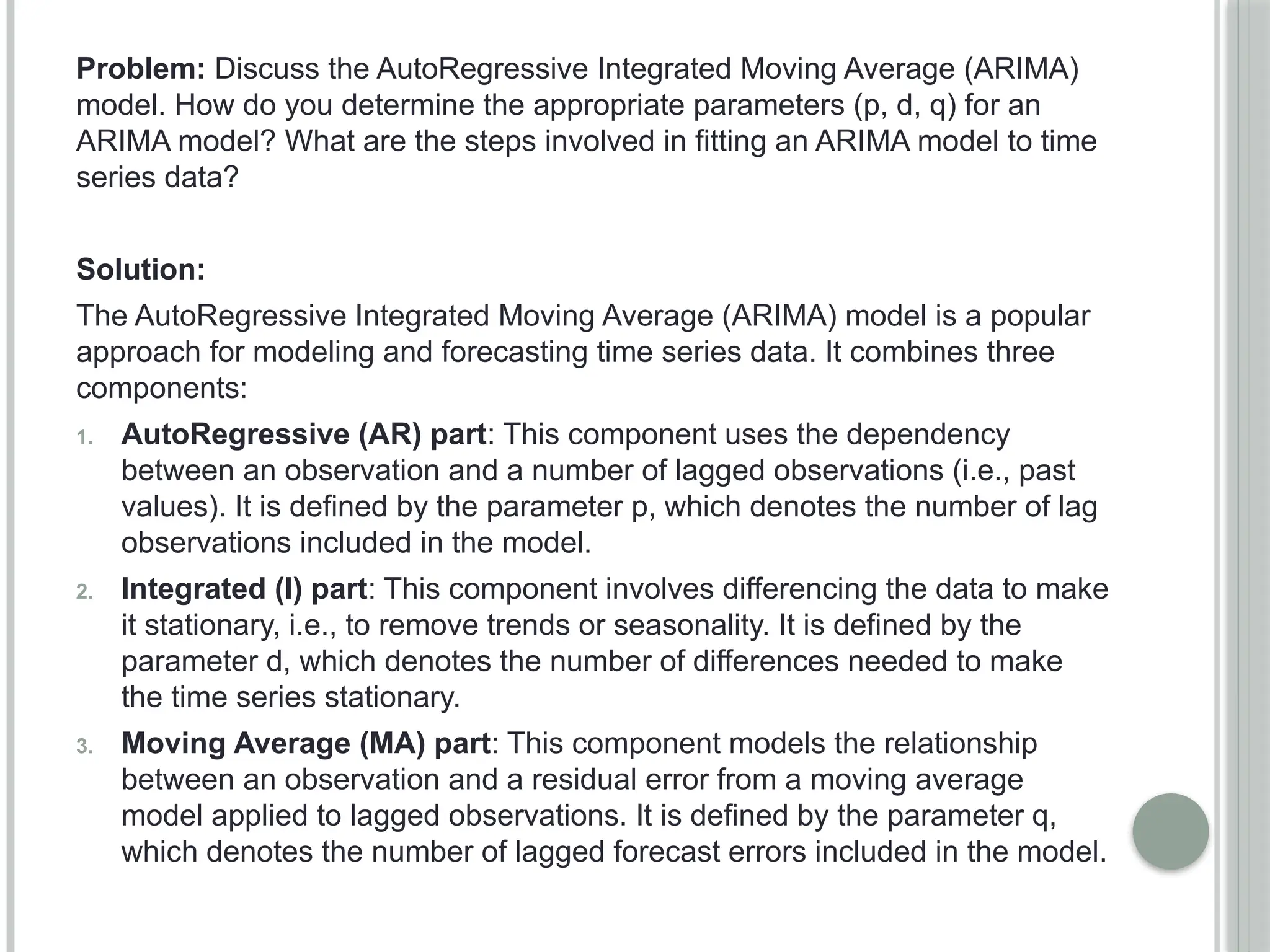

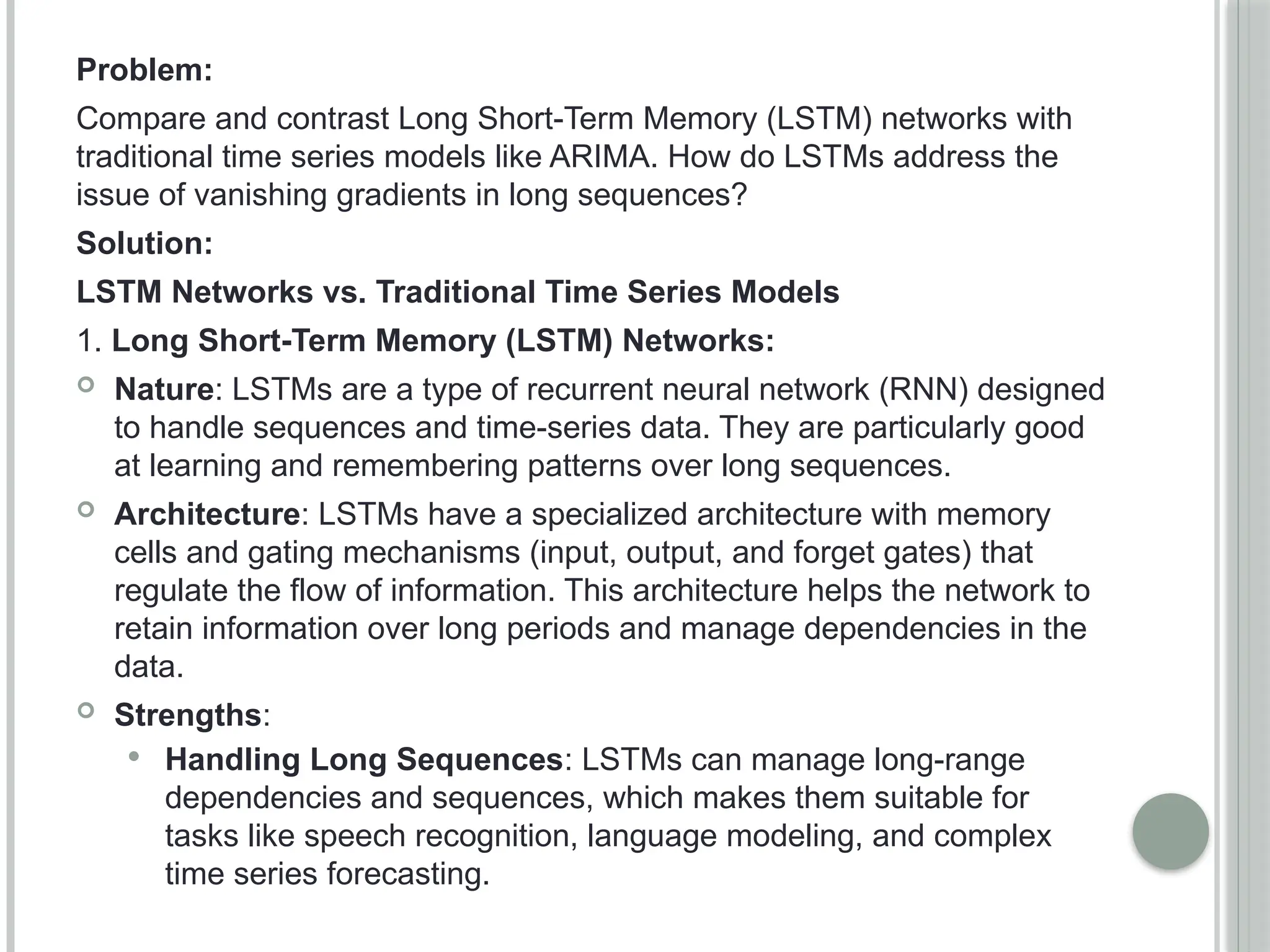

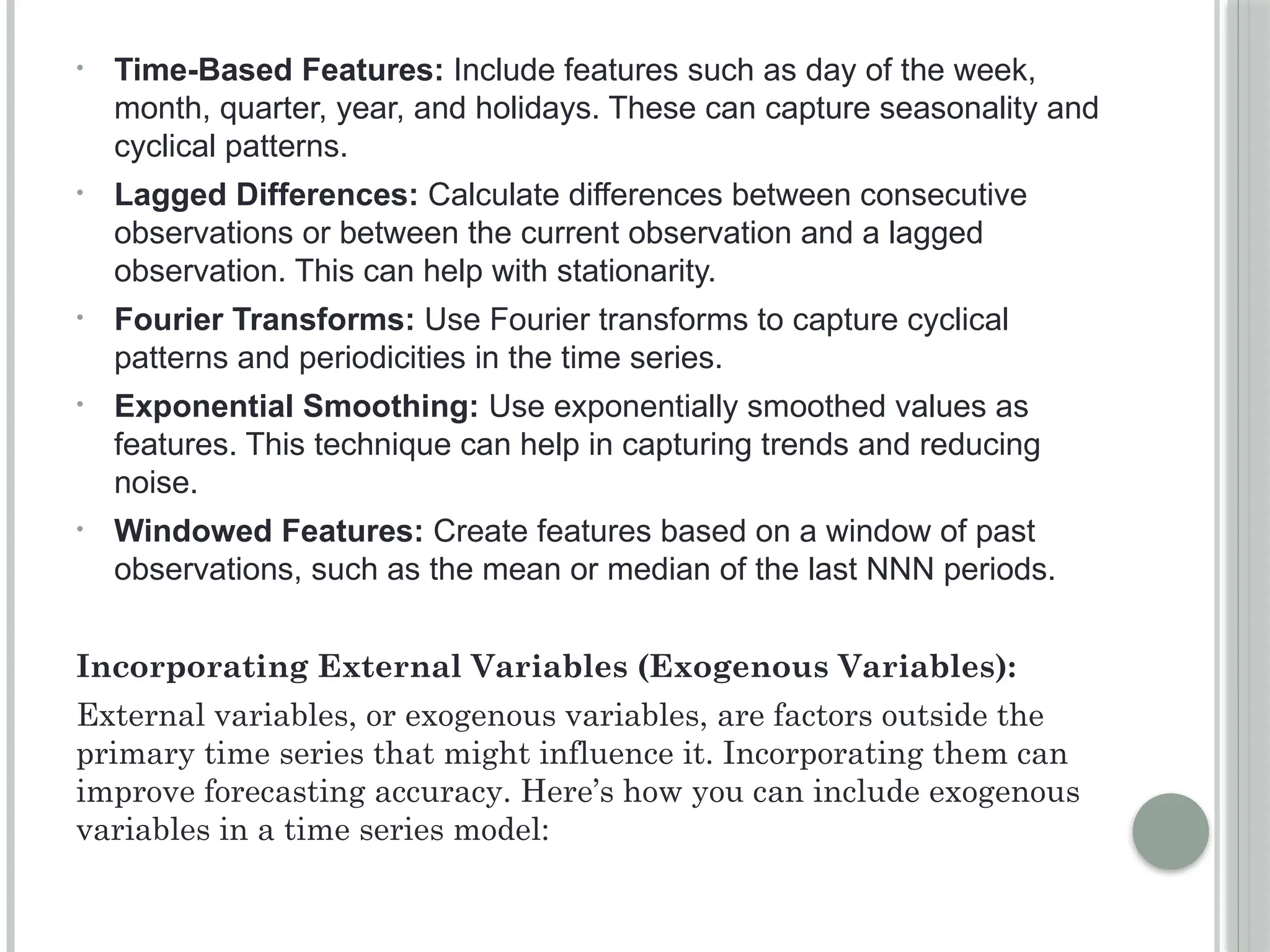

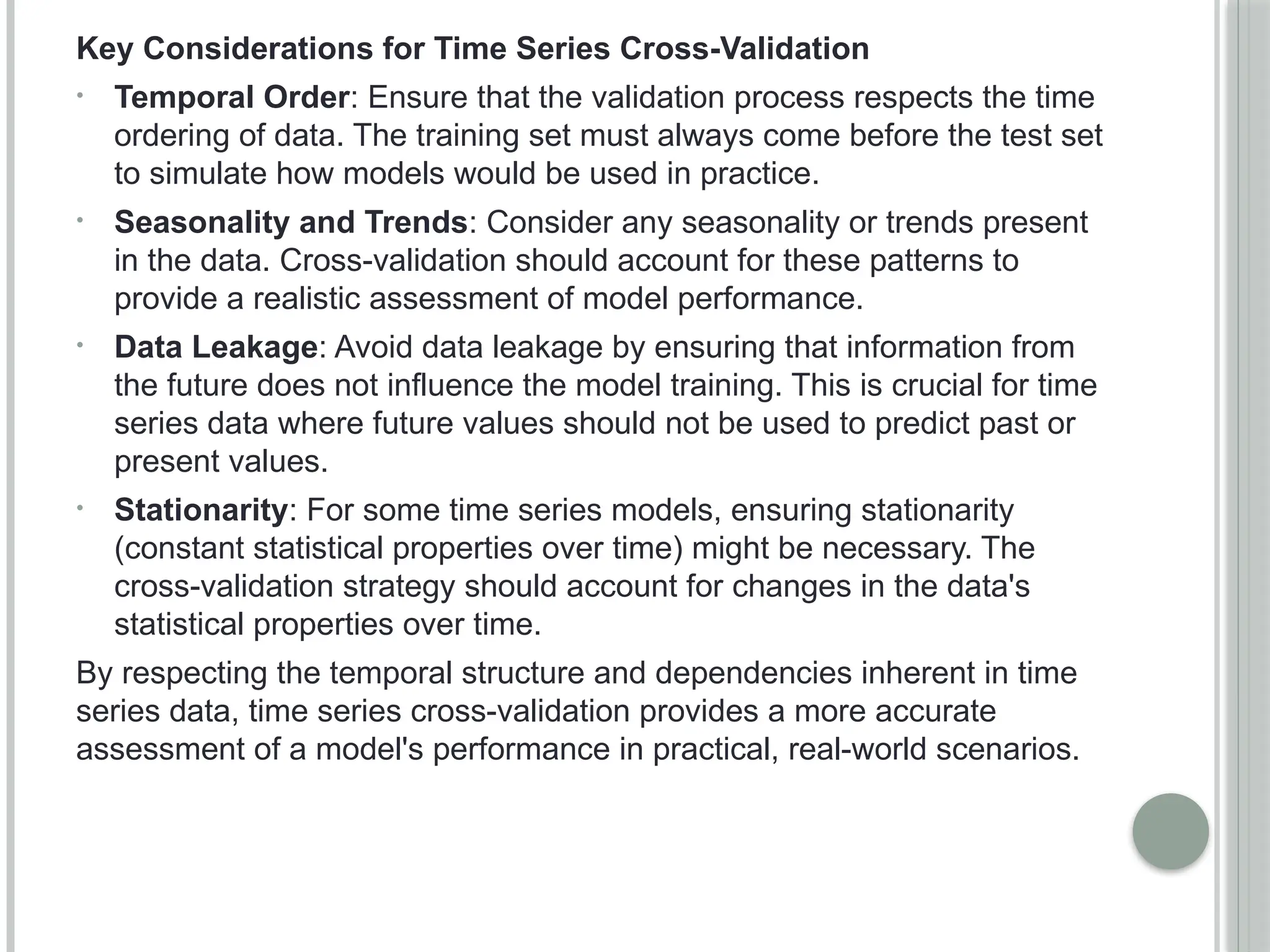

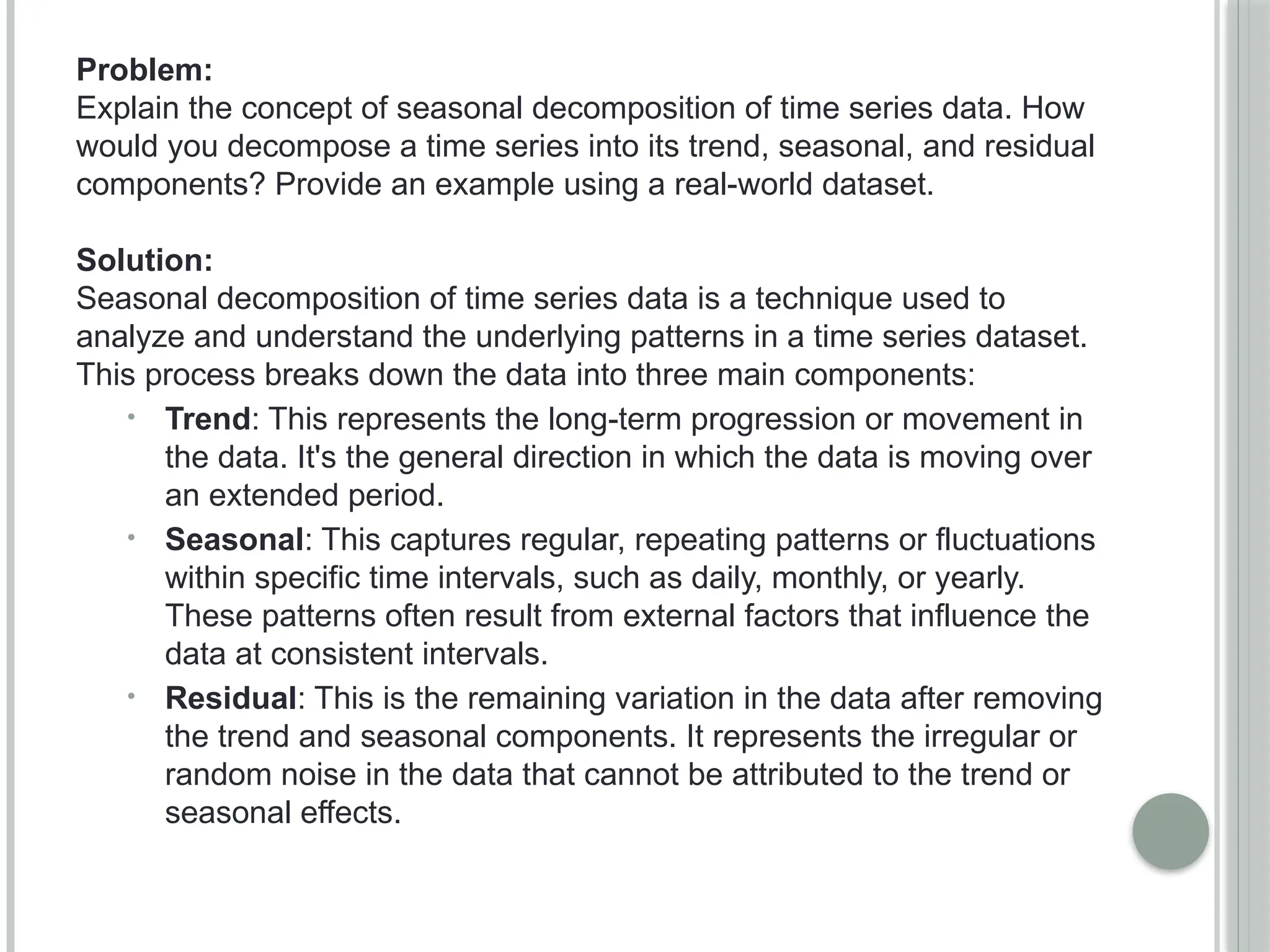

![plt.subplot(4, 1, 1)

plt.plot(data['Passengers'], label='Original')

plt.legend(loc='best')

plt.title('Original Series')

plt.subplot(4, 1, 2)

plt.plot(decomposition.trend, label='Trend')

plt.legend(loc='best')

plt.title('Trend Component')

plt.subplot(4, 1, 3)

plt.plot(decomposition.seasonal, label='Seasonal')

plt.legend(loc='best')

plt.title('Seasonal Component')

plt.subplot(4, 1, 4)

plt.plot(decomposition.resid, label='Residual')

plt.legend(loc='best')

plt.title('Residual Component')

plt.tight_layout()

plt.show()](https://image.slidesharecdn.com/machinelearning-240809085849-ad41a623/75/Seasonal-Decomposition-of-Time-Series-Data-7-2048.jpg)
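To make the trend, seasonal, and residual components more concrete, the following is a minimal pure-Python sketch of classical additive decomposition, which is roughly the procedure an additive `seasonal_decompose` call follows: a centered moving average estimates the trend, the detrended values are averaged per position in the cycle to get the seasonal component, and whatever is left is the residual. The function names `centered_moving_average` and `additive_decompose` and the synthetic series are illustrative assumptions; they are not part of statsmodels or of the airline dataset.

```python
import math


def centered_moving_average(values, period):
    """Estimate the trend with a centered moving average.

    For an even period, the two end points of the window get half weight
    (the usual "2 x period" average) so the window stays centered.
    Positions too close to either edge are left as None.
    """
    n = len(values)
    half = period // 2
    trend = [None] * n
    for t in range(half, n - half):
        window = values[t - half : t + half + 1]
        if period % 2 == 0:
            total = 0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]
        else:
            total = sum(window)
        trend[t] = total / period
    return trend


def additive_decompose(values, period):
    """Split values into trend + seasonal + residual (additive model)."""
    trend = centered_moving_average(values, period)

    # Remove the trend wherever it is defined.
    detrended = [
        v - tr if tr is not None else None for v, tr in zip(values, trend)
    ]

    # Average the detrended values at each position in the cycle.
    means = []
    for pos in range(period):
        vals = [
            d for i, d in enumerate(detrended)
            if d is not None and i % period == pos
        ]
        means.append(sum(vals) / len(vals))

    # Center the seasonal pattern so it averages to zero over one cycle.
    offset = sum(means) / period
    seasonal = [means[i % period] - offset for i in range(len(values))]

    # The residual is what trend and seasonality do not explain.
    resid = [
        v - tr - s if tr is not None else None
        for v, tr, s in zip(values, trend, seasonal)
    ]
    return trend, seasonal, resid


# Synthetic monthly series: linear trend plus a 12-month sine wave
# (illustrative data only, chosen so the expected components are known).
period = 12
series = [
    100 + 2 * t + 10 * math.sin(2 * math.pi * t / period) for t in range(48)
]
trend, seasonal, resid = additive_decompose(series, period)
```

Because the synthetic series has no noise, the recovered trend should follow `100 + 2 * t`, the seasonal component should reproduce the sine wave, and the residuals should be close to zero wherever the trend is defined. On real data such as the airline series, the residual panel is where any structure missed by the additive assumption would show up.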