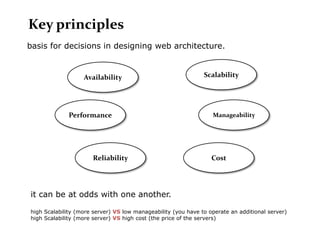

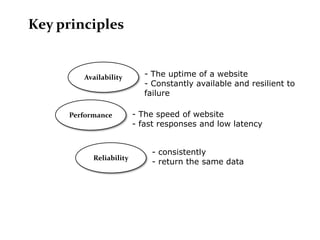

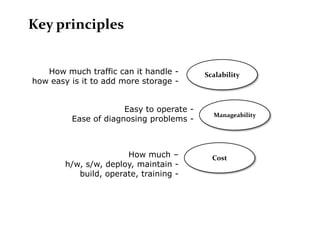

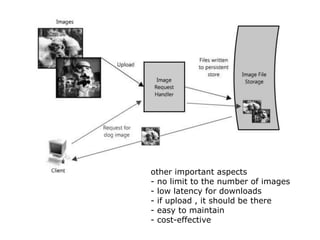

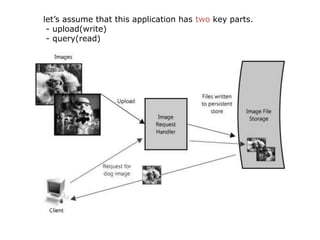

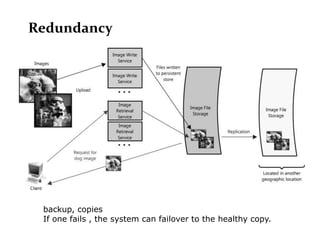

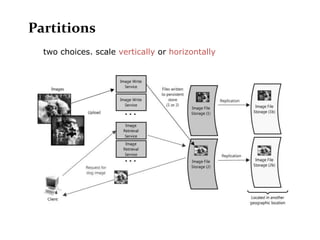

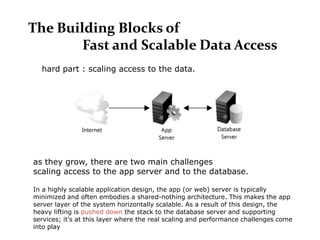

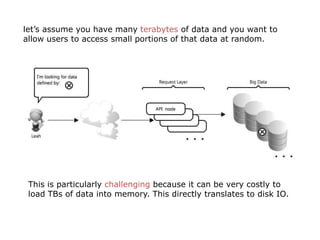

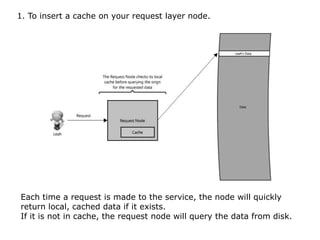

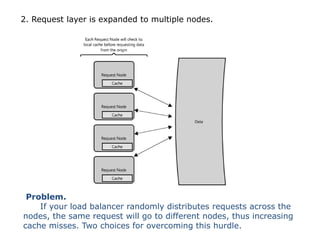

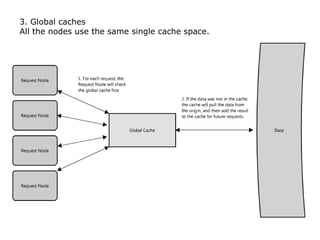

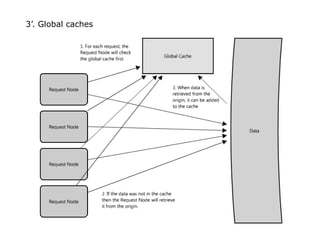

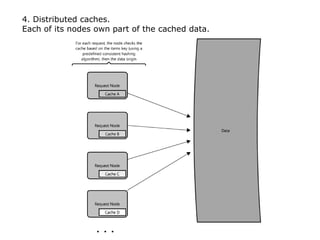

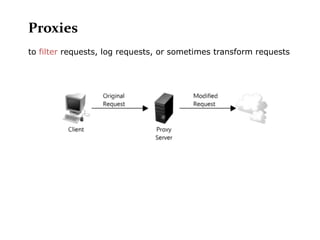

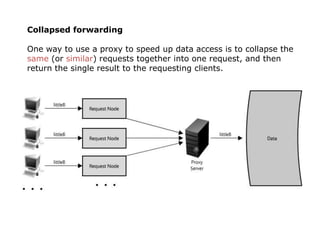

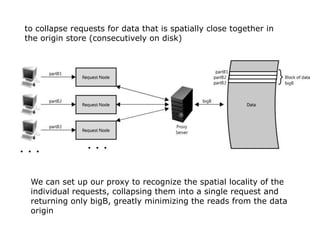

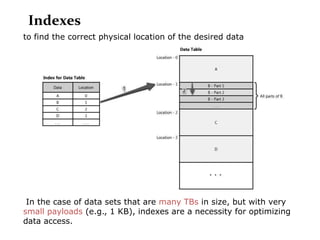

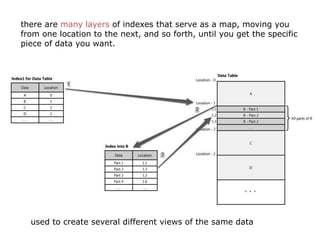

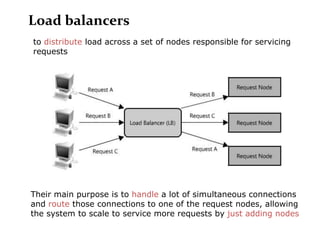

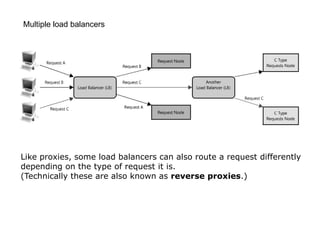

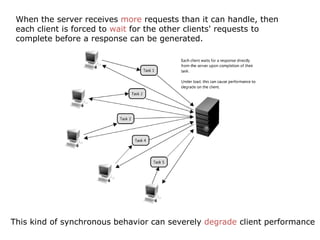

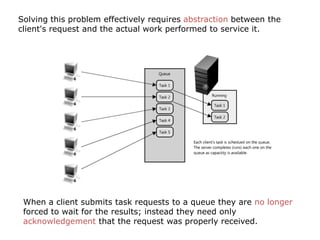

Scalable web architectures distribute resources across multiple servers. Six principles guide their design: availability, performance, reliability, scalability, manageability, and cost. These principles sometimes conflict and require tradeoffs; for example, adding servers to increase capacity also raises cost and makes the system harder to manage. To improve scalability, services can be split into independent units and data distributed across partitions (shards). Caches, proxies, indexes, and load balancers help optimize data access, while queues manage asynchronous operations in distributed systems.
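As a rough illustration of distributing data across shards, the sketch below routes each key to one of several partitions by hashing it. The names `shard_for` and `ShardedStore` are hypothetical, and in-memory dictionaries stand in for what would be separate database servers in a real deployment.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index with a stable hash.

    hashlib is used instead of the built-in hash() because the built-in
    is randomized per process for strings, so it would not route the same
    key to the same shard across restarts.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedStore:
    """Toy key-value store; each dict stands in for one shard (assumption)."""

    def __init__(self, num_shards: int) -> None:
        if num_shards < 1:
            raise ValueError("num_shards must be at least 1")
        self.shards = [{} for _ in range(num_shards)]

    def put(self, key: str, value) -> None:
        # Route the write to the shard that owns this key.
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str, default=None):
        # Reads use the same routing, so they reach the shard that was written.
        return self.shards[shard_for(key, len(self.shards))].get(key, default)


store = ShardedStore(num_shards=4)
for user_id in ("alice", "bob", "carol", "dave"):
    store.put(user_id, {"name": user_id})

print(store.get("carol"))
print([len(shard) for shard in store.shards])
```

Modulo placement is simple but remaps most keys whenever the number of shards changes. Consistent hashing is a common alternative when shards are added or removed often.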