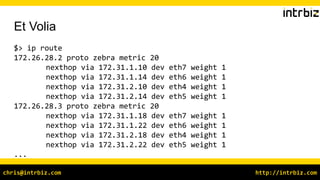

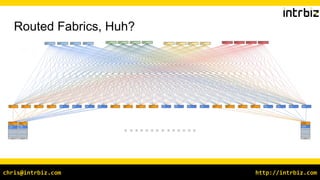

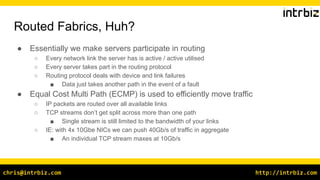

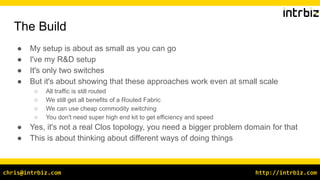

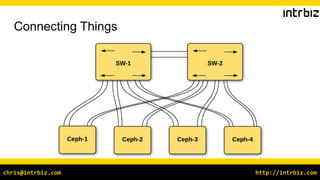

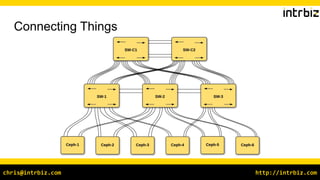

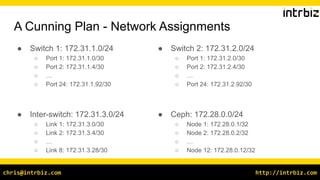

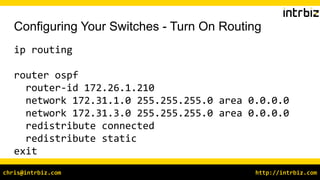

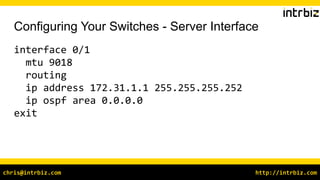

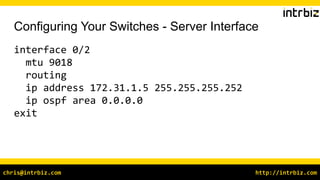

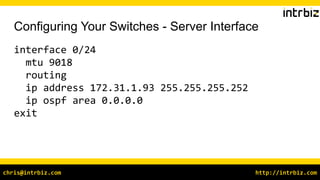

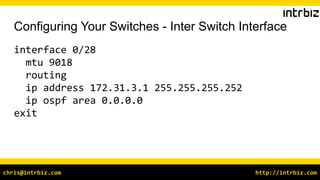

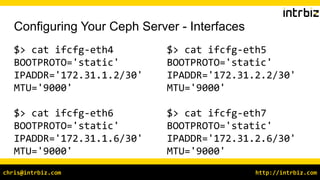

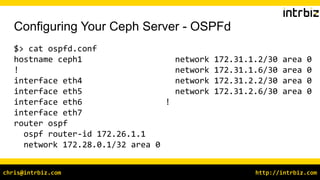

The document discusses using routed fabrics for Ceph networking. In a routed fabric, the servers themselves participate in routing, so every network link actively carries traffic. This also provides redundancy: if one path fails, traffic can take another. The document walks through a small proof-of-concept network of two switches and a set of Ceph servers, showing that routed fabrics are worthwhile even at small scale. It then details how to configure the switches and servers, including network assignments, OSPF routing, and the Ceph configuration.
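The claim that every link is used and that traffic survives a path failure can be made concrete with a toy model of equal-cost multipath (ECMP) forwarding, one common way a routed fabric spreads traffic over all links. The source does not say which load-balancing scheme its fabric uses, so treat ECMP here as an assumption. The sketch hashes each flow's 5-tuple onto the uplinks that are currently up. Packets of one flow stay on one link, different flows spread over both links, and when a link goes down its flows move to the survivor. The helper `pick_next_hop`, the link names, addresses, and ports are all hypothetical, and SHA-256 only stands in for whatever hash a real switch or kernel applies.

```python
import hashlib
from typing import Dict, Tuple

# Hypothetical 5-tuple: (src IP, dst IP, protocol, src port, dst port)
Flow = Tuple[str, str, str, int, int]


def pick_next_hop(flow: Flow, links: Dict[str, bool]) -> str:
    """Pick a next hop for `flow` among the links that are currently up.

    Hashing the flow's 5-tuple keeps every packet of one flow on one link
    (no reordering) while different flows spread across all live links.
    """
    # Sort so the choice does not depend on dict insertion order.
    live = sorted(name for name, up in links.items() if up)
    if not live:
        raise RuntimeError("no route: every link is down")
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return live[int.from_bytes(digest[:8], "big") % len(live)]


# Hypothetical server with one uplink to each of the two switches.
links = {"uplink-switch-a": True, "uplink-switch-b": True}
# Hypothetical flows between two Ceph hosts, varying only the source port.
flows = [("172.28.0.11", "172.28.0.12", "tcp", 40000 + i, 6800) for i in range(100)]

print("links in use, both up:", sorted({pick_next_hop(f, links) for f in flows}))

links["uplink-switch-a"] = False  # simulate losing one link or switch
print("links in use, one down:", sorted({pick_next_hop(f, links) for f in flows}))
```

With both links up the flows spread across both uplinks; after `uplink-switch-a` is marked down, every flow is carried by `uplink-switch-b`. In a real network the failover is not a flag flip: the routing protocol (OSPF in this setup) must first detect the failure and withdraw the route before traffic shifts.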

![Configuring Your Ceph Server - Ceph](https://image.slidesharecdn.com/chrisellis-routedfabricsforceph-191105152634/85/Routed-Fabrics-For-Ceph-21-320.jpg)

Configuring Your Ceph Server - Ceph (http://intrbiz.com, chris@intrbiz.com)

```
$> cat ceph.conf
[global]
public_network = 172.28.0.0/24
```
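When no explicit address is configured, Ceph daemons use `public_network` to decide which of a host's addresses to bind to: they pick an address that falls inside that subnet. The Python sketch below mimics that selection with the standard `ipaddress` module, using the `172.28.0.0/24` value from the slide. It assumes a hypothetical routed-fabric host whose Ceph address is a `/32` on a loopback-style interface, reachable over either switch, while its two uplinks sit on separate point-to-point `/31` subnets. The source does not spell out this layout, and `pick_public_addr` is an illustrative helper, not Ceph's actual code.

```python
import ipaddress
from typing import Iterable

# Value taken from the ceph.conf on the slide.
PUBLIC_NETWORK = ipaddress.ip_network("172.28.0.0/24")


def pick_public_addr(host_addrs: Iterable[str], network=PUBLIC_NETWORK):
    """Return the first host address that lies inside `network`, or None.

    Addresses are given in CIDR form (e.g. "10.0.1.1/31"); only the address
    part is compared, so a /32 address can match a /24 public_network.
    """
    for cidr in host_addrs:
        ip = ipaddress.ip_interface(cidr).ip
        if ip in network:  # False (not an error) when IPv4 and IPv6 are mixed
            return ip
    return None


# Hypothetical host: two point-to-point uplinks plus a /32 service address.
host_addrs = ["10.0.1.1/31", "10.0.2.1/31", "172.28.0.11/32"]
print(pick_public_addr(host_addrs))  # prints 172.28.0.11
```

Keeping the Ceph address off the physical uplinks is the design point: because it is not tied to either link, it stays reachable through whichever switch still has a route to it.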