This document discusses challenges and techniques in web media recommendation systems. It covers:

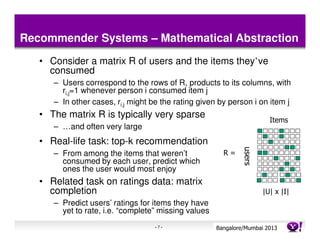

1) Recommender systems are widely used on shopping, content, streaming and social media sites to suggest items users may like. Collaborative filtering and content-based approaches are two main techniques.

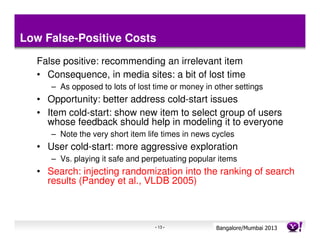

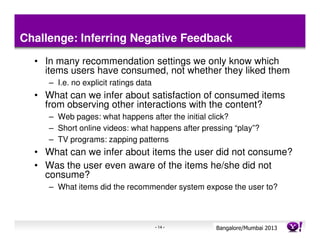

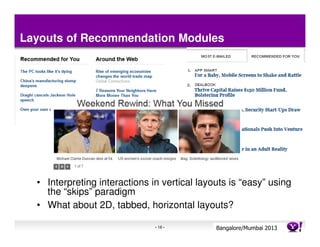

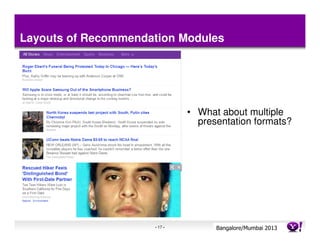

2) Challenges include cold starts for new users and items, inferring negative feedback, accounting for presentation bias, handling different layouts, and balancing personalization, popularity and context.

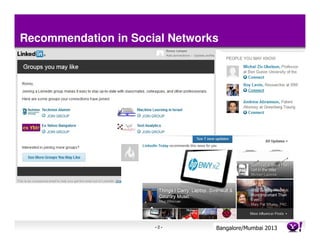

3) Incremental updating of recommendations and recommending sets or sequences of items rather than just individual items are also challenges addressed. The role of social networks in recommendations is discussed.

![Collaborative Filtering – Neighborhood Models

• Compute the similarity of items [users] to each other

– Items are considered similar when users tend to rate them

similarly or to co-consume them

– Users are considered similar when they tend to co-consume

items or rate items similarly

• Recommend to a user:

– Items similar to items he/she has already consumed [rated

highly]

– Items consumed [rated highly] by similar users

• Key questions:

– How exactly to define pair-wise similarities?

– How to combine them into quality recommendations?

-9- Bangalore/MumbaiConfidential

Yahoo! 2013](https://image.slidesharecdn.com/ronnylempelyahooindiabigthinkerapril2013-130409023706-phpapp01/85/Ronny-lempelyahooindiabigthinkerapril2013-10-320.jpg)

![Challenge: Cold Start Problems

• Good recommendations require observed data on the user

being recommended to [the items being recommended]

– What did the user consume/enjoy before?

– Which users consumed/enjoyed this item before?

• User cold start: what happens when a new user arrives to a

system?

– How can the system make a good “first impression”?

• Item cold start: how do we recommend newly arrived items

with little historic consumption?

• In certain settings, items are

ephemeral – a significant portion of

their lifetime is spent in cold-start state

– E.g. news recommendation

- 12 - Bangalore/MumbaiConfidential

Yahoo! 2013](https://image.slidesharecdn.com/ronnylempelyahooindiabigthinkerapril2013-130409023706-phpapp01/85/Ronny-lempelyahooindiabigthinkerapril2013-13-320.jpg)