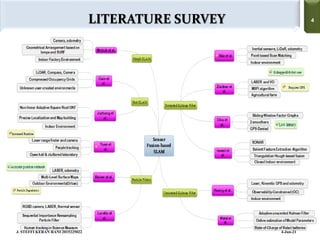

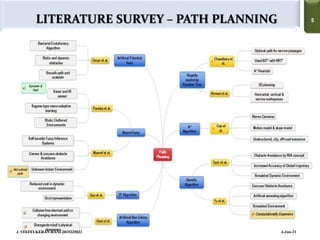

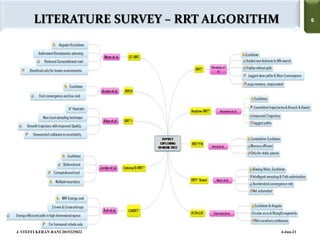

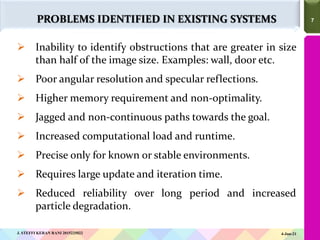

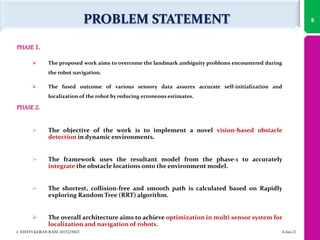

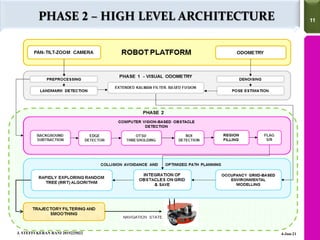

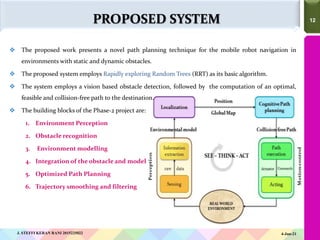

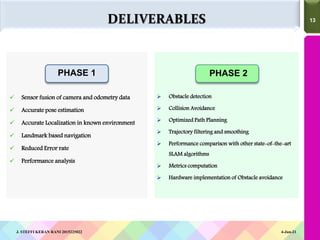

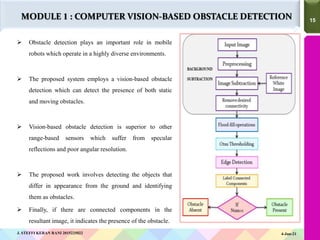

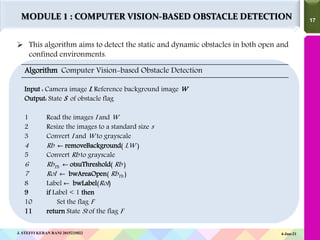

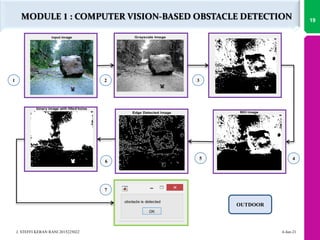

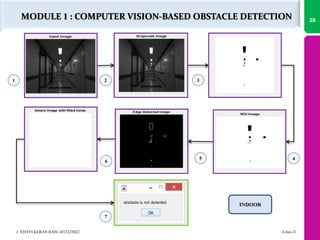

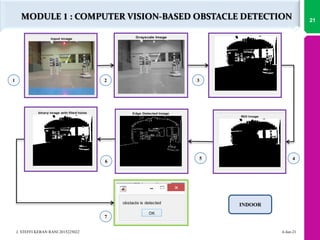

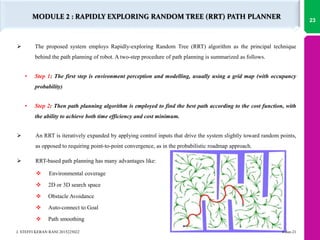

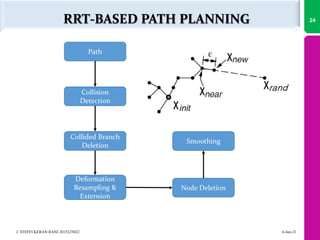

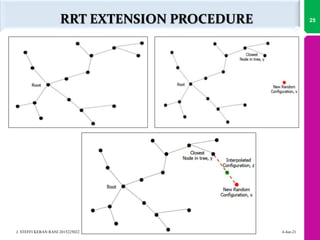

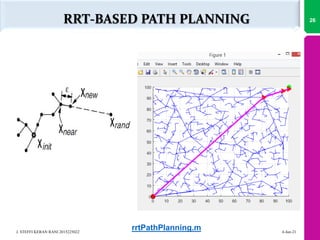

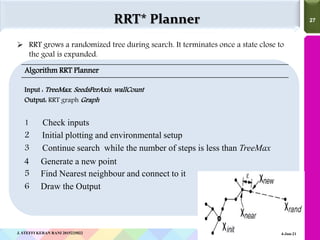

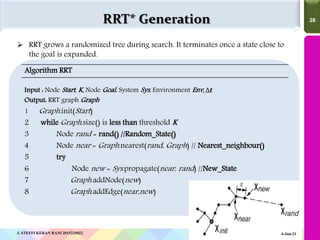

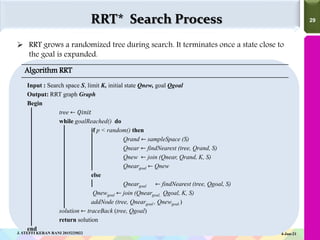

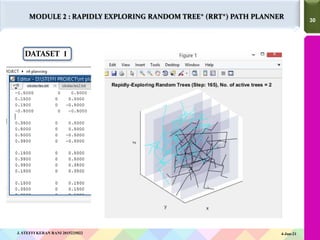

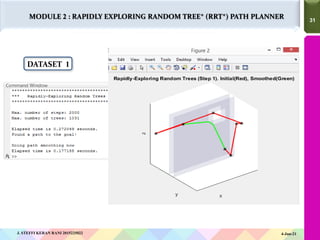

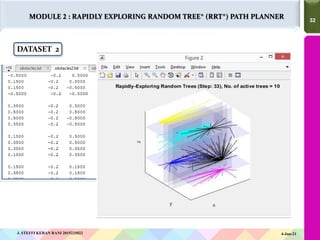

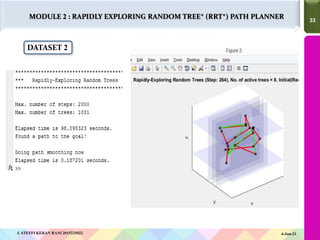

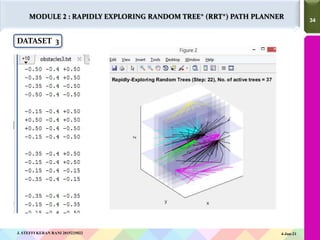

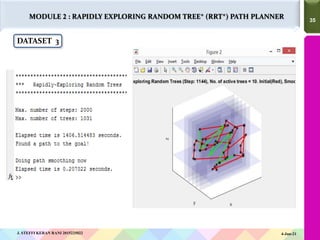

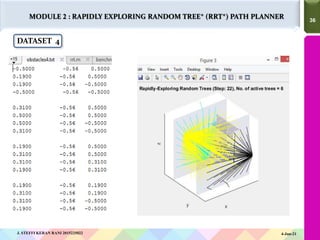

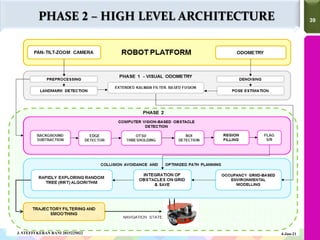

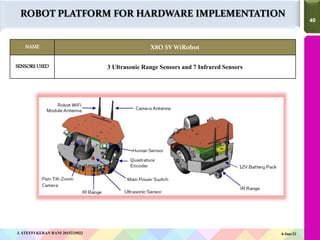

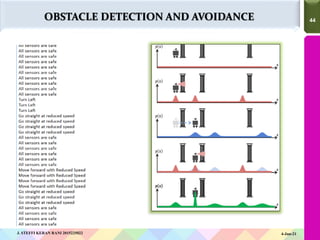

The document outlines a proposed algorithm for mobile robot navigation in GNSS-denied environments, focusing on detecting and avoiding obstacles using a multi-sensor fusion approach. It highlights the use of a rapidly-exploring random tree (RRT) algorithm for optimal path planning and addresses known issues in existing systems, such as landmark ambiguity and poor angular resolution. The proposed framework aims to enhance autonomous navigation by integrating various sensory data for accurate localization and collision-free pathfinding.
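To make the path-planning step concrete, here is a minimal sketch of a basic RRT in a 2D workspace. It is an illustration under stated assumptions, not the framework's actual planner: the circular obstacle model, the square workspace bounds, and every name and parameter (`rrt`, `collision_free`, `step`, `goal_tol`, `goal_bias`) are hypothetical and do not come from the slides. Note also that a plain RRT returns a feasible collision-free path rather than a provably shortest one; variants such as RRT* are usually used when optimality matters.

```python
import math
import random


def collision_free(p, q, obstacles, samples=10):
    """Return True if the segment p->q avoids all circular obstacles (cx, cy, r).

    The segment is checked at evenly spaced sample points, which is an
    approximation that is adequate when the step size is small.
    """
    for i in range(samples + 1):
        t = i / samples
        x = p[0] + t * (q[0] - p[0])
        y = p[1] + t * (q[1] - p[1])
        for cx, cy, r in obstacles:
            if math.hypot(x - cx, y - cy) <= r:
                return False
    return True


def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tol=0.5, goal_bias=0.1, max_iters=5000, seed=0):
    """Grow a tree from start; return a list of points to the goal, or None."""
    rng = random.Random(seed)      # seeded for reproducible runs
    nodes = [start]                # tree vertices
    parent = {0: None}             # index of each vertex's parent

    for _ in range(max_iters):
        # 1. Sample a target point, occasionally the goal itself (goal bias).
        if rng.random() < goal_bias:
            target = goal
        else:
            target = (rng.uniform(*bounds), rng.uniform(*bounds))

        # 2. Find the tree vertex nearest to the sample.
        i_near = min(range(len(nodes)),
                     key=lambda i: math.dist(nodes[i], target))
        near = nodes[i_near]
        d = math.dist(near, target)
        if d == 0.0:
            continue

        # 3. Steer from the nearest vertex toward the sample by at most `step`.
        scale = min(step, d) / d
        new = (near[0] + scale * (target[0] - near[0]),
               near[1] + scale * (target[1] - near[1]))

        # 4. Keep the new vertex only if the connecting edge is collision-free.
        if not collision_free(near, new, obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near

        # 5. Stop once the tree reaches the goal region; walk back to the root.
        if math.dist(new, goal) <= goal_tol:
            path = []
            i = len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


if __name__ == "__main__":
    # Hypothetical scene: one circular obstacle between start and goal.
    path = rrt((1.0, 1.0), (9.0, 9.0), obstacles=[(5.0, 5.0, 1.5)])
    print("no path found" if path is None else f"path with {len(path)} waypoints")
```

In a full system, the obstacle list would come from the fused sensor data and the start pose from the localization estimate; here both are hard-coded for illustration.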