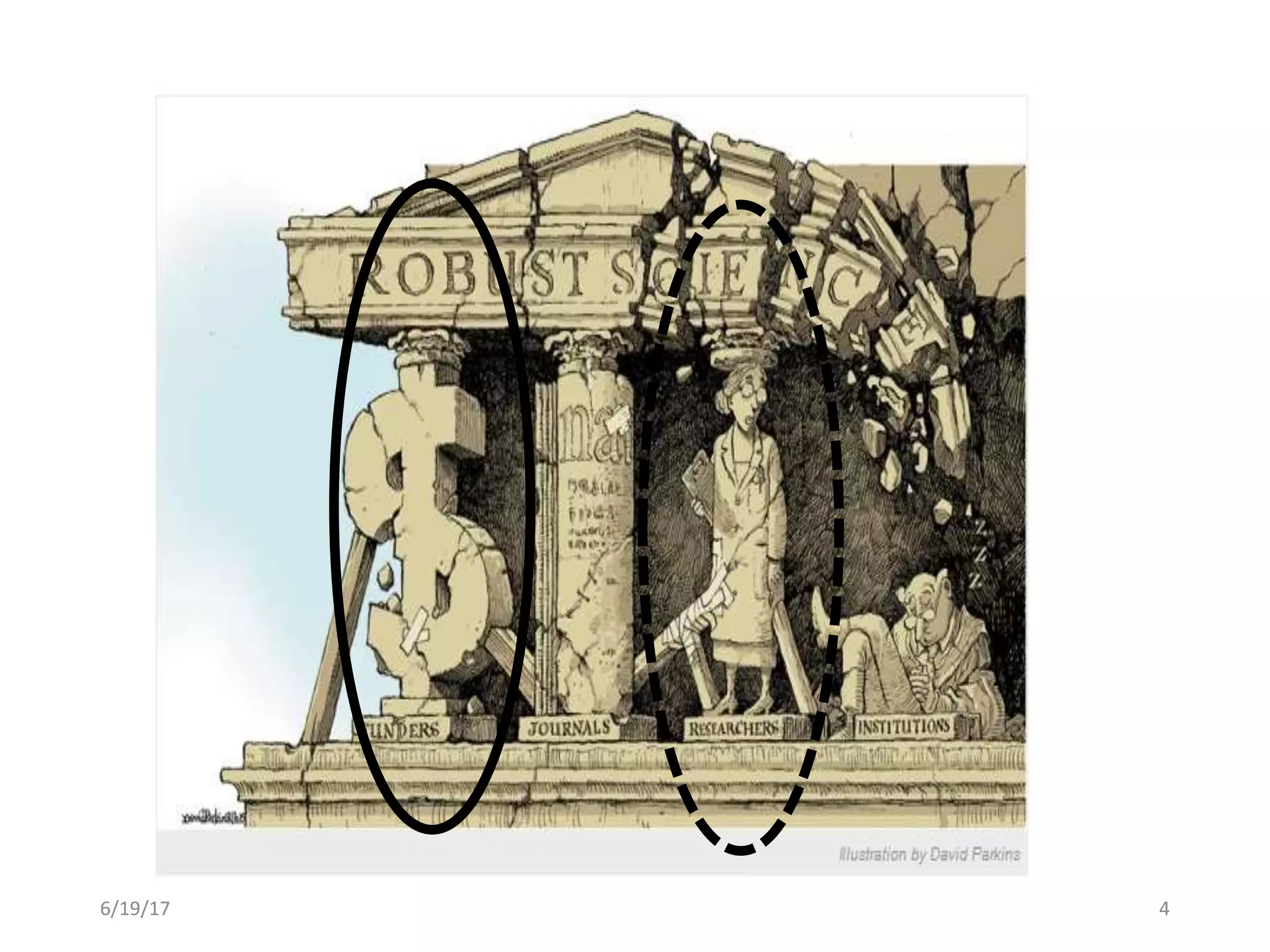

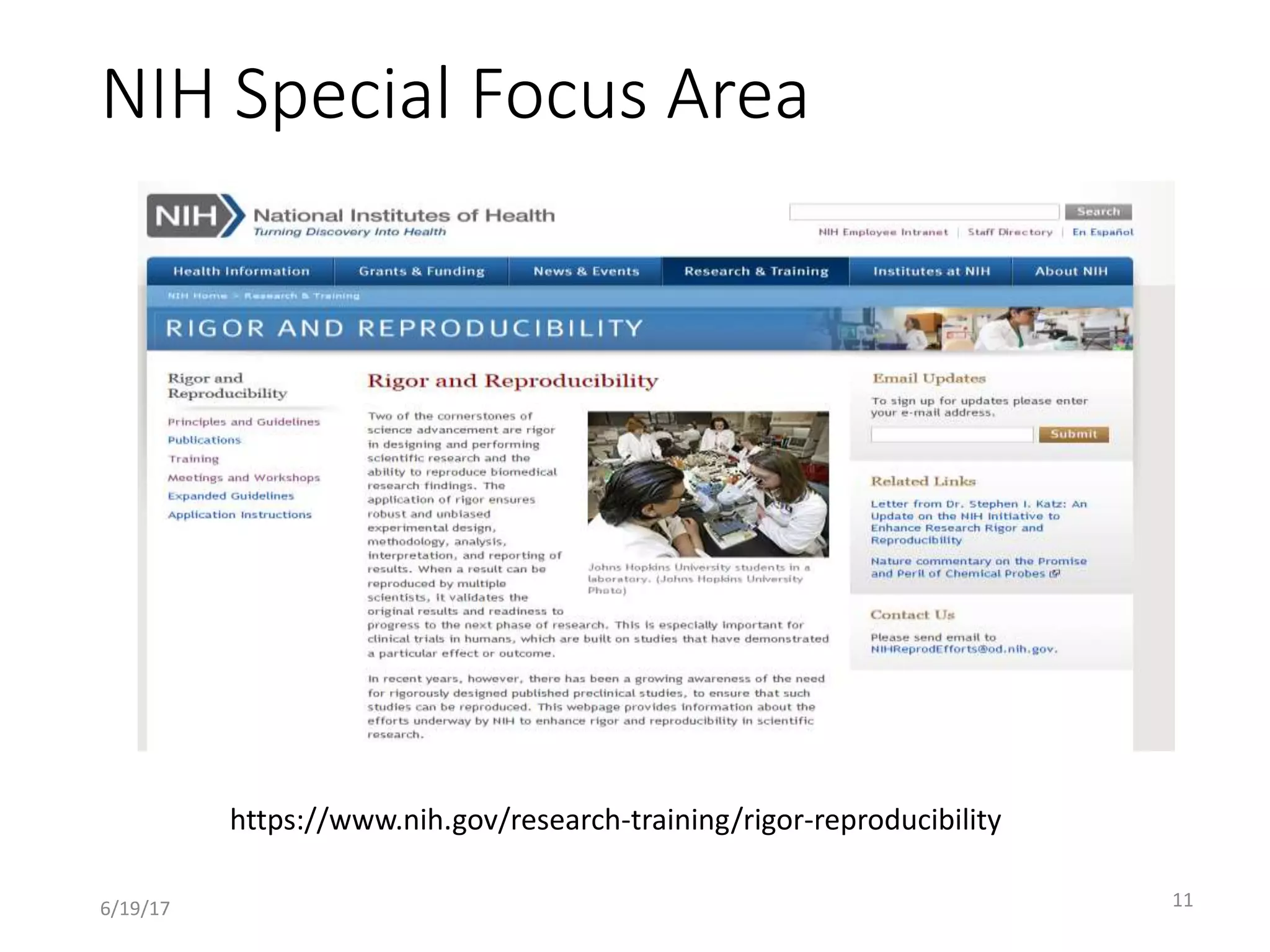

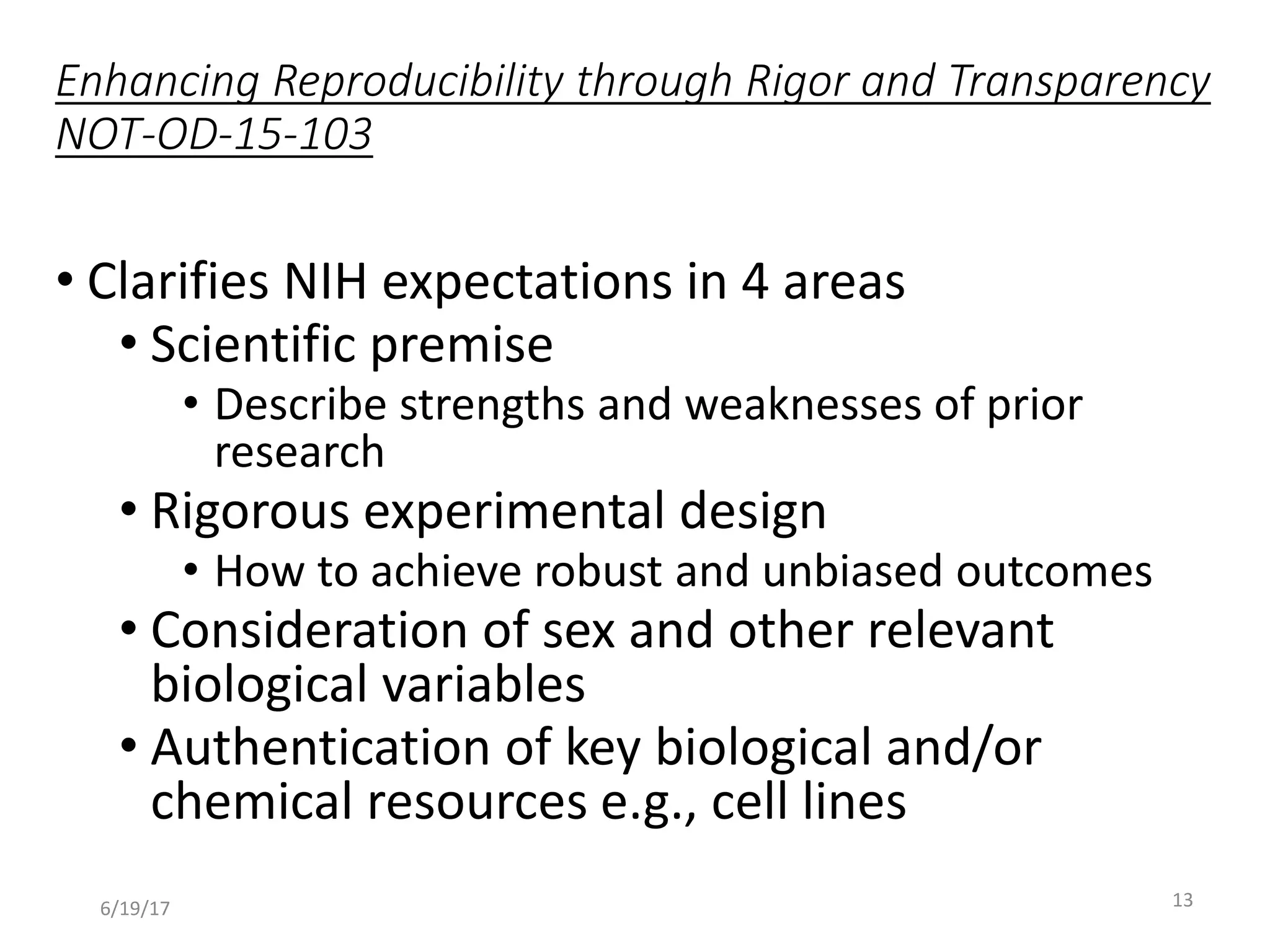

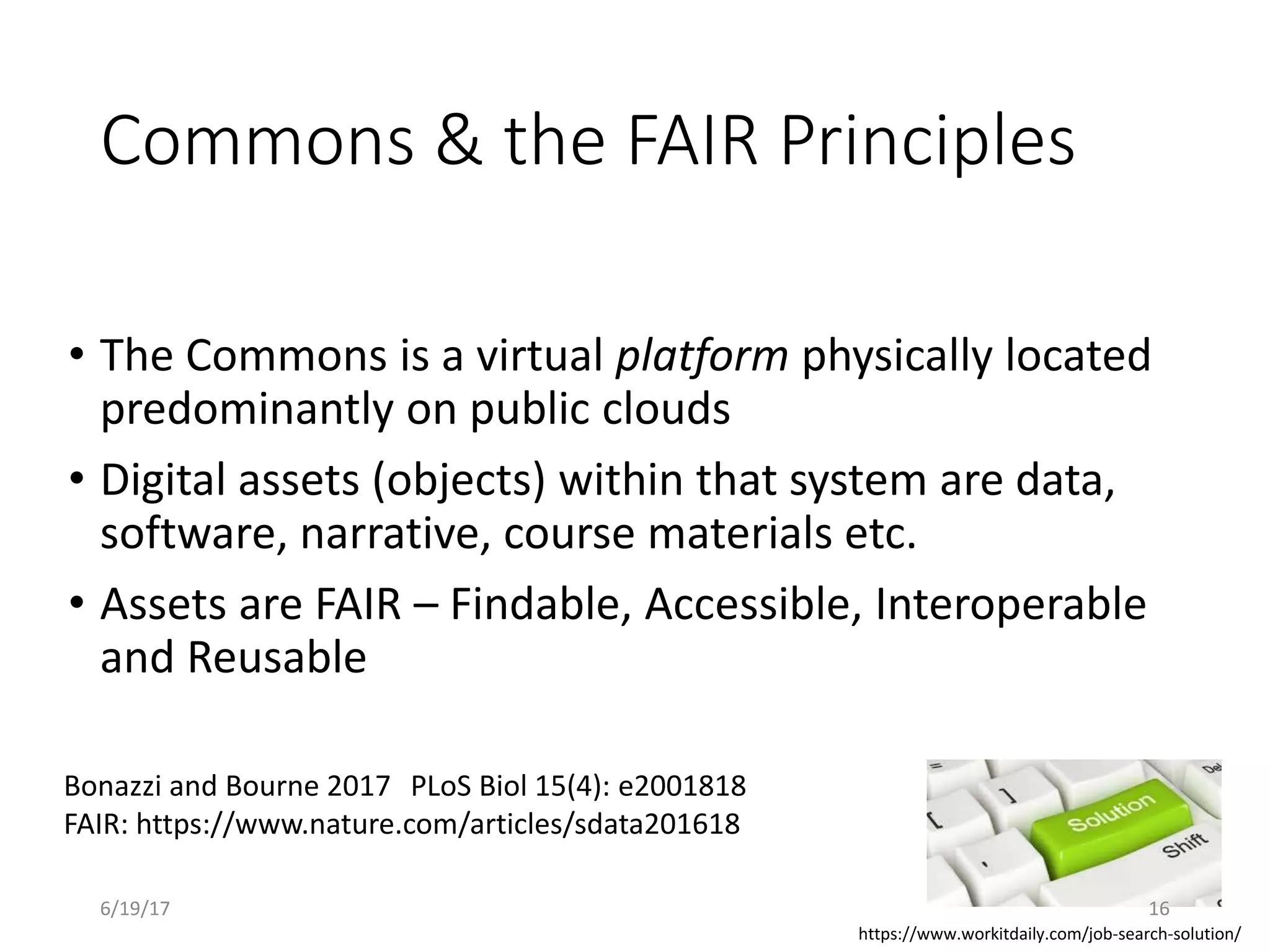

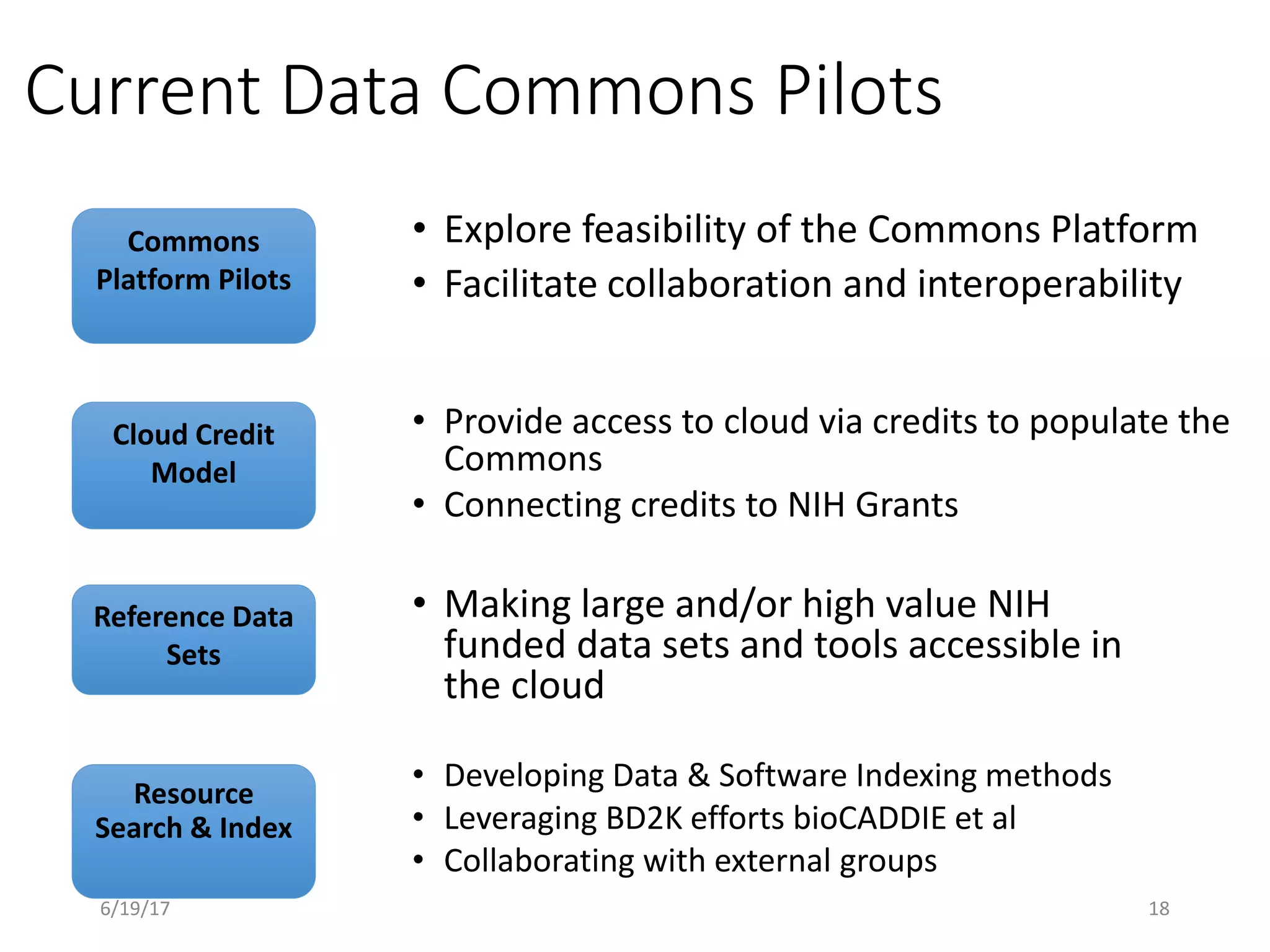

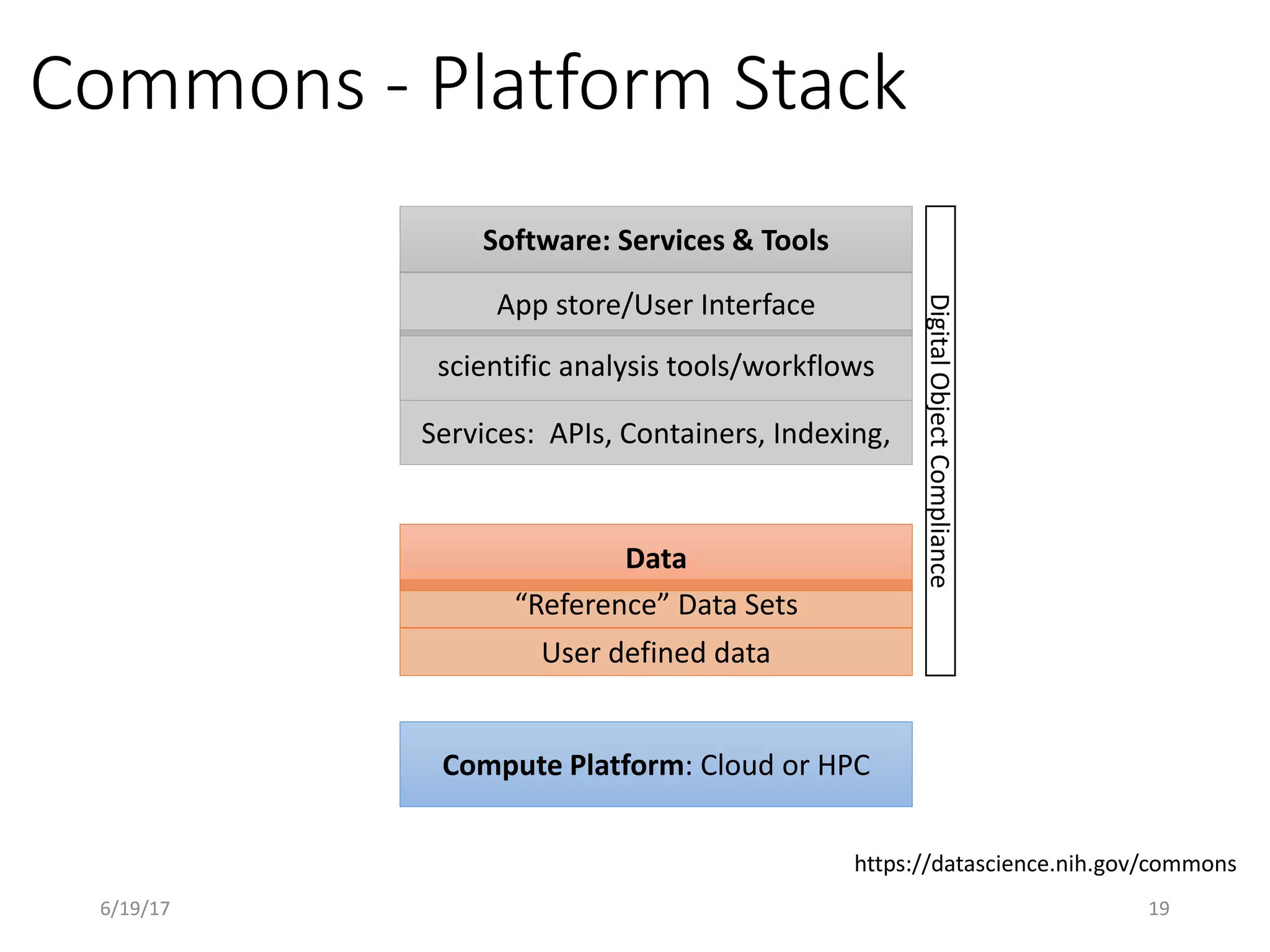

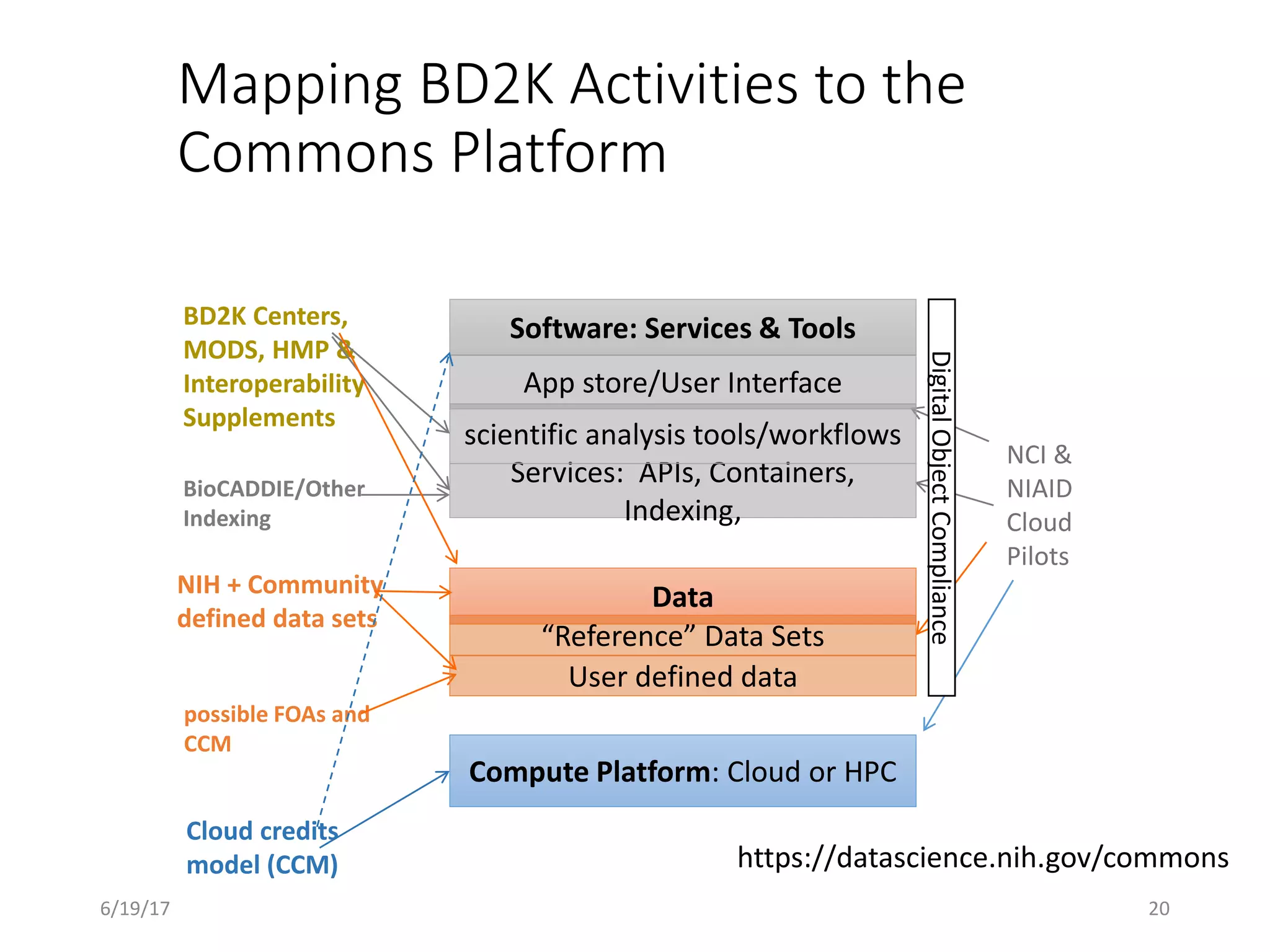

The document discusses the NIH's efforts to improve reproducibility in biomedical research. It describes how the NIH is working to encourage researchers to make their work reproducible through funding policies, tools, and a proposed "Commons" platform. The Commons would be a virtual platform hosted in public clouds that would make large NIH-funded datasets and tools FAIR (Findable, Accessible, Interoperable, and Reusable). Several pilots are exploring how the Commons approach can facilitate collaboration and reproducibility. The document raises open questions about how to evaluate the success of these pilots and how to balance competing metrics in a potential larger-scale implementation of the Commons.