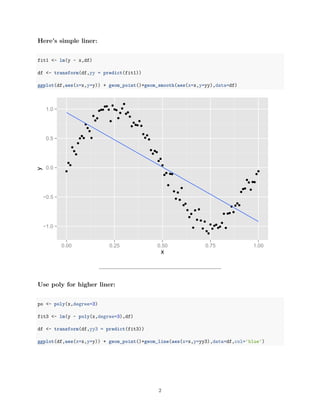

1) The document performs regression analysis on simulated sine wave data to compare several models: simple linear regression, polynomial regression of degree 3 and degree 26, and regularized regression with L1 and L2 penalties, where lambda is chosen by cross-validation.

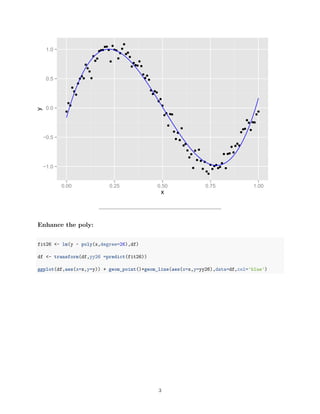

2) Cross-validation compares train and test RMSE for polynomial models of degrees 1 to 10, showing that a higher degree does not necessarily yield better test performance.

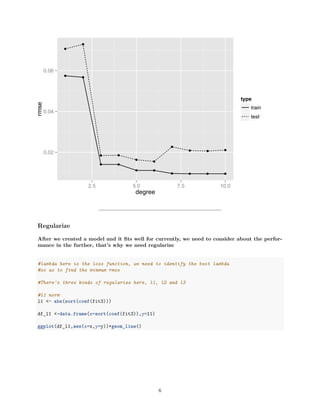

3) Regularization with the L1 norm and the L2 norm is explored, with lambda selected by cross-validation; the best lambda found is 0.06, the value that minimizes test RMSE.

![Figure: y plotted against x (x axis 0.00 to 1.00, y axis −1.0 to 1.0).

A higher-degree polynomial does not guarantee better performance, so we use RMSE to cross-validate the model:

#Create rmse function:

rmse <- function(y,ry)

{

return(sqrt(sum((y-ry)^2)/length(ry)))

}

#Create split function for cross-validation

split <- function(df,rate)

{

n <- length(df[,1])

index <- sample(1:n,round(rate * n))

train <- df[index,]

test <- df[-index,]

df <- list(train=train,test=test,data=df)

4](https://image.slidesharecdn.com/815458ba-1f14-4bd3-a342-118dc9989af5-170223160137/85/Regression_Sample-4-320.jpg)
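
The two helpers on this slide can be tried on their own. The sketch below is a minimal, self-contained version, assuming the data are noisy samples of `sin(2 * pi * x)` on [0, 1] (the slides do not show how the data were simulated). The names `rmse_vec` and `split_holdout` are hypothetical, chosen so that base R's `split` is not masked. RMSE is taken here as the square root of the mean squared residual.

```r
# Assumption: simulated data are noisy samples of a sine wave on [0, 1].
set.seed(1)
n <- 100
df <- data.frame(x = seq(0, 1, length.out = n))
df$y <- sin(2 * pi * df$x) + rnorm(n, sd = 0.1)

# Root mean squared error: square root of the mean squared residual.
rmse_vec <- function(y, y_hat) {
  sqrt(mean((y - y_hat)^2))
}

# Random holdout split; `rate` is the fraction of rows used for training.
split_holdout <- function(df, rate) {
  n <- nrow(df)
  index <- sample(seq_len(n), round(rate * n))
  list(train = df[index, ], test = df[-index, ], data = df)
}

# Fit a straight line on the training half and score both halves.
parts <- split_holdout(df, 0.5)
fit <- lm(y ~ x, data = parts$train)
train_rmse <- rmse_vec(parts$train$y, predict(fit, newdata = parts$train))
test_rmse <- rmse_vec(parts$test$y, predict(fit, newdata = parts$test))
```

Passing plain numeric vectors (`parts$train$y`) rather than one-column data frames (`parts$train['y']`) keeps `mean` well defined.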

![return(df)

}

#Create function to compute train and test RMSE for each polynomial degree

performance_Gen <- function(df,n){

performance <- data.frame()

for(index in 1:n){

fit <- lm(y ~ poly(x,degree=index),data = df$train)

performance <- rbind(performance,data.frame(degree =index,type='train',rmse=rmse(df$train['y'],predic

performance <- rbind(performance,data.frame(degree = index,type='test',rmse=rmse(df$test['y'],predict

}

return(performance)

}

Plot train and test RMSE against polynomial degree:

df_split <- split(df,0.5)

performance<- performance_Gen(df_split,10)

ggplot(performance,aes(x=degree,y=rmse,linetype=type))+geom_point()+geom_line()

5](https://image.slidesharecdn.com/815458ba-1f14-4bd3-a342-118dc9989af5-170223160137/85/Regression_Sample-5-320.jpg)
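
Because the two `rbind` lines on this slide are cut off, the following is only a sketch of how such a degree sweep could be completed, assuming the missing part is a call to `predict` on the train and test frames. The simulated data and the names `degree_sweep` and `rmse_vec` are assumptions for illustration, not the author's code.

```r
# Assumption: noisy sine data on [0, 1]; the slides do not show the simulation.
set.seed(42)
n <- 200
df <- data.frame(x = runif(n))
df$y <- sin(2 * pi * df$x) + rnorm(n, sd = 0.1)

# 50/50 random holdout split.
index <- sample(seq_len(n), n / 2)
train <- df[index, ]
test <- df[-index, ]

rmse_vec <- function(y, y_hat) sqrt(mean((y - y_hat)^2))

# Fit one polynomial per degree and record train and test RMSE.
degree_sweep <- function(train, test, max_degree) {
  rows <- lapply(seq_len(max_degree), function(d) {
    fit <- lm(y ~ poly(x, degree = d), data = train)
    data.frame(
      degree = d,
      type = c("train", "test"),
      rmse = c(rmse_vec(train$y, predict(fit, newdata = train)),
               rmse_vec(test$y, predict(fit, newdata = test)))
    )
  })
  do.call(rbind, rows)
}

performance <- degree_sweep(train, test, 10)
```

The resulting `performance` frame has the `degree`, `type`, and `rmse` columns that the slide's `ggplot` call expects. Training RMSE cannot rise as the degree grows, since the models are nested, so the test curve is the one that shows whether extra degrees help.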

![Figure "L2norm": y plotted against x (x axis −5.0 to 2.5, y axis −200 to 200).

#Use cross-validation to find the best lambda

library(glmnet)

getperform <- function(df){

#Build the polynomial basis on the training x only, then reuse it for the test x

basis <- poly(df$train$x,degree=10)

fit <- glmnet(basis,df$train$y)

test_x <- predict(basis,df$test$x)

lambdas <- fit$lambda

performance <- data.frame()

for(lambda in lambdas){

performance <- rbind(performance,data.frame(

lambda=lambda,

rmse=rmse(df$test$y,predict(fit,test_x,s=lambda))))

}

return(performance)

}

8](https://image.slidesharecdn.com/815458ba-1f14-4bd3-a342-118dc9989af5-170223160137/85/Regression_Sample-8-320.jpg)
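
A sketch of the lambda search, assuming the `glmnet` package is installed and again using simulated noisy sine data that the slides do not show. It departs from the slide in two labeled ways: the polynomial basis is built on the training x and reused for the test x through `predict`, and all lambdas are scored in one `predict` call instead of a loop. The helper `as_plain` is hypothetical. Note that `glmnet` defaults to `alpha = 1` (the L1, or lasso, penalty); `alpha = 0` gives the L2, or ridge, penalty.

```r
library(glmnet)

# Assumption: noisy sine data on [0, 1]; not taken from the slides.
set.seed(7)
n <- 200
x <- runif(n)
y <- sin(2 * pi * x) + rnorm(n, sd = 0.1)
index <- sample(seq_len(n), n / 2)

# Hypothetical helper: drop the "poly" class and attributes, keep the numbers.
as_plain <- function(m) matrix(as.numeric(m), nrow = nrow(m))

# Build the degree-10 basis on the training x, then map the test x onto it.
basis <- poly(x[index], degree = 10)
x_train <- as_plain(basis)
x_test <- as_plain(predict(basis, x[-index]))

fit <- glmnet(x_train, y[index])      # alpha = 1 (lasso) by default
pred <- predict(fit, newx = x_test)   # one column of predictions per lambda
test_rmse <- sqrt(colMeans((pred - y[-index])^2))

performance <- data.frame(lambda = fit$lambda, rmse = test_rmse)
best_lambda <- performance$lambda[which.min(performance$rmse)]
```

The value of `best_lambda` depends on the seed and on the simulated data, so it need not match the 0.06 reported in the summary. If a single holdout split proves too noisy, `cv.glmnet` performs k-fold selection of lambda.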