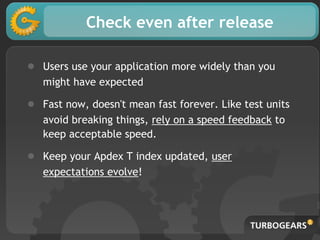

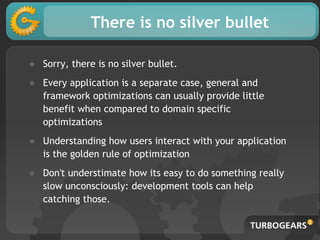

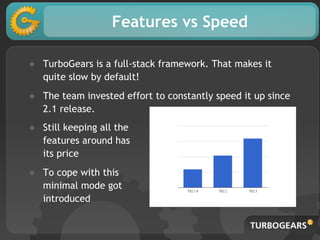

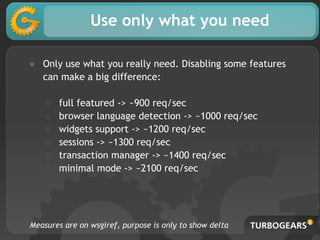

TurboGears is a full-stack Python web framework, and its many features can slow an application down when they are all left enabled. The document provides tips for optimizing TurboGears applications: enable only the features you need, avoid serving static files through the application, use caching strategically, and offload work to asynchronous tasks. It also stresses that understanding how users interact with an application is key to optimizing it.

![Looking at the code

class RootController(BaseController):
    @expose('myproj.templates.movie')
    @expose('json')
    @validate({'movie': SQLAEntityConverter(model.Movie)})
    def movie(self, movie, **kw):
        return dict(movie=movie, user=request.identity and request.identity['user'])

Serving /movie/3 as a webpage and /movie/3.json as a

json encoded response](https://image.slidesharecdn.com/pygrunn2013-highperformancewebapplicationswithturbogears-130510051022-phpapp01/85/PyGrunn2013-High-Performance-Web-Applications-with-TurboGears-6-320.jpg)
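The idea behind the two stacked `@expose` lines, one controller method returning a plain dict that is rendered either through a template or as JSON, can be illustrated without TurboGears. The following is a minimal standard-library sketch, not TurboGears' own dispatch code; `MOVIES`, `HTML_TEMPLATE`, and `render` are hypothetical names introduced only for this illustration.

```python
import json
from string import Template

# Hypothetical in-memory stand-in for the model.Movie table.
MOVIES = {3: {"id": 3, "title": "Example Movie"}}

# Hypothetical stand-in for the 'myproj.templates.movie' template.
HTML_TEMPLATE = Template("<h1>$title</h1>")


def movie(movie_id):
    """Controller-like function: returns a plain dict, not a response."""
    return {"movie": MOVIES[movie_id]}


def render(path):
    """Choose the renderer from the URL extension (assumed behaviour)."""
    is_json = path.endswith(".json")
    raw_id = path.rsplit("/", 1)[-1]
    if is_json:
        raw_id = raw_id[: -len(".json")]
    data = movie(int(raw_id))
    if is_json:
        return "application/json", json.dumps(data)
    return "text/html", HTML_TEMPLATE.substitute(title=data["movie"]["title"])
```

Calling `render("/movie/3")` yields an HTML string, while `render("/movie/3.json")` yields the same data serialized as JSON; the controller function itself is unaware of the output format.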

![Avoid serving statics

Cascading files serving is a common pattern:

static_app = StaticURLParser('statics')

app = Cascade([static_app, app])

What is really happening is a lot:

○ path gets parsed to avoid ../../../etc/passwd

○ path gets checked on file system

○ a 404 response is generated

○ The 404 response is caught by the Cascade

middleware, which then forwards the request to your app](https://image.slidesharecdn.com/pygrunn2013-highperformancewebapplicationswithturbogears-130510051022-phpapp01/85/PyGrunn2013-High-Performance-Web-Applications-with-TurboGears-10-320.jpg)
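The steps listed on this slide can be made concrete with a small WSGI sketch. It is a simplified stand-in written with the standard library only, not the actual code of `StaticURLParser` or `Cascade`; the function names `static_app`, `main_app`, and `cascade` are assumptions made for illustration.

```python
import os


def static_app(environ, start_response, root="statics"):
    """Simplified static handler: sanitise the path, hit the disk, maybe 404."""
    rel = environ.get("PATH_INFO", "").lstrip("/")
    full = os.path.abspath(os.path.normpath(os.path.join(root, rel)))
    root_abs = os.path.abspath(root)
    # Reject traversal such as ../../../etc/passwd, then check the file system.
    inside_root = full.startswith(root_abs + os.sep)
    if not inside_root or not os.path.isfile(full):
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not Found"]
    with open(full, "rb") as handle:
        body = handle.read()
    start_response("200 OK", [("Content-Type", "application/octet-stream")])
    return [body]


def main_app(environ, start_response):
    """Stand-in for the real web application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"dynamic page"]


def cascade(apps):
    """Try each app in order; fall through to the next one on a 404."""
    def middleware(environ, start_response):
        for app in apps[:-1]:
            captured = {}

            def capture(status, headers, exc_info=None):
                captured["status"] = status
                captured["headers"] = headers

            body = app(environ, capture)
            if not captured["status"].startswith("404"):
                start_response(captured["status"], captured["headers"])
                return body
        # Every earlier app answered 404, so the last app handles the request.
        return apps[-1](environ, start_response)
    return middleware


app = cascade([static_app, main_app])
```

In this sketch every dynamic request such as `/movie/3` first pays for path normalisation, a file system lookup, and a discarded 404 response before it reaches `main_app`, which is the per-request overhead the slide describes.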