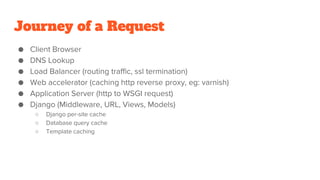

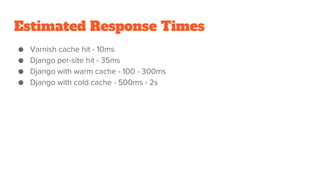

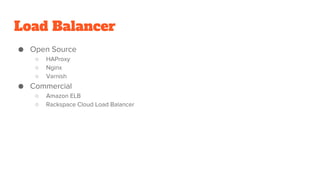

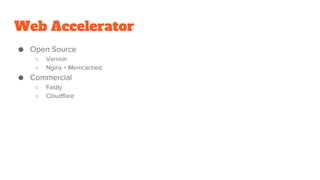

The document outlines best practices and optimizations for building high-performance Django applications, covering each layer from the client request down to the database. It emphasizes caching, query optimization, template management, and monitoring tools as ways to improve response times and overall efficiency. It also stresses continuous integration, deployment strategy, and keeping libraries up to date to avoid performance regressions and security vulnerabilities.

Using `prefetch_related`:

```python
# Assumes a Post model with a `slug` field and a ManyToManyField `tags`
# pointing to a Tag model that has an `is_active` BooleanField.
post = Post.objects.prefetch_related('tags').get(slug='this-post')

# No extra queries: .all() reuses the prefetched tags
all_tags = post.tags.all()

# Triggers an additional query: .filter() bypasses the prefetch cache
active_tags = post.tags.filter(is_active=True)

# The additional query can be avoided by filtering in memory instead
active_tags = [tag for tag in all_tags if tag.is_active]
```

![Slide 15: prefetch_related](https://image.slidesharecdn.com/journeythroughhighperformancedjangoapplication-190105064709/85/Journey-through-high-performance-django-application-15-320.jpg)
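Django's documentation describes `prefetch_related` as doing a separate lookup for each relationship and performing the "join" in Python. The sketch below imitates that pattern with the standard-library `sqlite3` module instead of the ORM, so it runs without a Django project. The schema, the table names, and the `fetch_posts_with_tags` helper are all hypothetical. A trace callback counts the SQL statements, which shows that filtering the prefetched list in memory adds no extra query.

```python
import sqlite3

# Hypothetical schema mirroring the slide: post, tag, and a post_tags join table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE post (id INTEGER PRIMARY KEY, slug TEXT UNIQUE);
CREATE TABLE tag (id INTEGER PRIMARY KEY, name TEXT, is_active INTEGER);
CREATE TABLE post_tags (post_id INTEGER, tag_id INTEGER);
INSERT INTO post VALUES (1, 'this-post'), (2, 'other-post');
INSERT INTO tag VALUES (1, 'django', 1), (2, 'legacy', 0), (3, 'perf', 1);
INSERT INTO post_tags VALUES (1, 1), (1, 2), (1, 3), (2, 1), (2, 3);
""")

# Record every SQL statement executed from this point on.
queries = []
conn.set_trace_callback(queries.append)


def fetch_posts_with_tags(slugs):
    """Load posts and all their tags in two queries, mimicking prefetch_related('tags')."""
    marks = ",".join("?" * len(slugs))
    posts = conn.execute(
        f"SELECT id, slug FROM post WHERE slug IN ({marks})", slugs
    ).fetchall()

    # One query fetches the tags for every post at once (no N+1 queries).
    post_ids = [post_id for post_id, _ in posts]
    id_marks = ",".join("?" * len(post_ids))
    rows = conn.execute(
        "SELECT pt.post_id, t.name, t.is_active FROM post_tags pt "
        f"JOIN tag t ON t.id = pt.tag_id WHERE pt.post_id IN ({id_marks}) "
        "ORDER BY t.id",
        post_ids,
    ).fetchall()

    # The "join" happens in Python: group tag rows under their post.
    tags_by_post = {post_id: [] for post_id in post_ids}
    for post_id, name, is_active in rows:
        tags_by_post[post_id].append({"name": name, "is_active": bool(is_active)})
    return {slug: tags_by_post[post_id] for post_id, slug in posts}


result = fetch_posts_with_tags(["this-post", "other-post"])

# Filtering the prefetched list in memory issues no further SQL.
active = [tag["name"] for tag in result["this-post"] if tag["is_active"]]
```

With the real ORM, `Post.objects.prefetch_related('tags')` produces the same two-query shape. When only a subset of related rows is needed, Django's `Prefetch('tags', queryset=Tag.objects.filter(is_active=True), to_attr='active_tags')` moves the filter into the prefetch query itself.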

Too many results:

- Limit queries with `queryset[:n]`, where `n` is the maximum number of results to return.
- Use pagination where appropriate.

![Slide 19: Too many results](https://image.slidesharecdn.com/journeythroughhighperformancedjangoapplication-190105064709/85/Journey-through-high-performance-django-application-19-320.jpg)
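Slicing a Django queryset translates into SQL `LIMIT`/`OFFSET`, and `django.core.paginator.Paginator` builds its pages on top of that. The following is a minimal sketch of the same arithmetic. It uses `sqlite3` directly so it runs without a Django project, and the `post` table and `get_page` helper are hypothetical. Each call reads at most `per_page` rows plus a single `COUNT(*)`.

```python
import sqlite3

# Hypothetical table with 95 posts.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE post (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany(
    "INSERT INTO post (title) VALUES (?)", [(f"Post {i}",) for i in range(1, 96)]
)
conn.commit()


def get_page(page_number, per_page=10):
    """Return one page of posts, fetching at most per_page rows from the database."""
    if page_number < 1:
        raise ValueError("page_number must be >= 1")
    offset = (page_number - 1) * per_page
    # Roughly what Post.objects.order_by("id")[offset:offset + per_page] compiles to.
    rows = conn.execute(
        "SELECT id, title FROM post ORDER BY id LIMIT ? OFFSET ?",
        (per_page, offset),
    ).fetchall()
    total = conn.execute("SELECT COUNT(*) FROM post").fetchone()[0]
    num_pages = max(1, -(-total // per_page))  # ceiling division
    return {"items": rows, "page": page_number, "num_pages": num_pages}
```

With Django itself, `Paginator(Post.objects.order_by("id"), 10).page(n)` covers the same ground. Ordering by a unique column keeps page boundaries stable, and Django emits an `UnorderedObjectListWarning` when it is asked to paginate an unordered queryset.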