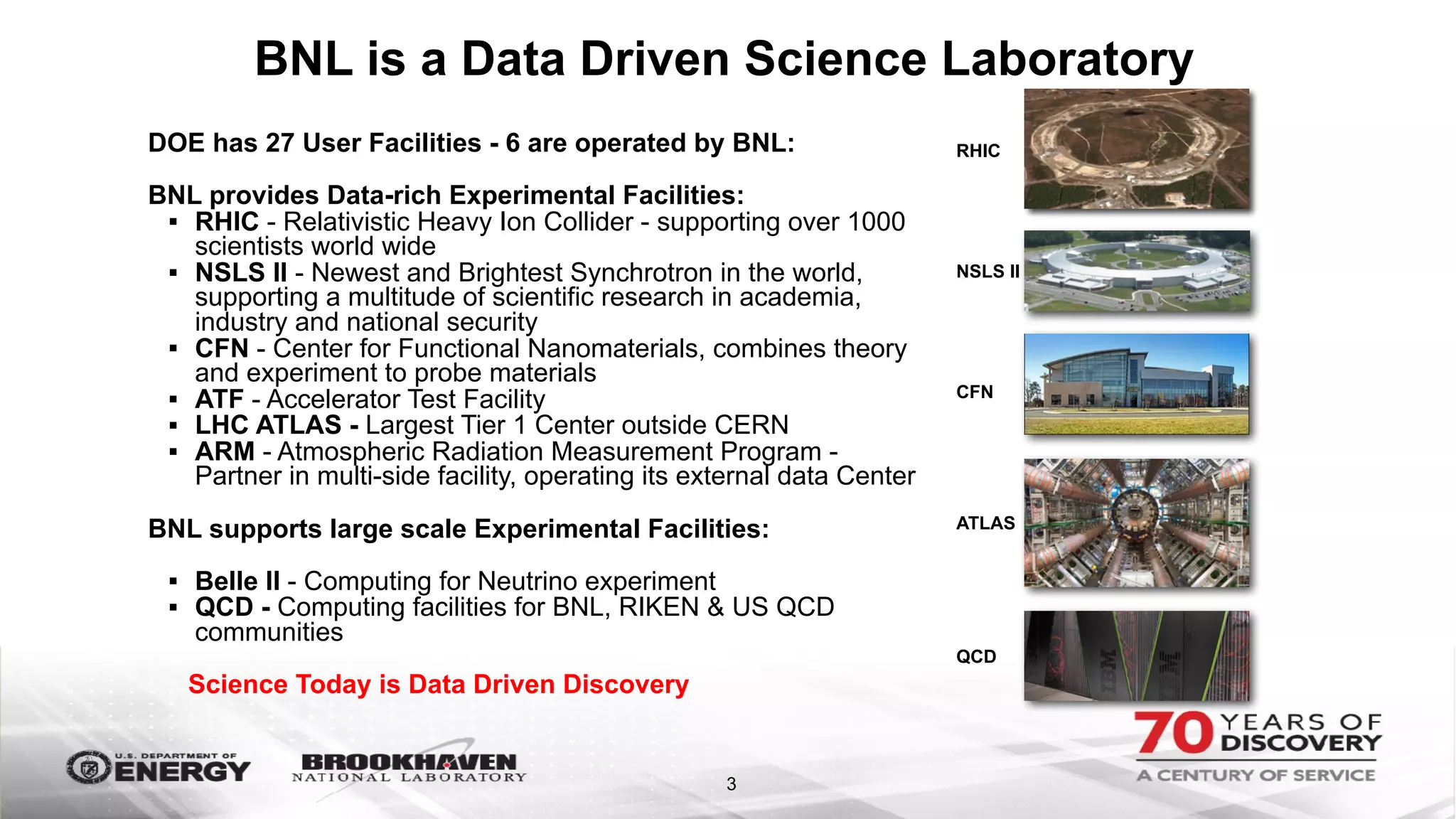

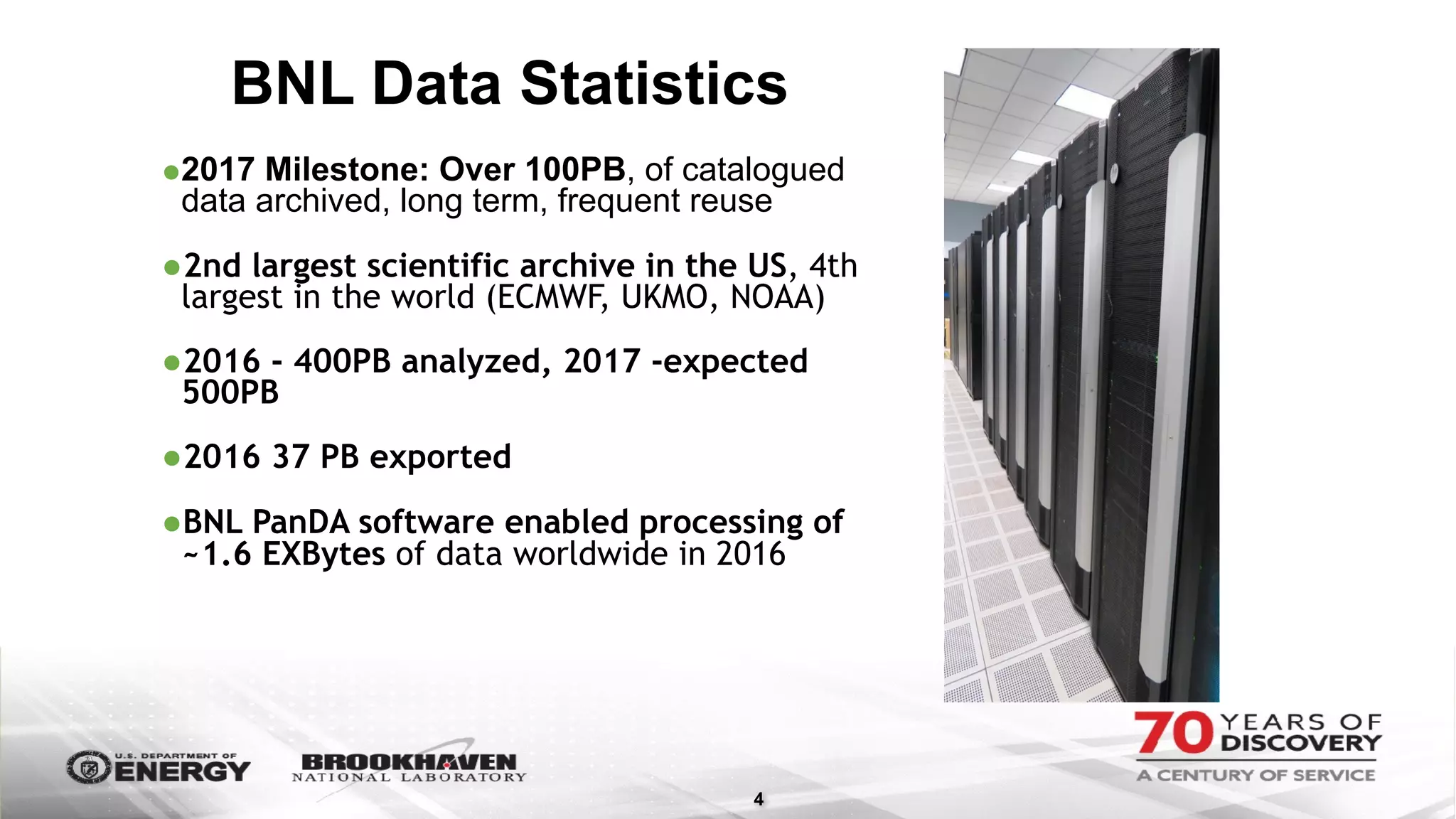

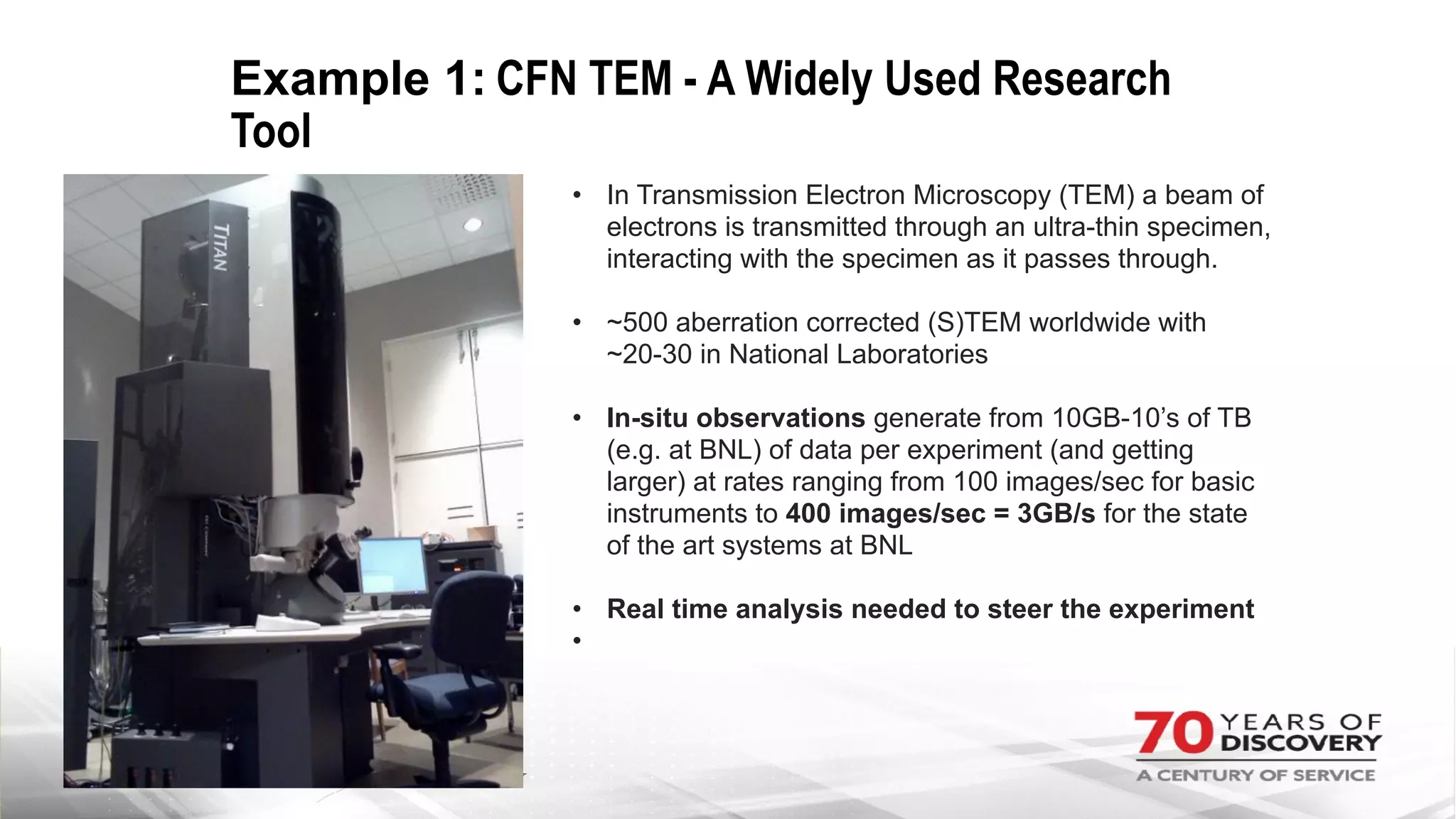

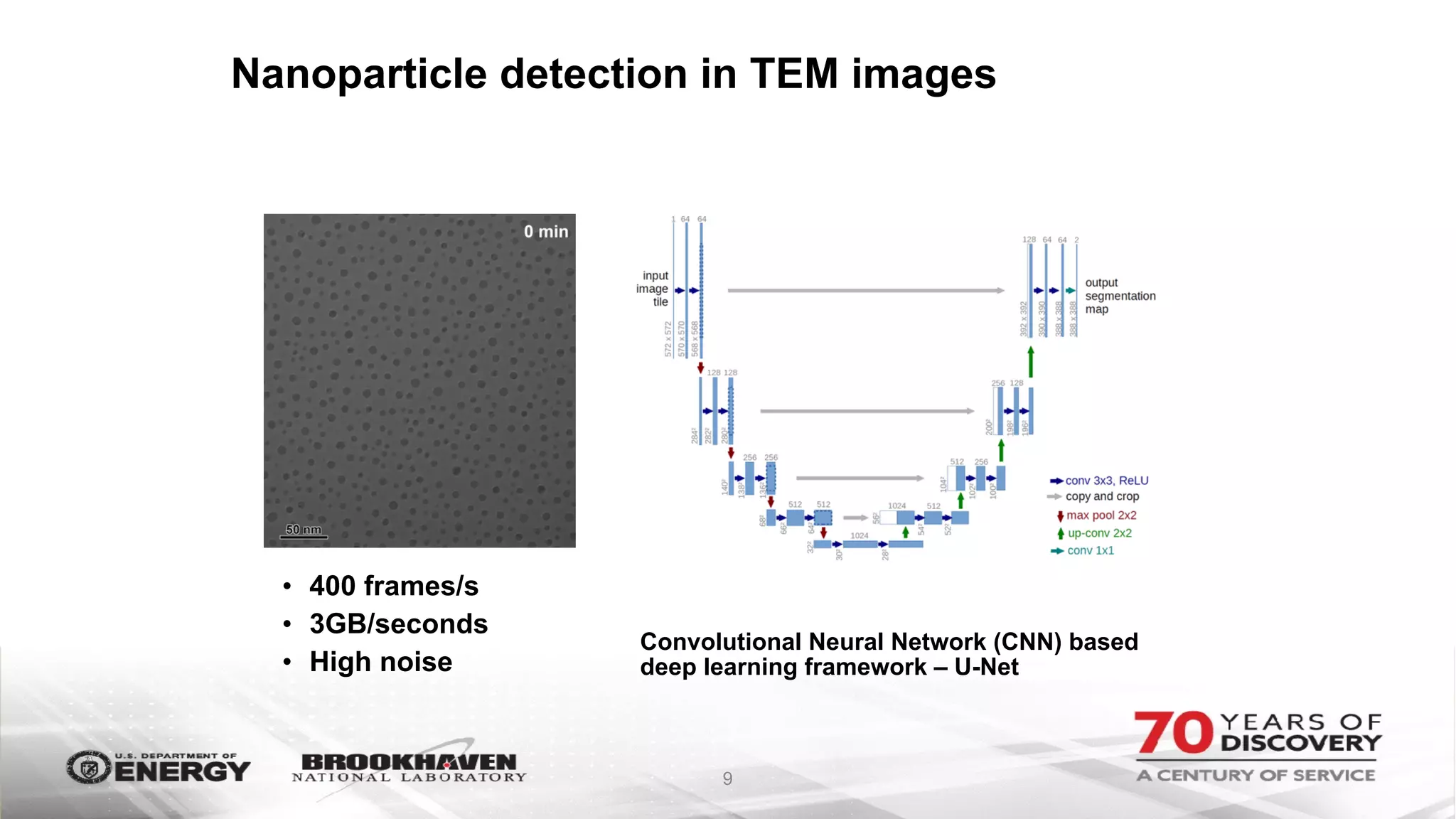

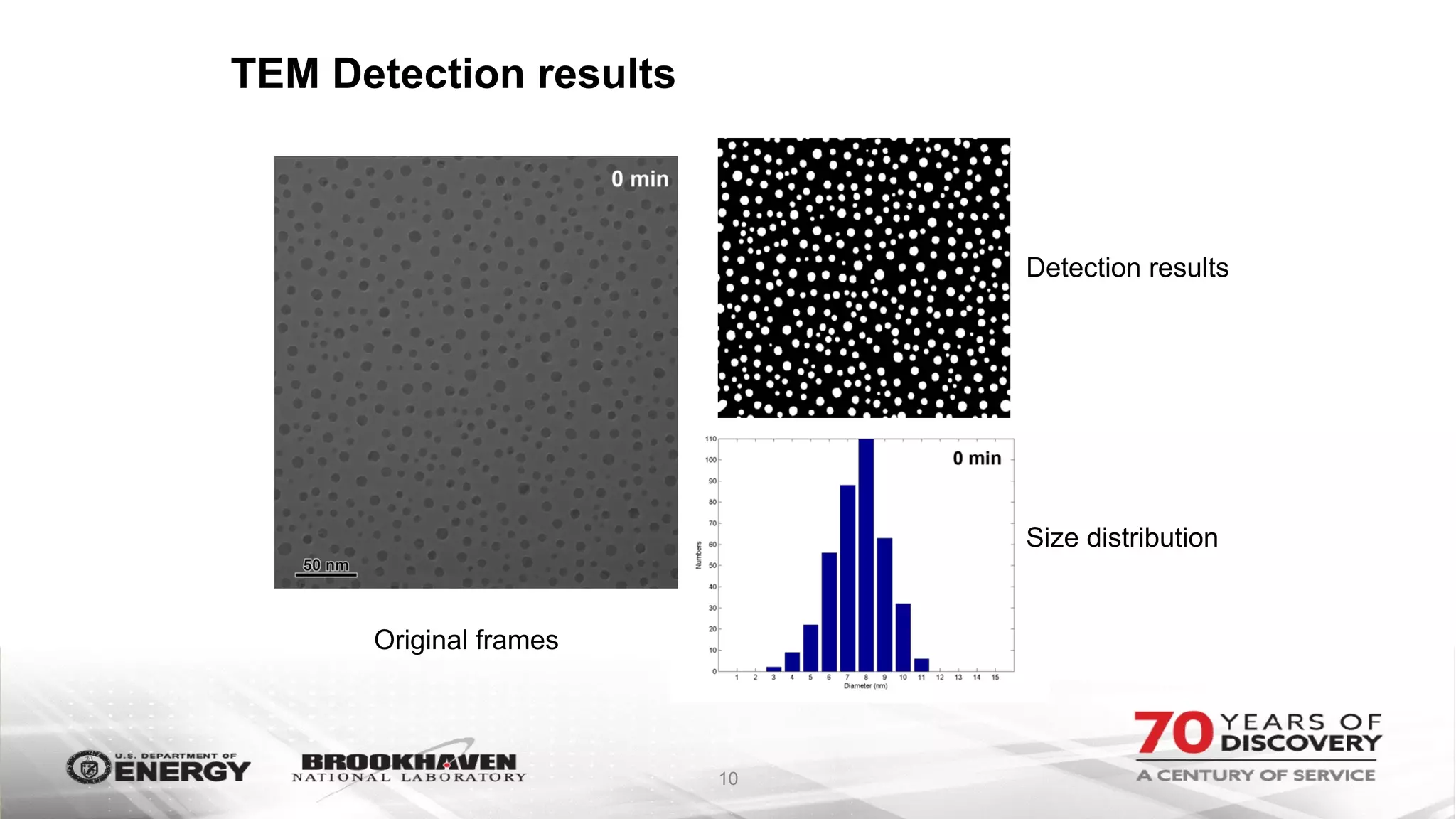

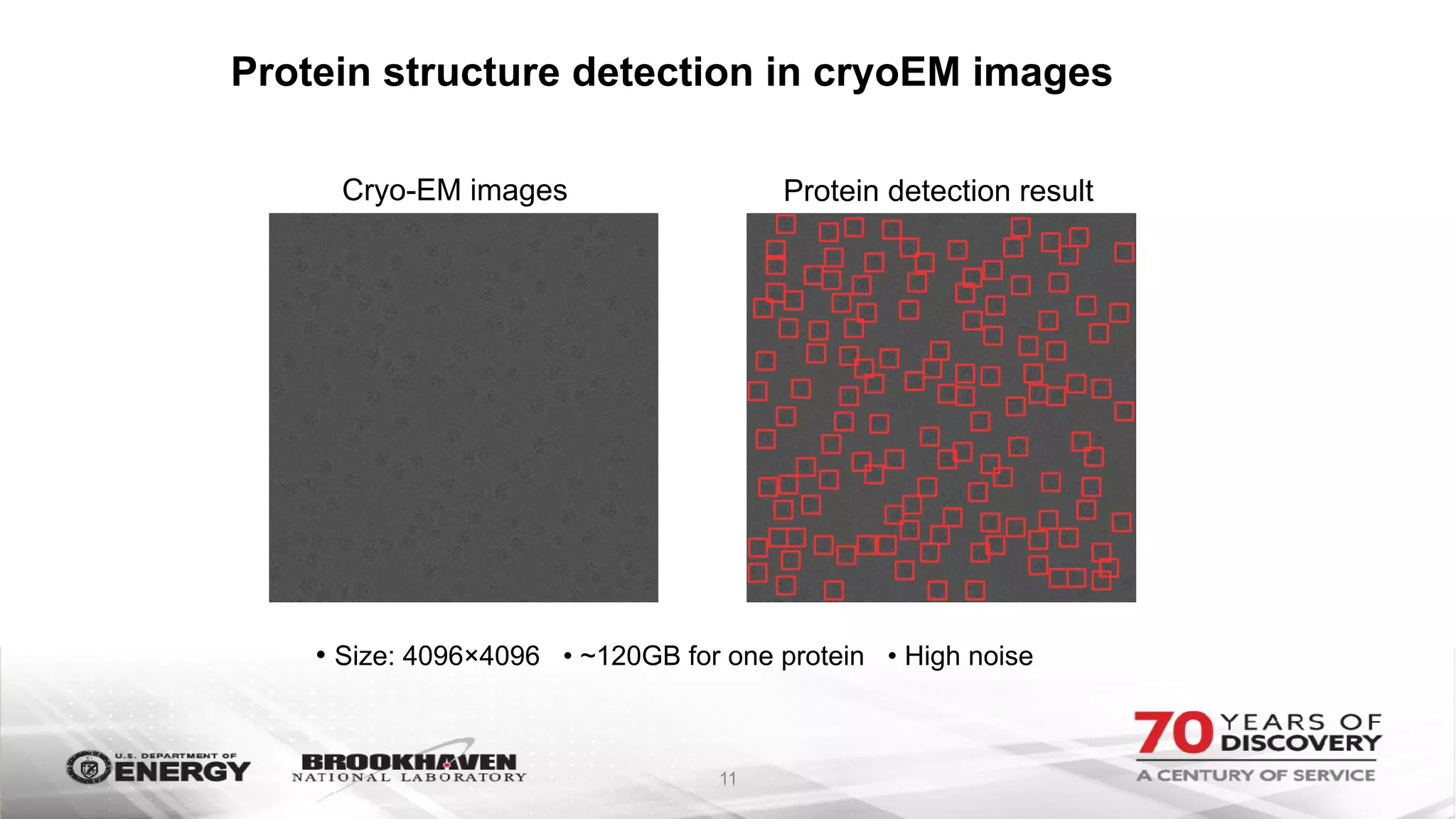

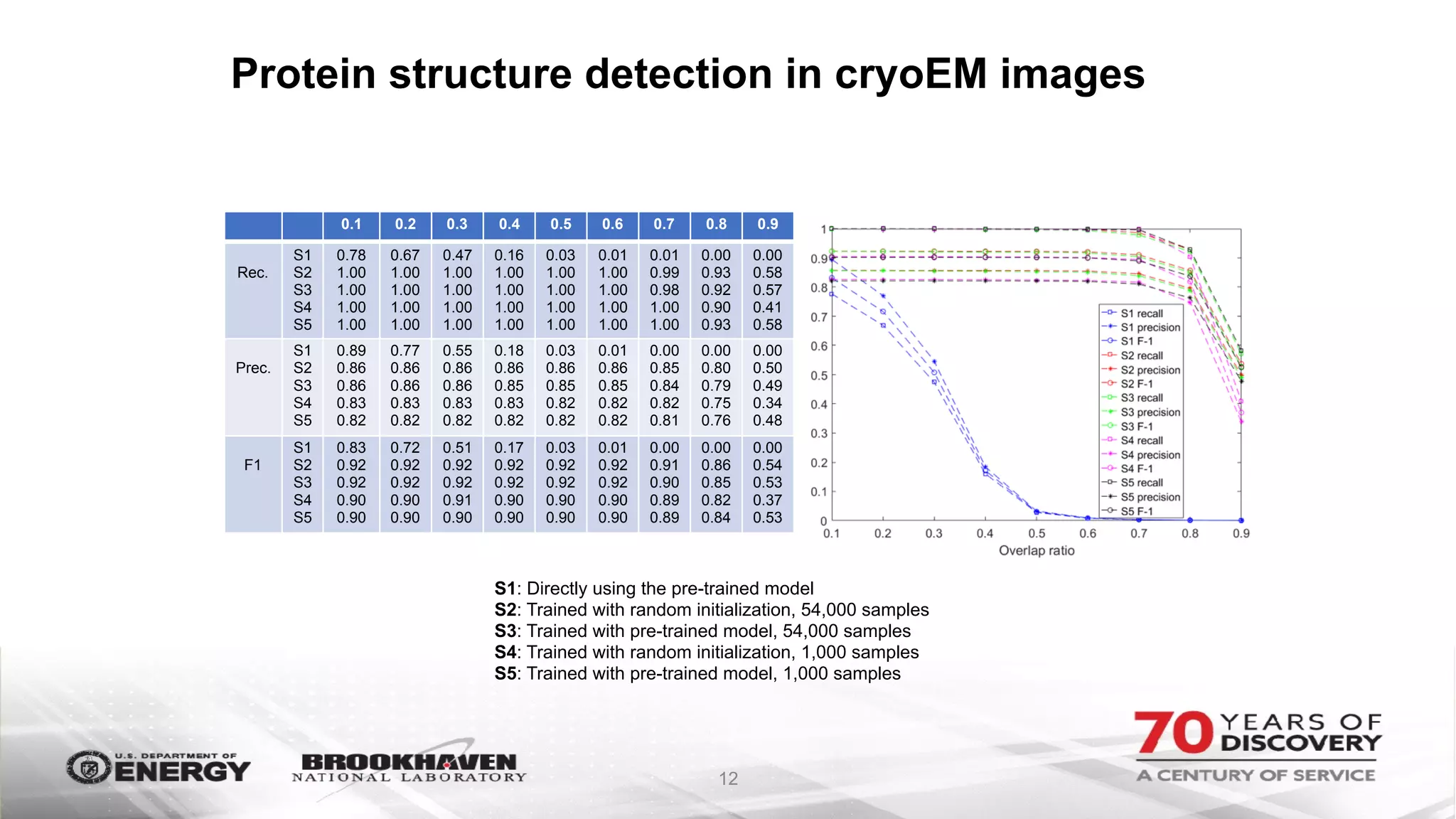

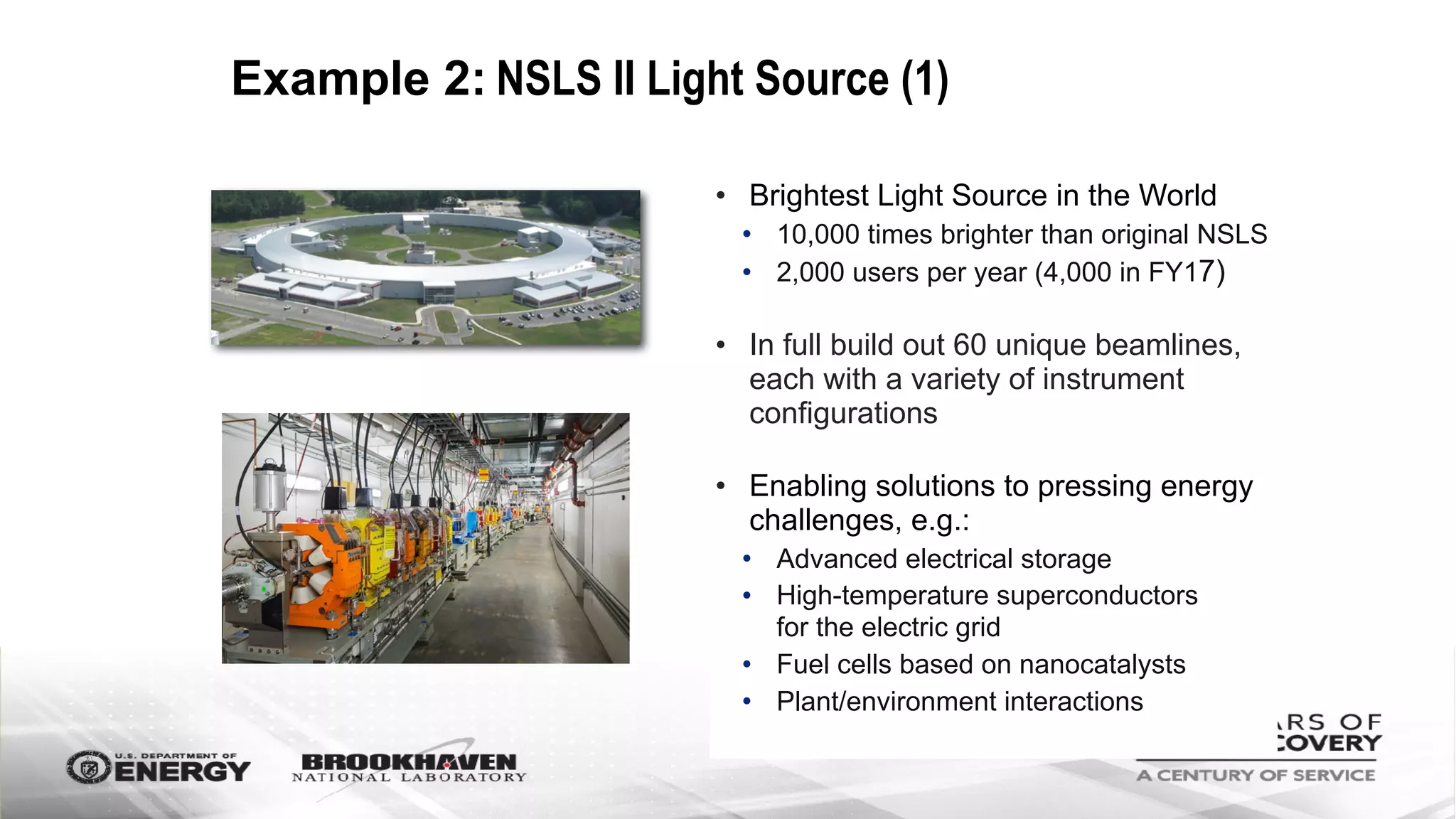

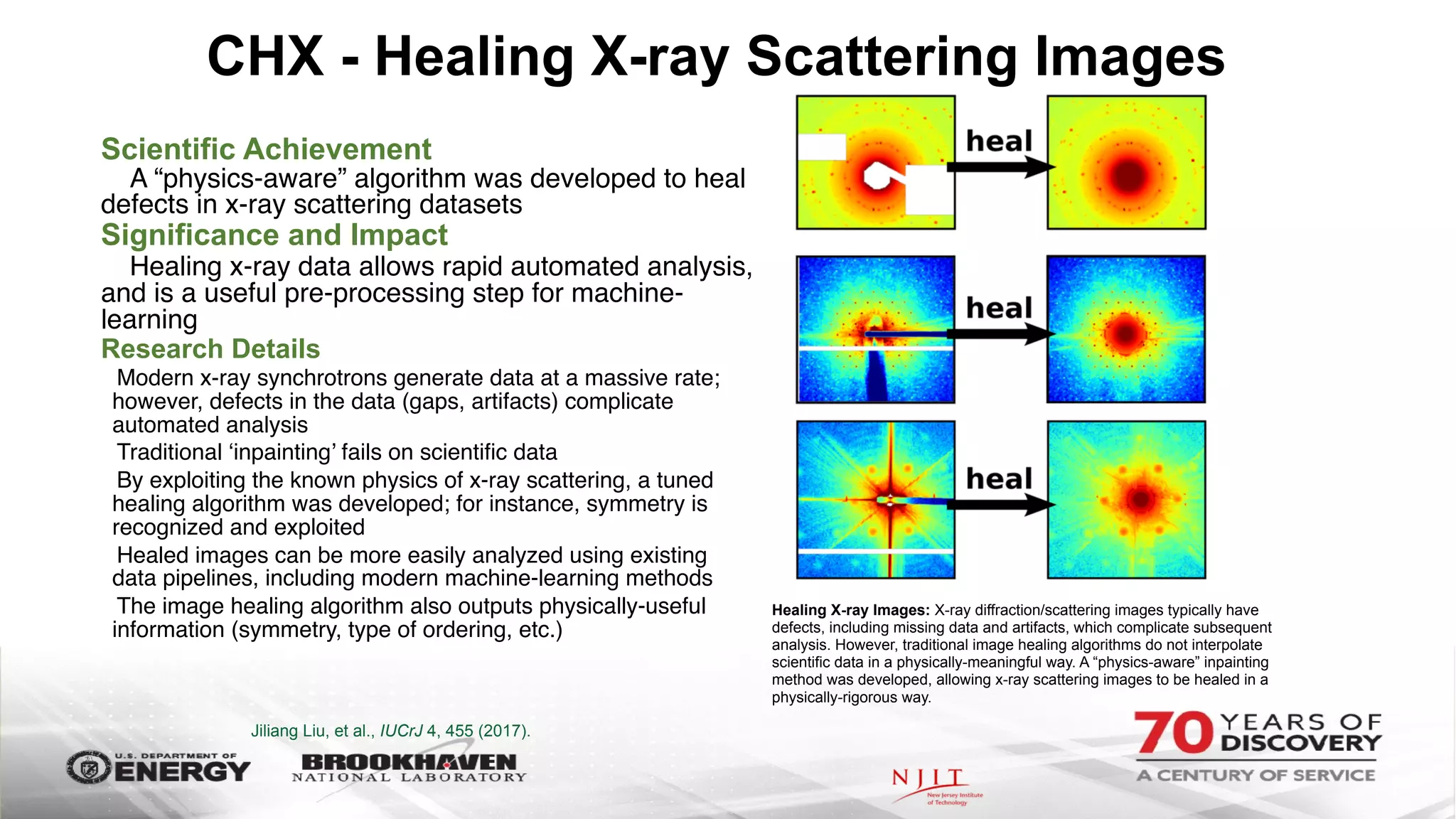

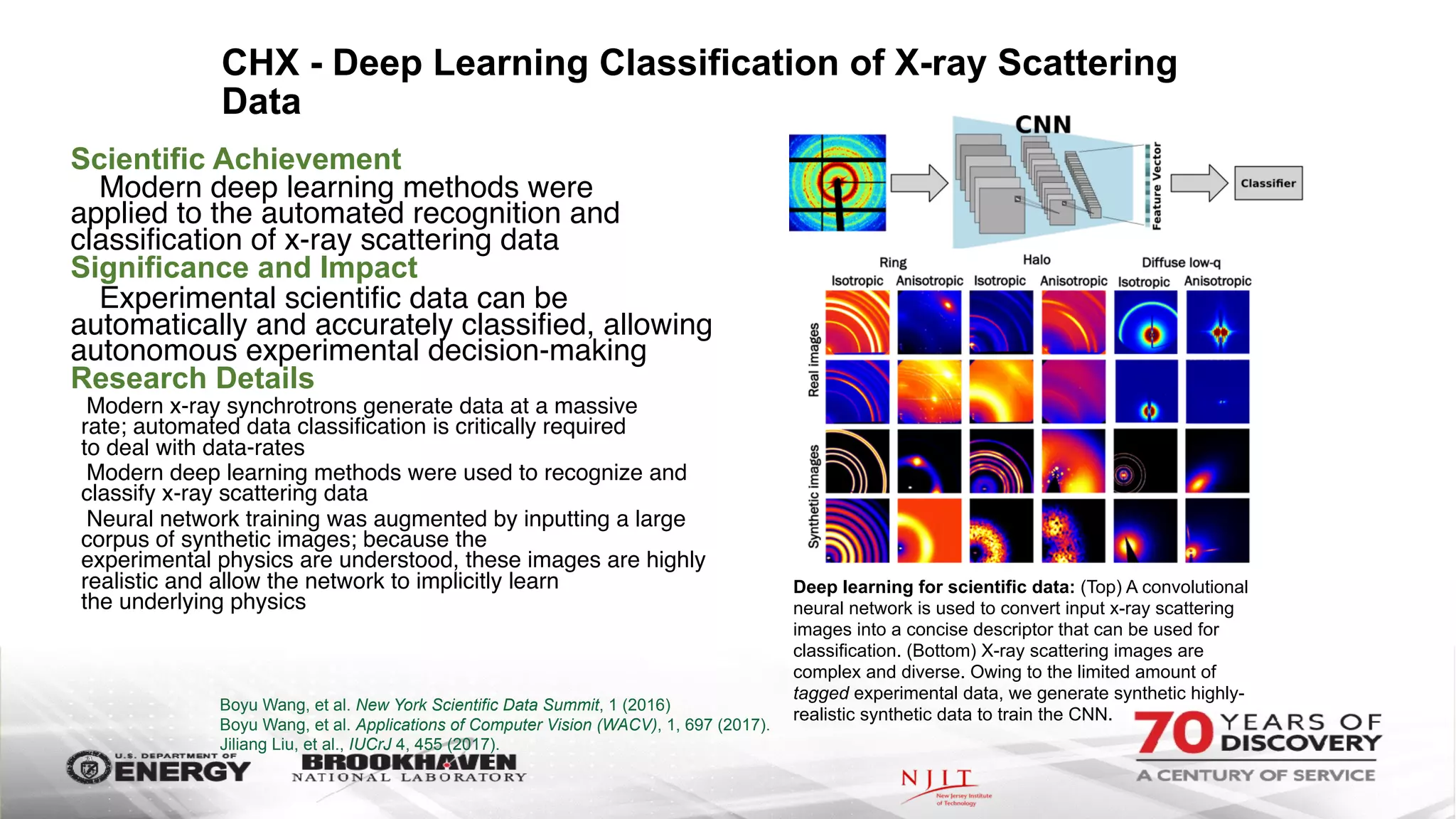

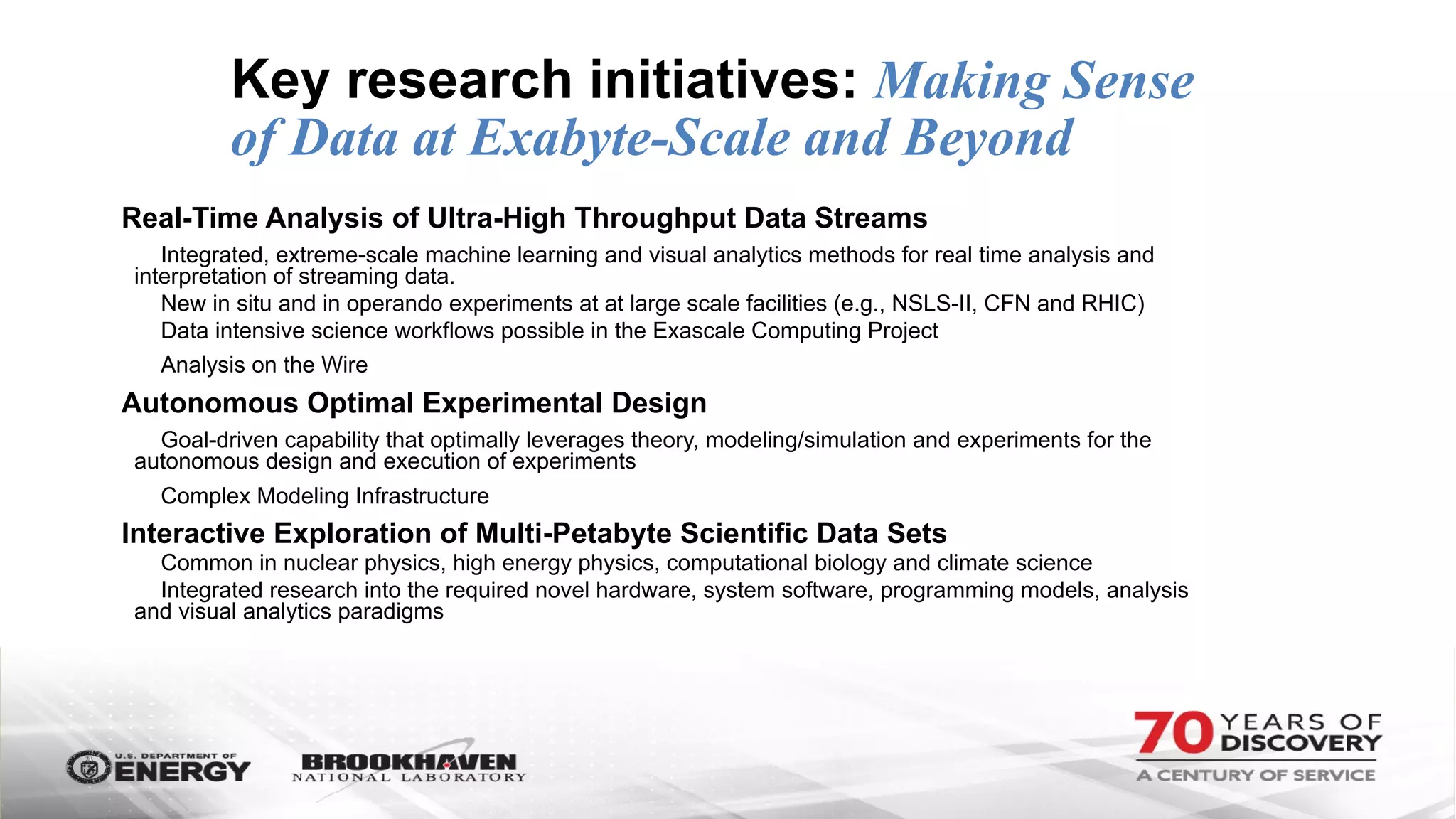

The document outlines the role of BNL (Brookhaven National Laboratory) as a data-driven science laboratory that operates six user facilities supporting a range of scientific research. It emphasizes the need for real-time analysis and decision-making to handle the large data volumes produced by advanced experimental techniques, particularly in nanotechnology and materials science. Key advances include machine learning algorithms for classifying data and for healing defects in x-ray scattering images, which extend the capabilities of current scientific methods.