The document outlines a systematic formative evaluation plan for the implementation of social studies education for democratic citizenship (SSEDC) in grades K-6. It details the evaluation committee structure, methodology, and goals, emphasizing mixed methods to collect both quantitative and qualitative data from various school community stakeholders. The aim is to identify strengths and weaknesses in the program's implementation to recommend improvements and foster accountable citizenship education in schools.

![Instructional Program Evaluation Plan 4

conduct a detailed evaluation by means of multiple data collection tools, from multiple sources.

In Part IV, possible findings from the data analysis will provide the basis for suggestions to

improve the program. The presentation will form the basis to conduct a formative process

evaluation and provide recommendations for improvement of teachers' implementation of

SSEDC.

Committee Selection

The director, supervisor, one other education officer, teachers, principals, a community

member, a parent, a private school principal, and a school mentor will make up the evaluation

committee. The committee members are responsible for responding to the strengths and

challenges of the program in order to refine it. Gard, Flannigan, and Cluskey (2004) noted that the

evaluation committee has the responsibility “to use data to identify strengths and weaknesses of

the program” (p. 176).

The coordinator of the development and revision processes and the supervisor coupled

with the stakeholders in the school community “…. are vital to the survival and success of the

[program]” (Gard et al., 2004, p. 4). Collaboration with external evaluators will ensure a

supportive environment (Chen, 2005). The director must guard against bias and conflicts of

interest (Posavac & Carey, 2007) because of the director's involvement in all stages of the program. Ethics

and values are two elements necessary to plan, conduct, and evaluate a program to ensure

accuracy of results. Using external and internal evaluators would help to lessen or eliminate

perceived internal bias while empowering internal and external stakeholders (Chen, 2005).

Part I: Background of the SSEDC Program

Before 2006, the last attempt at social studies curriculum review and renewal was in the

late 1980s, supported by USAID curriculum specialists. After a quarter of a century, rebranding](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-4-320.jpg)

![

of the social studies curriculum was necessary, including renaming the program Social Studies

Education for Democratic Citizenship (SSEDC). Besides datedness, factors affecting the social

behavior of citizens, especially among the youth, influenced the development of SSEDC.

In 2007, a team, including the Director of Curriculum as expert, a core of teachers, and

representatives from the environment ministry, completed a first draft of SSEDC. After several

reviews, SSEDC was piloted among a sample of schools and classes (K–9), over a period of 12

weeks in 2008. At various review sessions, all grade teachers had the opportunity to input

changes, based on the results and recommendations of the pilot implementation data.

Implementation of the revised instructional program took place in September 2009. Familiarity

seminars and training workshops were held to develop teacher competence and support the

implementation. Since 2009, the director, the supervisor, education officers,

principals, and senior teachers have continued to monitor the SSEDC.

Goals of SSEDC

The following is a section of the rationale of SSEDC (Ministry of Education, 2009)

outlining several reasons that influenced program development.

First, in Antigua and Barbuda [is] a Democratic state; independent from Britain

since 1981; Education for Democratic Citizenship (EDC) would mean that the

main outcome of schooling should be citizens with civic consciousness; not only

equipped with knowledge but [also have] the ability to demonstrate skills

appropriate to such a citizen, who also exhibit democratic values. Second, there

appears to be a democratic deficit. A high percentage of individuals (youth) do

not vote or even show much interest in politics. SSEDC should help to improve

individuals' levels of understanding of their lives and how they interact within](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-5-320.jpg)

![

society. Third, [since the mid-2000s] there has been an upsurge of crime and

violence. Of particular interest are the negative activities among the youth. These

include school violence, drug-related violence, increases in cases of HIV/AIDS,

home invasions coupled with robbery and rape, murders, and other gun-related

crimes. Fourth, surge in migration of Caribbean neighbors and an influx of other

migrants from China has opened up the avenue for the focus on themes, such as

civic ideals and practices, identity, traditions, multiculturalism, cultural diversity

and tolerance. All citizens need to tolerate peoples from other places, and also to

tolerate their differences (p. 1)

The focus of SSEDC is on relationships to promote (i) understanding the role and

responsibility of citizens in a democratic society and (ii) awareness of links and

interdependence locally, regionally, and globally. The overarching goal of SSEDC is citizenship,

achievable through:

1. Knowledge of social issues and concerns;

2. Skill development;

3. Development of values and attitudes; and

4. Social participation (p. 3)

Teachers should provide the preceding experiences. The program's rationale and goal

emphasize the outcome capabilities including knowledge, skills, values, and dispositions the

students should achieve. Students should also receive opportunities to participate in the society

by transferring classroom learning to perform the role of productive citizens. These long-term

outcomes should drive lesson objectives as well as the teaching-learning experiences.](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-6-320.jpg)

![

The director introduced the instructional guide with the following statement adapted from

the Organization of Eastern Caribbean States Educational and Research Unit (OERU):

The [program] offers a range of ideas and suggestions to help teachers

organize participatory learning experiences designed to prepare students

for lifelong learning…. Social studies classrooms place major emphasis

on student-centered learning through the acquisition and development of

specific cognitive skills and competencies. The focus is on learning

through activities, practice, and participation…. These skills are expected

to produce the ultimate outcomes of SSEDC: students as citizens,

acquiring and demonstrating social understanding and civic efficacy

(Ministry of Education, 2009, p. 2)

Social and Contextual Factors

The SSEDC instructional program is part of the curriculum in both private and public schools.

The pilot implementation findings highlighted some gaps, and the intent of the review was to

improve the program. Currently, the curriculum unit personnel provide support and conduct

monitoring evaluation to supply regular feedback that facilitates supervision

of the program. The qualitative and quantitative reports obtained from observation of teaching

using a rating scale, reflections, the classroom environment, students' work, and the interactions

reveal areas that mentors could assist with on a continuous basis.

Determination of the Status of the Program

The monitoring in public schools revealed that variations exist in the teaching-learning

contexts within and across schools and classes that result in differentiated delivery and students'

learning experiences. The nature of school leadership and support, supporting materials, and out](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-7-320.jpg)

![

ii. Provide assurance that each has the expertise or support required to

complete the work.

2. Feasibility

a. Practical procedures

i. Implement practical and responsive procedures aligned with the

operation of the program.

3. Propriety

a. Human rights and respect

i. Design and conduct evaluation to protect human and legal rights and

maintain the dignity of participants and stakeholders.

4. Accuracy

a. Sound designs and analyses

i. Employ technically adequate designs and analyses appropriate for the

purpose of the evaluation.

The description of the standards supports the importance of the stakeholders developing trust in

the expertise of the evaluator to plan and implement appropriate procedures and designs to

promote successful and valid evaluation. Stakeholders must also feel protected and respected.

The following discussion will show how the standards will influence the plan in the choice of

theory, stakeholders, model, design, and human rights and respect.

Program Theory

Chen (2005) supported the view that program theory is useful in “improving the

generalizability of evaluation results, contributing to social science theory, uncovering

unintended effects,… achieving consensus in evaluation planning…[and providing] …early](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-10-320.jpg)

![

is a summative approach to link teaching, learning, and assessment. Schneller (2008) identified

service learning as an offshoot of Dewey's theory of experience, describing the strategy as

“pedagogy, curriculum, activities and programmes that embrace organized, hands-on community

service and volunteerism to enhance student learning and the schooling experience” (p. 294).

This aspect of experiential learning culminates a period of learning, giving learners the

opportunity to demonstrate transfer of learning competencies in similar or new situations in the

school environment and in the community.

The documents will include the program document and teachers' lesson plans with their

reflections. The documents could bear evidence of the teaching, learning, and assessment

strategies that teachers use in planning and delivery of lessons. Numerous instructional

strategies are available for use in the classroom (see Teacher Questionnaire, Appendix B).

Planning is important since “without a careful plan for presenting content, [students'] experience

may be akin to a jigsaw puzzle” (Gunter, Estes, & Schwab, 2003, p. 39). Planning the

procedures portion of lesson plans requires teachers to select appropriate strategies to meet

identified needs, interests, motivations, and dispositions of learners. Teachers should consider

learner characteristics and learning styles when choosing an instructional strategy (Hunt,

Wiseman, & Touzel, 2009).

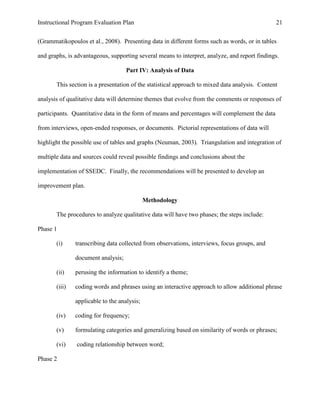

Sub-question 3. What problems are teachers experiencing?

The questionnaire should give further support to the delivery of the curriculum.

Although the curriculum is prescriptive, some variables might still affect the implementation process, including the

allotted periods, the scope of the topics, the available resources, the appropriateness of the

content for the prescribed grade level, and the learning environment. The evaluation could

highlight the difficulty and ease with which teachers were able to implement the objectives and](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-25-320.jpg)

![

Appendix B

Teacher Questionnaire

Teacher: ________________________________________________________________

School: _________________________________________________________________

Class: __________________________________________________________________

Term: __________________________________________________________________

Section A

i. How often do you use the social studies education for democratic citizenship (SSEDC) instructional guide?

Always ____ Sometimes ____ Never ____

ii. Lessons contain realistic teaching time frames. Yes _____ No ______

iii. Number of teaching lessons/activities. Sufficient ____ Insufficient ____

iv. Number of available resources listed. Sufficient ____ Insufficient ____

v. Content for the level of teaching. Appropriate ____ Inappropriate ____

Section B

1. What objectives did you cover this term?

[use unit & objective numbers]

2. What content was difficult to teach?

3. What content was easy to teach?

Section C: Strategies/methods

1. Which teaching-learning strategies or activities do you use?

Research ____

Grouping ____

Peer teaching ____

Investigation ____

Simulations ____

Role Play ____

Dramatization ____

Community Service Learning ____

Lecture ____

Reading textbook ____

Project ____

Poster ____

Chart ____

Poem/song ____

Displays ____

Exhibitions ____

Questioning ____

Field trip ____

Journal ____

Discussion ____

Vocabulary development ____

Presentation ____

Notes ____

Class work ____](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-33-320.jpg)

![

2. Which assessment methods do you use?

Journals ____

Investigation & Projects ____

Observation ____

Oral assessment ____

Pencil & Paper Tests/Exercises ____

Worksheets ____

Practical / Performance Assessment ____

Portfolio Assessment ____

Peer assessment ____

Questionnaires ____

Community Service Learning ____

Section D

Respond to the following:

1. Describe TWO main difficulties you encounter in using the curriculum/program guide.

2. State THREE suggestions for improving the curriculum.

3. Explain the importance of SSEDC in the national curriculum.

4. Other comments. [e.g., your feelings, your practice, and students' responses]](https://image.slidesharecdn.com/programevaluationplan-130809205232-phpapp02/85/Program-evaluation-plan-34-320.jpg)