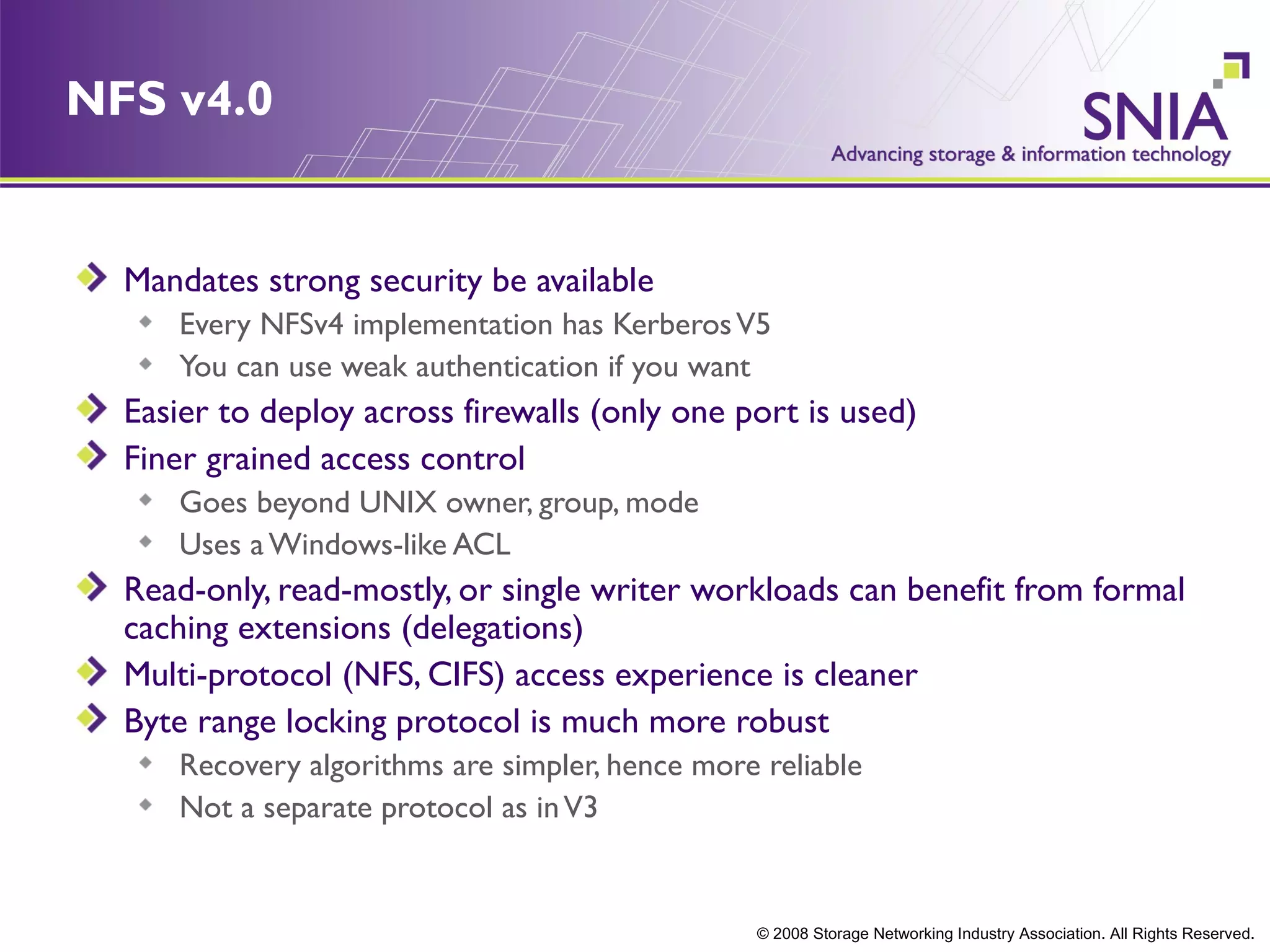

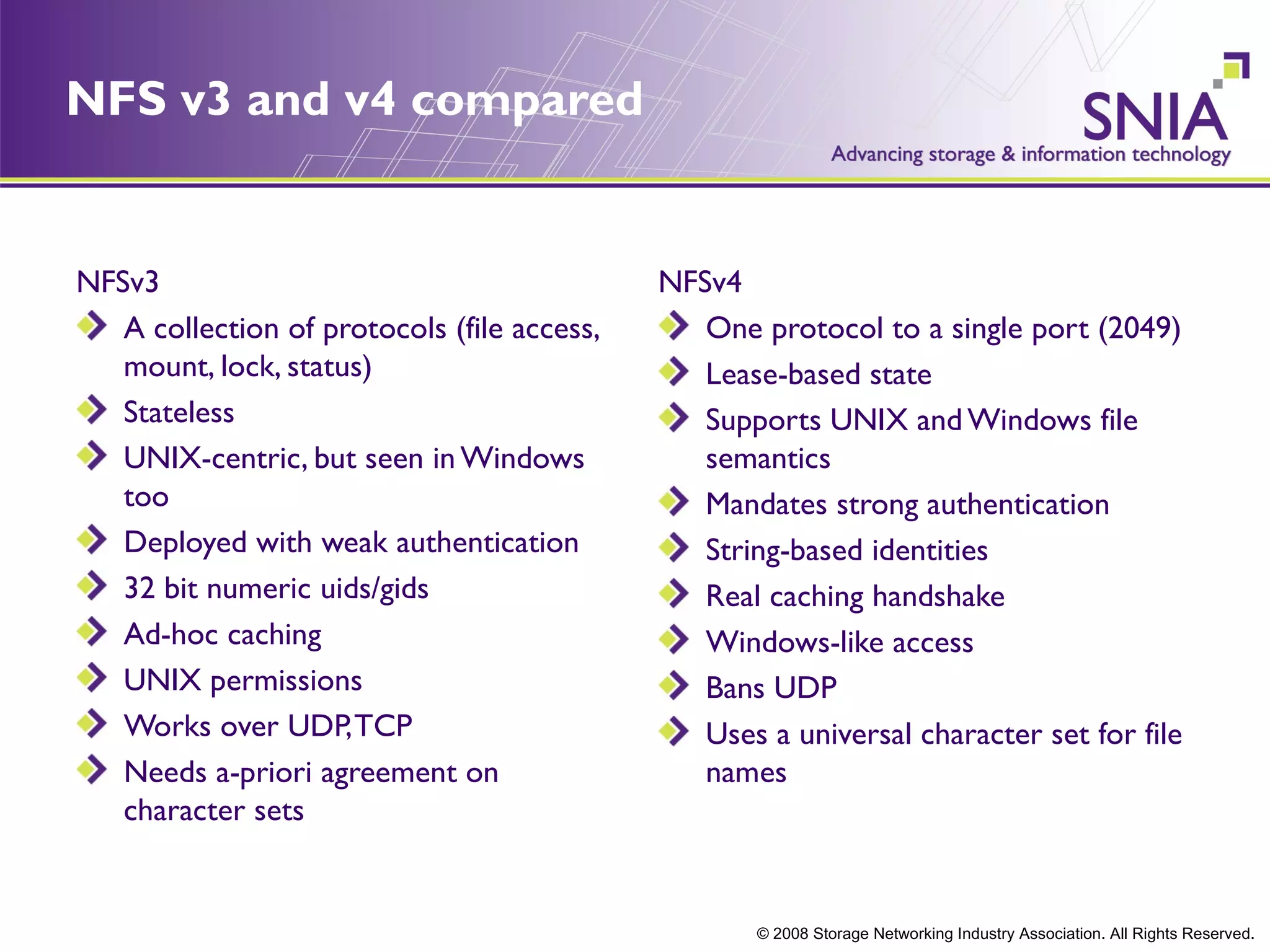

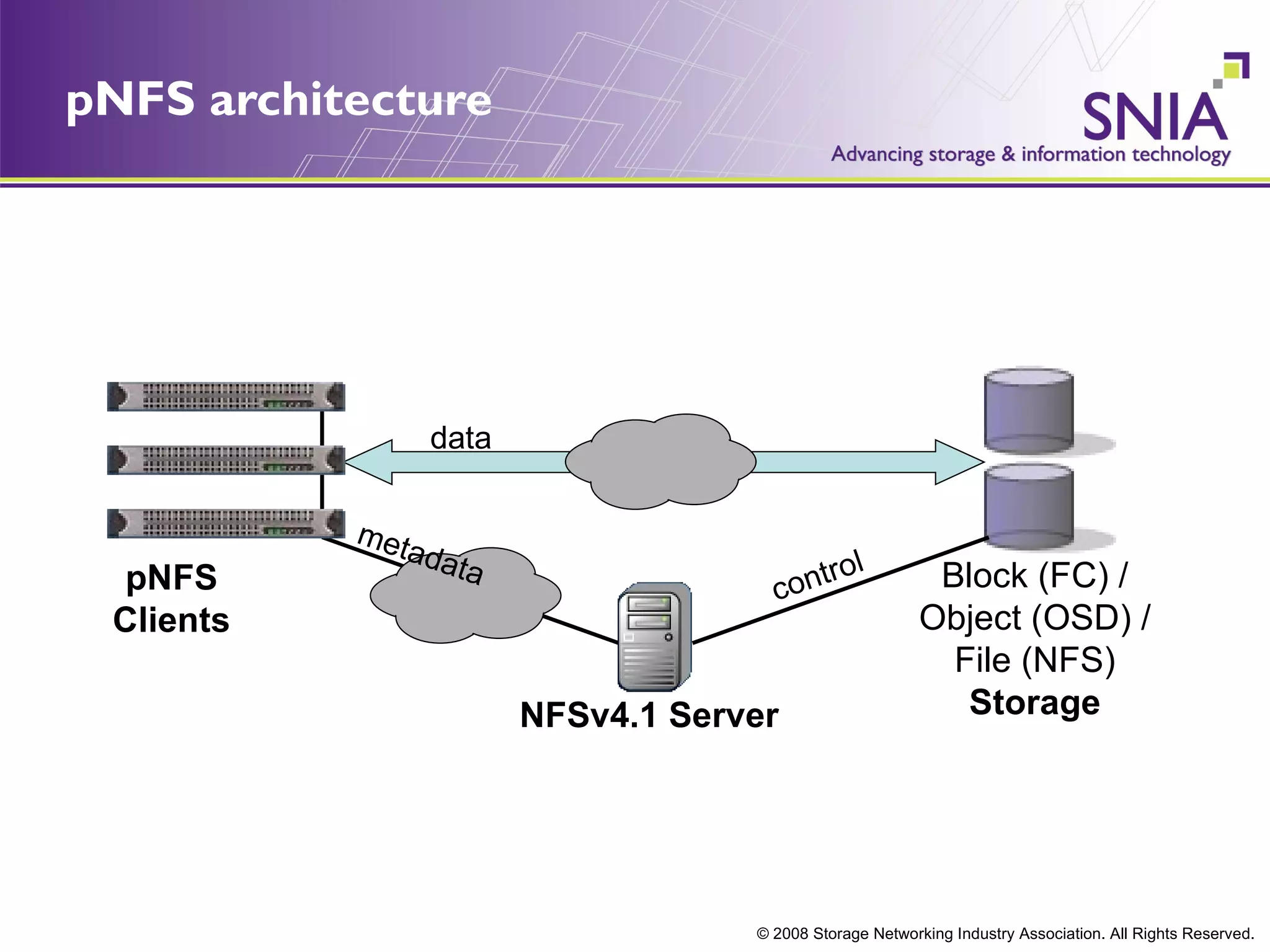

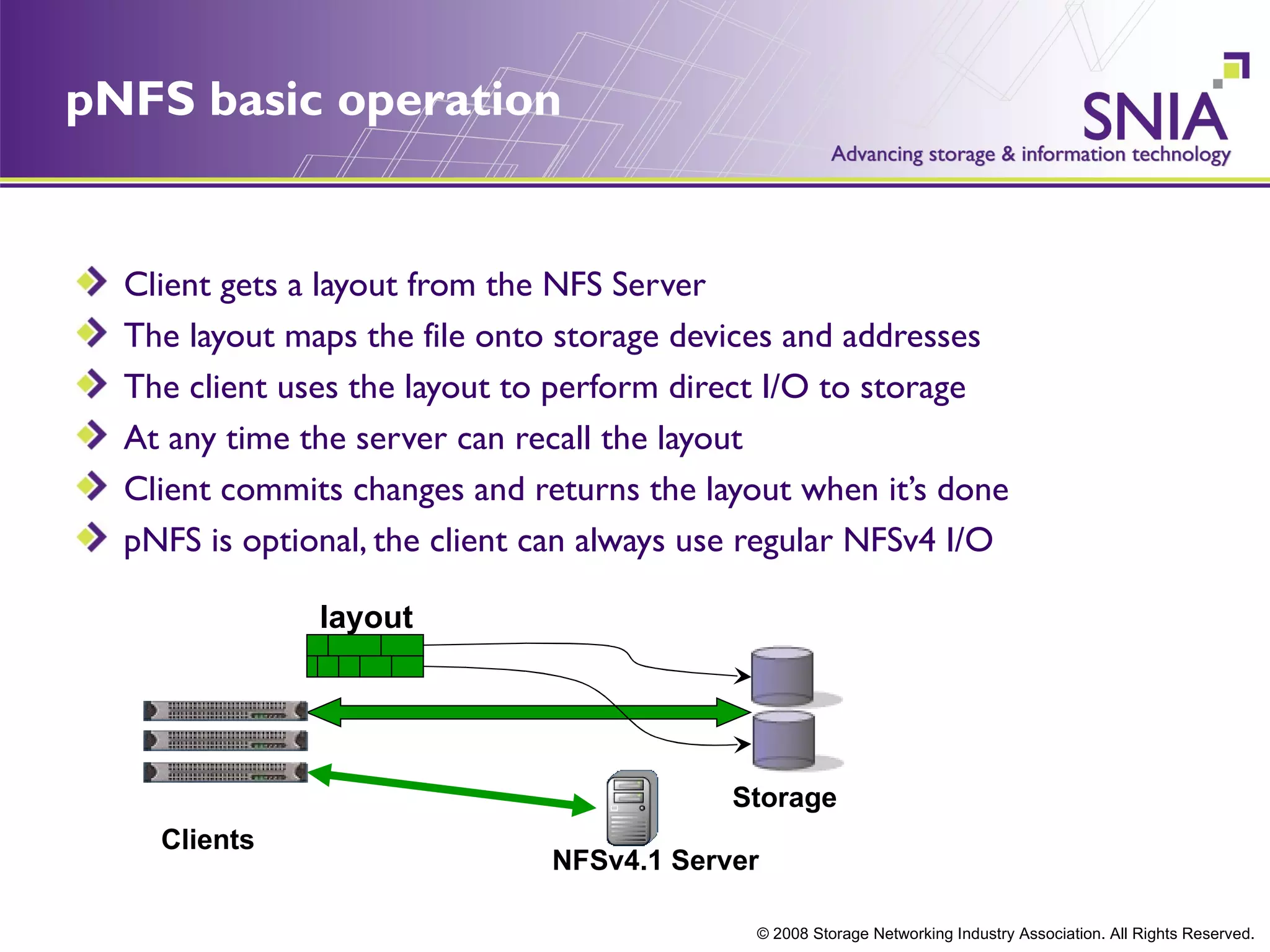

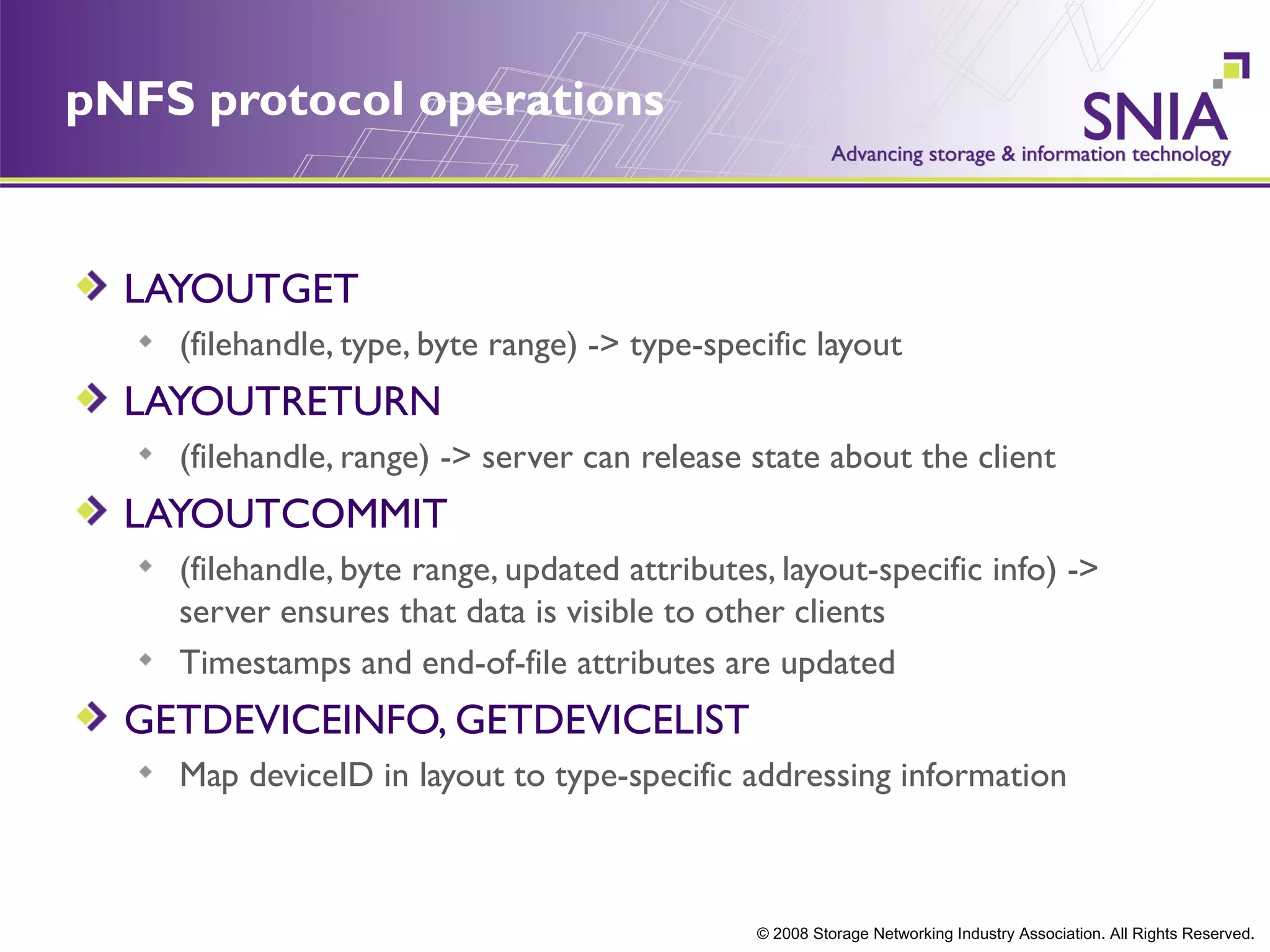

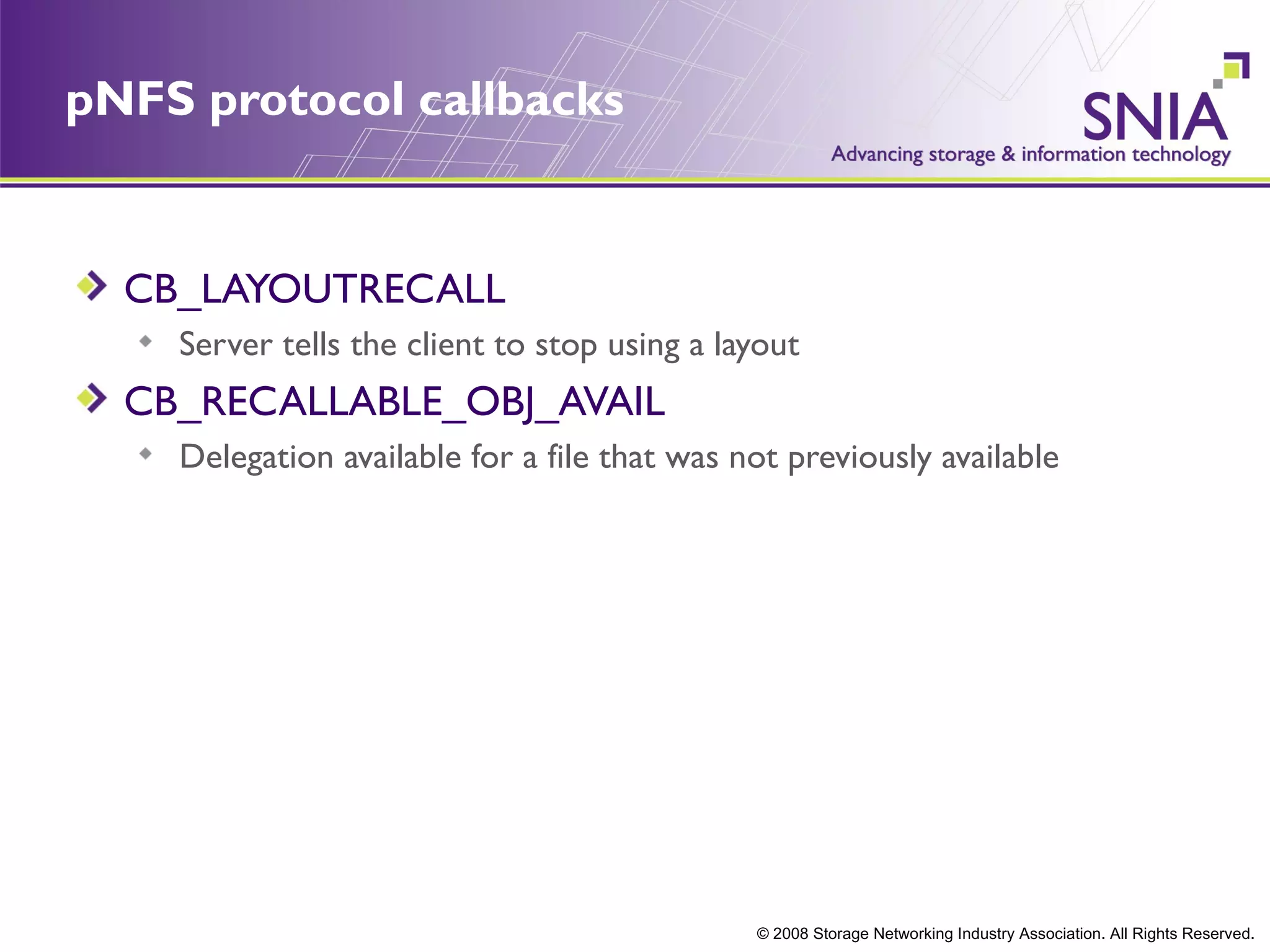

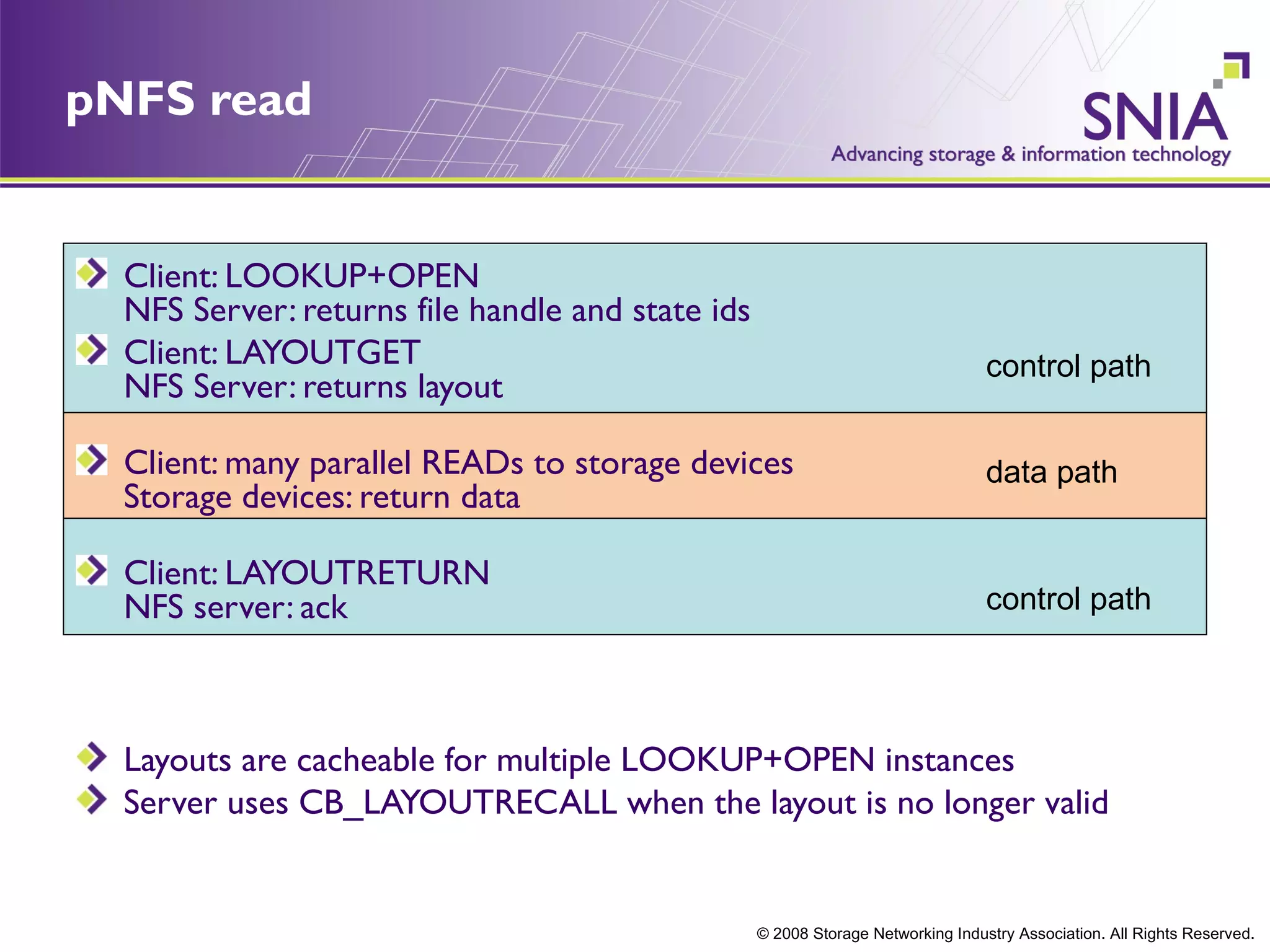

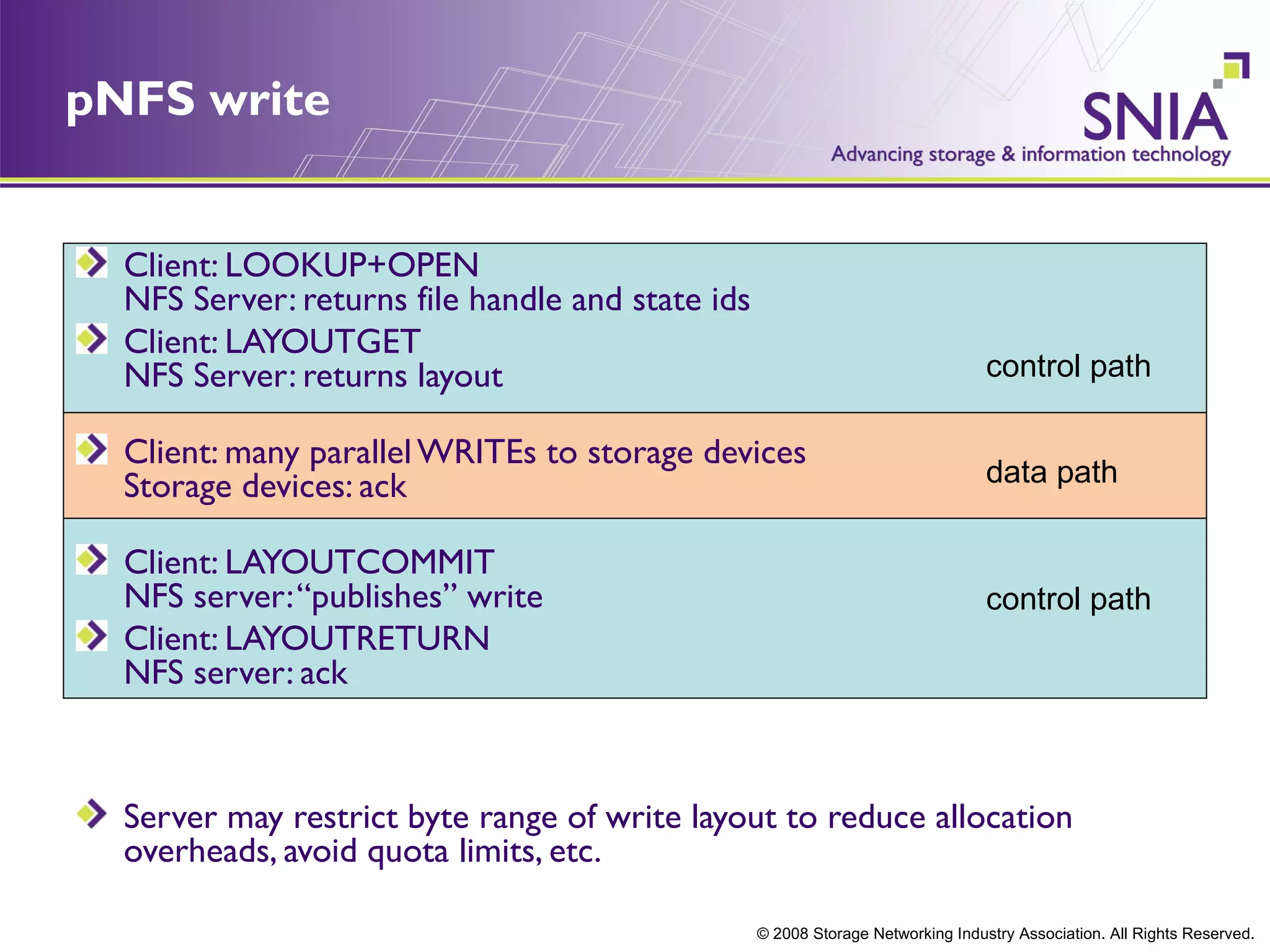

NFS v4.0 introduced mandatory strong security, easier firewall deployment, finer-grained access control, and more robust byte-range locking. pNFS extends NFS v4 so that clients can access storage devices directly, reading and writing in parallel when a file is striped across those devices. A pNFS client first obtains a layout from the NFS server, which maps the file to storage addresses, and then performs I/O directly against the storage devices. The control path to the server stays in use throughout: the server can recall a layout, and the client commits written data and returns layouts over it.
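
To make the role of a layout concrete, the following is a minimal sketch of how a client might turn a file byte range into per-device I/O requests once it holds a layout. It assumes a simple round-robin striping with densely packed stripe units; the `Layout` class, the `map_range` function, and the device names are hypothetical illustrations, not the protocol's actual layout encoding or any real client API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Layout:
    """Hypothetical, simplified layout: round-robin stripes over devices."""
    stripe_unit: int            # bytes per stripe unit (assumed fixed)
    devices: Tuple[str, ...]    # storage device identifiers, in stripe order


def map_range(layout: Layout, offset: int, length: int) -> List[Tuple[str, int, int]]:
    """Split a file byte range into (device, device_offset, length) pieces."""
    pieces = []
    end = offset + length
    while offset < end:
        stripe_index = offset // layout.stripe_unit
        within = offset % layout.stripe_unit
        device = layout.devices[stripe_index % len(layout.devices)]
        # Assumed dense packing: each device stores only its own stripe
        # units, back to back.
        device_offset = (stripe_index // len(layout.devices)) * layout.stripe_unit + within
        chunk = min(layout.stripe_unit - within, end - offset)
        pieces.append((device, device_offset, chunk))
        offset += chunk
    return pieces


if __name__ == "__main__":
    layout = Layout(stripe_unit=4, devices=("dev-a", "dev-b"))
    for piece in map_range(layout, offset=2, length=8):
        print(piece)
```

Each resulting piece targets a single device, so the client can issue the pieces concurrently over the data path while layout management stays on the control path to the server.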