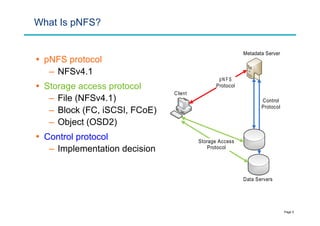

Parallel NFS (pNFS) lets clients read and write file data directly on storage devices, so data I/O no longer passes through the metadata server, which continues to handle metadata operations. This improves scalability and performance over traditional NFS, where a single server sits in the data path for every request. Key aspects of pNFS include operations for retrieving and returning file layouts, which describe how a file's data is distributed across storage devices, and the protocols that coordinate clients, metadata servers, and storage devices. pNFS was standardized in 2010 as part of NFSv4.1 (RFC 5661), and implementations have since appeared in Linux distributions and vendor solutions. Open issues remain around layout state handling and compatibility between different layout types.
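
To make the idea of a layout concrete, the following is a minimal sketch of how a client might use one to decide which storage device holds a given byte range. It assumes a simple round-robin striping scheme with densely packed stripe units; `StripedLayout`, `locate`, `split_read`, and the device names are hypothetical and illustrative only. They do not correspond to the actual pNFS wire format or to any particular layout type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StripedLayout:
    """Hypothetical, simplified layout: round-robin stripes over devices."""
    stripe_unit: int   # bytes per stripe unit (assumed fixed)
    devices: tuple     # storage device identifiers, in stripe order


def locate(layout, offset):
    """Map a file byte offset to (device, offset_on_device)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    stripe_index = offset // layout.stripe_unit
    device = layout.devices[stripe_index % len(layout.devices)]
    # Assumption: each device stores its stripe units back to back.
    full_rounds = stripe_index // len(layout.devices)
    device_offset = full_rounds * layout.stripe_unit + offset % layout.stripe_unit
    return device, device_offset


def split_read(layout, offset, length):
    """Split a file read into per-device (device, device_offset, length) requests."""
    requests = []
    end = offset + length
    while offset < end:
        device, device_offset = locate(layout, offset)
        # Never cross a stripe-unit boundary within one request.
        room = layout.stripe_unit - offset % layout.stripe_unit
        chunk = min(room, end - offset)
        requests.append((device, device_offset, chunk))
        offset += chunk
    return requests


layout = StripedLayout(stripe_unit=65536, devices=("ds0", "ds1", "ds2"))
for request in split_read(layout, offset=65000, length=1000):
    print(request)
```

In this sketch a read that straddles a stripe boundary becomes two requests to two different devices, which the client could issue in parallel. In a real deployment the layout would be obtained from the metadata server and could be recalled at any time, which this example does not model.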