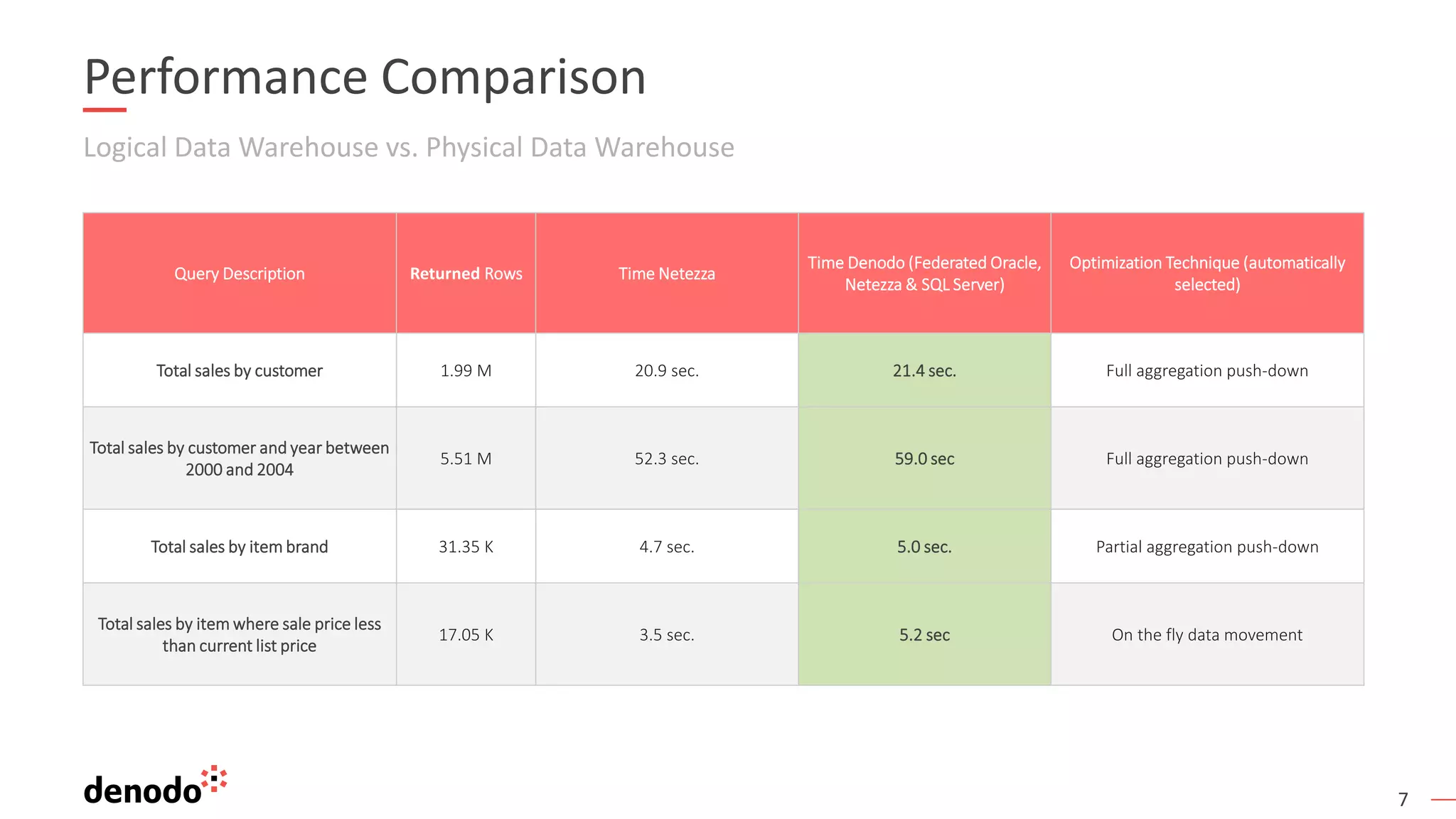

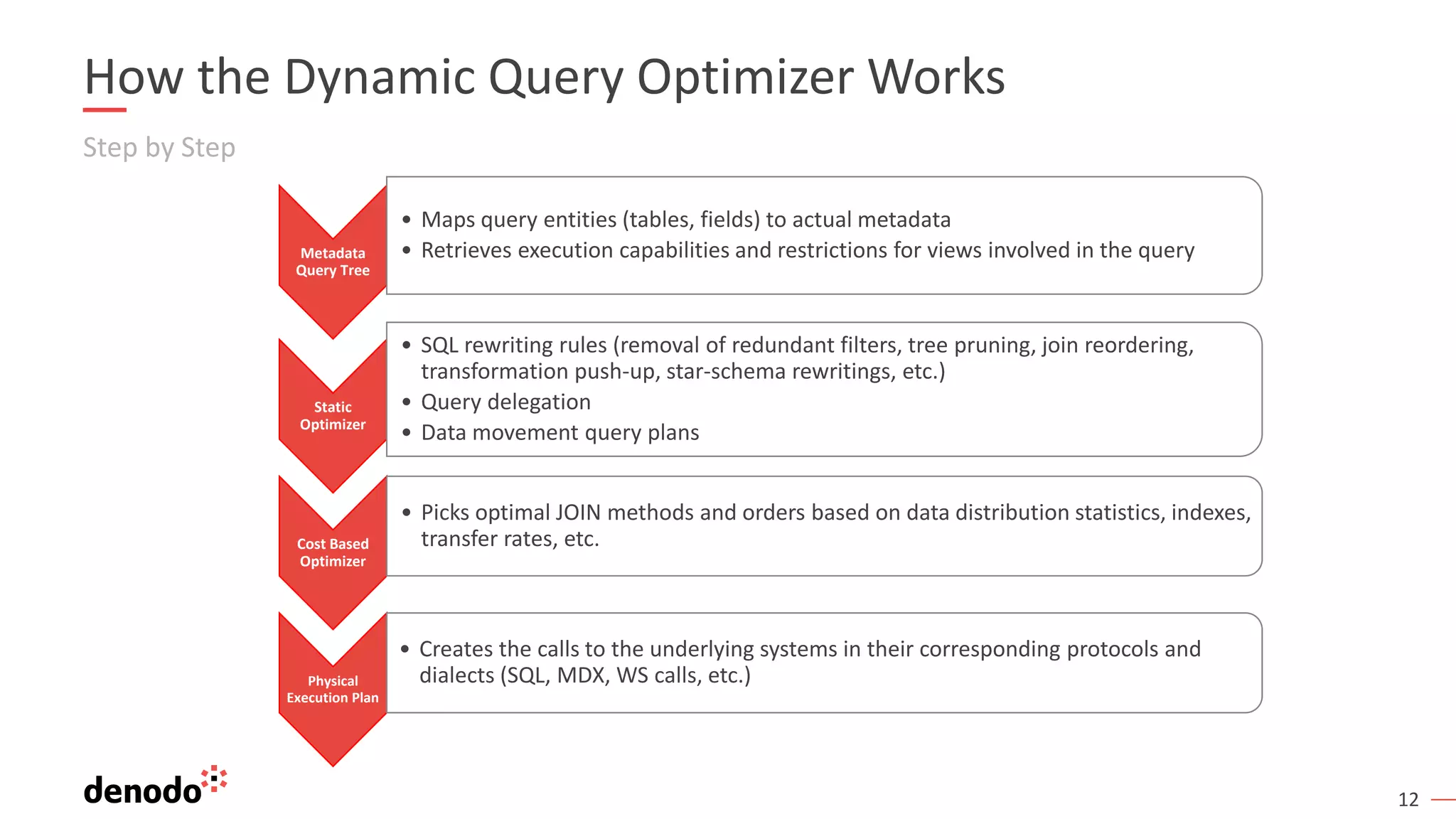

The document discusses the logical data warehouse (LDW) and compares its performance with that of physical data warehouses, focusing on Denodo's data virtualization solutions. It challenges the myth that data virtualization is inherently slower than ETL processes, and it highlights techniques that improve performance, such as query optimization and selective data movement. The reported test results indicate that, with an appropriate architecture and these optimizations, an LDW can achieve competitive processing speeds by relying on dynamic query execution and caching strategies.
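One general idea behind "query optimization and selective data movement" can be sketched as follows: instead of shipping every raw row from a source to the virtualization layer, the layer pushes a partial aggregation down to the source so that only subtotals cross the network. This is a minimal, hypothetical illustration of aggregation pushdown, not Denodo's actual optimizer; the sample data and the functions `naive_federation` and `pushdown_federation` are assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical remote fact table: (customer_id, amount) rows held by a source system.
SALES = [(i % 100, i % 7) for i in range(10_000)]
# Hypothetical dimension table available to the virtual layer: customer_id -> region.
CUSTOMERS = {cid: ("EU" if cid % 2 == 0 else "US") for cid in range(100)}


def naive_federation(sales, customers):
    """Move every fact row to the virtual layer, then join and aggregate there."""
    transferred = list(sales)  # all raw rows cross the network
    totals = defaultdict(int)
    for cid, amount in transferred:
        totals[customers[cid]] += amount
    return dict(totals), len(transferred)


def pushdown_federation(sales, customers):
    """Push a partial GROUP BY customer_id to the source; move only subtotals."""
    partial = defaultdict(int)
    for cid, amount in sales:  # this loop stands in for work done at the source
        partial[cid] += amount
    transferred = list(partial.items())  # one row per customer crosses the network
    totals = defaultdict(int)
    for cid, subtotal in transferred:  # finish the join and aggregation centrally
        totals[customers[cid]] += subtotal
    return dict(totals), len(transferred)


if __name__ == "__main__":
    naive_totals, naive_rows = naive_federation(SALES, CUSTOMERS)
    pushed_totals, pushed_rows = pushdown_federation(SALES, CUSTOMERS)
    print(naive_rows, pushed_rows)  # 10000 100
    print(naive_totals == pushed_totals)  # True
```

Both strategies return the same per-region totals, but in this toy setup the pushdown plan transfers 100 rows instead of 10,000. That reduction in data movement is the kind of effect that lets a virtualized query stay competitive with one run against physically consolidated data.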