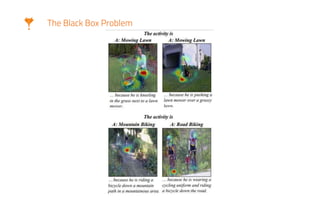

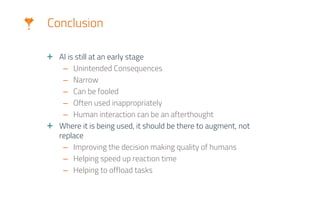

The document discusses the application of AI in cybersecurity, focusing on user and entity behavior analytics (UEBA) and its role in monitoring human behavior for compliance and in learning to recognize previously unknown patterns. It highlights how AI can enhance human capabilities, particularly in malware detection and incident response. It also cautions against misuse of AI and warns of its potential for unintended consequences. The conclusion emphasizes that AI should augment human decision-making rather than replace it, especially during critical cybersecurity tasks.