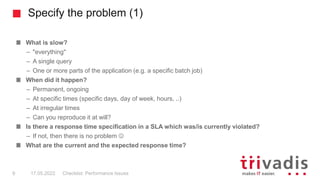

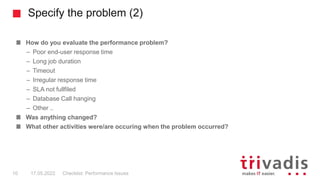

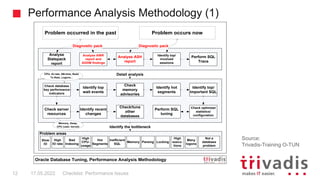

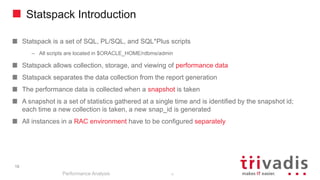

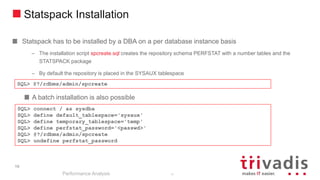

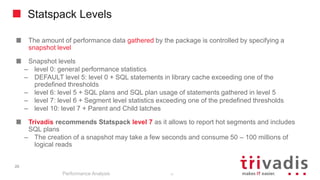

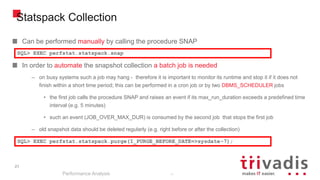

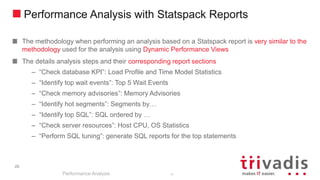

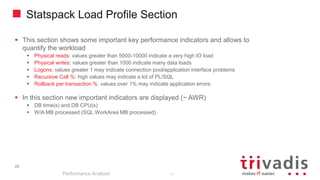

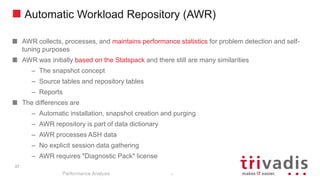

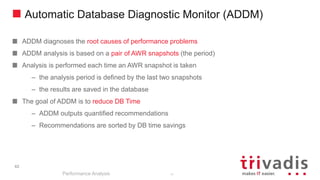

Trivadis is an IT consulting firm with over 650 employees across 15 locations. The document gives an overview of Trivadis' mission, expertise, products, and key figures, and introduces Markus Flechtner, a principal consultant specializing in Oracle database performance tuning. It then outlines the agenda for a performance issues checklist: how to specify a problem, a methodology for performance analysis, and the Statspack and Tuning Pack tools.