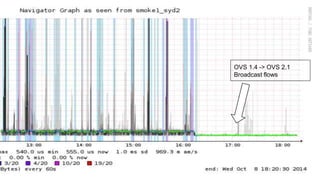

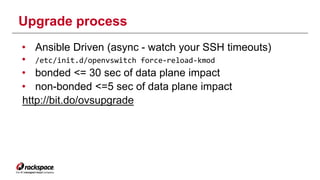

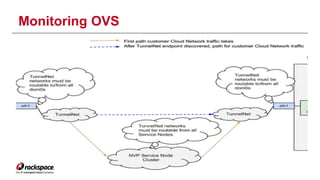

The document discusses the management of Open vSwitch (OVS) across a large heterogeneous fleet, detailing its evolution and the importance of upgrading for performance and stability. It outlines the upgrade process, challenges faced during migration, and performance metrics for monitoring OVS health and connectivity to SDN controllers. Key recommendations include consistent upgrades and proactive monitoring to mitigate issues and improve operational efficiency.
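Controller connectivity is one of the signals mentioned above. Here is a minimal sketch of how it could be checked. It assumes the text was captured from `ovs-vsctl --columns=target,is_connected list Controller` on a host, which prints `column : value` lines with one blank-line-separated block per record. The sketch does not run the command itself. The helper names `parse_records` and `disconnected_targets` and the sample addresses are hypothetical and are not taken from the original talk.

```python
# Hypothetical sketch: flag OVSDB Controller records that report is_connected != true.
# Assumes `text` holds output shaped like:
#   ovs-vsctl --columns=target,is_connected list Controller


def parse_records(text):
    """Split `ovs-vsctl list`-style output into one dict per record."""
    records = []
    current = {}
    for line in text.splitlines():
        if not line.strip():
            # A blank line ends the current record.
            if current:
                records.append(current)
                current = {}
            continue
        # Split on the first colon only; values such as "ssl:host:port" keep theirs.
        key, _, value = line.partition(":")
        current[key.strip()] = value.strip().strip('"')
    if current:
        records.append(current)
    return records


def disconnected_targets(text):
    """Return controller targets whose is_connected column is not 'true'."""
    return [
        rec.get("target", "")
        for rec in parse_records(text)
        if rec.get("is_connected") != "true"
    ]


if __name__ == "__main__":
    # Hypothetical sample output (documentation-range addresses).
    sample = """target              : "ssl:192.0.2.10:6633"
is_connected        : true

target              : "ssl:192.0.2.11:6633"
is_connected        : false
"""
    print(disconnected_targets(sample))  # ['ssl:192.0.2.11:6633']
```

Across a heterogeneous fleet, a check like this could run per host, with any non-empty result alerting. Parsing the plain `list` output keeps the sketch dependency-free. A production collector might prefer `ovs-vsctl`'s machine-readable `--format` options instead.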

![Why upgrade?](https://image.slidesharecdn.com/openvswitchfinal-141105003854-conversion-gate01/85/Managing-Open-vSwitch-Across-a-Large-Heterogenous-Fleet-6-320.jpg)

Reasons we upgraded:

- Performance
- Less disruptive upgrades
- NSX Controller version requirements
- Nasty regression in OVS 2.1 (commit 96be8de): http://bit.do/OVS21Regression