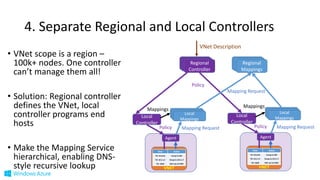

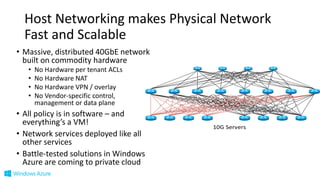

The document discusses Microsoft's Azure networking strategy and emphasizes the importance of scalable Software Defined Networking (SDN) in the public cloud. It outlines how Azure supports a large number of virtual networks and enables flexible, efficient communication between them by processing flows on the hosts themselves (a host-based approach). It also highlights innovations in storage and in hybrid cloud connectivity through ExpressRoute, which improves scalability and reduces costs for enterprises migrating to the cloud.
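To show why host-based flow processing helps SDN scale, here is a minimal sketch. It assumes that each host keeps its own table mapping a (virtual network ID, customer IP) pair to the physical host that should receive the packet. It also caches the decision made for the first packet of each flow, so later packets skip the table lookup. Every name in it (`FlowKey`, `HostFlowProcessor`, `add_mapping`, `process`) is hypothetical and illustrative, not Azure's actual implementation or API.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


# Hypothetical flow key: a 5-tuple scoped by virtual network ID, so tenants
# whose virtual networks reuse the same IP ranges do not collide.
@dataclass(frozen=True)
class FlowKey:
    vnet_id: int
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


class HostFlowProcessor:
    """Illustrative per-host packet processor (an assumption, not Azure's code).

    Policy is evaluated on each host rather than in a central appliance,
    so adding virtual networks adds table entries, not new middleboxes.
    """

    def __init__(self) -> None:
        # Slow-path policy: (vnet_id, customer destination IP) -> physical host IP.
        self.mappings: Dict[Tuple[int, str], str] = {}
        # Fast-path cache: flow key -> action chosen for the flow's first packet.
        self.flow_cache: Dict[FlowKey, Optional[str]] = {}
        self.slow_path_lookups = 0

    def add_mapping(self, vnet_id: int, customer_ip: str, host_ip: str) -> None:
        self.mappings[(vnet_id, customer_ip)] = host_ip
        # Simplest possible invalidation: forget all cached flows on a policy change.
        self.flow_cache.clear()

    def process(self, key: FlowKey) -> Optional[str]:
        """Return the physical host to encapsulate toward, or None to drop."""
        if key in self.flow_cache:
            return self.flow_cache[key]  # fast path: no table lookup
        self.slow_path_lookups += 1      # slow path: consult the mapping table
        action = self.mappings.get((key.vnet_id, key.dst_ip))
        self.flow_cache[key] = action
        return action


if __name__ == "__main__":
    proc = HostFlowProcessor()
    # Two virtual networks reuse the same customer address 10.0.0.5.
    proc.add_mapping(vnet_id=1, customer_ip="10.0.0.5", host_ip="192.168.1.20")
    proc.add_mapping(vnet_id=2, customer_ip="10.0.0.5", host_ip="192.168.1.30")
    flow = FlowKey(1, "10.0.0.4", "10.0.0.5", 50000, 443, "tcp")
    print(proc.process(flow), proc.process(flow), proc.slow_path_lookups)
```

The two identical customer addresses in the usage example resolve to different physical hosts because the virtual network ID is part of the lookup key. The second `process` call is answered from the cache. A production design would need finer-grained cache invalidation and cache eviction. Both are omitted here for brevity.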