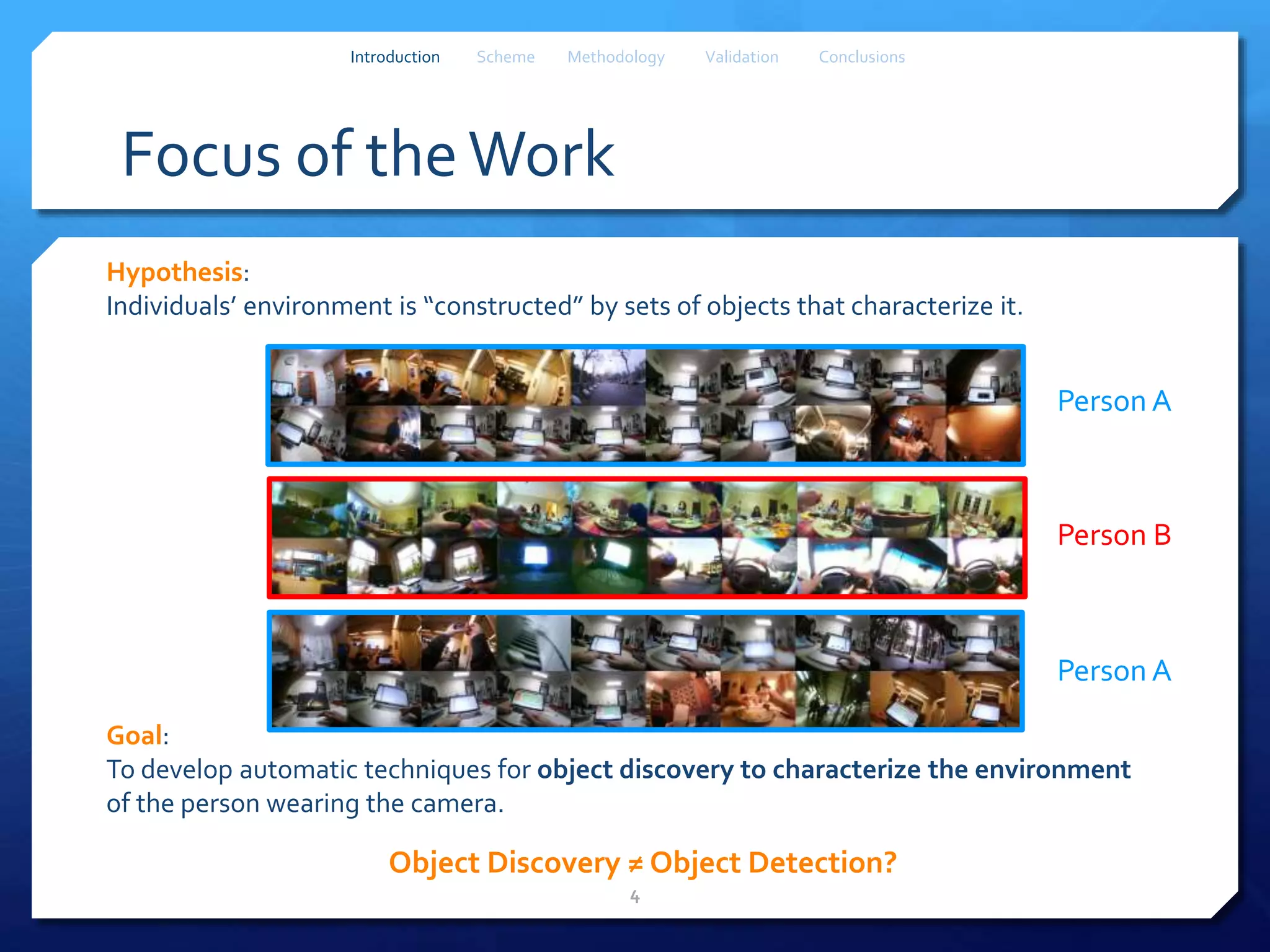

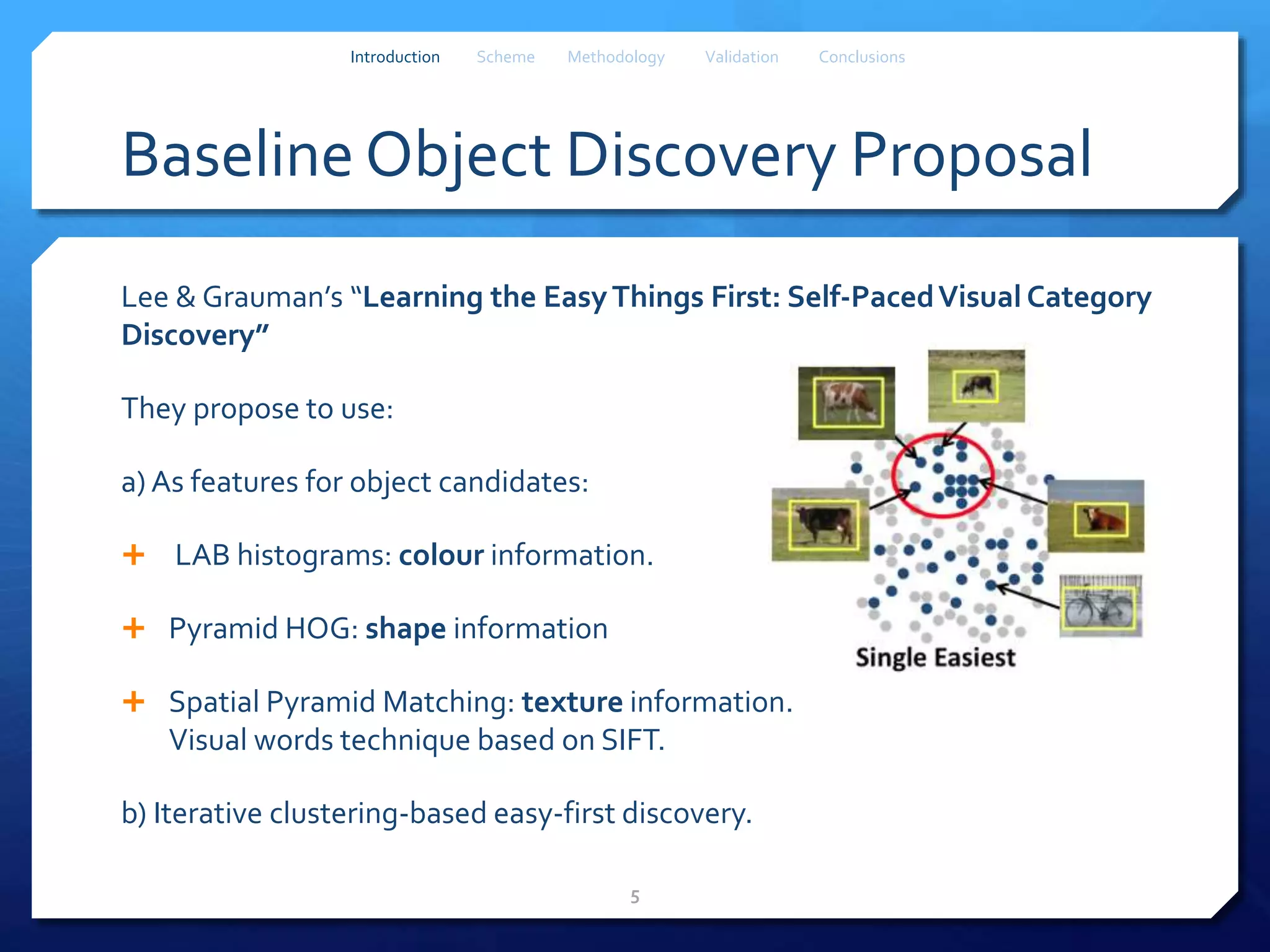

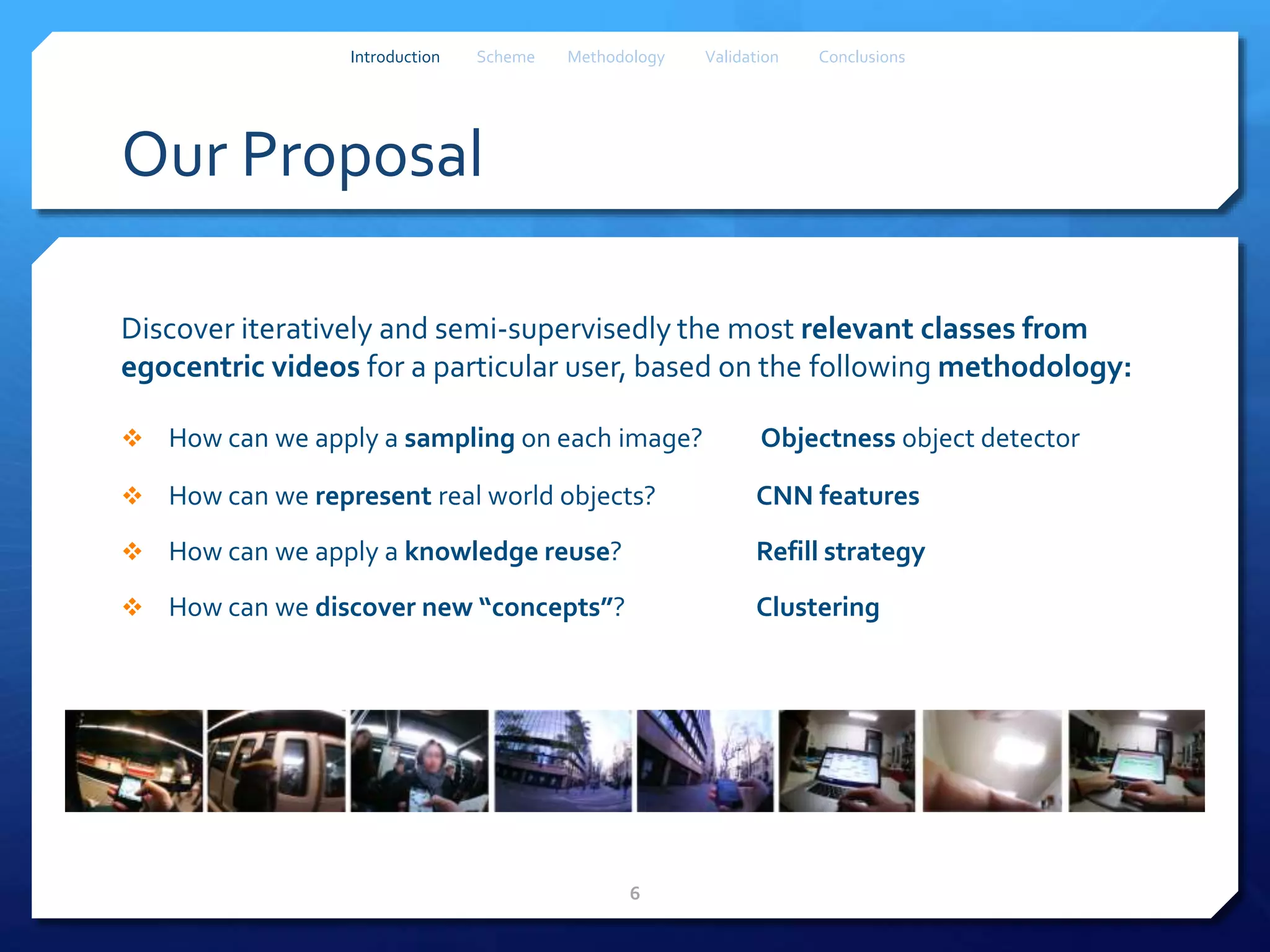

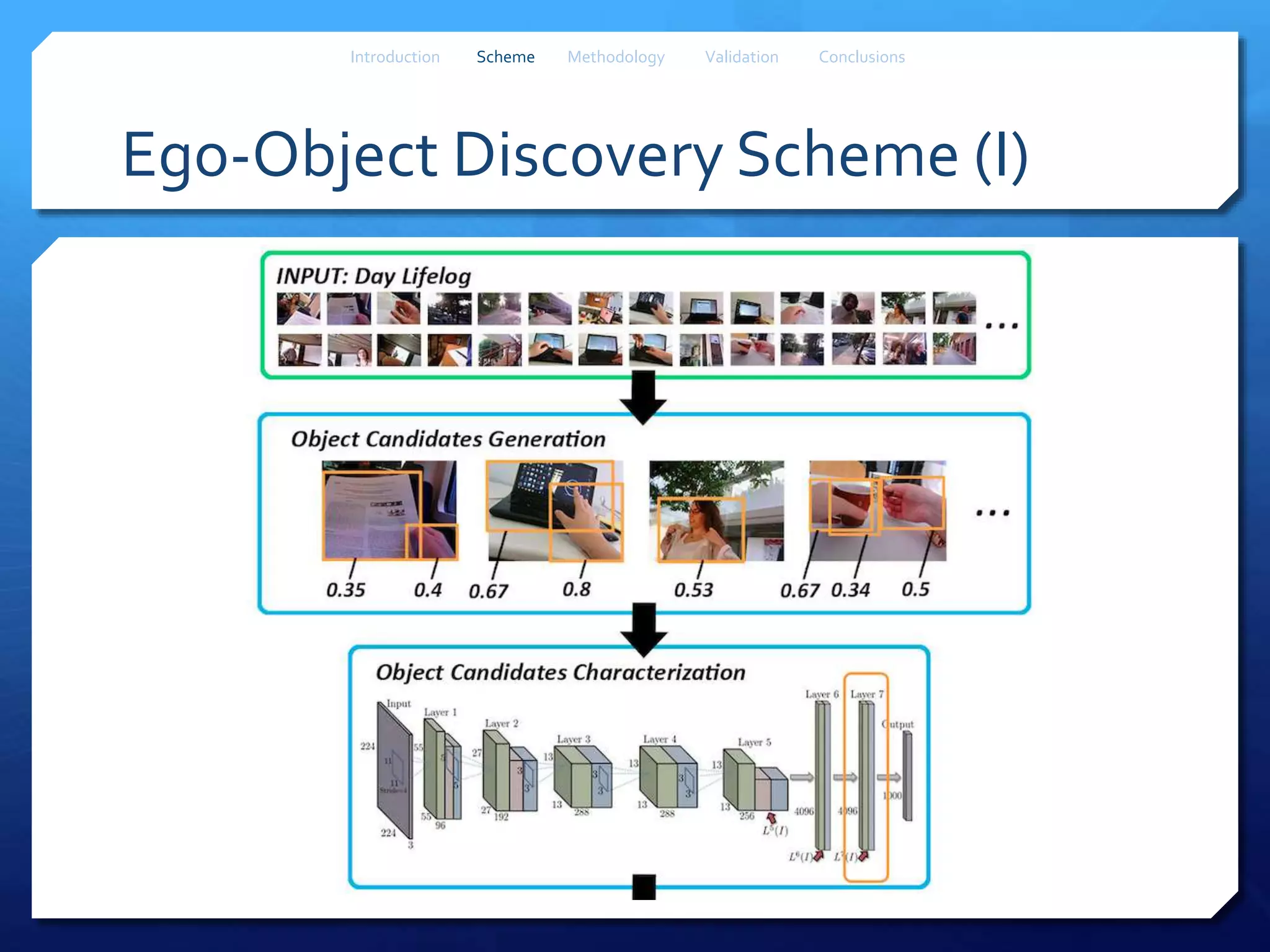

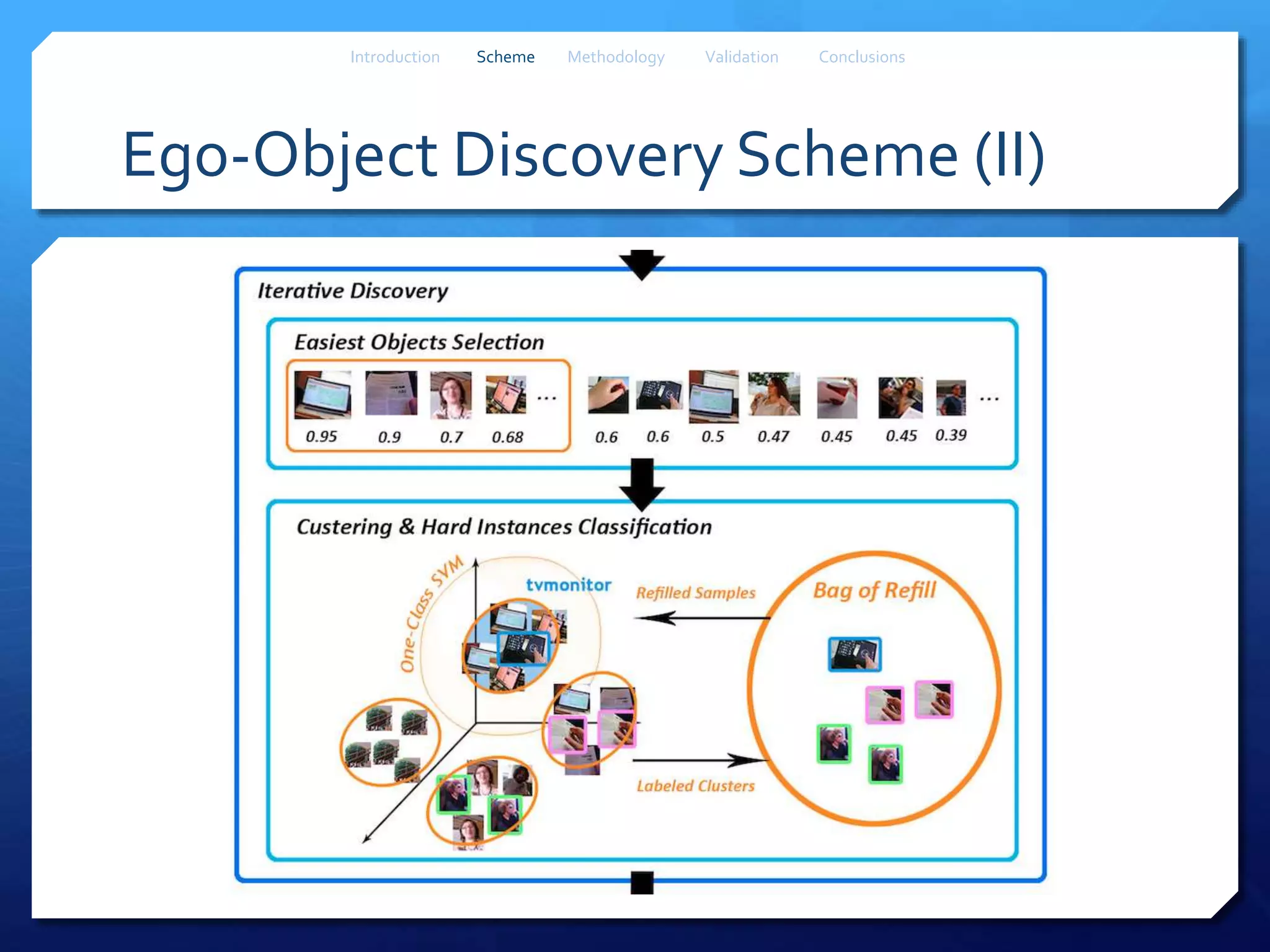

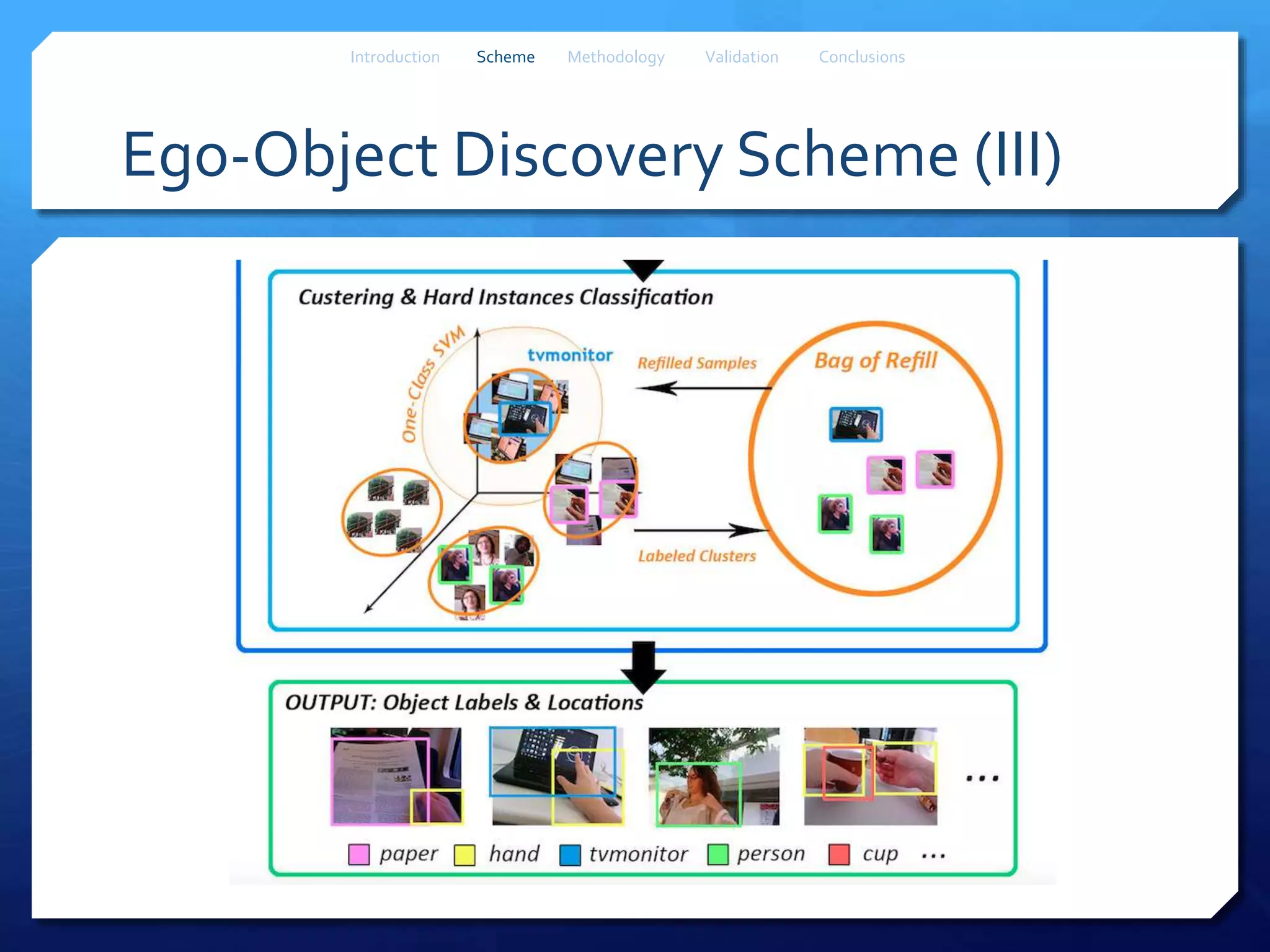

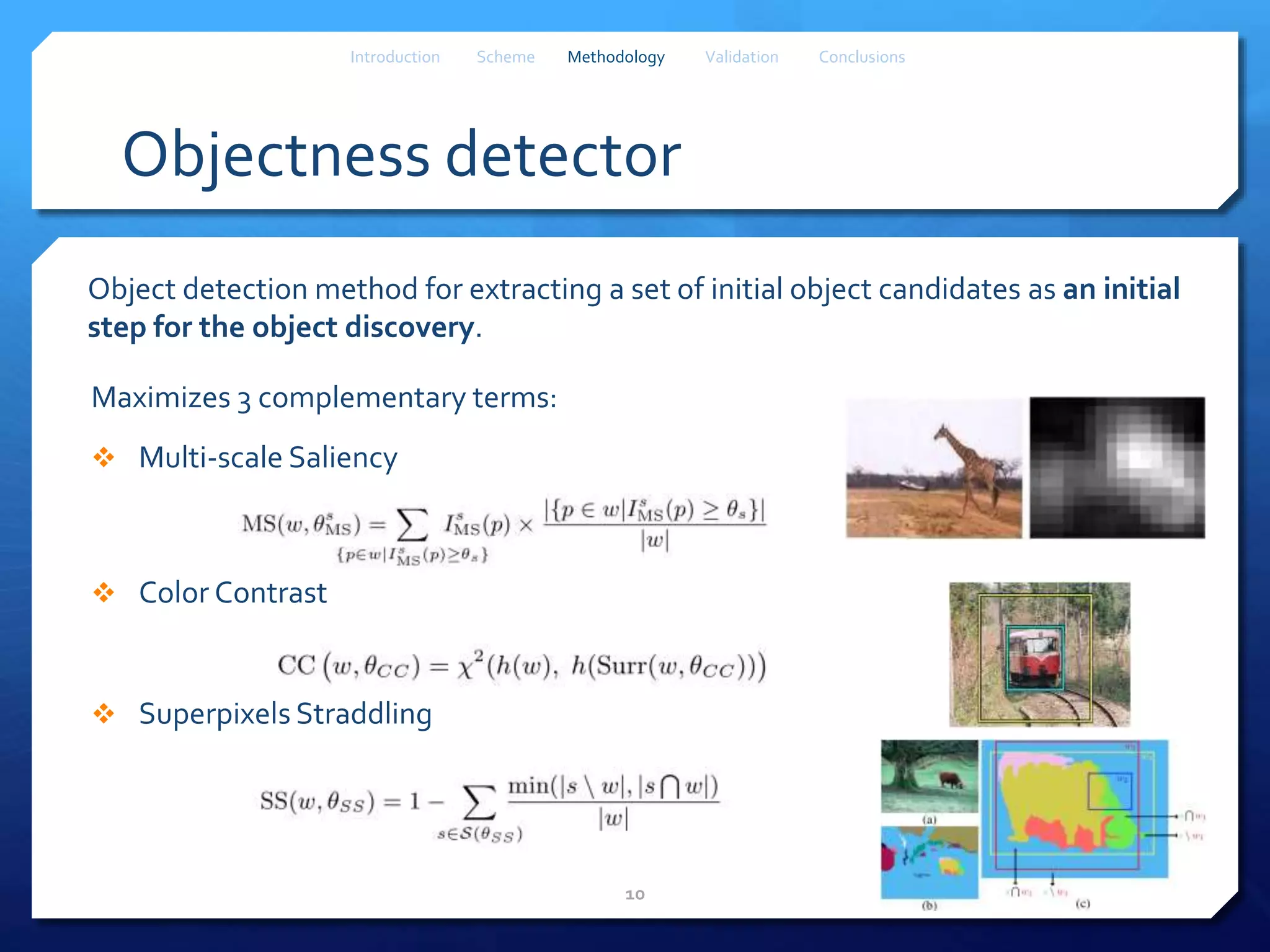

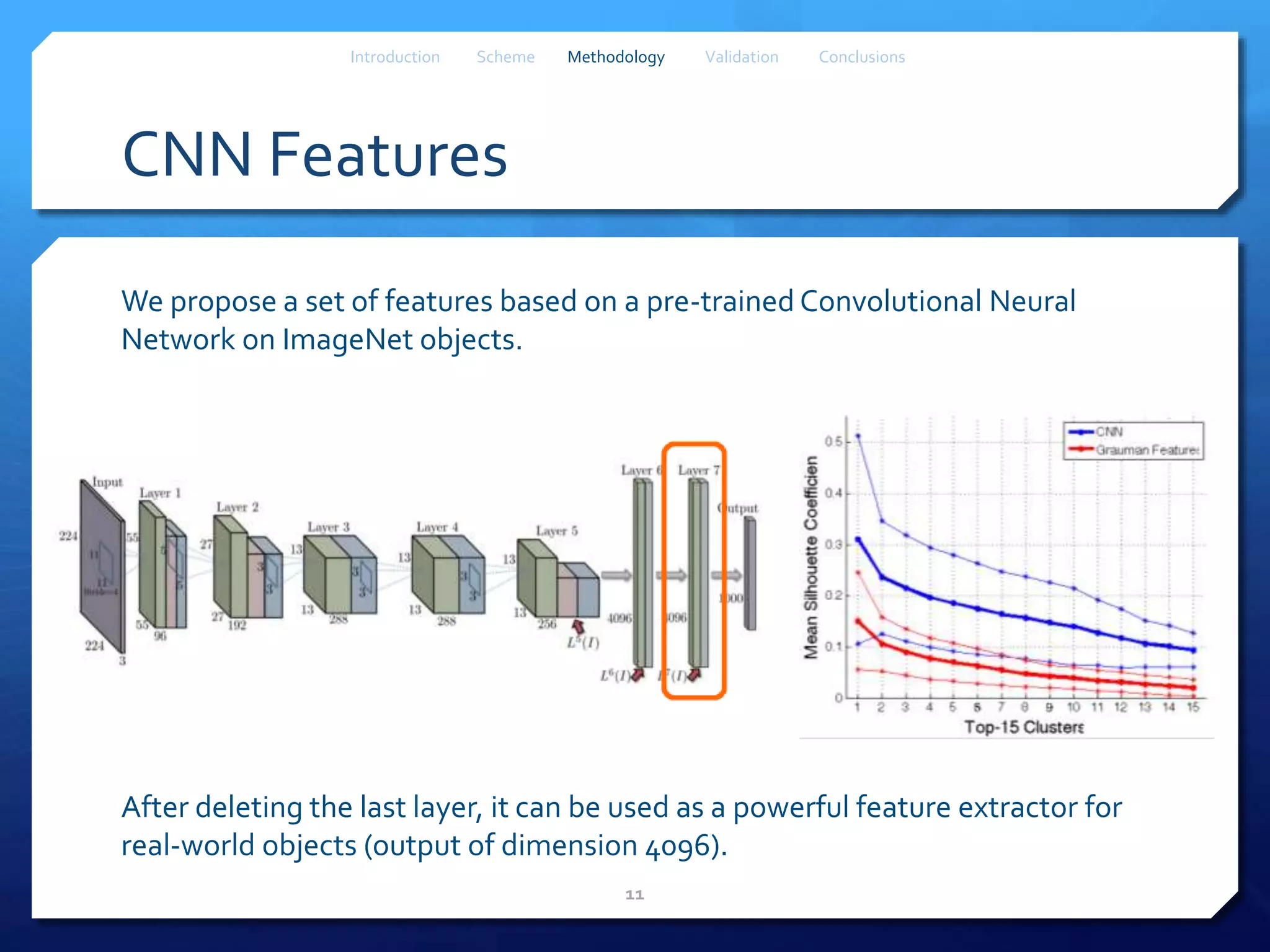

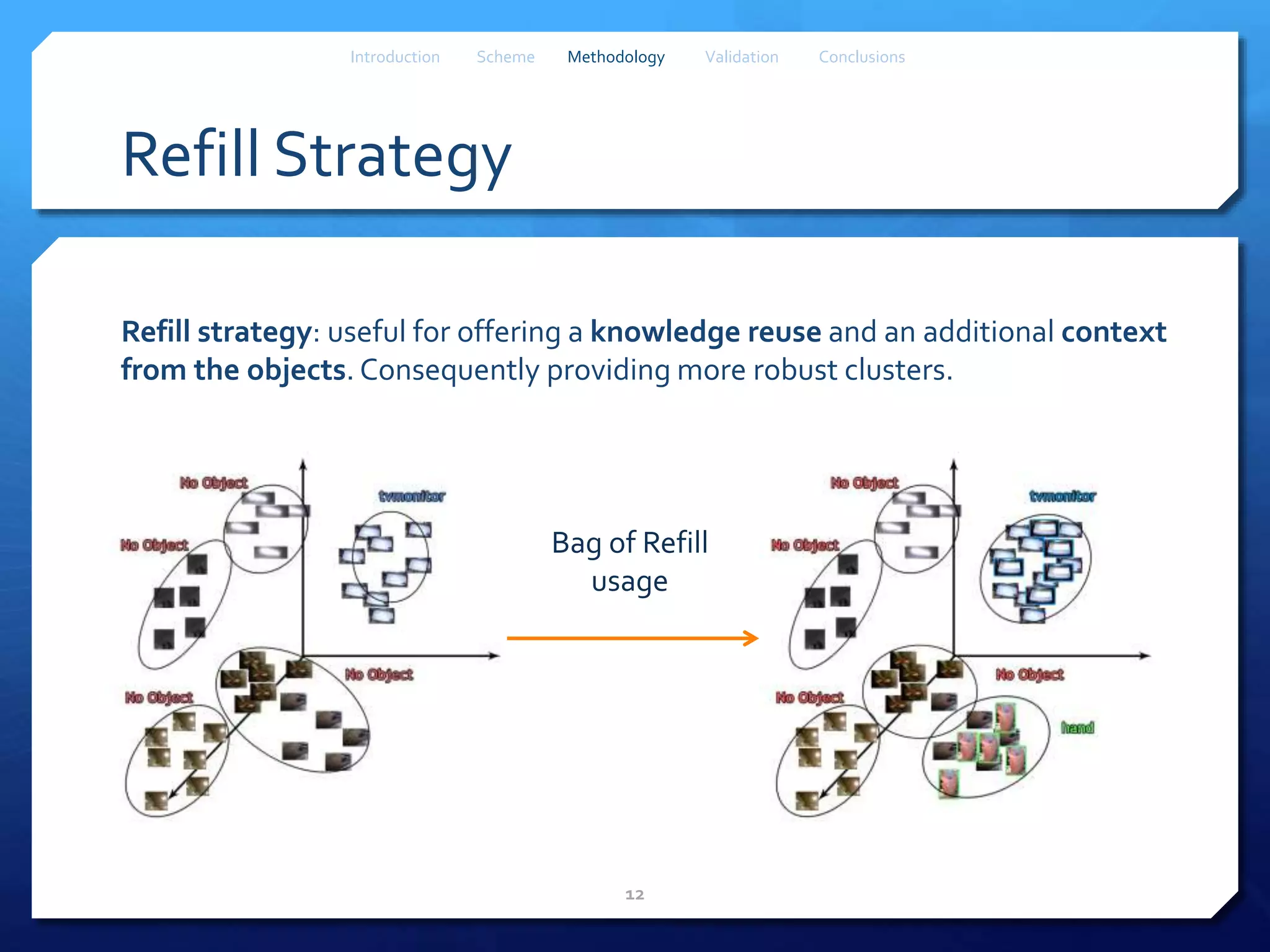

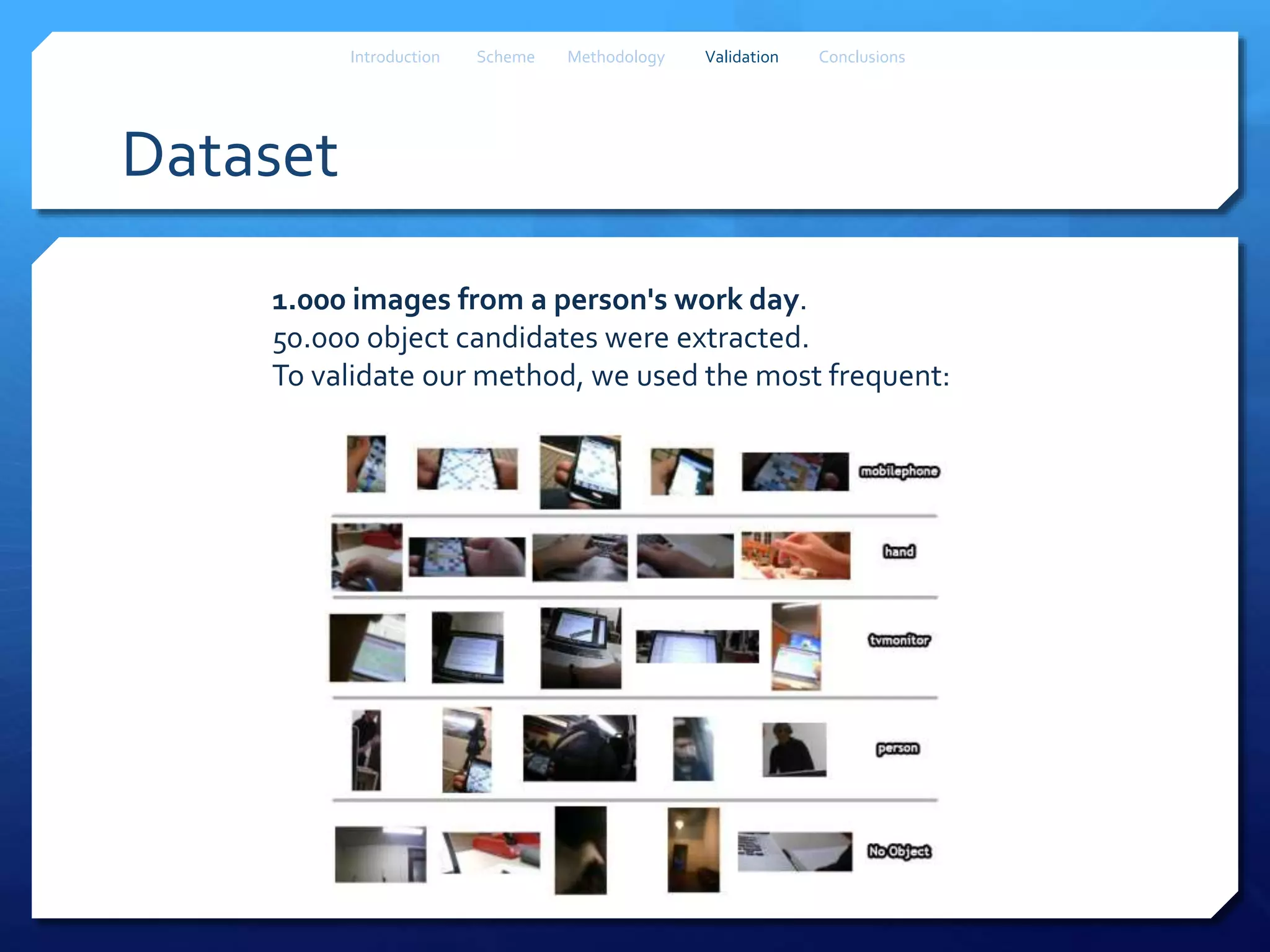

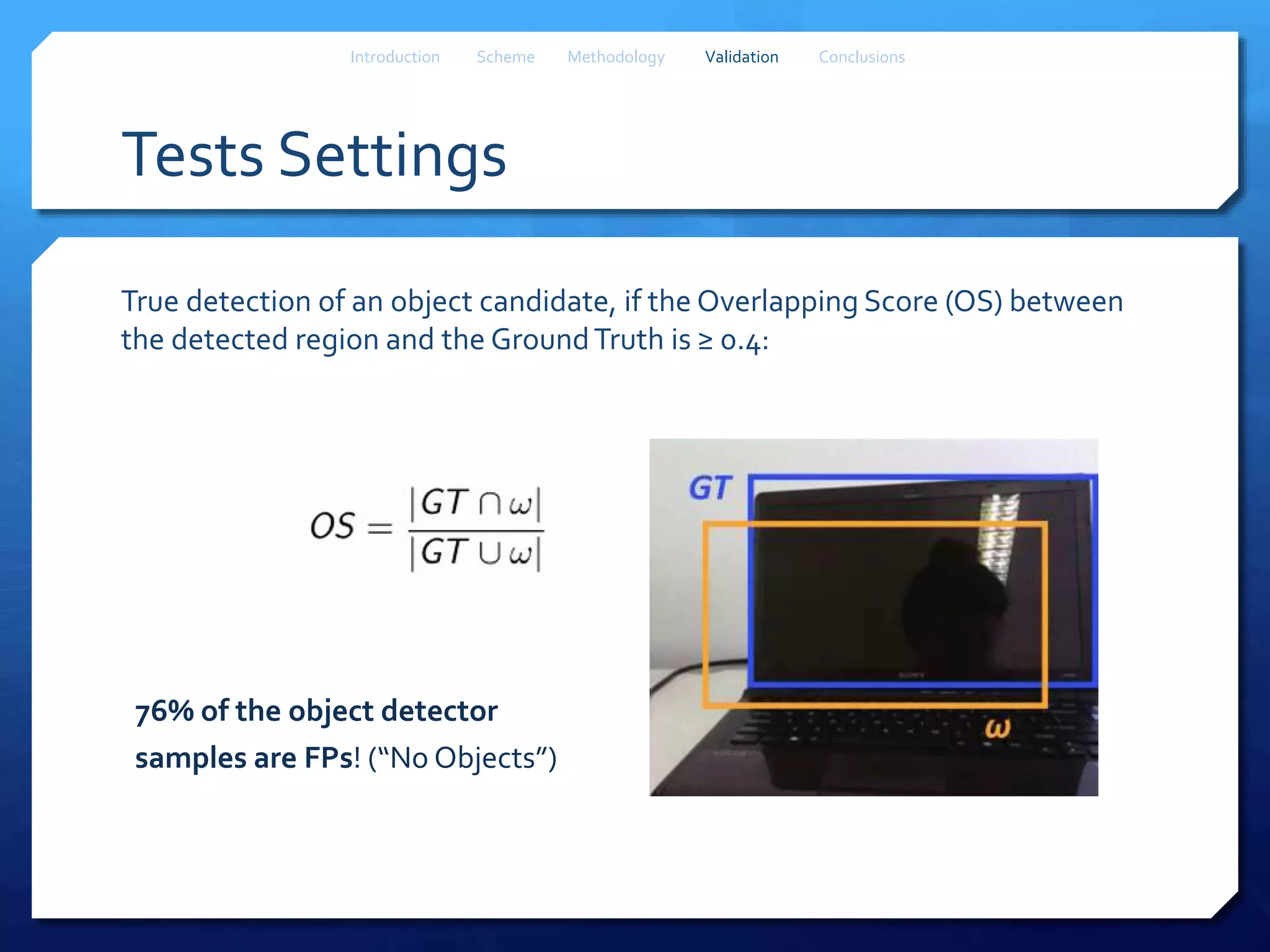

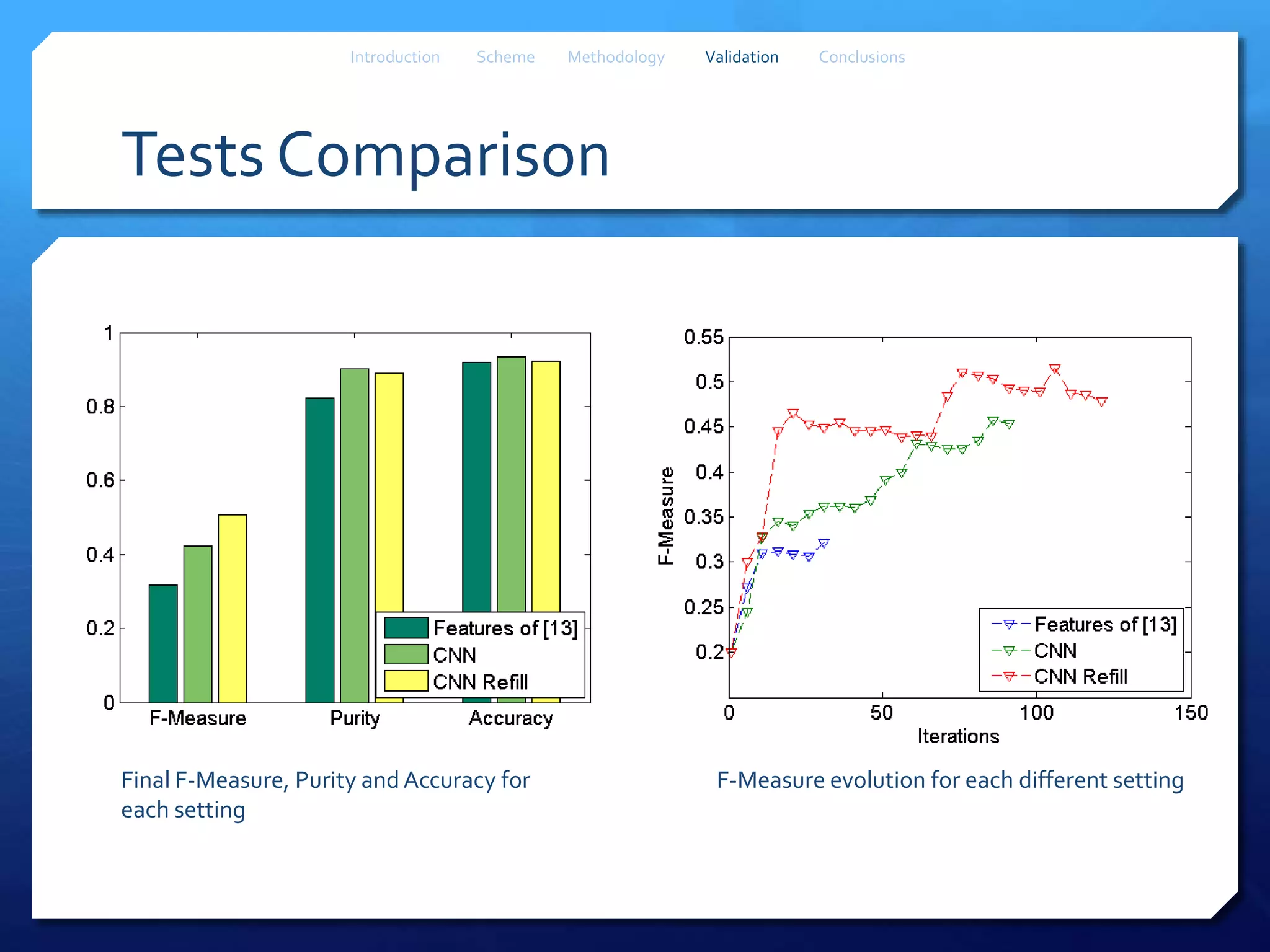

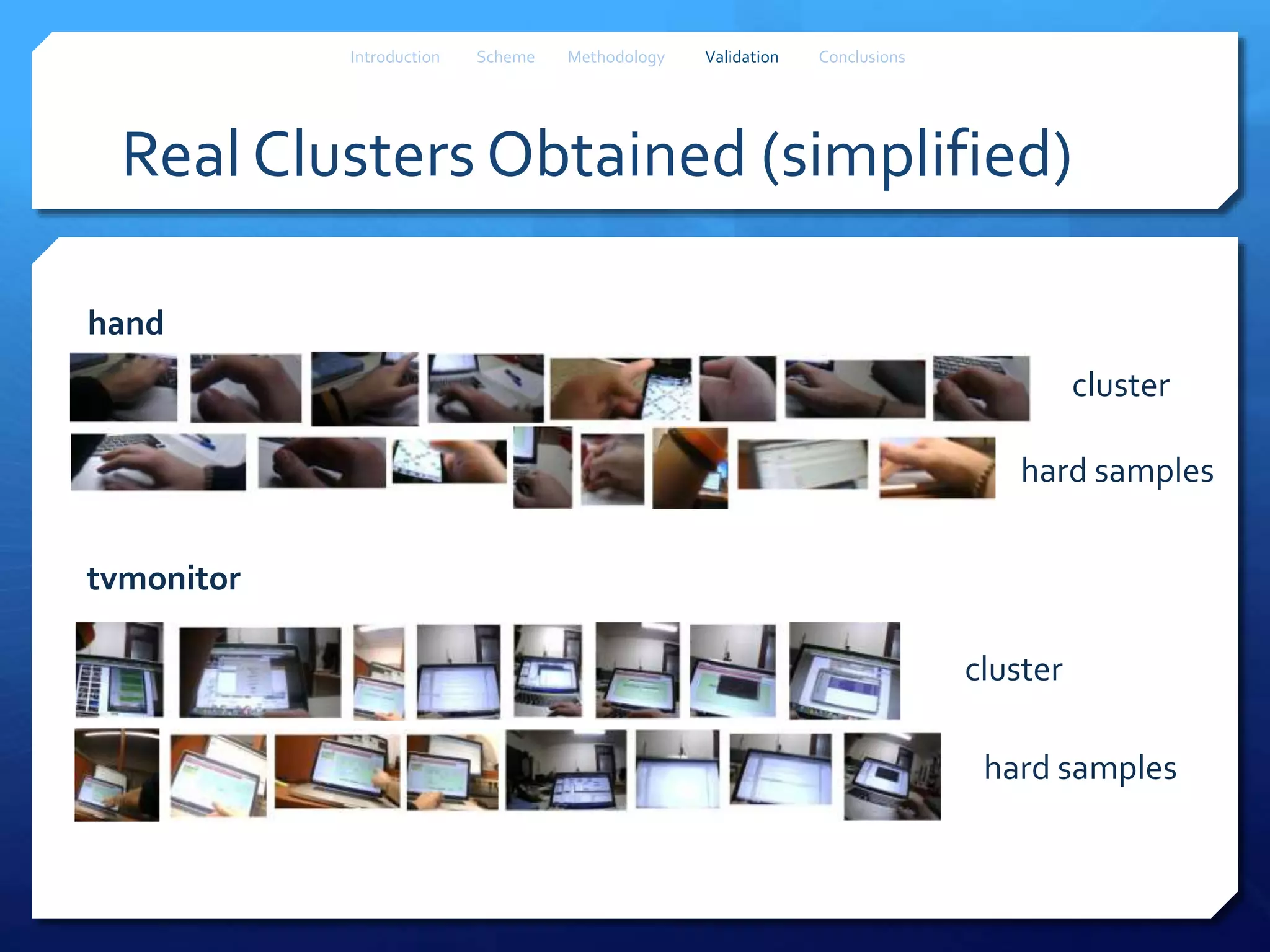

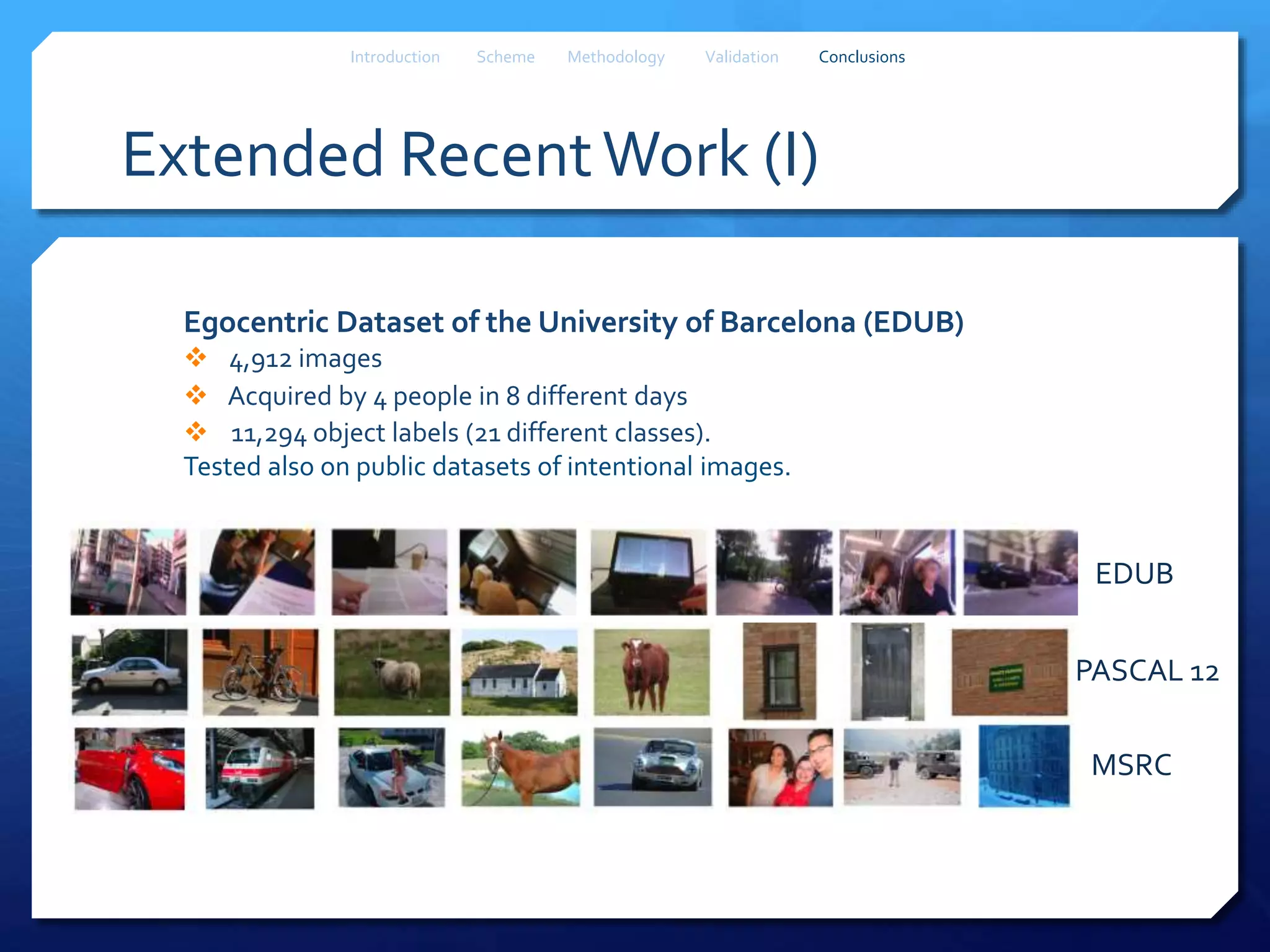

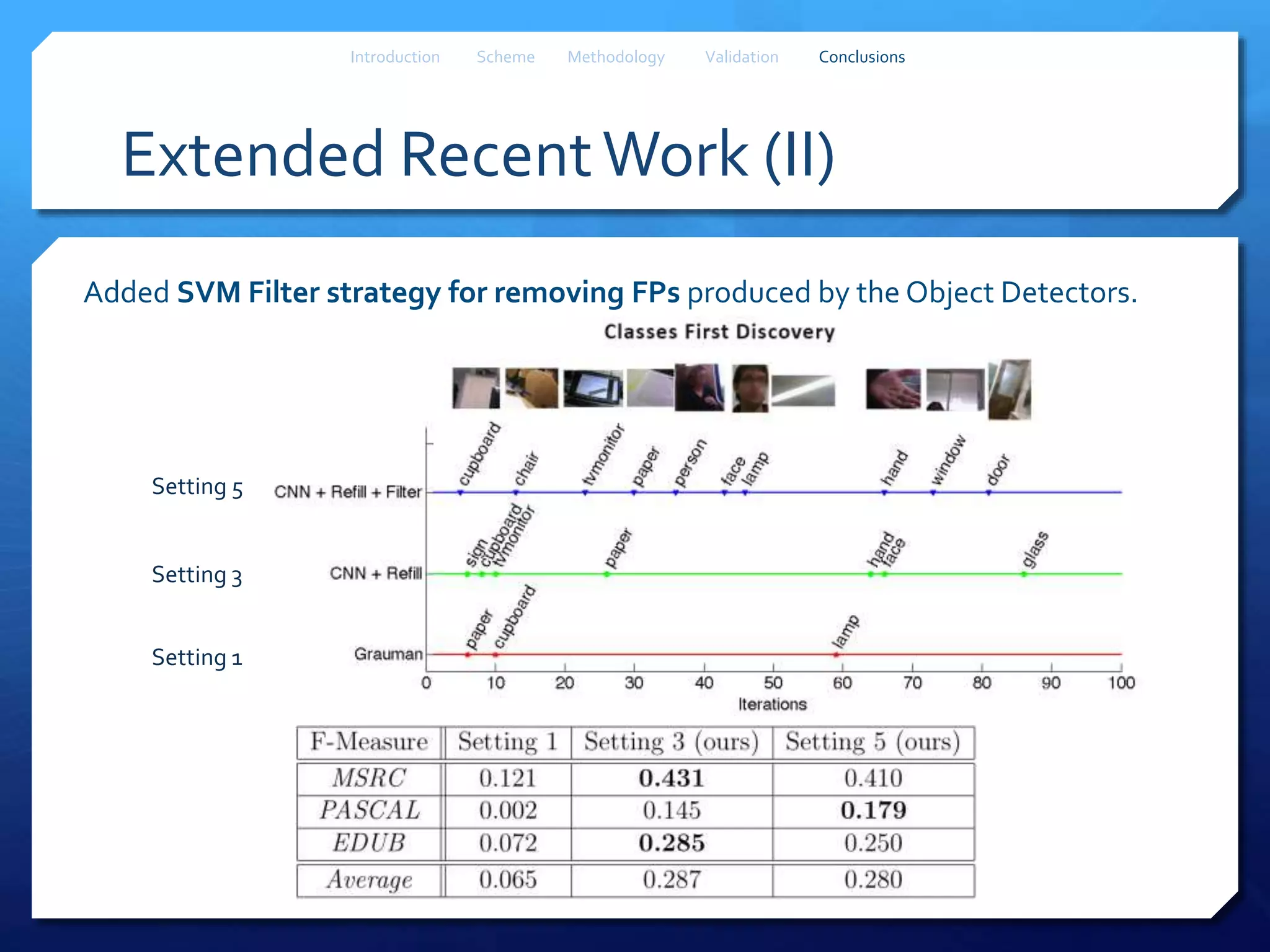

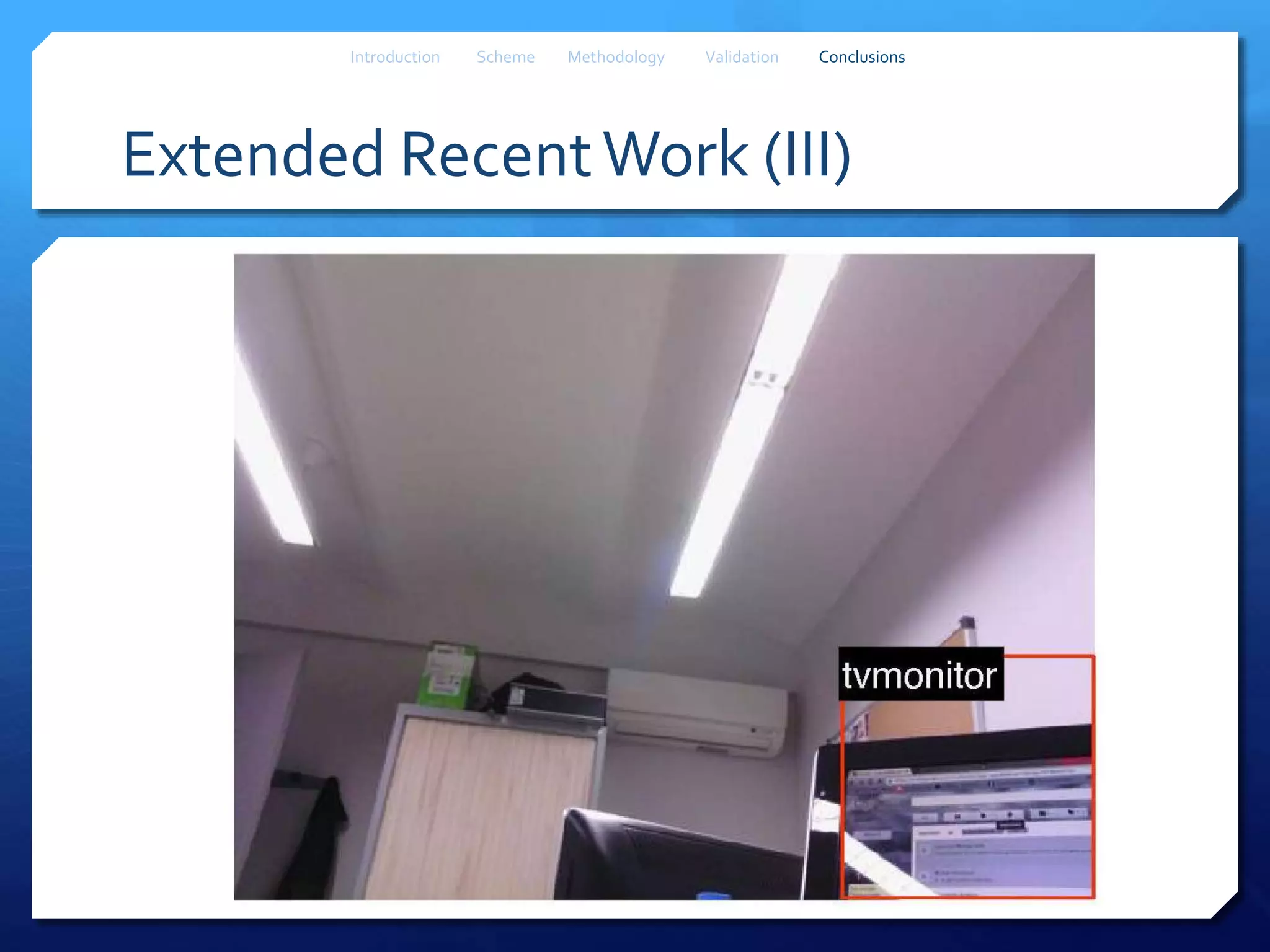

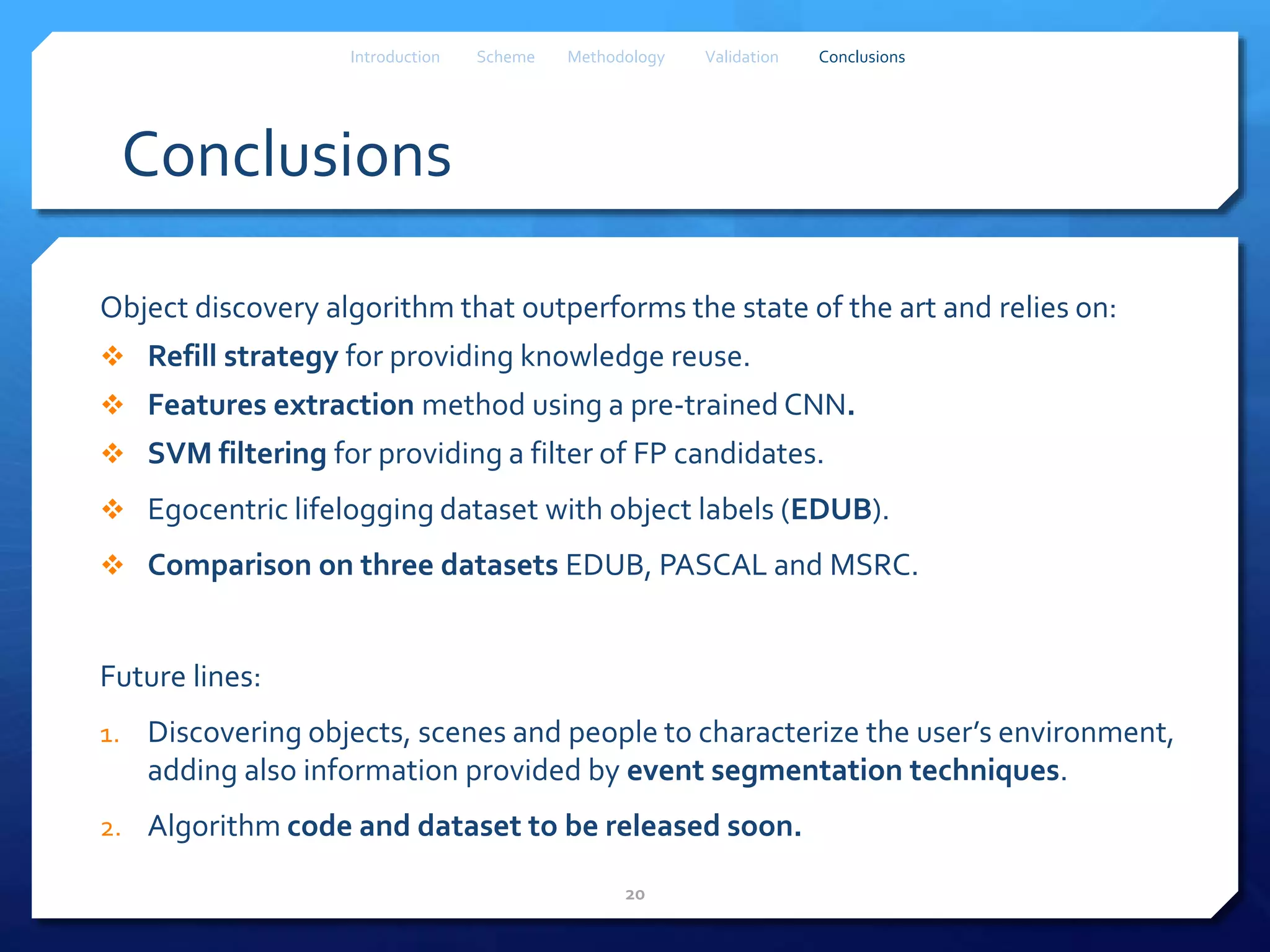

This document proposes a method for discovering objects in egocentric videos using convolutional neural networks (CNNs). The goal is to characterize the environment of the person wearing the egocentric camera. The method samples object candidates with an objectness detector, represents each candidate with CNN features, and then discovers new concepts iteratively by combining a refill strategy with clustering. It is validated on a dataset of 1,000 images labeled with the most frequent objects, where it outperforms state-of-the-art approaches. Future work includes discovering objects, scenes, and people to further characterize the environment.
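
The iterative loop summarized above (cluster the candidates, keep a newly discovered concept, refill the pool, repeat) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the summary does not say which clustering algorithm, selection criterion, or refill rule is used, so plain k-means, a "tightest cluster" rule, and a simple top-up from a reserve list are stand-ins. The names `discover`, `pool_size`, `min_size`, and the other parameters are hypothetical, and the feature vectors are ordinary lists standing in for CNN features of candidates proposed by an objectness detector.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(points)
    return [sum(c) / n for c in zip(*points)]


def kmeans(points, k, iters=20, rng=None):
    """Plain k-means (an assumed stand-in for the clustering step)."""
    rng = rng or random.Random(0)
    centers = rng.sample(points, k)
    assign = [0] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest center.
        assign = [min(range(k), key=lambda j: sq_dist(p, centers[j]))
                  for p in points]
        # Move each center to the mean of its members (if it has any).
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:
                centers[j] = mean(members)
    return assign, centers


def discover(candidates, pool_size, k, rounds, min_size=3, seed=0):
    """Iteratively discover concepts: cluster, keep one cluster, refill.

    Assumption: the 'best' cluster is the most compact one with at least
    min_size members; the source does not specify this criterion.
    """
    rng = random.Random(seed)
    reserve = list(candidates)
    rng.shuffle(reserve)
    pool = [reserve.pop() for _ in range(min(pool_size, len(reserve)))]
    concepts = []
    for _ in range(rounds):
        if len(pool) < k:
            break
        assign, centers = kmeans(pool, k, rng=rng)
        best, best_spread = None, float("inf")
        for j in range(k):
            members = [p for p, a in zip(pool, assign) if a == j]
            if len(members) < min_size:
                continue
            spread = sum(sq_dist(p, centers[j]) for p in members) / len(members)
            if spread < best_spread:
                best, best_spread = j, spread
        if best is None:
            break
        # Keep the selected cluster as a discovered concept and drop it
        # from the working pool.
        concepts.append([p for p, a in zip(pool, assign) if a == best])
        pool = [p for p, a in zip(pool, assign) if a != best]
        # Refill (assumed rule): top the pool back up from unseen candidates.
        while len(pool) < pool_size and reserve:
            pool.append(reserve.pop())
    return concepts


if __name__ == "__main__":
    # Synthetic 2-D "features" around three centers, for illustration only.
    gen = random.Random(1)
    feats = [[cx + gen.gauss(0, 0.1), cy + gen.gauss(0, 0.1)]
             for cx, cy in [(0, 0), (5, 5), (10, 0)] for _ in range(20)]
    found = discover(feats, pool_size=30, k=3, rounds=4)
    print([len(c) for c in found])
```

In a real pipeline the synthetic vectors would be replaced by features extracted from a CNN for each candidate window, and the selection rule would likely use a more robust measure of cluster quality than mean squared distance to the center.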