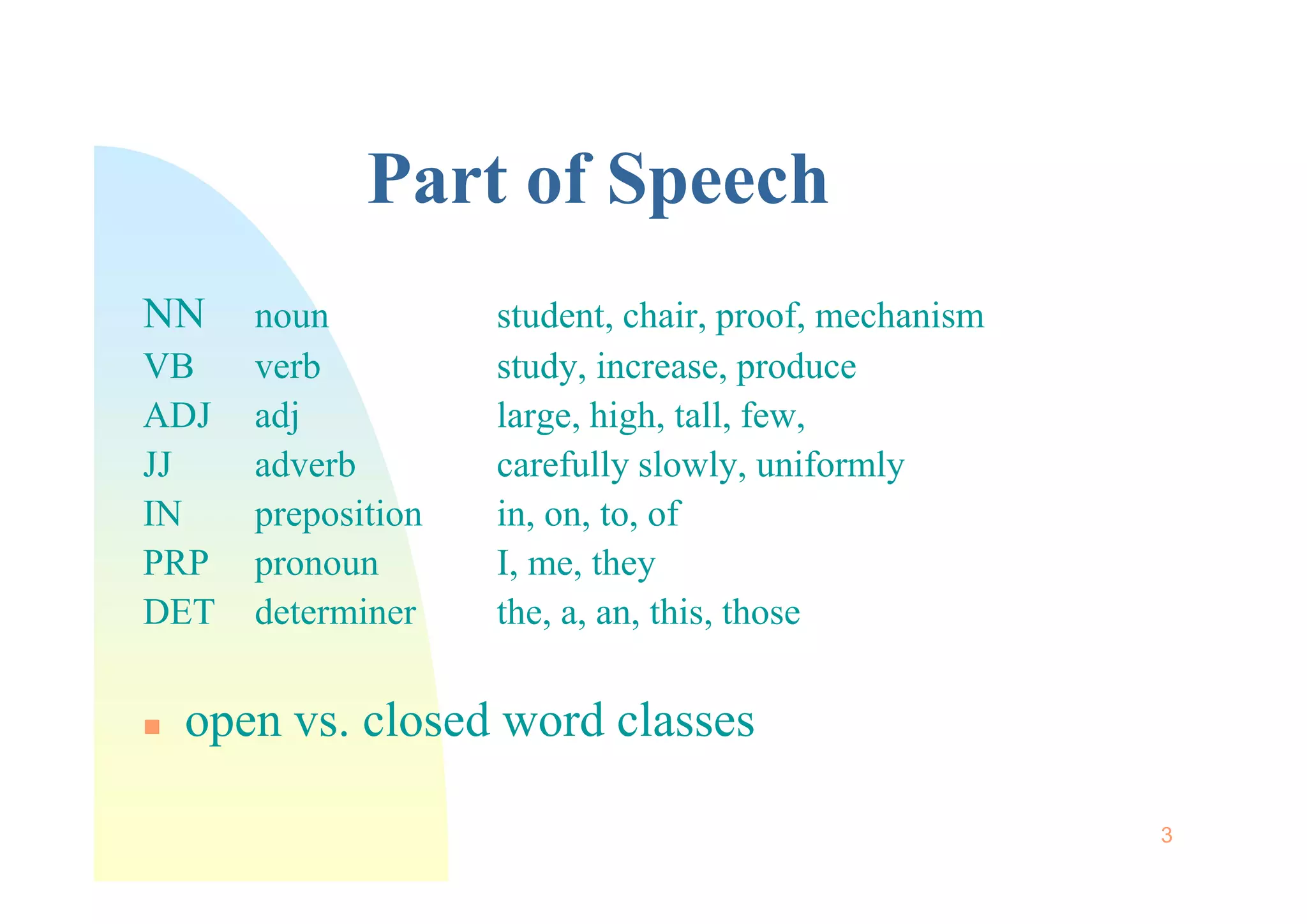

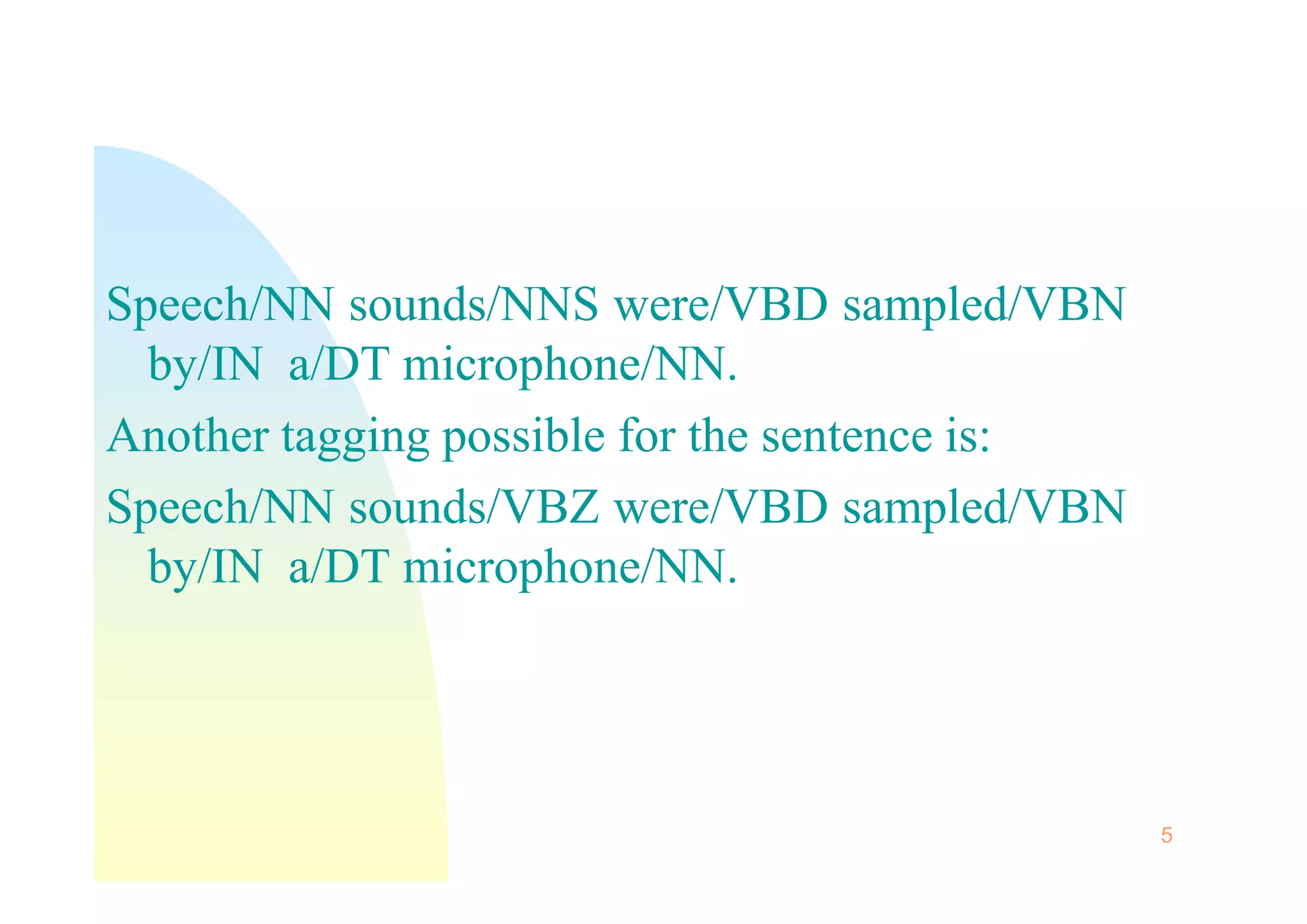

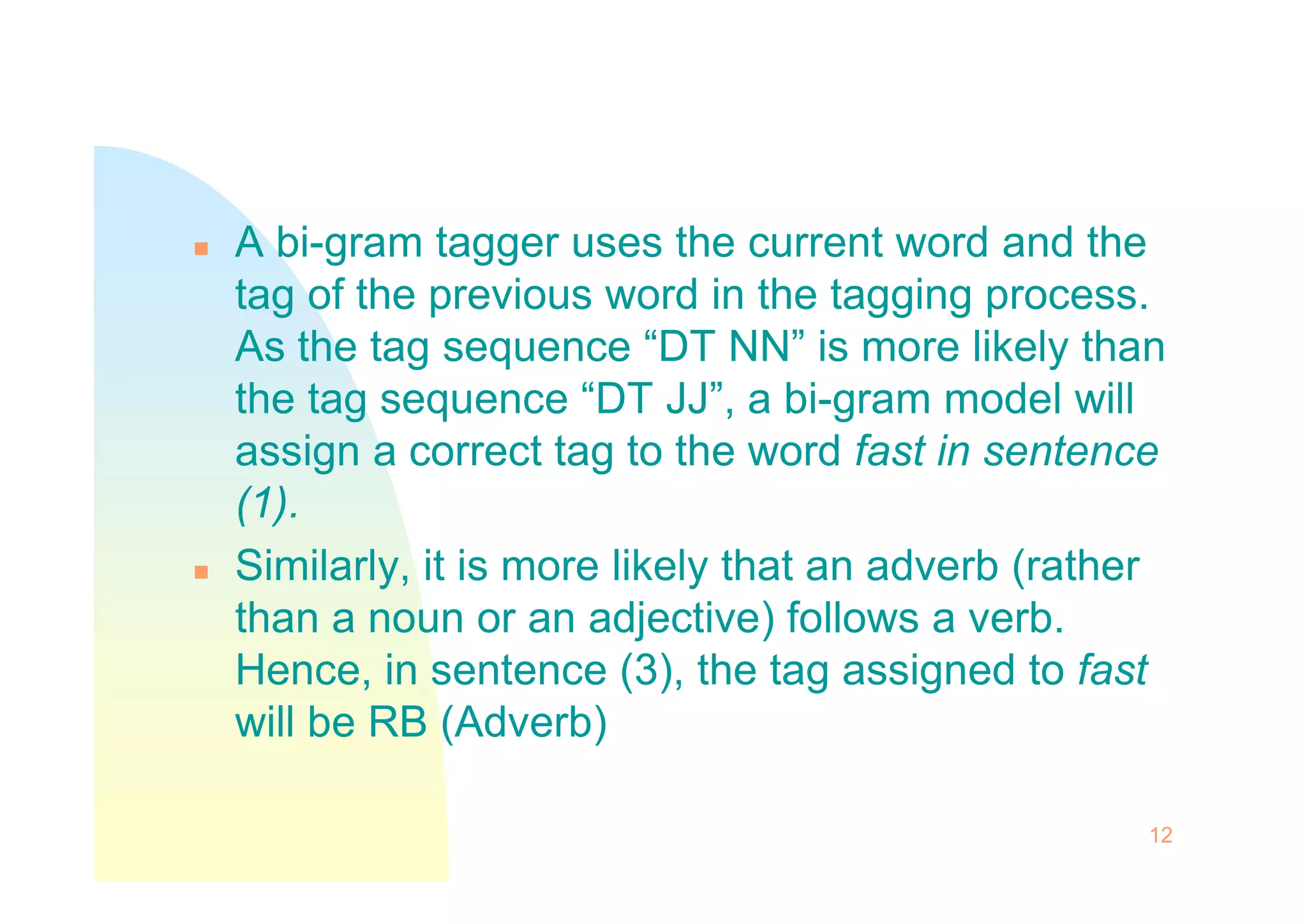

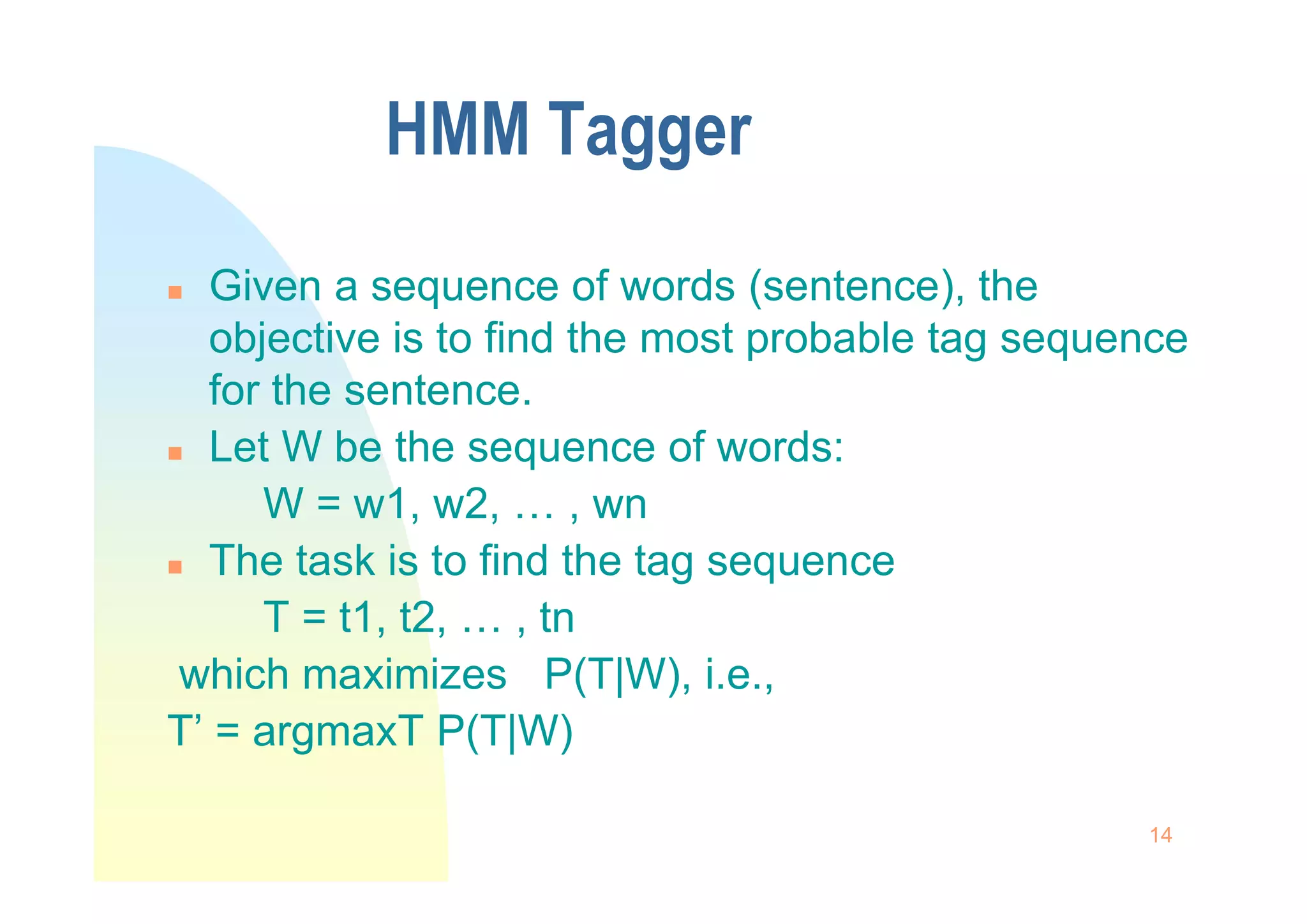

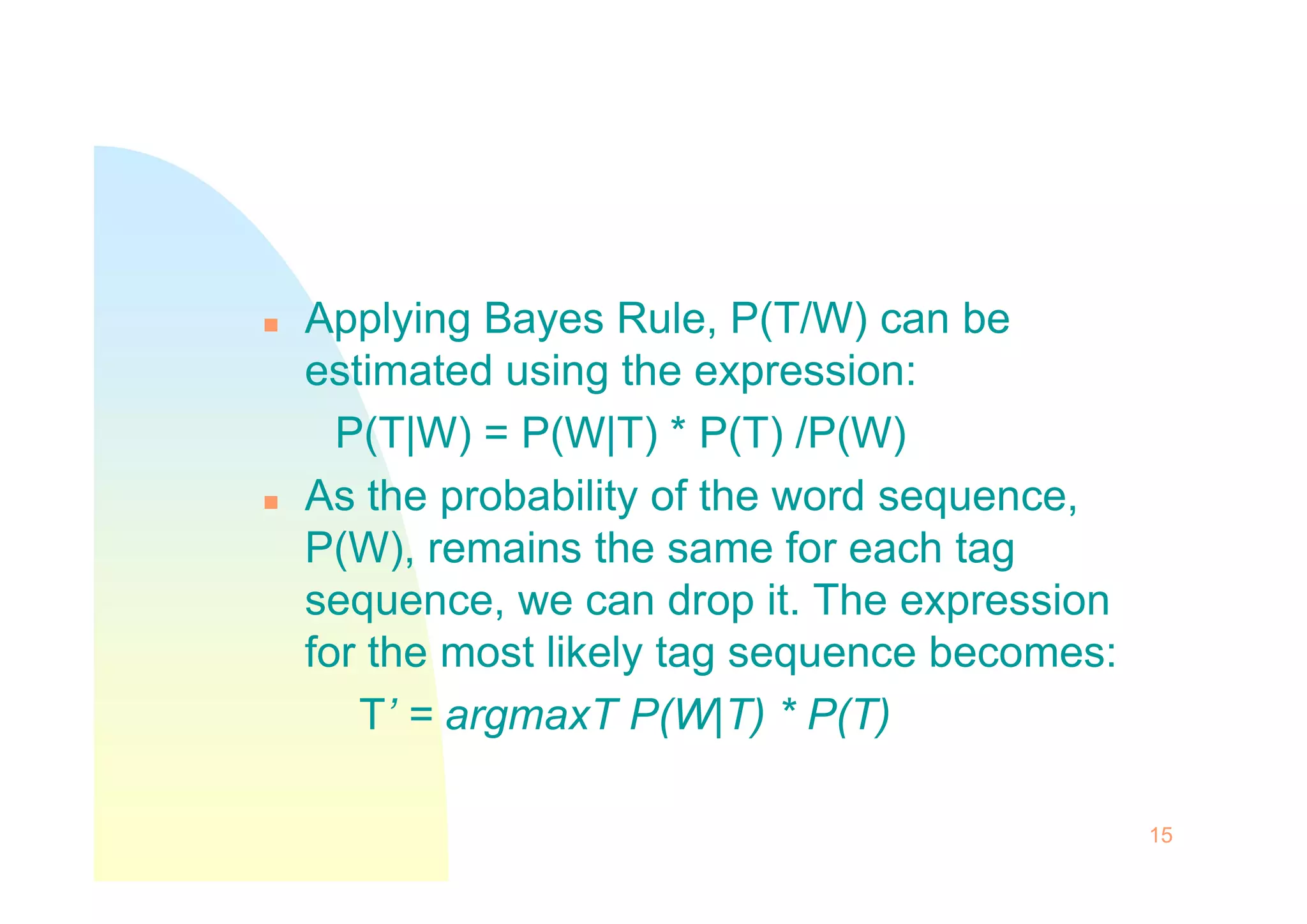

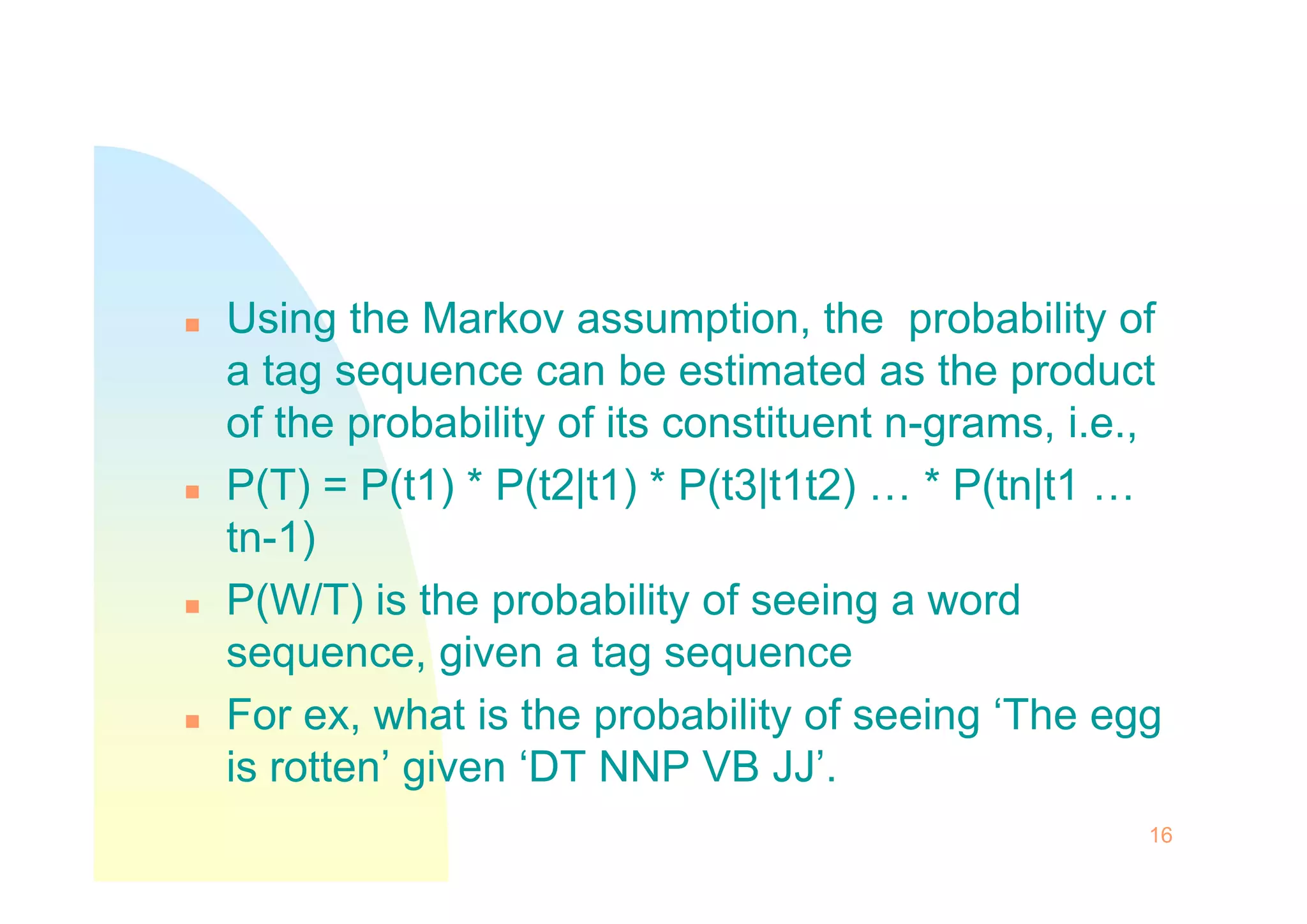

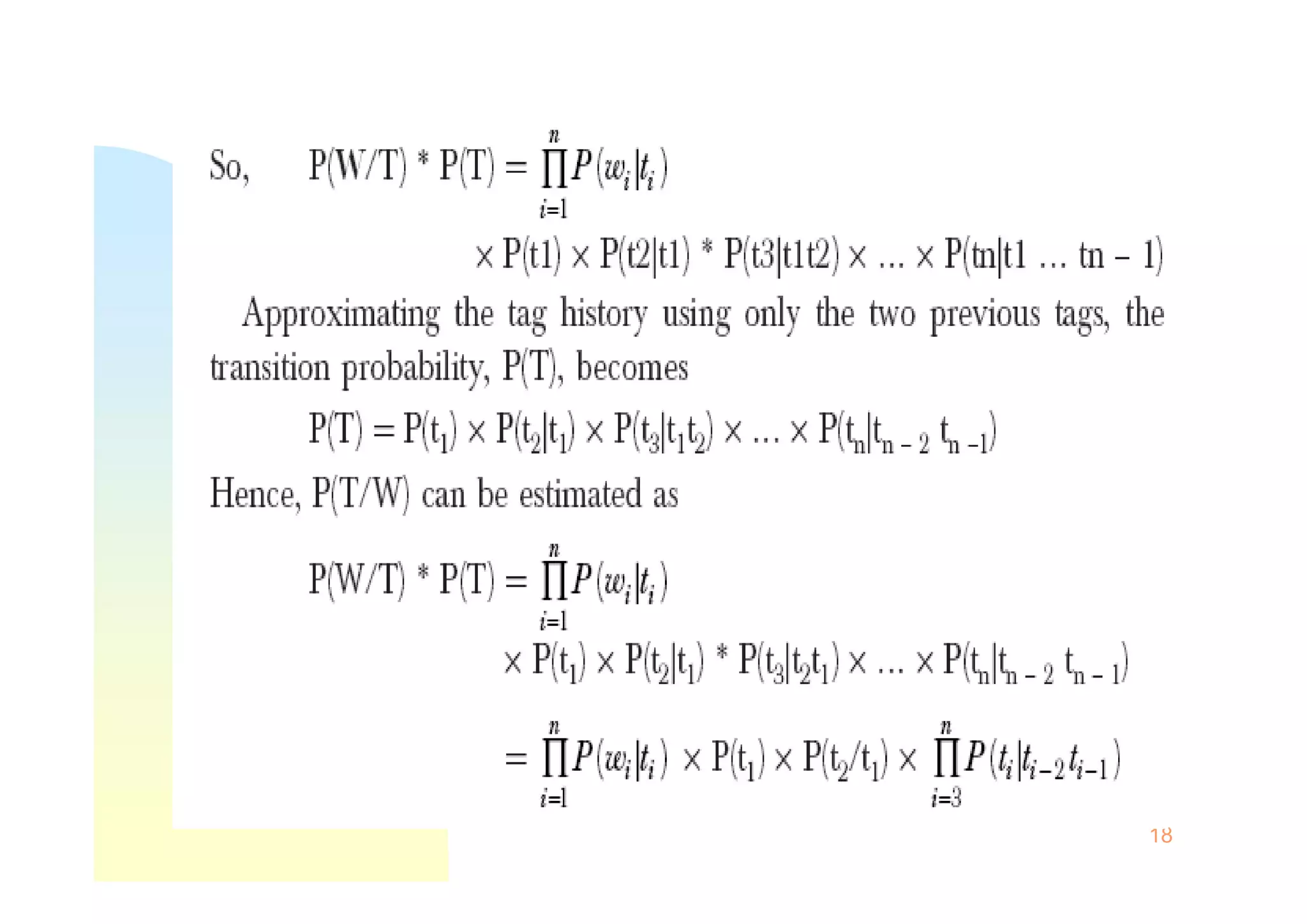

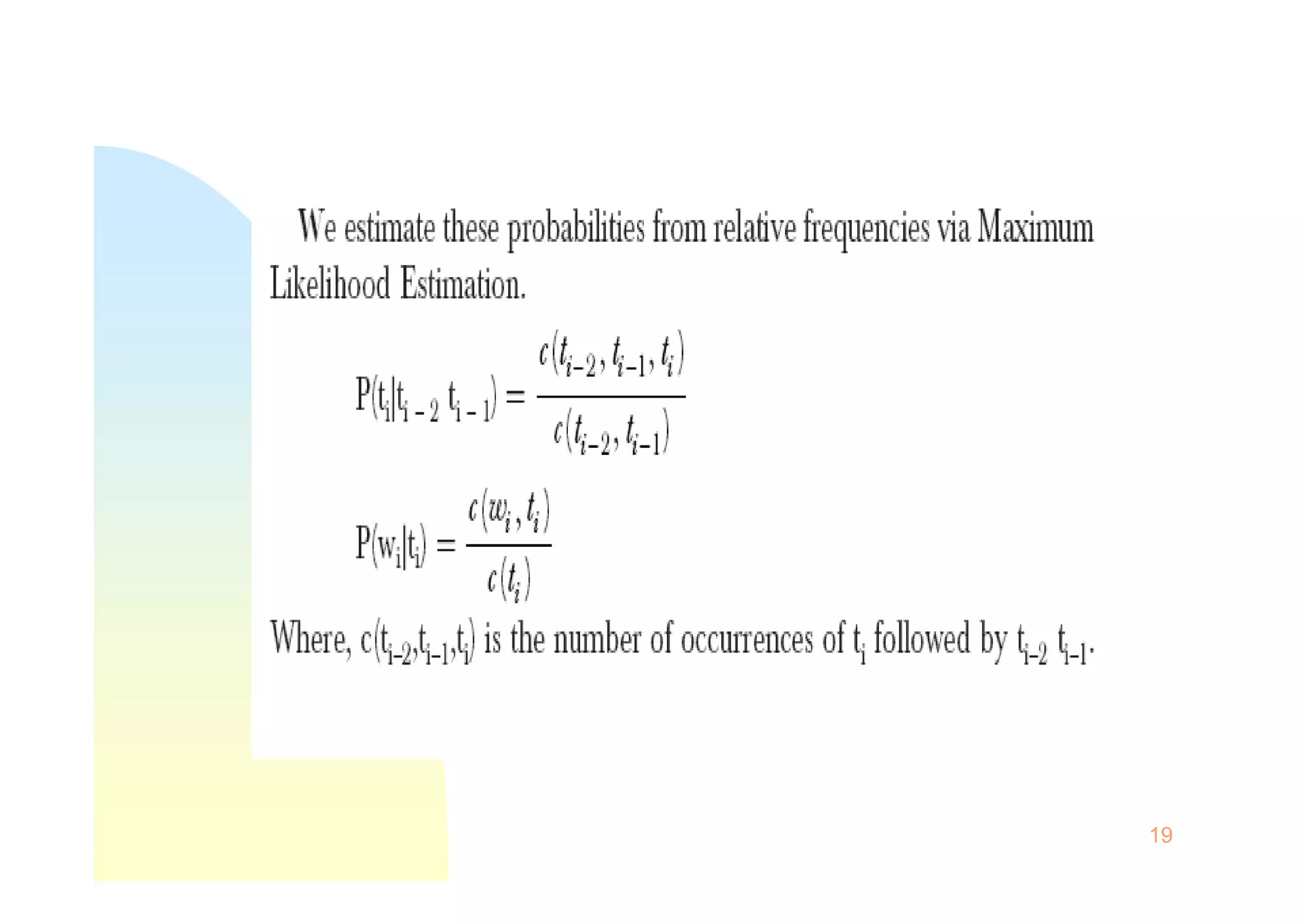

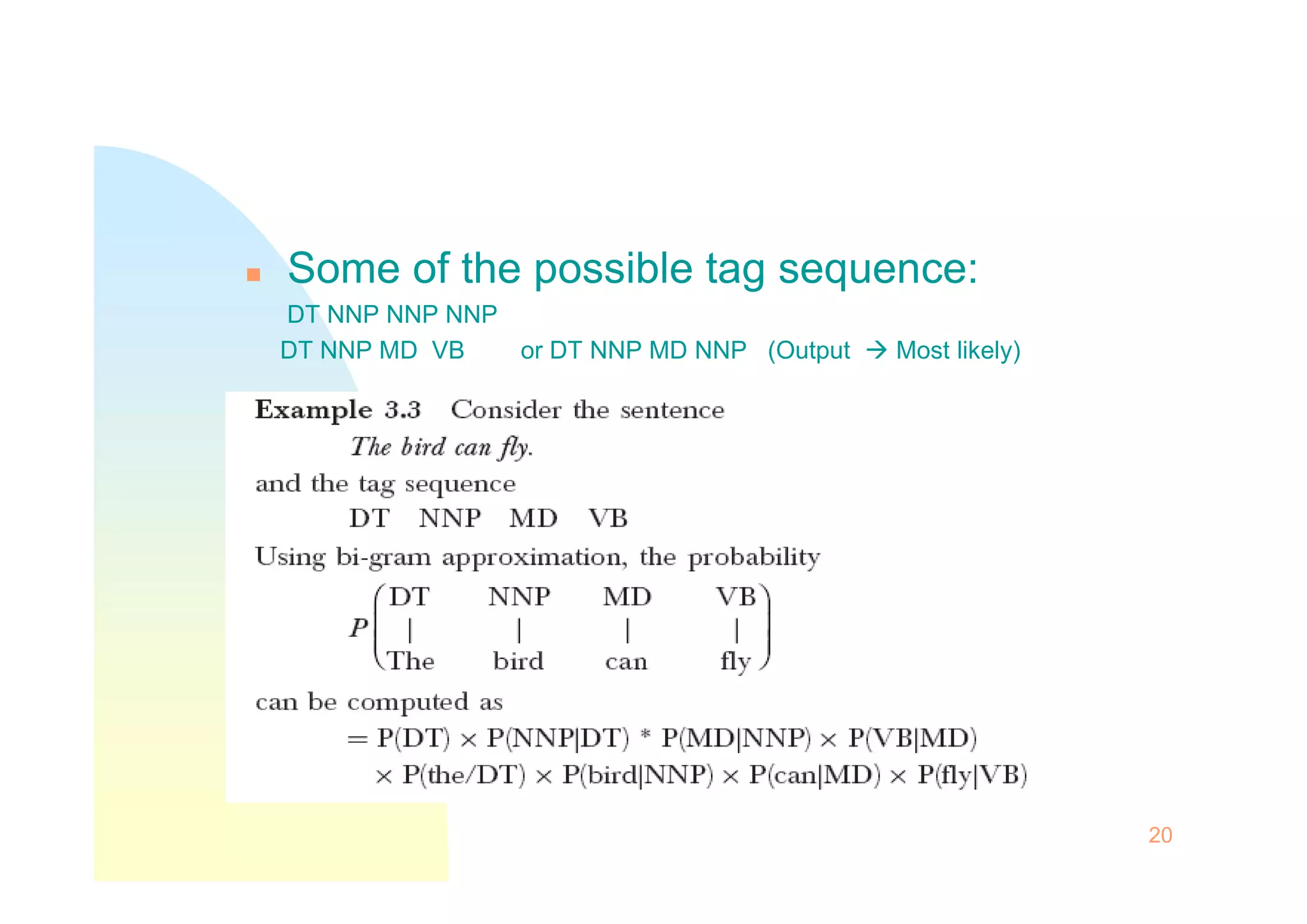

This document discusses part-of-speech (POS) tagging and three approaches to it: rule-based, stochastic, and transformation-based learning (TBL). Rule-based taggers rely on a dictionary and hand-coded rules. Stochastic taggers are data-driven and use hidden Markov models (HMMs) to assign the most probable tag sequence to a sentence. TBL taggers give each word an initial tag and then apply an ordered list of rewrite rules, each with a contextual condition; the rules are learned as transformations that reduce tagging errors.
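To make "most probable tag sequence" concrete, here is a minimal sketch of Viterbi decoding over a toy HMM. The tag set, the probability tables, the `UNSEEN` floor, and the function names are all assumptions invented for illustration; none of them come from the document, and a real tagger would estimate these values from an annotated corpus.

```python
import math

# Toy model: every number below is invented for illustration,
# not estimated from a corpus.
TAGS = ["DET", "NOUN", "VERB"]

# P(tag at the first position)
START = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}

# P(next tag | previous tag)
TRANS = {
    "DET": {"DET": 0.05, "NOUN": 0.90, "VERB": 0.05},
    "NOUN": {"DET": 0.10, "NOUN": 0.30, "VERB": 0.60},
    "VERB": {"DET": 0.50, "NOUN": 0.40, "VERB": 0.10},
}

# P(word | tag); pairs not listed fall back to UNSEEN
EMIT = {
    "DET": {"the": 0.9},
    "NOUN": {"dog": 0.4, "barks": 0.1, "walk": 0.2},
    "VERB": {"barks": 0.5, "walk": 0.3},
}

UNSEEN = 1e-6  # crude floor (an assumption), so log() never sees zero


def log_emit(tag, word):
    """Log emission probability, with a floor for unseen pairs."""
    return math.log(EMIT[tag].get(word, UNSEEN))


def viterbi(words):
    """Return the highest-scoring tag sequence for `words` under the toy HMM."""
    if not words:
        return []

    # Each column maps tag -> (best log score ending in that tag, previous tag).
    columns = [
        {t: (math.log(START[t]) + log_emit(t, words[0]), None) for t in TAGS}
    ]
    for word in words[1:]:
        prev = columns[-1]
        column = {}
        for t in TAGS:
            # Pick the best predecessor for tag t.
            score, back = max(
                (prev[p][0] + math.log(TRANS[p][t]), p) for p in TAGS
            )
            column[t] = (score + log_emit(t, word), back)
        columns.append(column)

    # Follow back-pointers from the best final tag.
    tag = max(TAGS, key=lambda t: columns[-1][t][0])
    path = [tag]
    for column in reversed(columns[1:]):
        tag = column[tag][1]
        path.append(tag)
    path.reverse()
    return path


print(viterbi(["the", "dog", "barks"]))
```

In this toy model "barks" can be a noun or a verb; the transition from NOUN to VERB is what tips the decision, which is the kind of contextual evidence a stochastic tagger draws on. Scores are kept in log space to avoid underflow on longer sentences.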