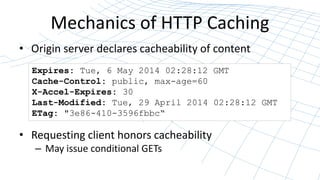

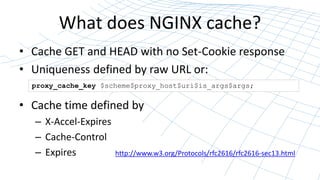

In this webinar, Owen Garrett presents the high-performance caching capabilities of NGINX and explains how caching improves website performance. The talk covers the basic principles and mechanics of HTTP caching, cache configuration and management, instrumentation for cache status, and the impact of page speed on user experience and revenue.

![Loading cache from disk

• Cache metadata stored in shared memory segment

• Populated at startup from cache by cache loader

proxy_cache_path path keys_zone=name:size

[loader_files=number] [loader_threshold=time] [loader_sleep=time];

Defaults: loader_files=100, loader_threshold=200ms, loader_sleep=50ms

– Loads files in blocks of 100

– Takes no longer than 200ms

– Pauses for 50ms, then repeats](https://image.slidesharecdn.com/nginxcaching-141120212306-conversion-gate02/85/NGINX-High-performance-Caching-18-320.jpg)
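
The loader parameters from the slide above could be written out explicitly as in the sketch below. The cache path and zone name are hypothetical placeholders; the three loader values are the defaults quoted on the slide, so omitting them should give the same behaviour.

```nginx
# Fragment for the http { } context.
# /var/cache/nginx/example and the zone name "example" are illustrative assumptions.
proxy_cache_path /var/cache/nginx/example keys_zone=example:10m
                 loader_files=100        # load at most 100 files per iteration
                 loader_threshold=200ms  # cap each iteration at 200ms
                 loader_sleep=50ms;      # pause 50ms between iterations
```

Raising `loader_files` or lowering `loader_sleep` would likely repopulate the shared memory zone faster after a restart, at the cost of more disk I/O while the loader runs.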

![Managing the disk cache

• Cache Manager runs periodically, purging files that were inactive irrespective of cache time, deleting files in LRU style if cache is too big

proxy_cache_path path keys_zone=name:size

[inactive=time] [max_size=size];

Default: inactive=10m

– Remove files that have not been used within 10m

– Remove files if cache size exceeds max_size](https://image.slidesharecdn.com/nginxcaching-141120212306-conversion-gate02/85/NGINX-High-performance-Caching-19-320.jpg)
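
As a minimal sketch of how the cache manager settings interact with cache time, the fragment below pairs `inactive` and `max_size` with a `proxy_cache_valid` rule. The path, zone name, size limit, and upstream address are assumptions chosen for illustration, not values from the webinar.

```nginx
# Fragment for the http { } context.
# Path, zone name, 1g limit, and upstream address are illustrative assumptions.
proxy_cache_path /var/cache/nginx/example keys_zone=example:10m
                 inactive=10m   # drop entries not requested for 10 minutes
                 max_size=1g;   # above 1g, evict least recently used entries

server {
    listen 80;

    location / {
        proxy_cache       example;
        proxy_cache_valid 200 1h;             # cache time for 200 responses
        proxy_pass        http://127.0.0.1:8080;  # hypothetical upstream
    }
}
```

With these settings a response stays fresh for one hour, yet the cache manager still removes it if nobody requests it for 10 minutes. That is what "irrespective of cache time" means on the slide.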

![Multiple Caches

proxy_cache_path /tmp/cache1 keys_zone=one:10m levels=1:2 inactive=60s;

proxy_cache_path /tmp/cache2 keys_zone=two:2m levels=1:2 inactive=20s;

• Different cache policies for different tenants

• Pin caches to specific disks

• Temp-file considerations – put on same disk!:

proxy_temp_path path [level1 [level2 [level3]]];](https://image.slidesharecdn.com/nginxcaching-141120212306-conversion-gate02/85/NGINX-High-performance-Caching-25-320.jpg)
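
The two caches on the slide above could be assigned to different tenants roughly as follows. The `proxy_cache_path` lines are taken from the slide; the location prefixes, upstream addresses, and temp directory are hypothetical.

```nginx
# Fragment for the http { } context.
proxy_cache_path /tmp/cache1 keys_zone=one:10m levels=1:2 inactive=60s;
proxy_cache_path /tmp/cache2 keys_zone=two:2m  levels=1:2 inactive=20s;

# Responses are written to a temp file first, then renamed into the cache.
# Keeping the temp directory on the same filesystem as the cache avoids a copy.
# /tmp/cache_temp is an assumed path with two directory levels.
proxy_temp_path /tmp/cache_temp 1 2;

server {
    listen 80;

    # Hypothetical tenants, each with its own cache policy.
    location /tenant-a/ {
        proxy_cache one;
        proxy_pass  http://127.0.0.1:8081;  # assumed upstream
    }

    location /tenant-b/ {
        proxy_cache two;
        proxy_pass  http://127.0.0.1:8082;  # assumed upstream
    }
}
```

If the caches are pinned to different disks, `proxy_temp_path` can also be set per `location`, so that each tenant's temp files sit on the same disk as its cache.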