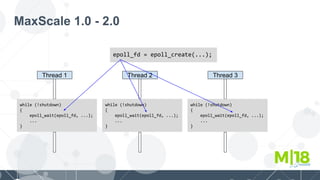

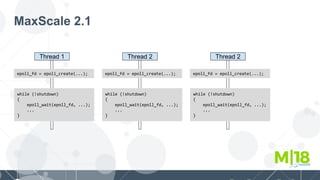

MaxScale uses an asynchronous, multi-threaded architecture to route client queries to backend database servers. Each worker thread creates its own epoll instance to monitor file descriptors for I/O events, which avoids locking between threads. Listening sockets are added to a shared epoll instance whose file descriptor is in turn registered with every thread's epoll instance, so any thread can be notified when a client connects and new connections are spread across the threads. This performs better than the earlier design built on a single epoll instance.

![MaxScale epoll Setup

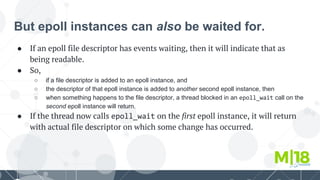

● At startup, socket creation is triggered by the presence of listeners.

[TheListener]

type=listener

service=TheService

...

port=4009

epoll_fd = epoll_create(...);

...

so = socket(...);

...

listen(so, ...);

...

struct epoll_event ev;

ev.events = events;

ev.data.ptr = data;

epoll_ctl(epoll_fd, EPOLL_CTL_ADD, so, &ev);](https://image.slidesharecdn.com/session4m18maxscalearchitectureandperformance-180306225456/85/M-18-Architectural-Overview-MariaDB-MaxScale-16-320.jpg)
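
The setup steps on this slide can be sketched as one small function. This is a minimal illustration and not MaxScale's actual code: the helper name `add_listener`, the loopback-only bind, and the use of `SOCK_NONBLOCK` are assumptions made for the example.

```c
#include <assert.h>
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: create a non-blocking listening socket on the
 * loopback interface and register it with epoll_fd.
 * Returns the socket, or -1 on failure. Port 0 lets the kernel pick a port. */
int add_listener(int epoll_fd, uint16_t port, void* data)
{
    int so = socket(AF_INET, SOCK_STREAM | SOCK_NONBLOCK, 0);
    if (so == -1)
        return -1;

    int yes = 1;
    setsockopt(so, SOL_SOCKET, SO_REUSEADDR, &yes, sizeof(yes));

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(port);

    struct epoll_event ev;
    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN;   /* readable means a client is waiting to be accepted */
    ev.data.ptr = data;    /* opaque pointer handed back by epoll_wait */

    if (bind(so, (struct sockaddr*)&addr, sizeof(addr)) == -1
        || listen(so, SOMAXCONN) == -1
        || epoll_ctl(epoll_fd, EPOLL_CTL_ADD, so, &ev) == -1)
    {
        close(so);
        return -1;
    }

    return so;
}
```

A caller would create the epoll instance first, for example with `epoll_create1(0)`, and then call `add_listener` once per configured listener.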

![Client Connection

Client MaxScale

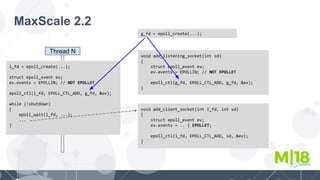

while (!shutdown)

{

struct epoll_event events[MAX_EVENTS];

int nfds = epoll_wait(epoll_fd, events, ...);

for (int i = 0; i < nfds; ++i)

{

epoll_event* event = &events[i];

handle_event(event);

}

}](https://image.slidesharecdn.com/session4m18maxscalearchitectureandperformance-180306225456/85/M-18-Architectural-Overview-MariaDB-MaxScale-17-320.jpg)

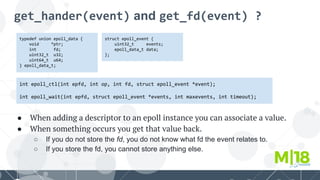

![Handle Accept

void handle_accept(struct epoll_event* event)

{

for (all servers in service)

{

int so;

connect each server;

struct epoll_event ev;

ev.events = events;

ev.data.ptr = data;

epoll_ctl(epoll_fd, EPOLL_CTL_ADD, so, &ev);

}

}

[TheService]

type=service

router=readwritesplit

servers=server1,server2

...](https://image.slidesharecdn.com/session4m18maxscalearchitectureandperformance-180306225456/85/M-18-Architectural-Overview-MariaDB-MaxScale-19-320.jpg)
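
The "connect each server" step can be sketched with a non-blocking `connect()`, so the worker thread never waits on a backend. This is an illustration under stated assumptions, not the MaxScale implementation: `connect_backend` and `connect_result` are hypothetical names, and the backend address is assumed to be an already resolved IPv4 address.

```c
#include <assert.h>
#include <arpa/inet.h>
#include <errno.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: start a non-blocking connect to one backend and
 * register the socket with epoll_fd. Returns the socket, or -1 on failure. */
int connect_backend(int epoll_fd, const struct sockaddr_in* addr, void* data)
{
    int so = socket(AF_INET, SOCK_STREAM | SOCK_NONBLOCK, 0);
    if (so == -1)
        return -1;

    /* EINPROGRESS is the normal outcome for a non-blocking connect. */
    if (connect(so, (const struct sockaddr*)addr, sizeof(*addr)) == -1
        && errno != EINPROGRESS)
    {
        close(so);
        return -1;
    }

    struct epoll_event ev;
    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN | EPOLLOUT;  /* EPOLLOUT fires once the connect finishes */
    ev.data.ptr = data;

    if (epoll_ctl(epoll_fd, EPOLL_CTL_ADD, so, &ev) == -1)
    {
        close(so);
        return -1;
    }

    return so;
}

/* Hypothetical helper: after EPOLLOUT, returns 0 if the connect succeeded,
 * otherwise the errno value describing the failure. */
int connect_result(int so)
{
    int err = 0;
    socklen_t len = sizeof(err);
    if (getsockopt(so, SOL_SOCKET, SO_ERROR, &err, &len) == -1)
        return errno;
    return err;
}
```

Calling `connect_backend` once per entry in the service's `servers` list mirrors the loop on the slide; the event loop then learns about each completed connection through `EPOLLOUT`.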

![Handle Read

void handle_read(struct epoll_event* event)

{

char buffer[MAX_SIZE];

int sd = get_fd(event);

read(sd, buffer, sizeof(buffer));

// figure out what to do with the data

// - wait for more

// - authenticate

// - send to master, send to slaves, send to all

// ...

...

}

● When the servers reply, the response will be handled in a similar manner.](https://image.slidesharecdn.com/session4m18maxscalearchitectureandperformance-180306225456/85/M-18-Architectural-Overview-MariaDB-MaxScale-20-320.jpg)
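
The "wait for more" decision in `handle_read` depends on whether a complete protocol packet has arrived. The sketch below is not MaxScale's code. It relies only on the MySQL client/server packet layout (a 3-byte little-endian payload length, a 1-byte sequence number, then the payload); the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MYSQL_HEADER_LEN 4
#define COM_QUERY 0x03   /* command byte of a text query packet */

/* Returns the total size (header + payload) of the first packet in buf if
 * it has been received completely, or 0 if more data must be read first.
 * Payloads split over several packets (length 0xFFFFFF) are not handled. */
size_t complete_packet_len(const uint8_t* buf, size_t len)
{
    if (len < MYSQL_HEADER_LEN)
        return 0;

    size_t payload = (size_t)buf[0]
        | ((size_t)buf[1] << 8)
        | ((size_t)buf[2] << 16);
    size_t total = MYSQL_HEADER_LEN + payload;

    return len >= total ? total : 0;
}

/* Returns the command byte of a complete command packet whose payload is
 * at least one byte long. */
uint8_t packet_command(const uint8_t* buf)
{
    return buf[MYSQL_HEADER_LEN];
}
```

A read handler built on this would keep appending to its buffer while `complete_packet_len` returns 0, and only then inspect the command byte to decide where the statement should be routed.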

![Client Connecting

typedef void (*handler_t)(epoll_event*);

while (!shutdown)

{

struct epoll_event events[MAX_EVENTS];

int nfds = epoll_wait(epoll_fd, events, ...);

for (int i = 0; i < nfds; ++i)

{

epoll_event* event = &events[i];

handler_t handler = get_handler(event);

handler(event);

}

}

void handle_epoll_event(epoll_event* event)

{

struct epoll_event events[1];

int fd = get_fd(event); // fd == g_fd

if (epoll_wait(fd, events, 1, 0) == 1) // 0 timeout.

{

epoll_event* ready = &events[0];

handler_t handler = get_handler(ready);

handler(ready);

}

}

void handle_accept_event(epoll_event* event)

{

int sd = get_fd(event);

int cd;

while ((cd = accept(sd, ...)) != -1)

{

...

add_client_socket(cd, ...);

}

}](https://image.slidesharecdn.com/session4m18maxscalearchitectureandperformance-180306225456/85/M-18-Architectural-Overview-MariaDB-MaxScale-34-320.jpg)
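
The nesting used on this slide, where a shared epoll descriptor is itself watched by each worker's epoll instance, can be tried in isolation. The following is a minimal sketch with hypothetical function names; it is not taken from MaxScale, and in a real setup the shared instance would hold listening sockets.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Register the shared epoll instance with a worker's own instance.
 * An epoll descriptor becomes readable when it has pending events. */
int watch_shared(int worker_epoll_fd, int shared_epoll_fd)
{
    struct epoll_event ev;
    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN;
    ev.data.fd = shared_epoll_fd;
    return epoll_ctl(worker_epoll_fd, EPOLL_CTL_ADD, shared_epoll_fd, &ev);
}

/* Fetch at most one event from the shared instance without blocking.
 * Returns 1 and fills *out if an event was pending, 0 if nothing was
 * pending (for example because another worker handled it), -1 on error. */
int take_shared_event(int shared_epoll_fd, struct epoll_event* out)
{
    return epoll_wait(shared_epoll_fd, out, 1, 0);
}
```

The zero timeout matters: several workers may be woken for the same readiness notification, and the ones that find nothing pending must return to their own loop immediately instead of blocking.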

![Adding and Extracting Events

void poll_add_fd(int fd, uint32_t events, MXS_POLL_DATA* pData)

{

struct epoll_event ev;

ev.events = events;

ev.data.ptr = pData;

epoll_ctl(m_epoll_fd, EPOLL_CTL_ADD, fd, &ev);

}

DCB* dcb = ...;

poll_add_fd(dcb->fd, ..., &dcb->poll);

Worker* pWorker = ...;

poll_add_fd(pWorker->fd, ..., pWorker);

while (!should_shutdown)

{

struct epoll_event events[MAX_EVENTS];

int n = epoll_wait(epoll_fd, events, MAX_EVENTS, -1);

for (int i = 0; i < n; ++i)

{

MXS_POLL_DATA* data = (MXS_POLL_DATA*)events[i].data.ptr;

data->handler(data, ..., events[i].events);

}

}

Each worker thread sits in this loop.](https://image.slidesharecdn.com/session4m18maxscalearchitectureandperformance-180306225456/85/M-18-Architectural-Overview-MariaDB-MaxScale-37-320.jpg)
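
The dispatch pattern on this slide stores a pointer to a small struct in `ev.data.ptr` and calls the handler found in it. A self-contained sketch of that idea follows. The types `poll_data_t` and `connection_t` are stand-ins invented for the example, since the real layout of `MXS_POLL_DATA` is not shown here, and an eventfd plays the role of a socket.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

#define MAX_EVENTS 16

/* Stand-in for MXS_POLL_DATA: just a handler pointer. */
typedef struct poll_data poll_data_t;
struct poll_data
{
    void (*handler)(poll_data_t* data, uint32_t events);
};

/* Hypothetical object that embeds the poll data as its first member, so a
 * pointer to the poll data can be converted back to the whole object. */
typedef struct connection
{
    poll_data_t poll;
    int fd;
    int reads;
} connection_t;

static void connection_handler(poll_data_t* data, uint32_t events)
{
    connection_t* conn = (connection_t*)data;
    uint64_t value;
    if ((events & EPOLLIN) && read(conn->fd, &value, sizeof(value)) == (ssize_t)sizeof(value))
        conn->reads++;
}

int add_fd(int epoll_fd, int fd, uint32_t events, poll_data_t* data)
{
    struct epoll_event ev;
    memset(&ev, 0, sizeof(ev));
    ev.events = events;
    ev.data.ptr = data;
    return epoll_ctl(epoll_fd, EPOLL_CTL_ADD, fd, &ev);
}

/* One iteration of the worker loop: wait, then dispatch every event to the
 * handler stored with its descriptor. Returns what epoll_wait returned. */
int poll_once(int epoll_fd, int timeout_ms)
{
    struct epoll_event events[MAX_EVENTS];
    int n = epoll_wait(epoll_fd, events, MAX_EVENTS, timeout_ms);
    for (int i = 0; i < n; ++i)
    {
        poll_data_t* data = (poll_data_t*)events[i].data.ptr;
        data->handler(data, events[i].events);
    }
    return n;
}
```

Because the loop only ever sees a `poll_data_t*`, client connections, backend connections and other workers can all be registered the same way and still get type-specific handling.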

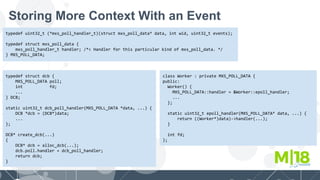

![Cache

● The cache was introduced in 2.1.0 but the performance was less than satisfactory.

● Problem was caused by parsing.

○ The cache parsed all statements to detect non-cacheable statements.

○ E.g. SELECT CURRENT_DATE();

● Added possibility to declare that all SELECT statements are cacheable.

[TheCache]

type=filter

module=cache

...

selects=assume_cacheable

● Huge impact on the performance.](https://image.slidesharecdn.com/session4m18maxscalearchitectureandperformance-180306225456/85/M-18-Architectural-Overview-MariaDB-MaxScale-45-320.jpg)
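
To illustrate why skipping the parse is so much cheaper: once every SELECT is assumed cacheable, a check on the first keyword is enough to decide whether a statement is a cache candidate. The function below is only a sketch of that idea and an assumption about the general approach; the slides do not show how MaxScale performs the check, and `looks_like_select` is a hypothetical name.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <strings.h>

/* Returns true if the statement, ignoring leading whitespace, starts with
 * the keyword SELECT (case-insensitive). No parsing is performed, so a
 * statement such as SELECT CURRENT_DATE() is also accepted. */
bool looks_like_select(const char* sql)
{
    while (isspace((unsigned char)*sql))
        ++sql;

    return strncasecmp(sql, "SELECT", 6) == 0
        && !isalnum((unsigned char)sql[6])
        && sql[6] != '_';
}
```

The trade-off is visible in the comment: a result that depends on the current time would be cached as well, which is why the setting should only be used when the application's SELECT statements are known to be safe to cache.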