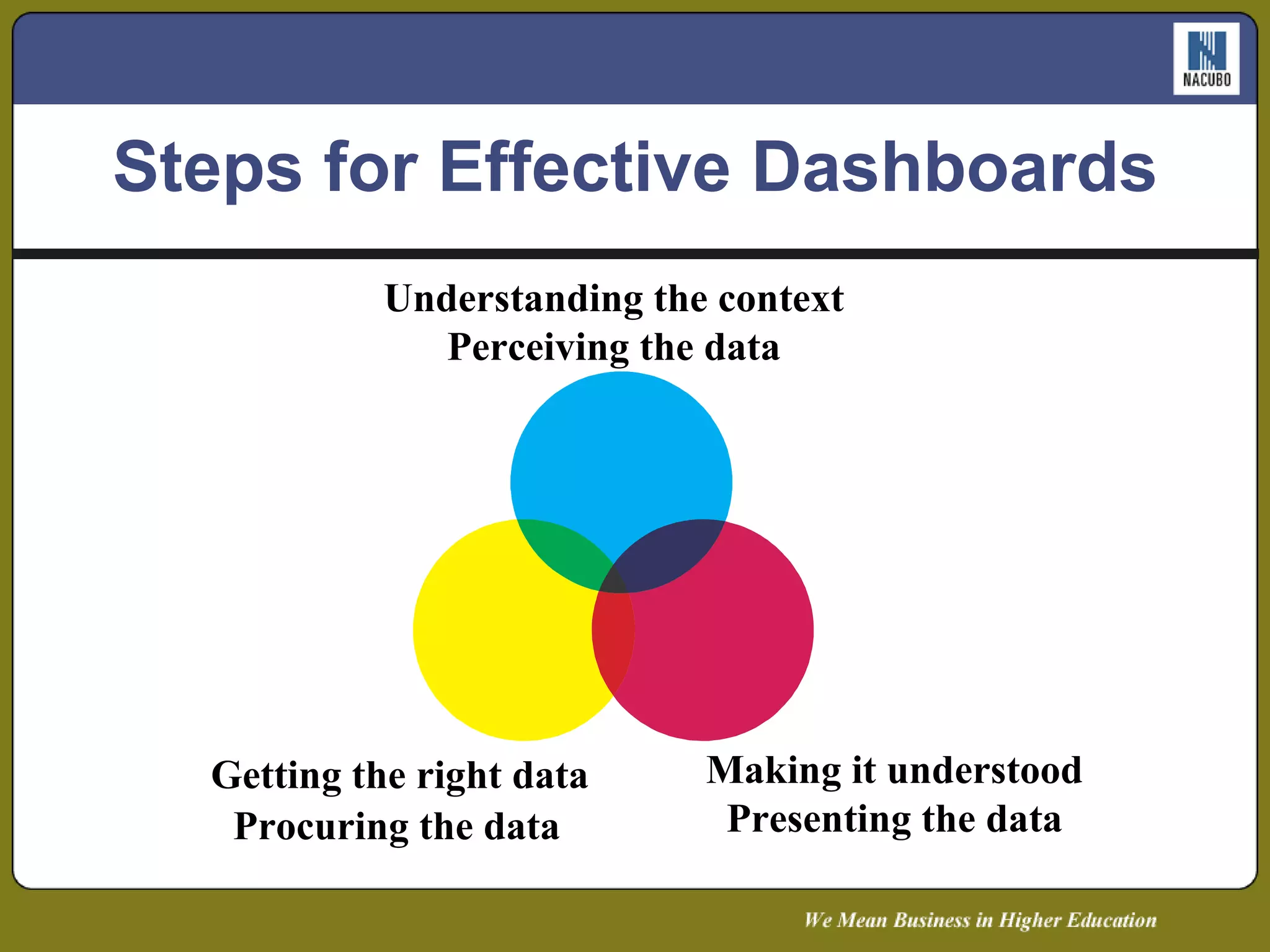

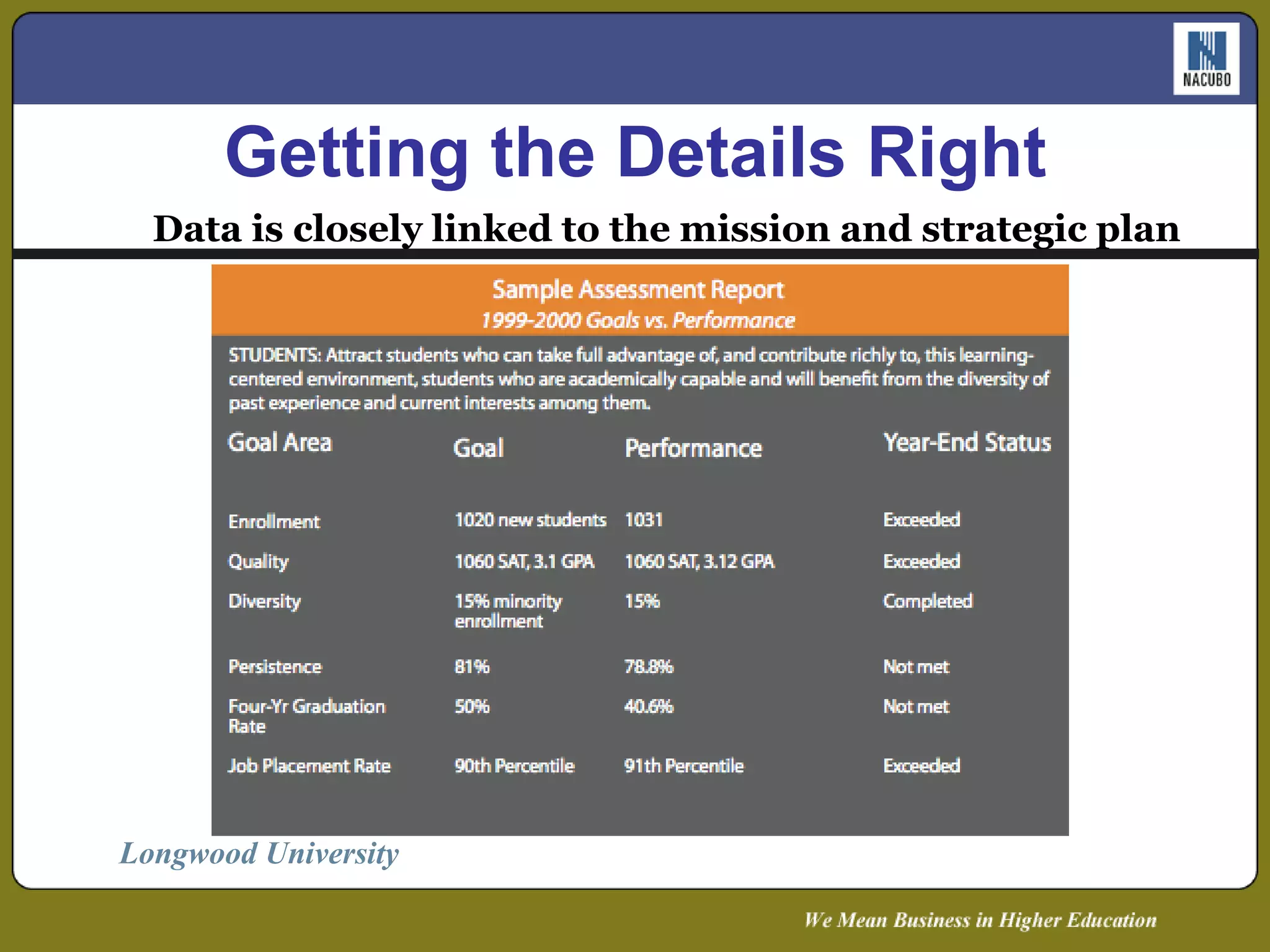

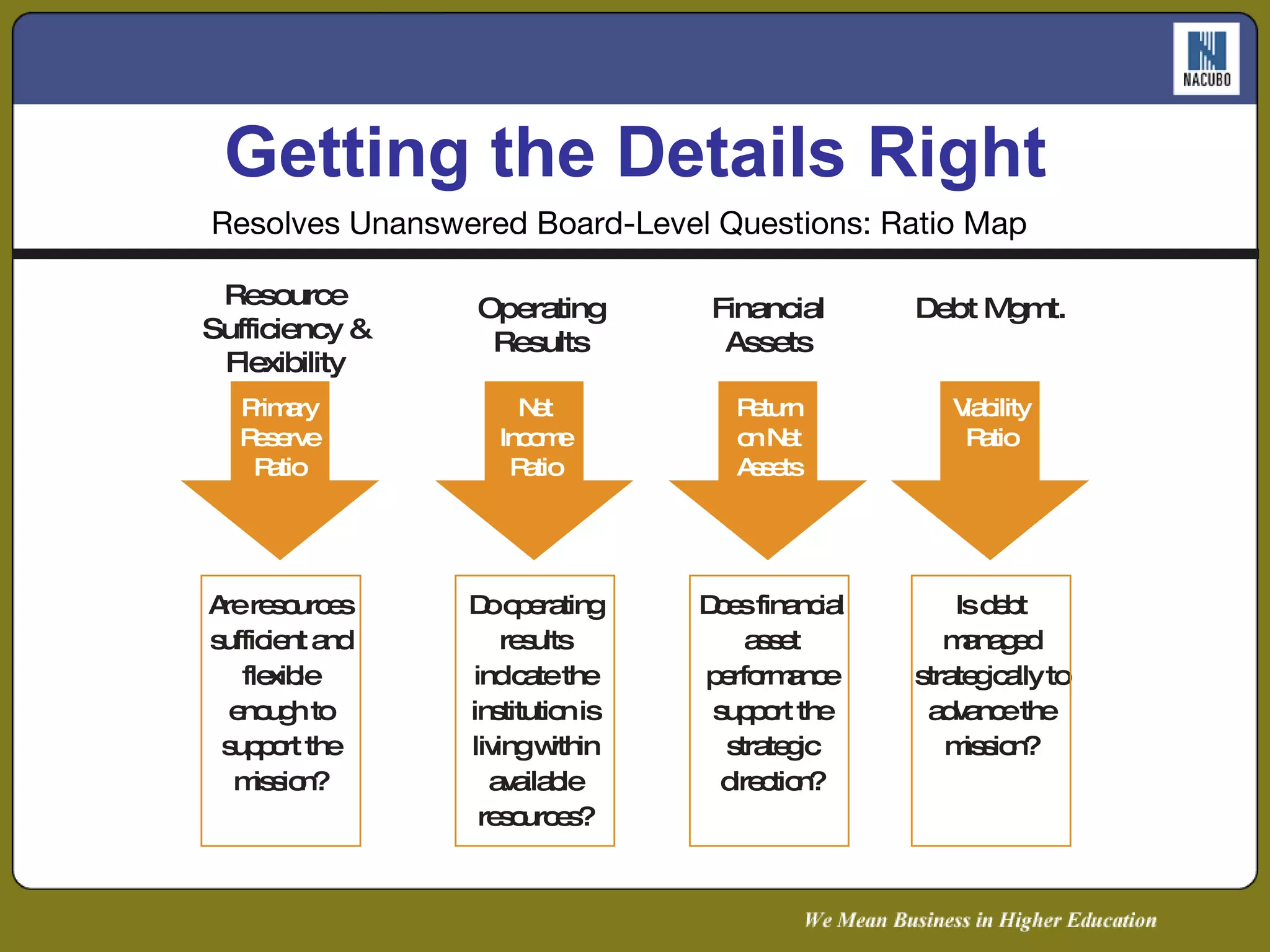

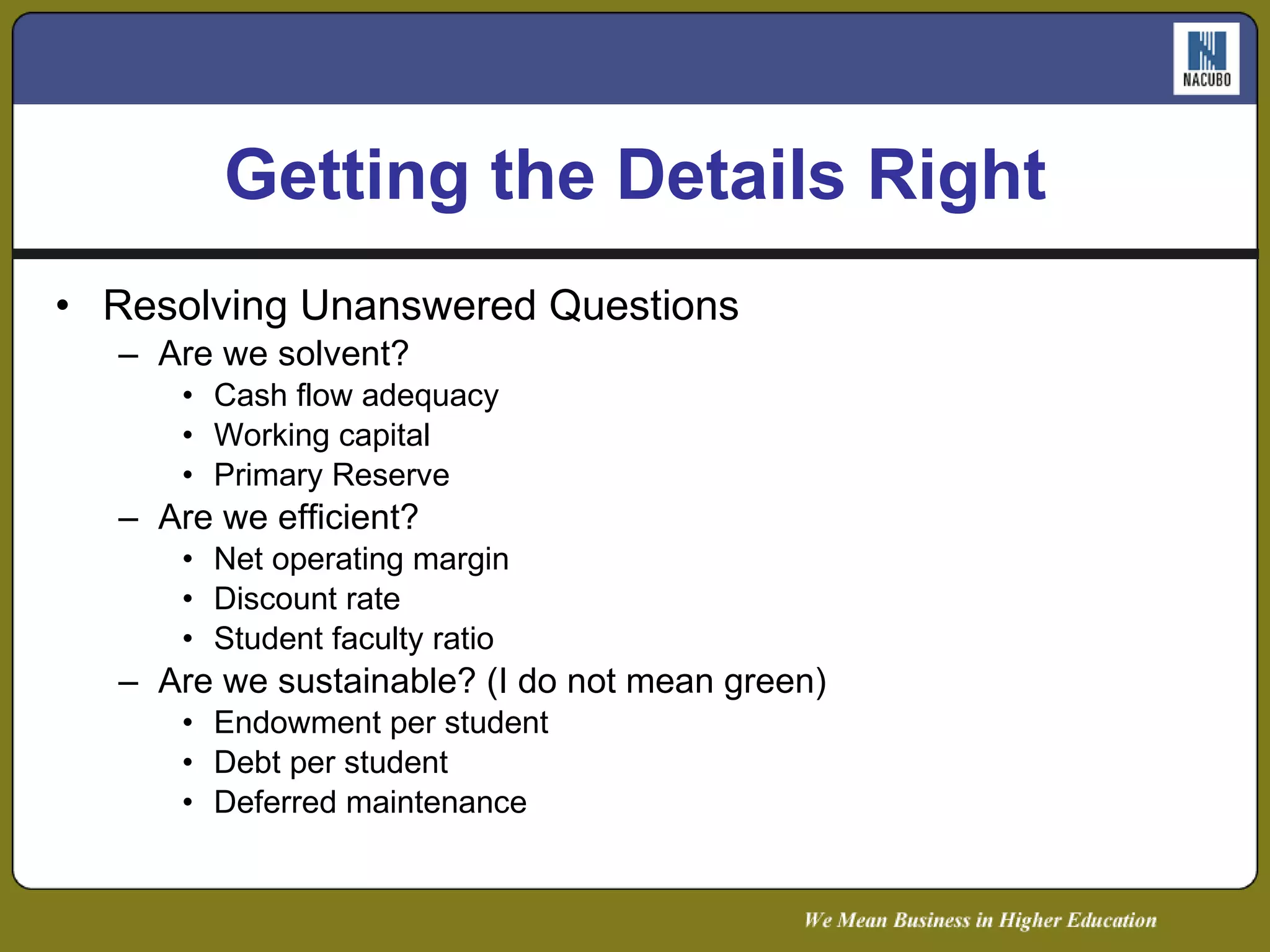

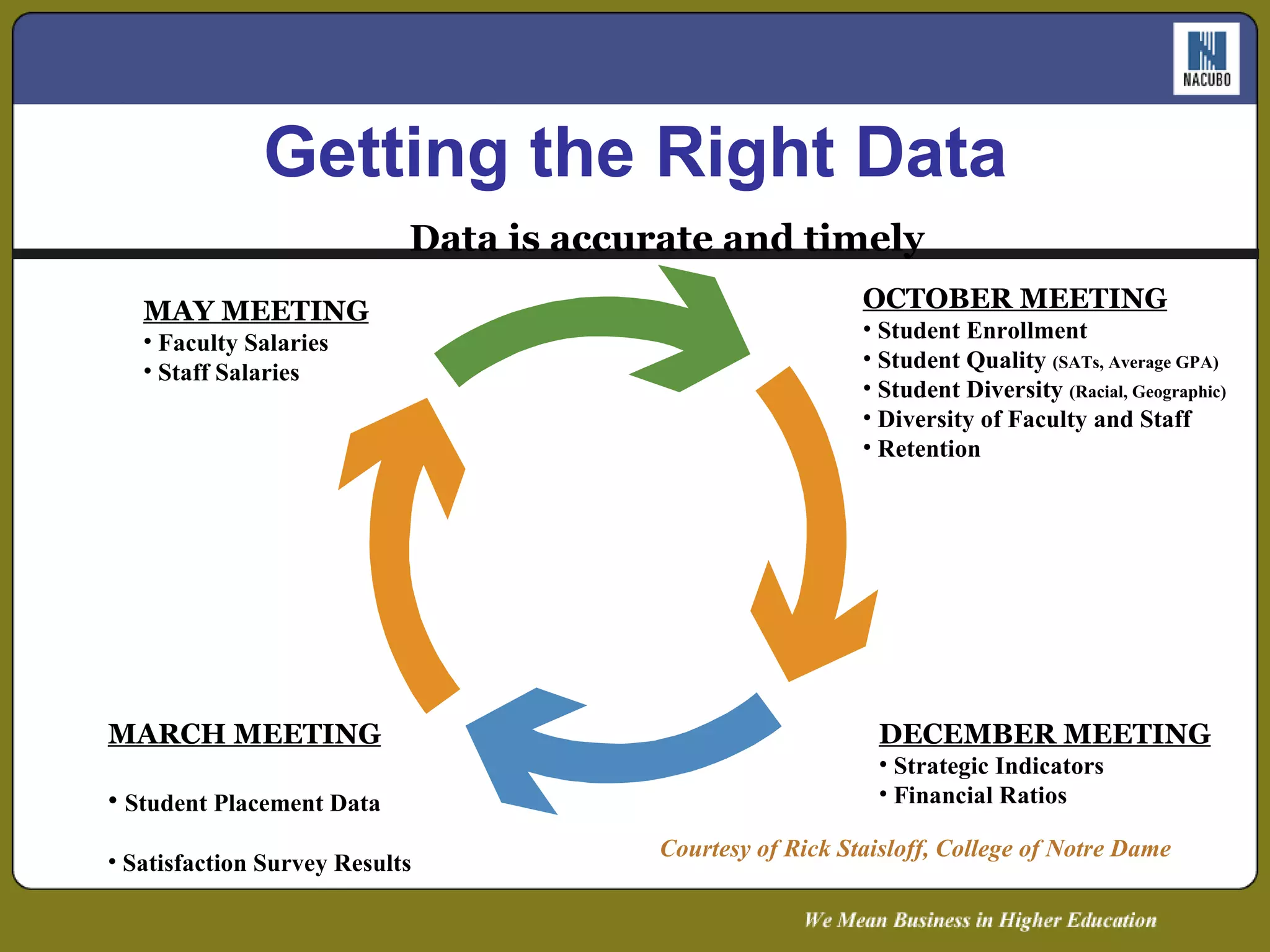

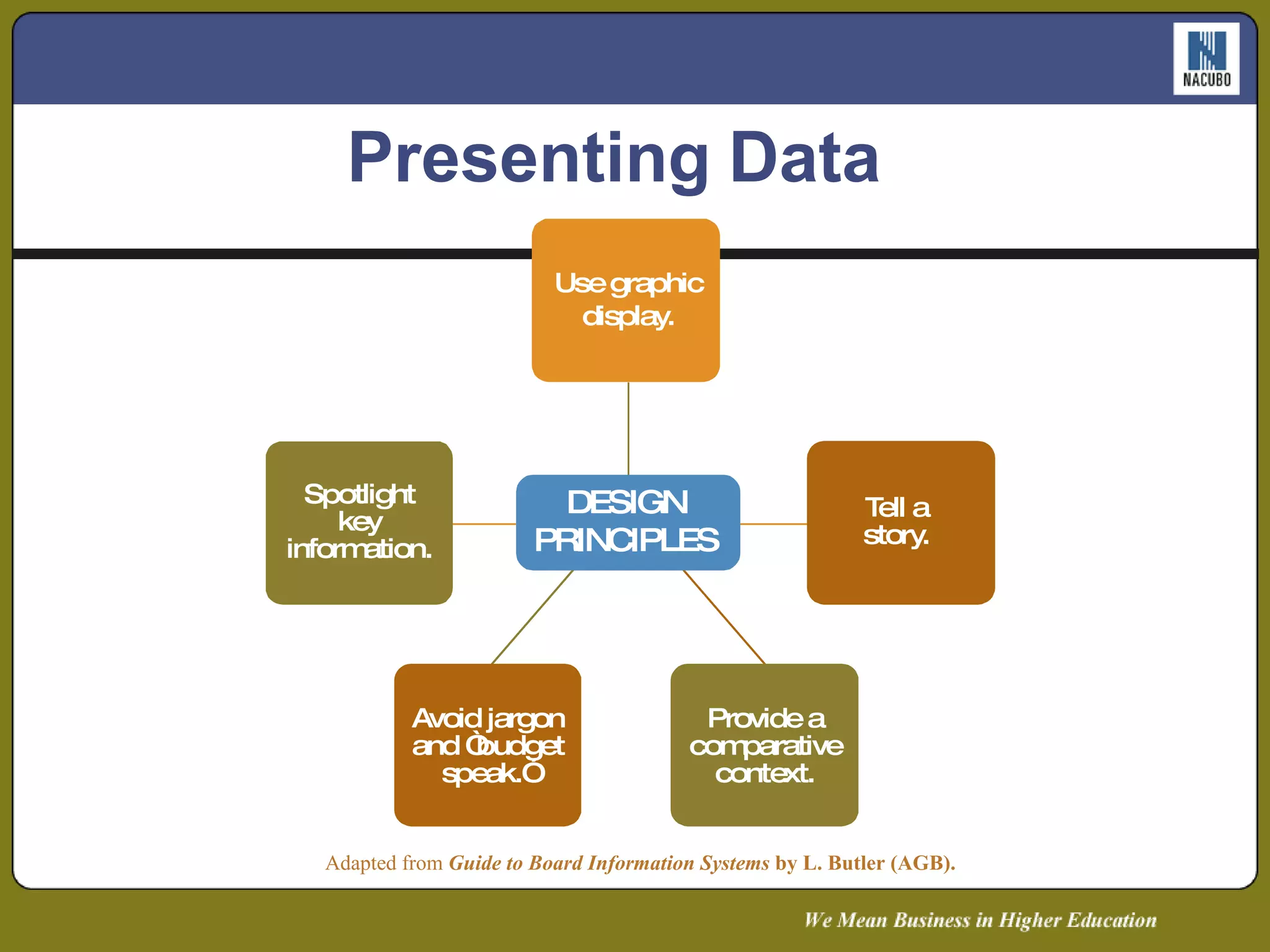

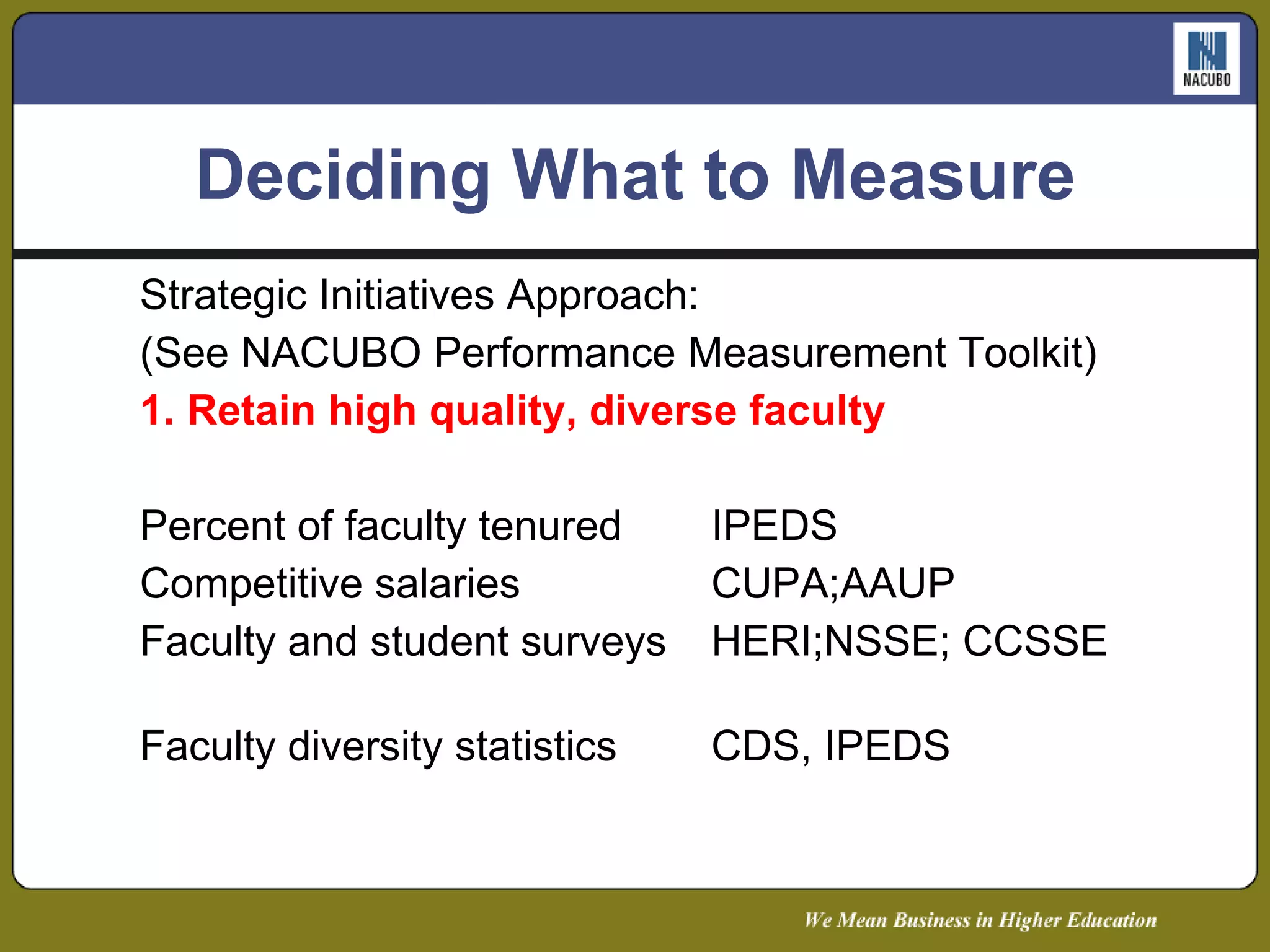

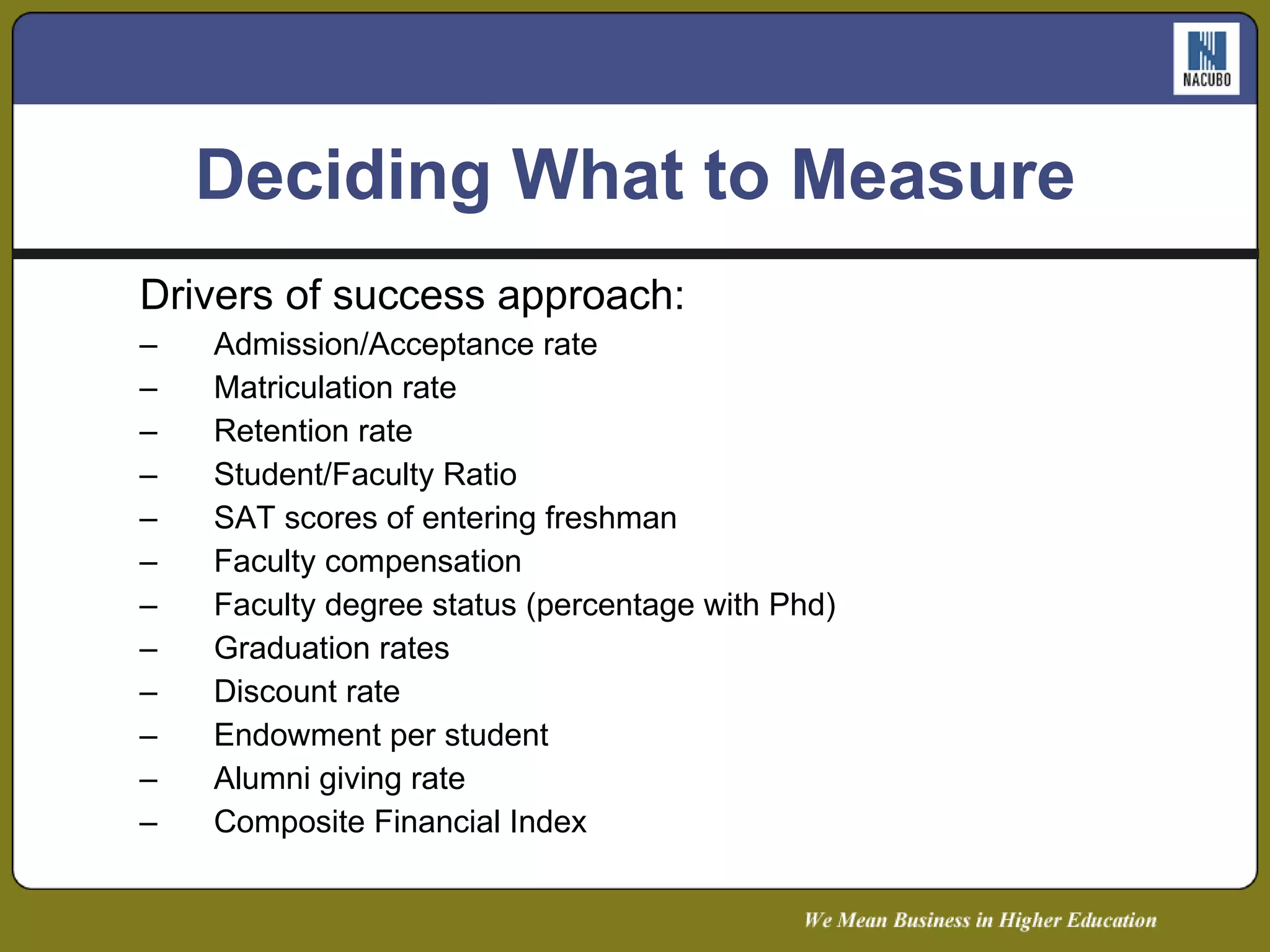

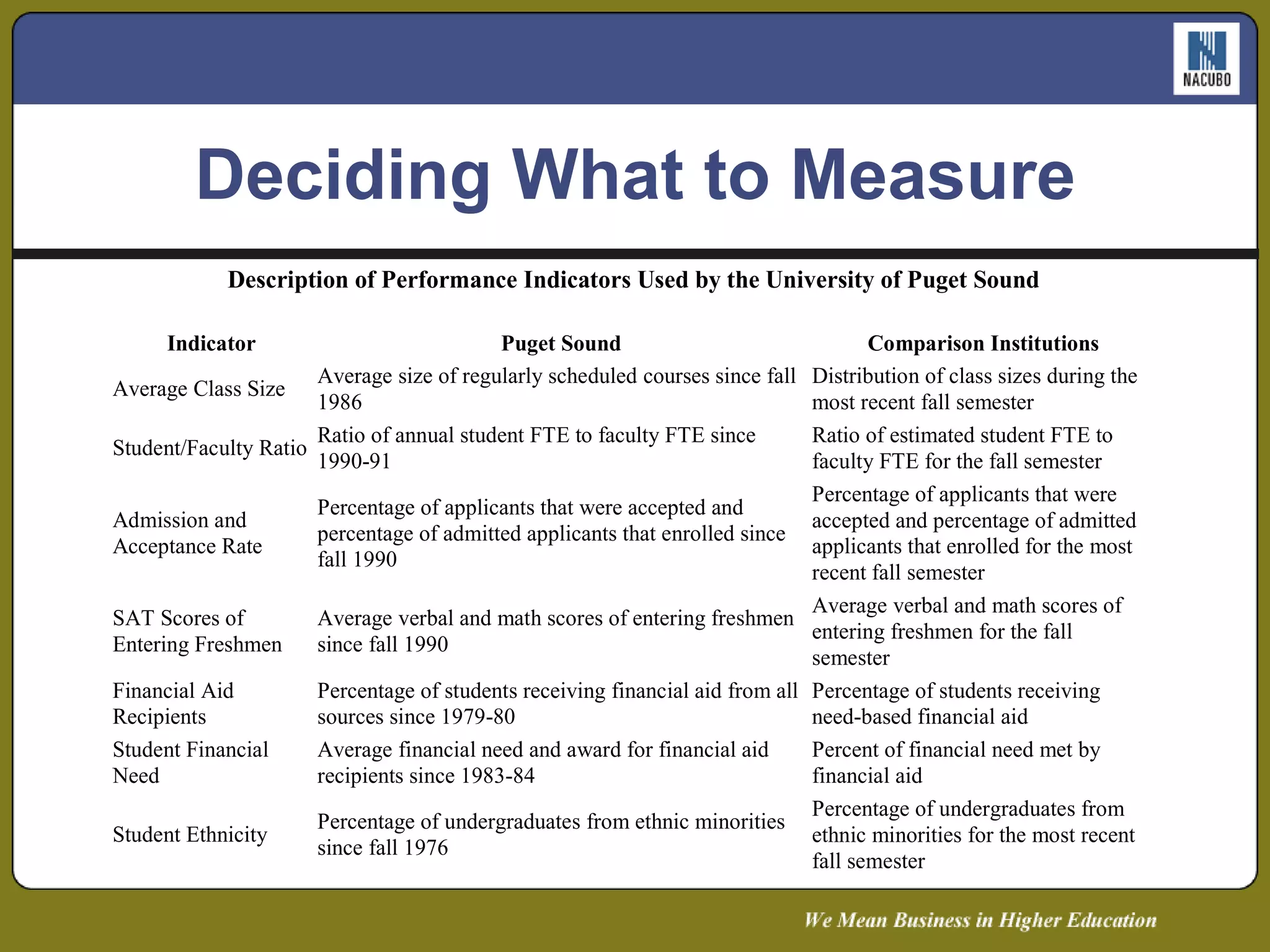

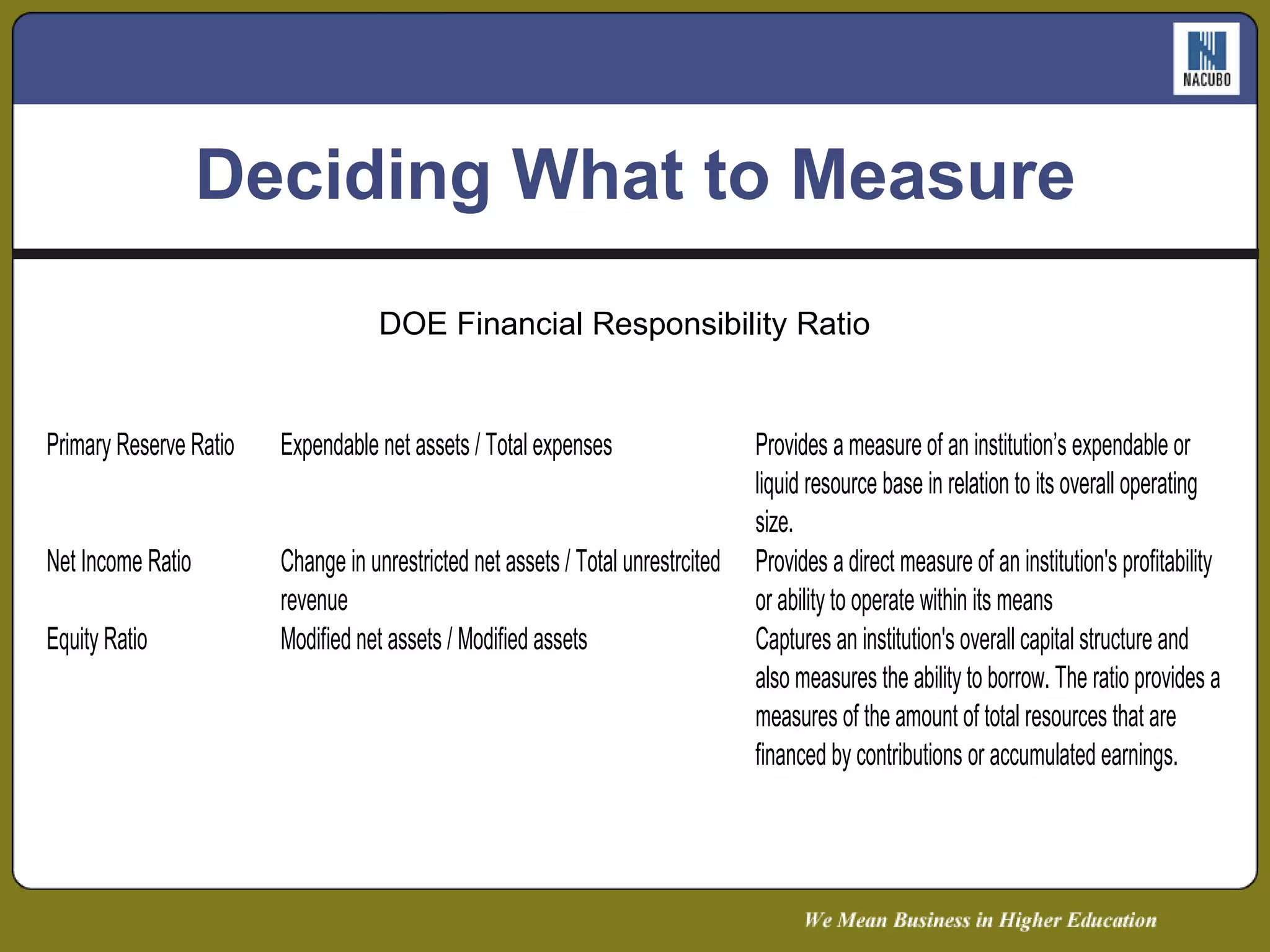

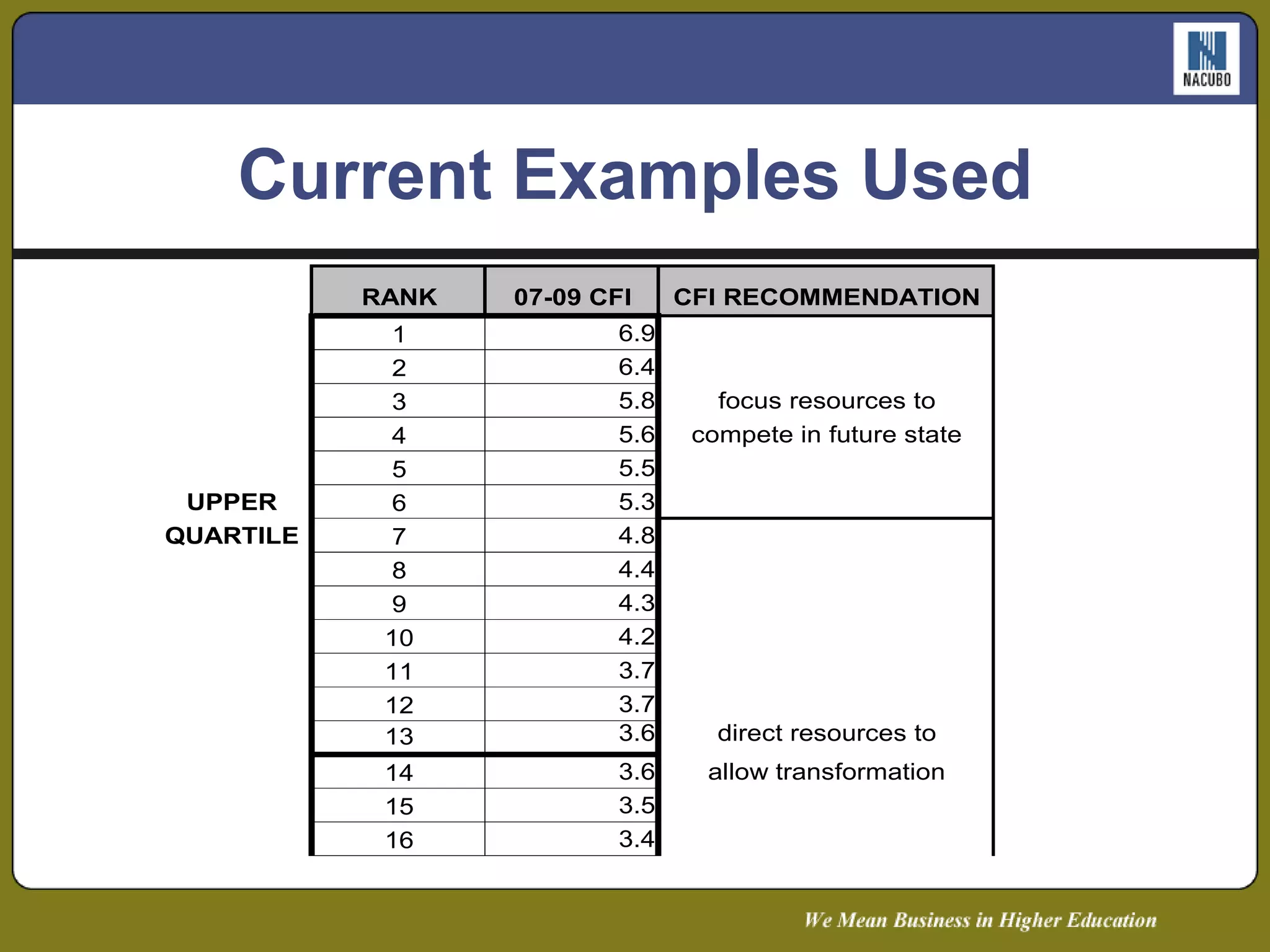

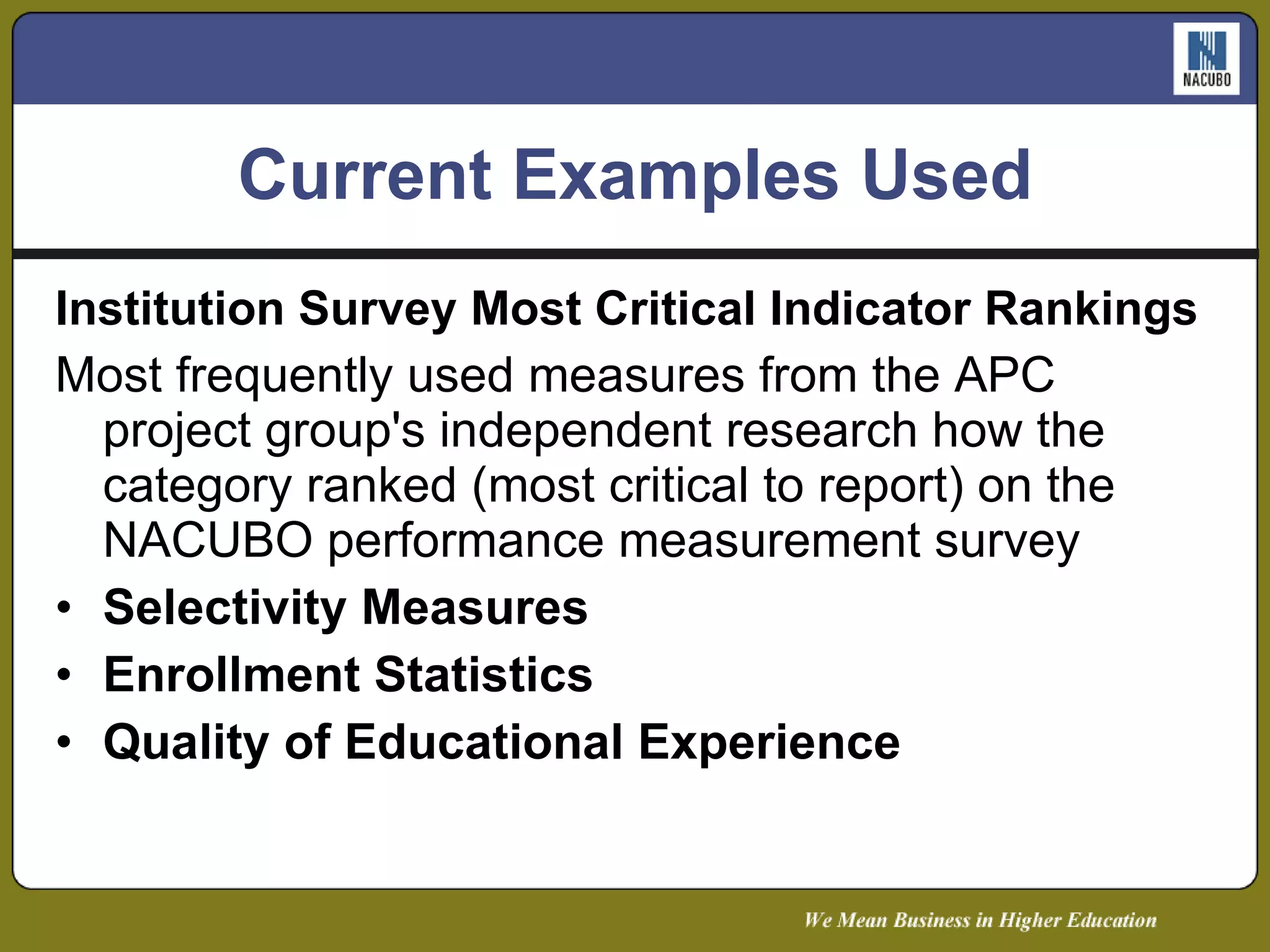

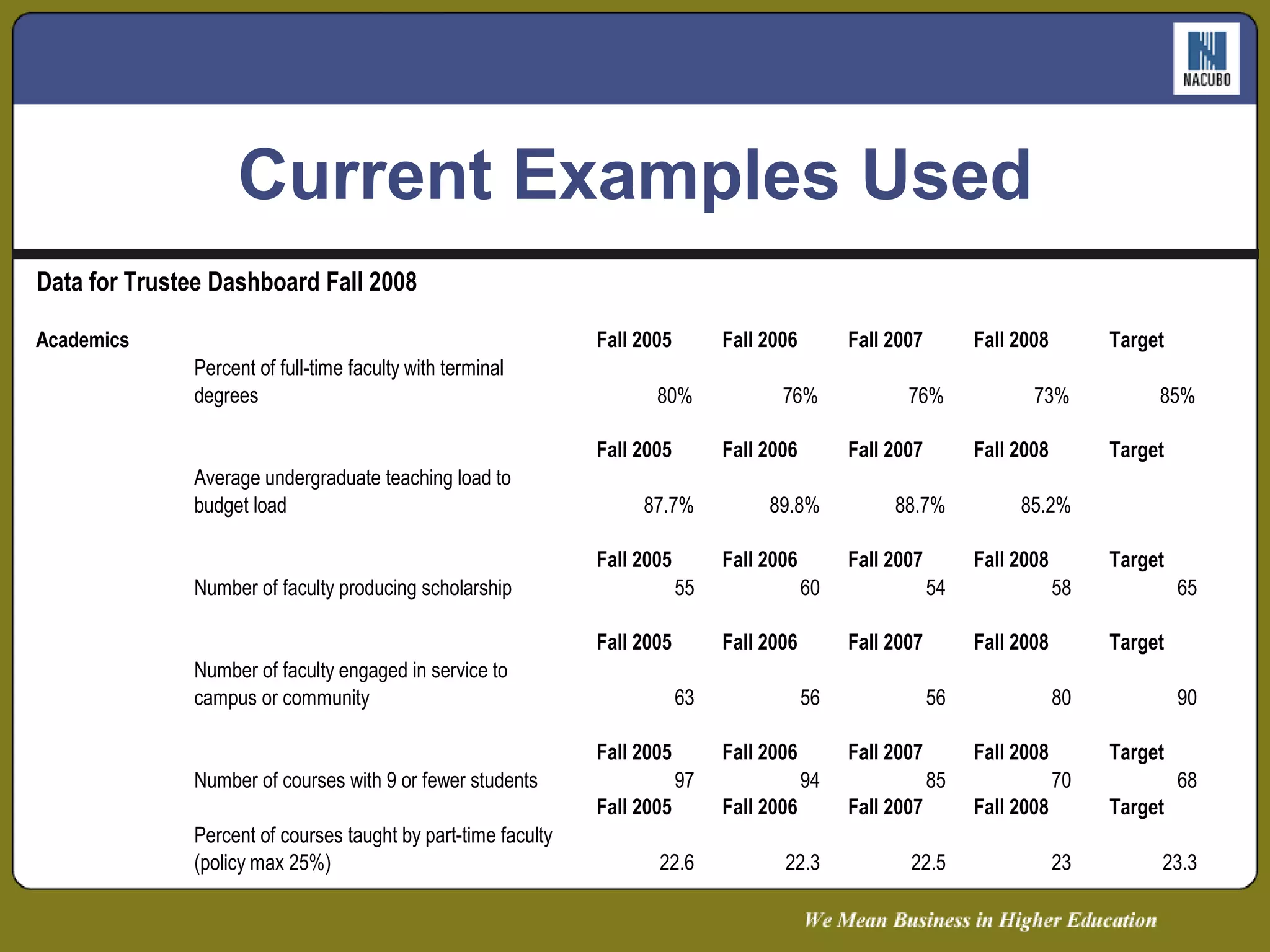

The document discusses key performance indicator (KPI) dashboards and benchmarking for higher education institutions. It makes the case for communicating financial and operational data clearly through dashboards so that potential problems stand out. It describes principles of effective dashboard design, such as understanding context, perceiving and presenting data accurately, and linking data to the institution's mission and strategy. Benchmarking is presented as a way to maintain viability by comparing an institution's performance with that of its peers. Examples of common higher education KPIs and benchmarking groups are provided.