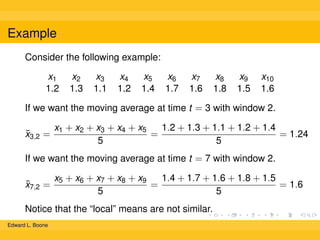

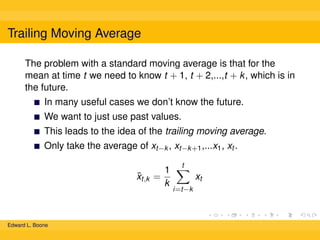

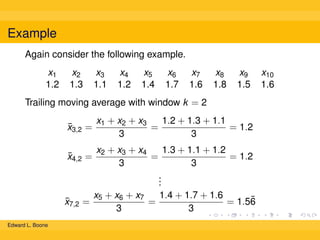

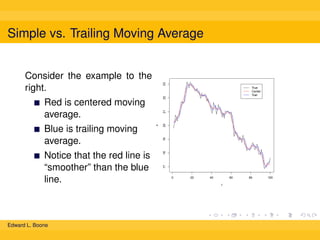

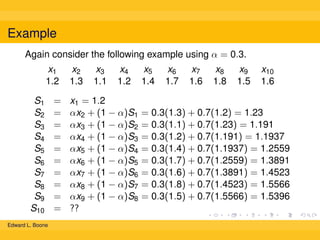

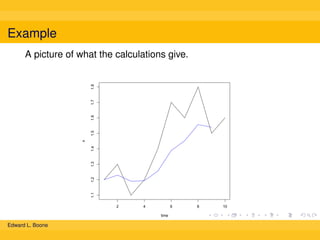

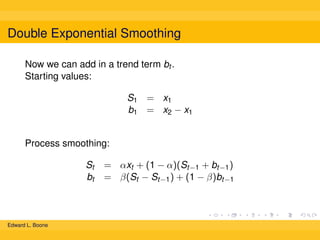

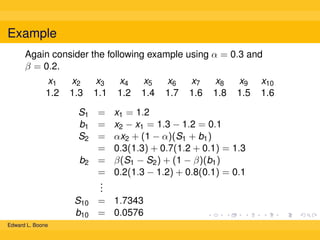

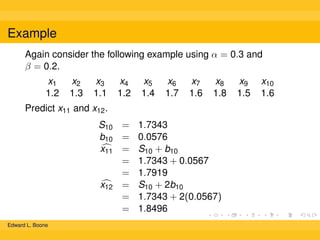

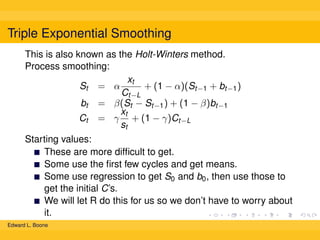

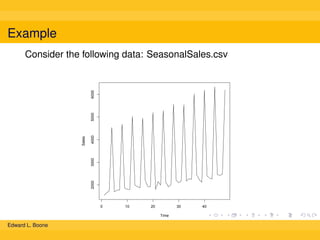

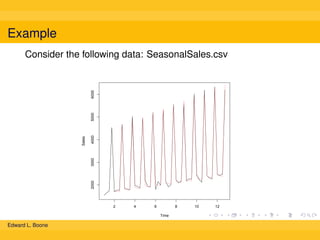

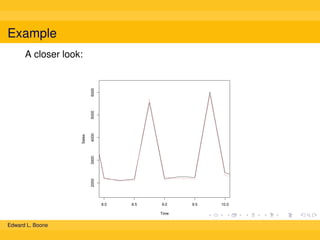

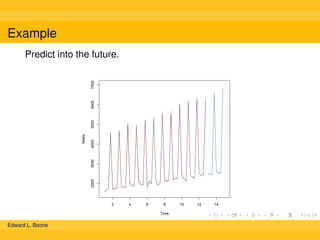

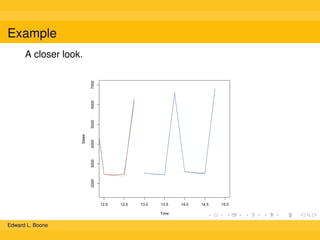

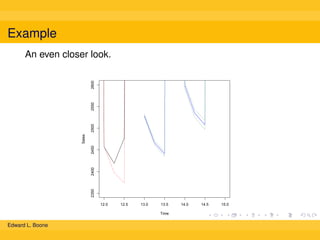

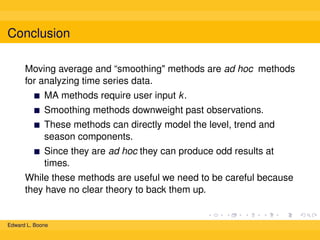

The document discusses methods for calculating moving averages of time series data, including simple, trailing, and exponentially weighted moving averages. It also covers double and triple exponential smoothing: double exponential smoothing models the level and trend of a series, and triple exponential smoothing adds a seasonality component. Triple exponential smoothing, also known as the Holt-Winters method, is demonstrated with R code that analyzes time series sales data and predicts future values.
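The slides' R code is not reproduced in this text, so the following is only a minimal Python sketch of three of the techniques named above, not the author's implementation. It covers a trailing moving average, simple exponential smoothing, and double (Holt) exponential smoothing. The function names, the smoothing parameters `alpha` and `beta`, the initialization choices (first observation as the starting level, first difference as the starting trend), and the `sales` figures are all assumptions made for illustration.

```python
def trailing_moving_average(values, window):
    """Mean of the most recent `window` observations at each position.

    Positions without a full window of history are skipped, so the
    result has len(values) - window + 1 entries.
    """
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def exponential_smoothing(values, alpha):
    """Simple exponential smoothing.

    s_t = alpha * x_t + (1 - alpha) * s_{t-1}, with s_0 = x_0 (assumed start).
    """
    if not values:
        raise ValueError("values must not be empty")
    smoothed = [values[0]]
    for x in values[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


def holt_forecast(values, alpha, beta, horizon):
    """Double exponential smoothing (level plus trend).

    Returns `horizon` forecasts beyond the end of `values`.
    Assumed start: level = x_0, trend = x_1 - x_0.
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    level = values[0]
    trend = values[1] - values[0]
    for x in values[1:]:
        previous_level = level
        # Update the level from the new observation and the prior projection.
        level = alpha * x + (1 - alpha) * (level + trend)
        # Update the trend from the change in level.
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]


if __name__ == "__main__":
    sales = [10, 12, 14, 16, 18, 20]  # made-up data, not from the slides
    print(trailing_moving_average(sales, 3))
    print(exponential_smoothing(sales, 0.5))
    print(holt_forecast(sales, 0.5, 0.5, 2))
```

Because the made-up series is perfectly linear, the Holt forecast simply continues the line, which makes the level and trend updates easy to check by hand. Triple exponential smoothing extends the same recursion with a third, seasonal term and is omitted here to keep the sketch short.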