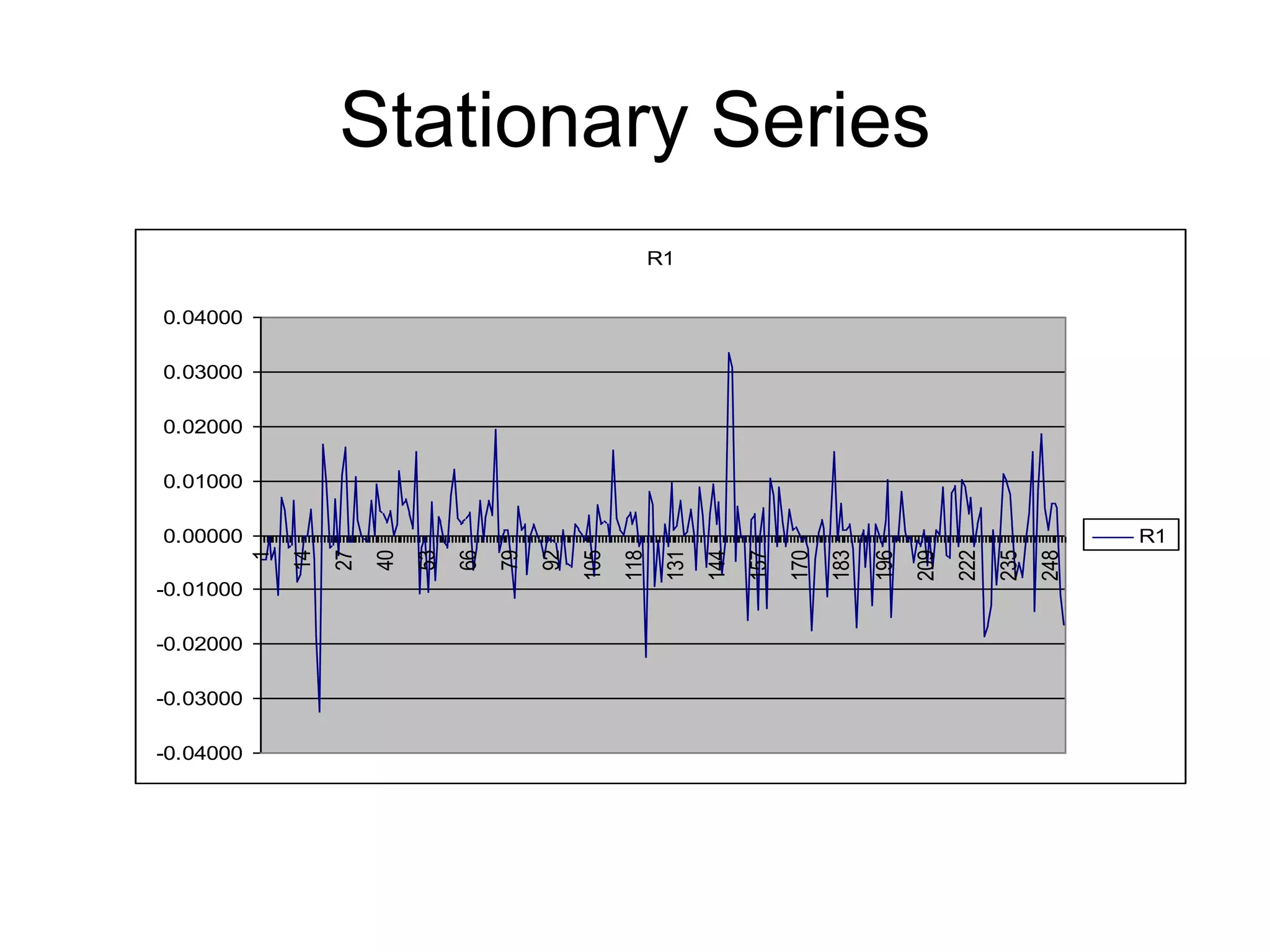

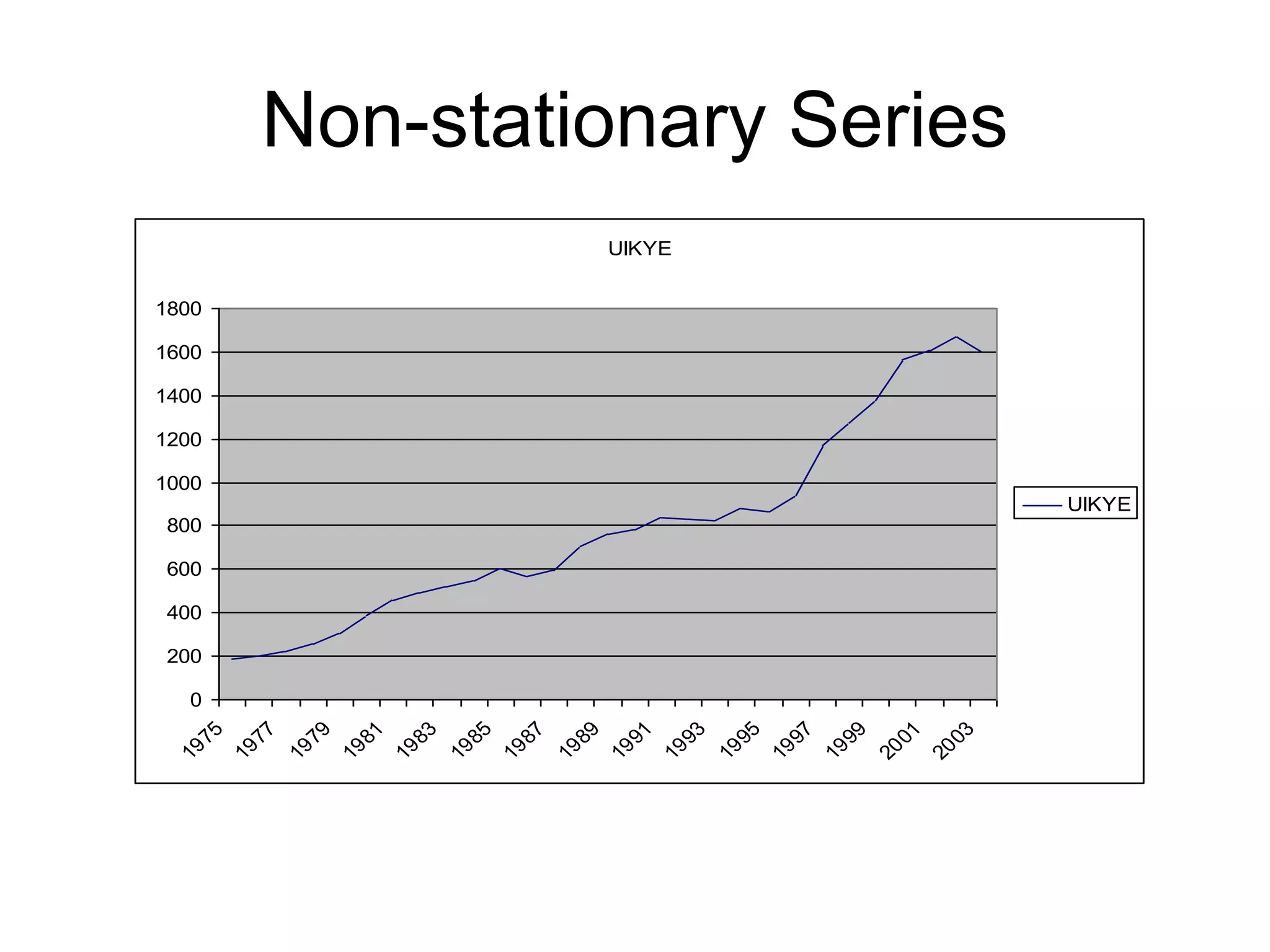

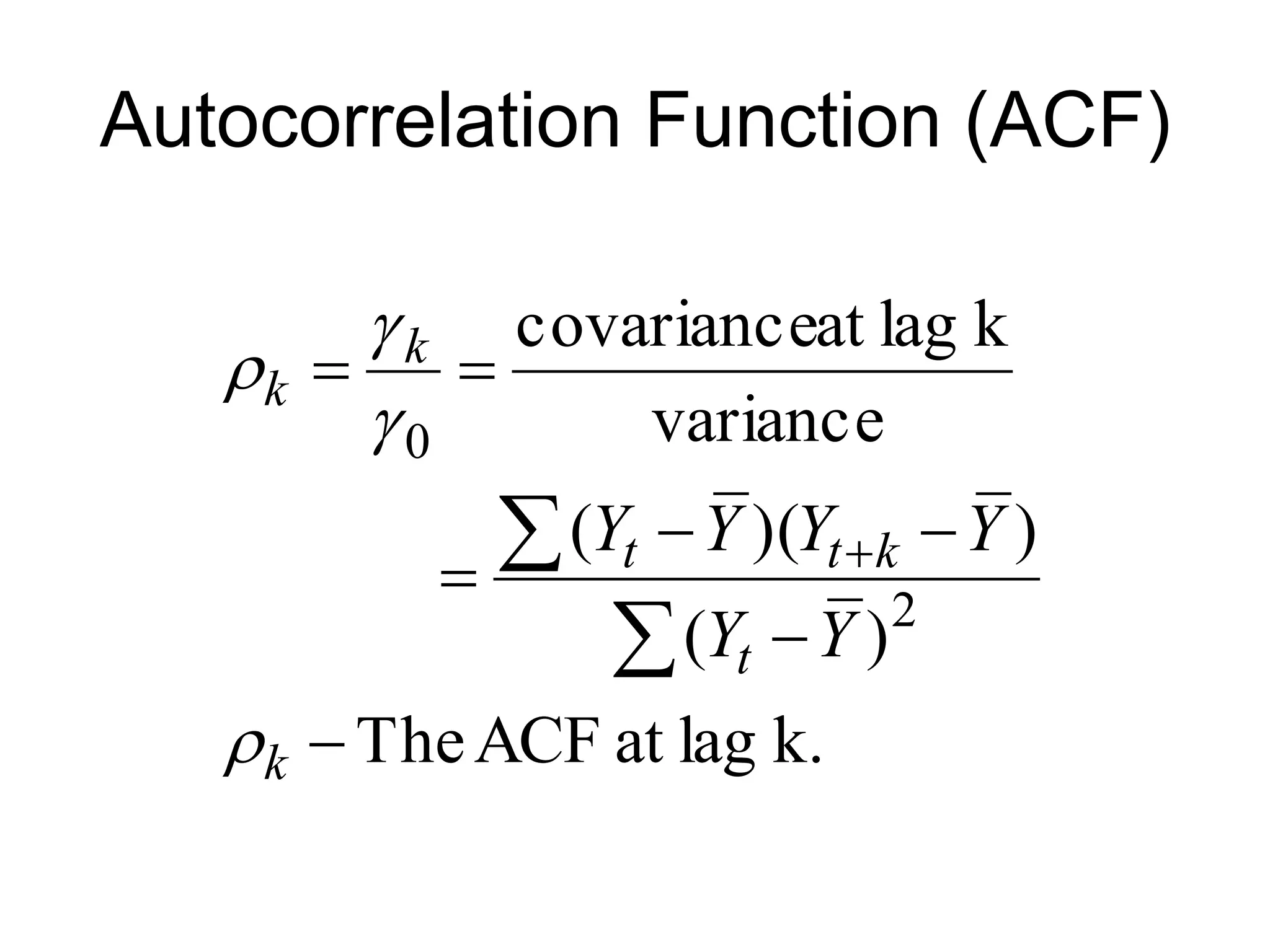

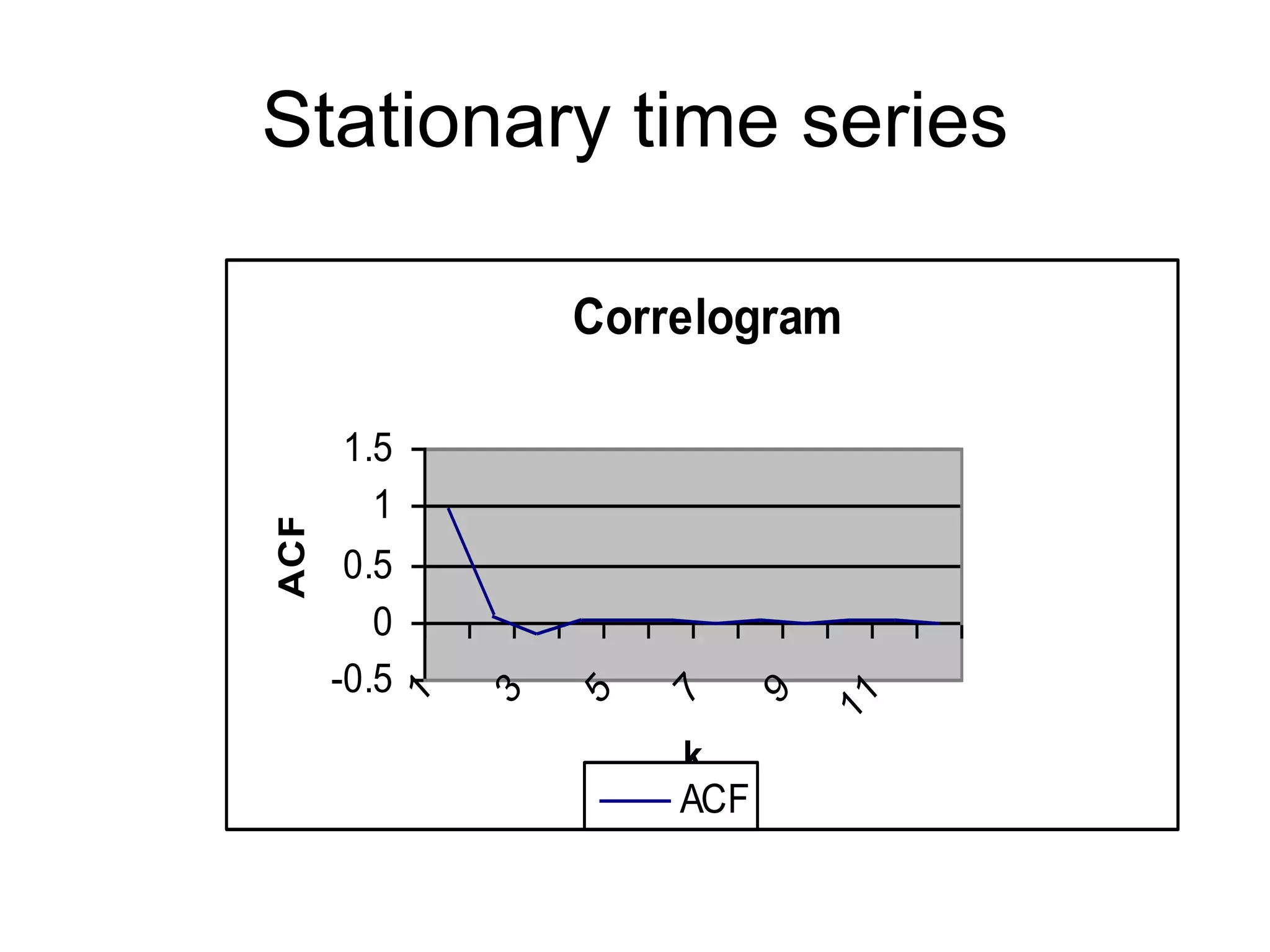

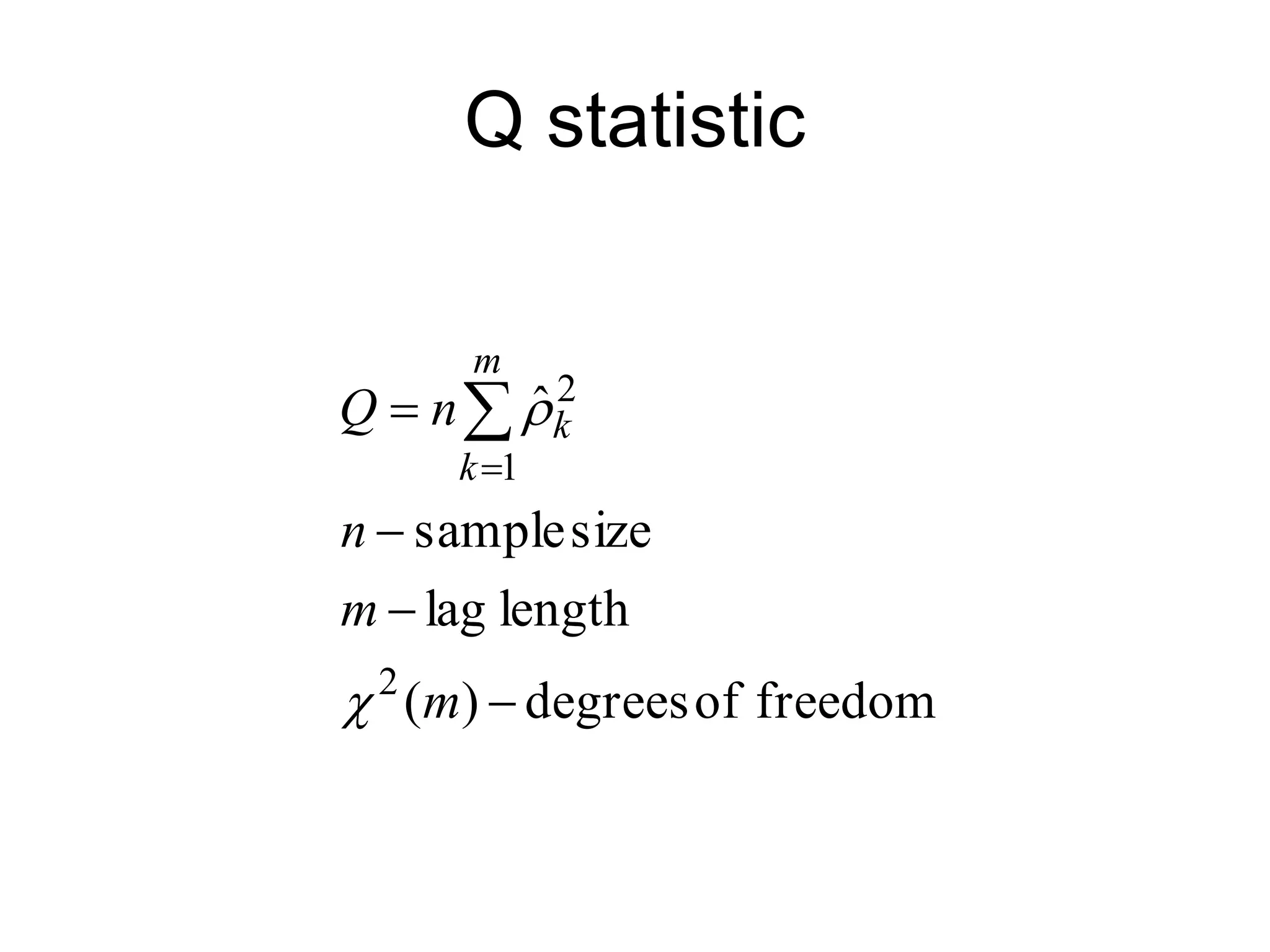

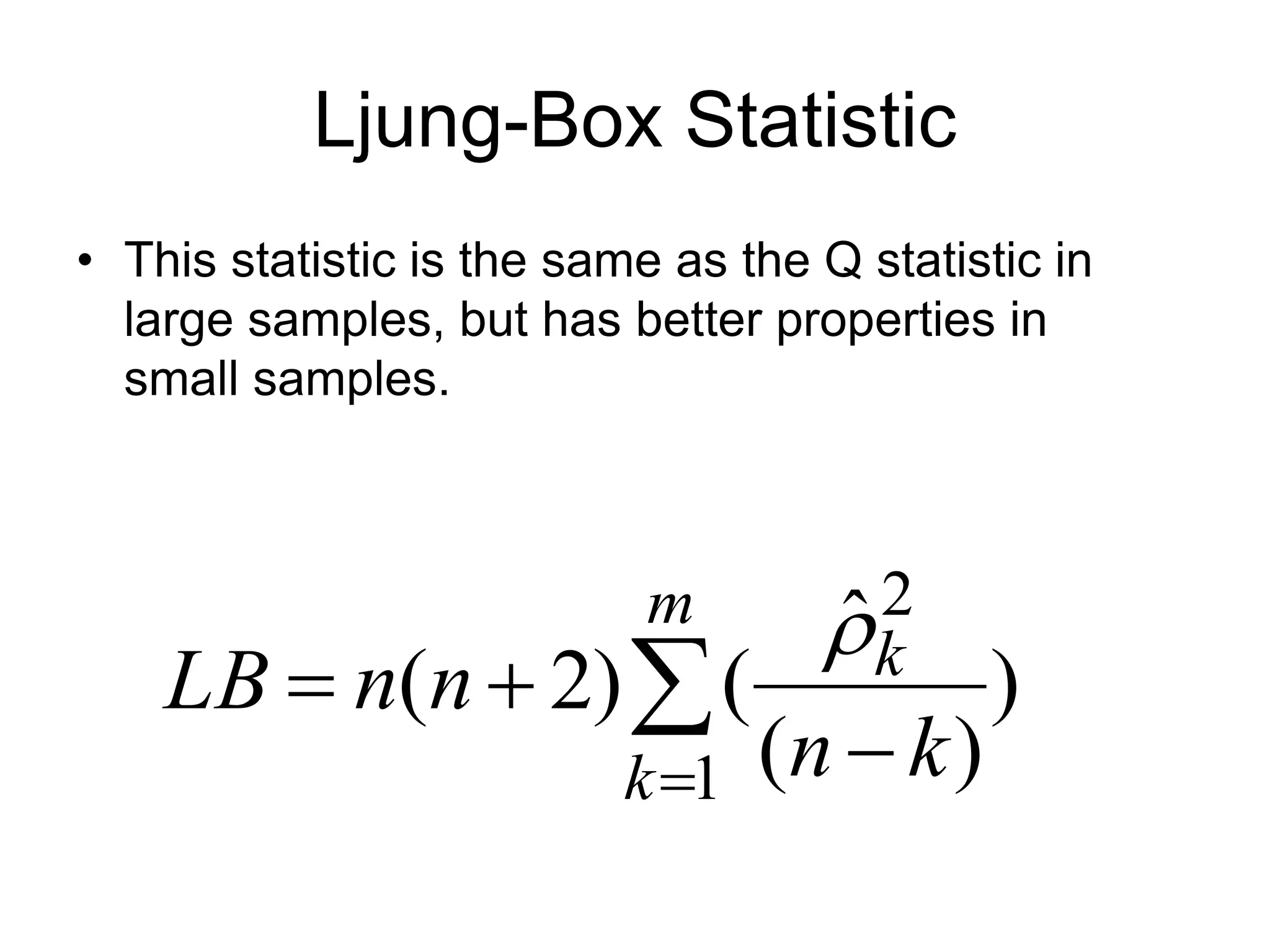

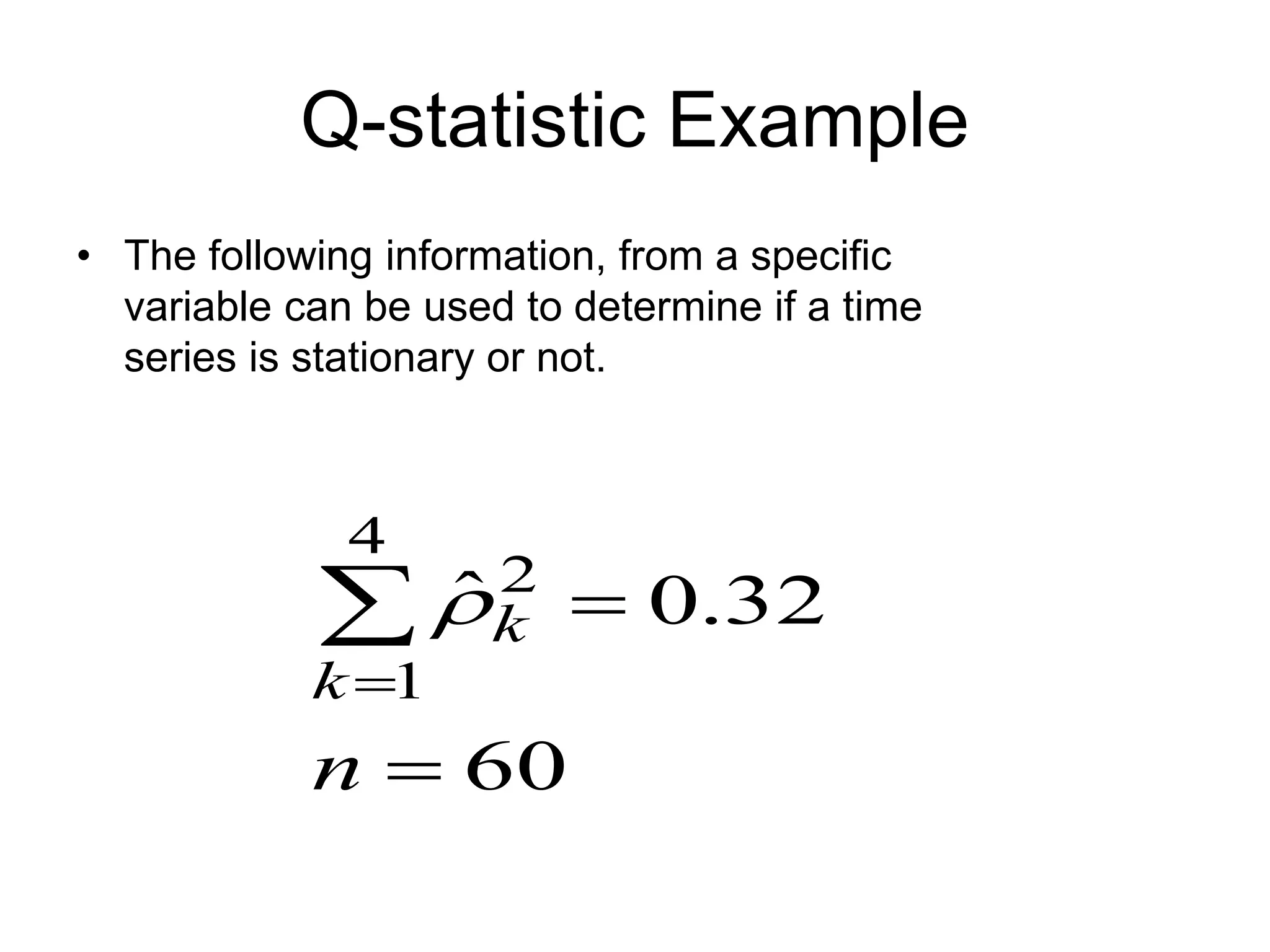

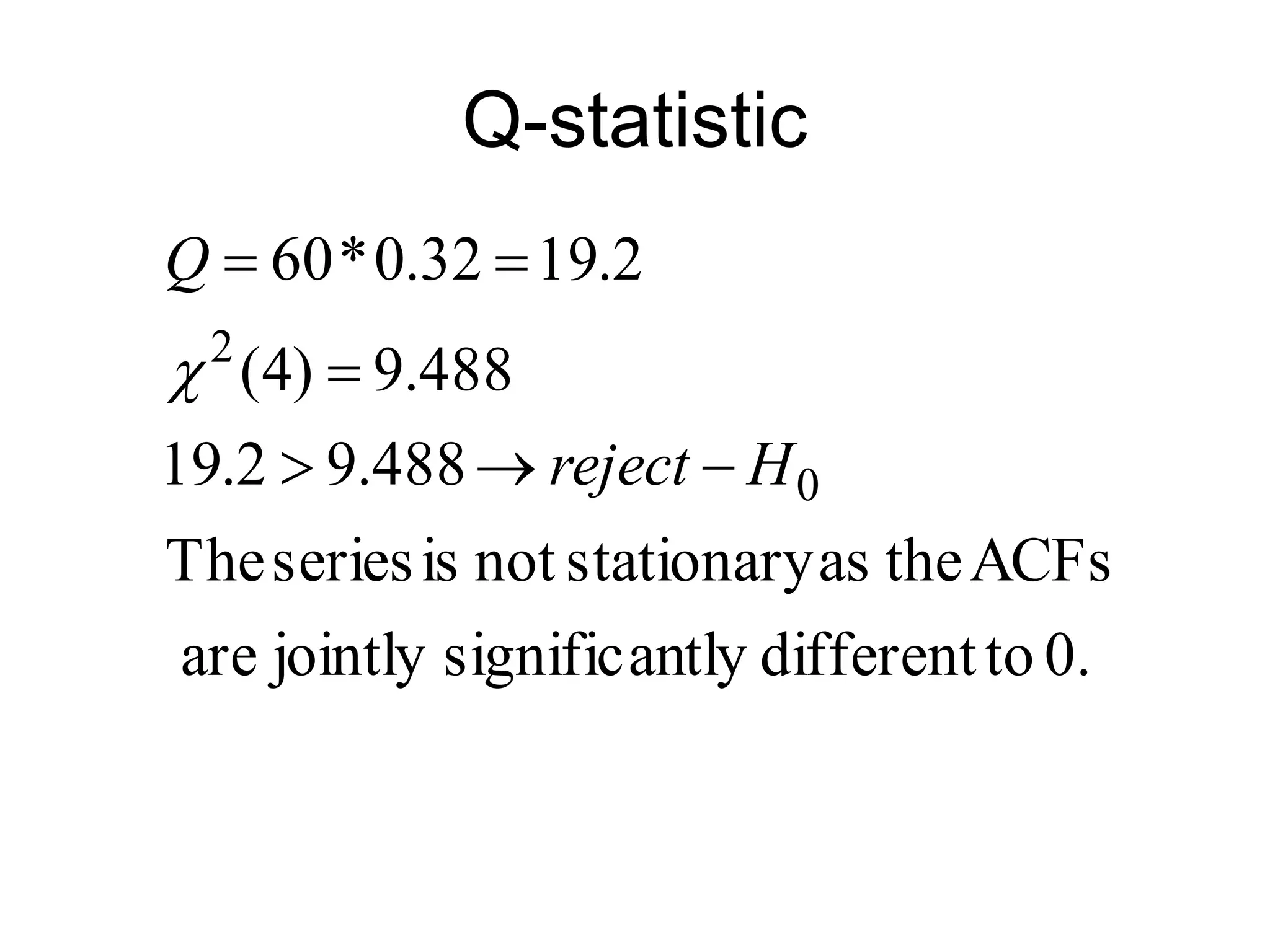

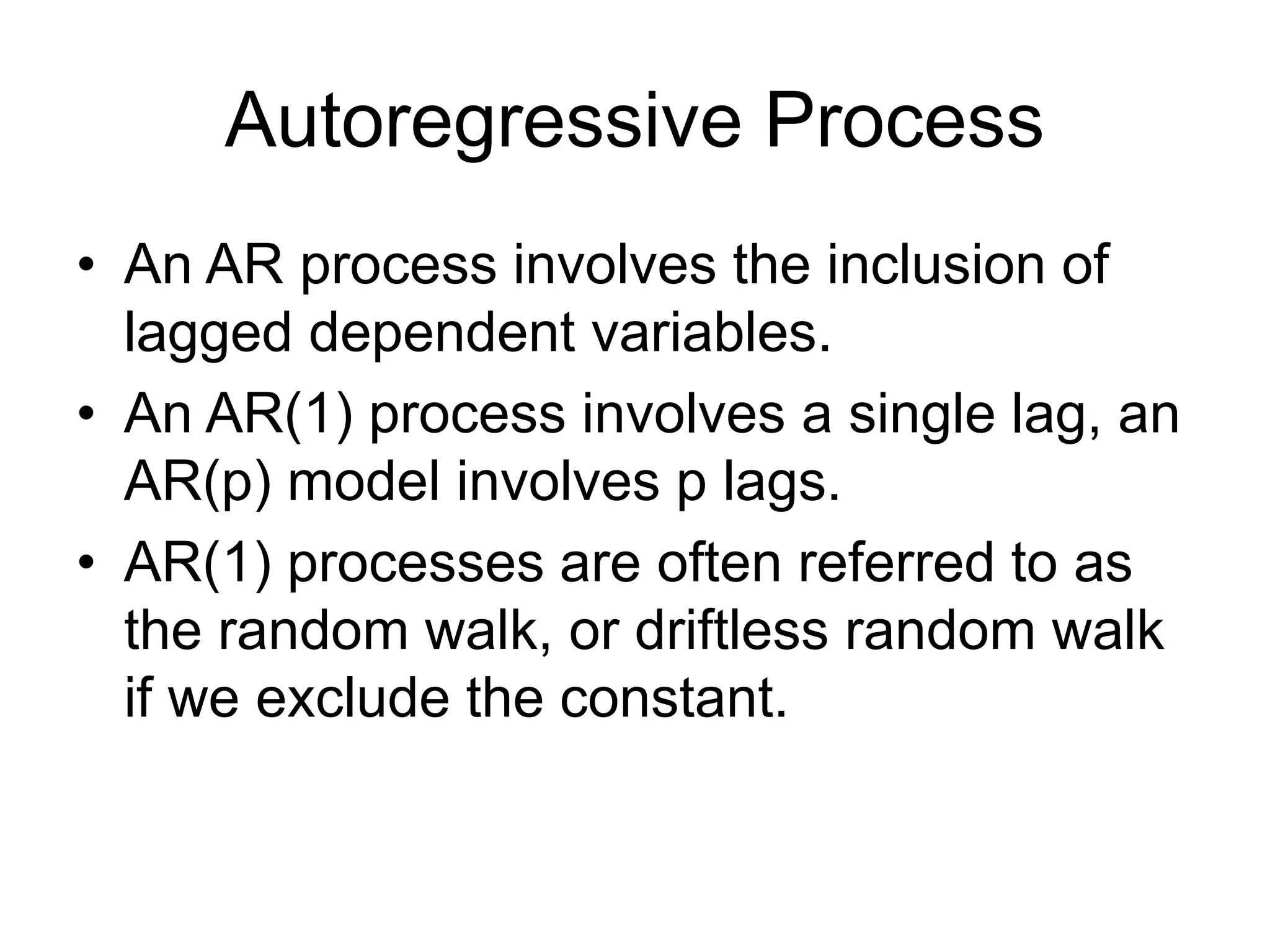

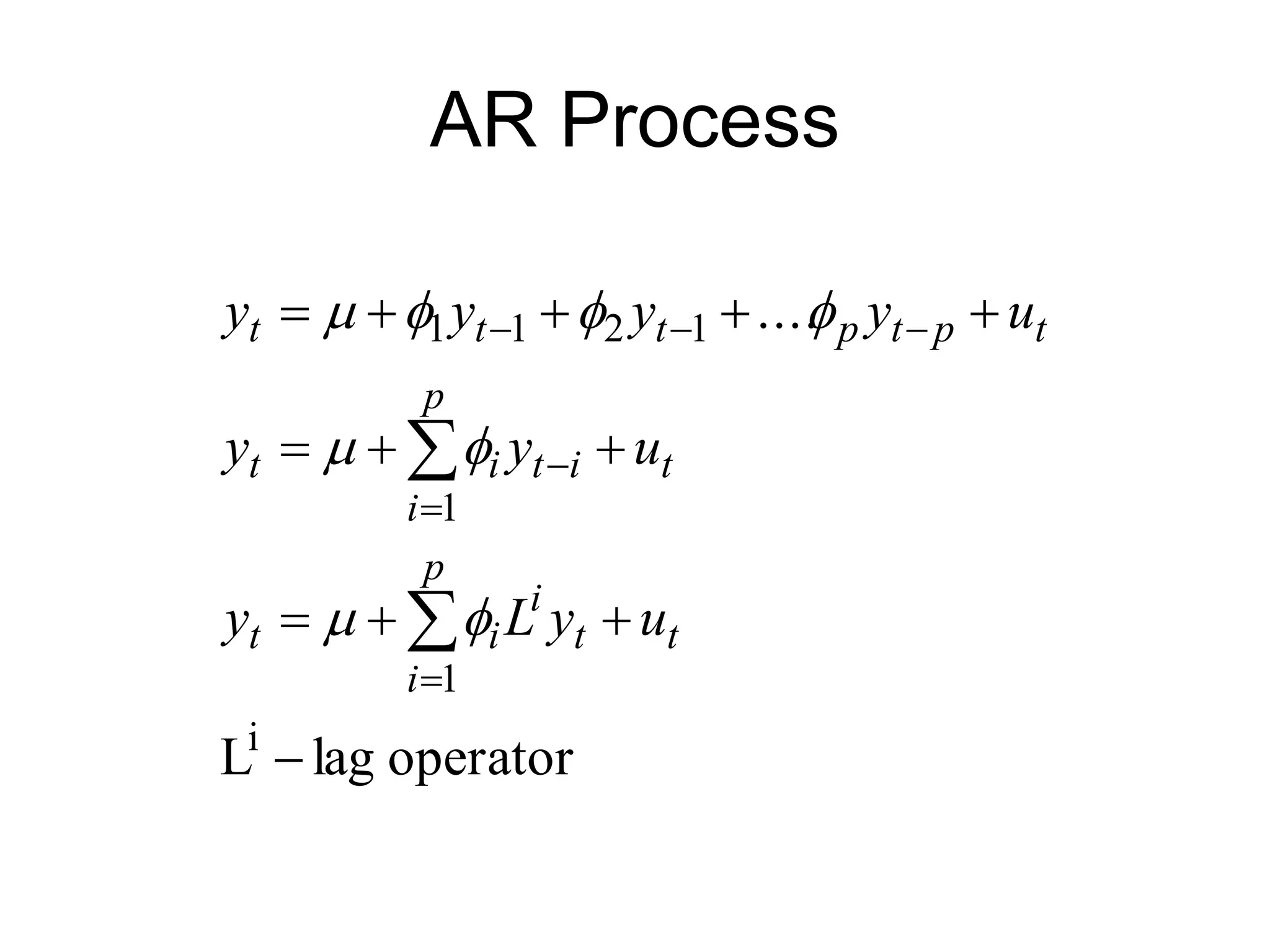

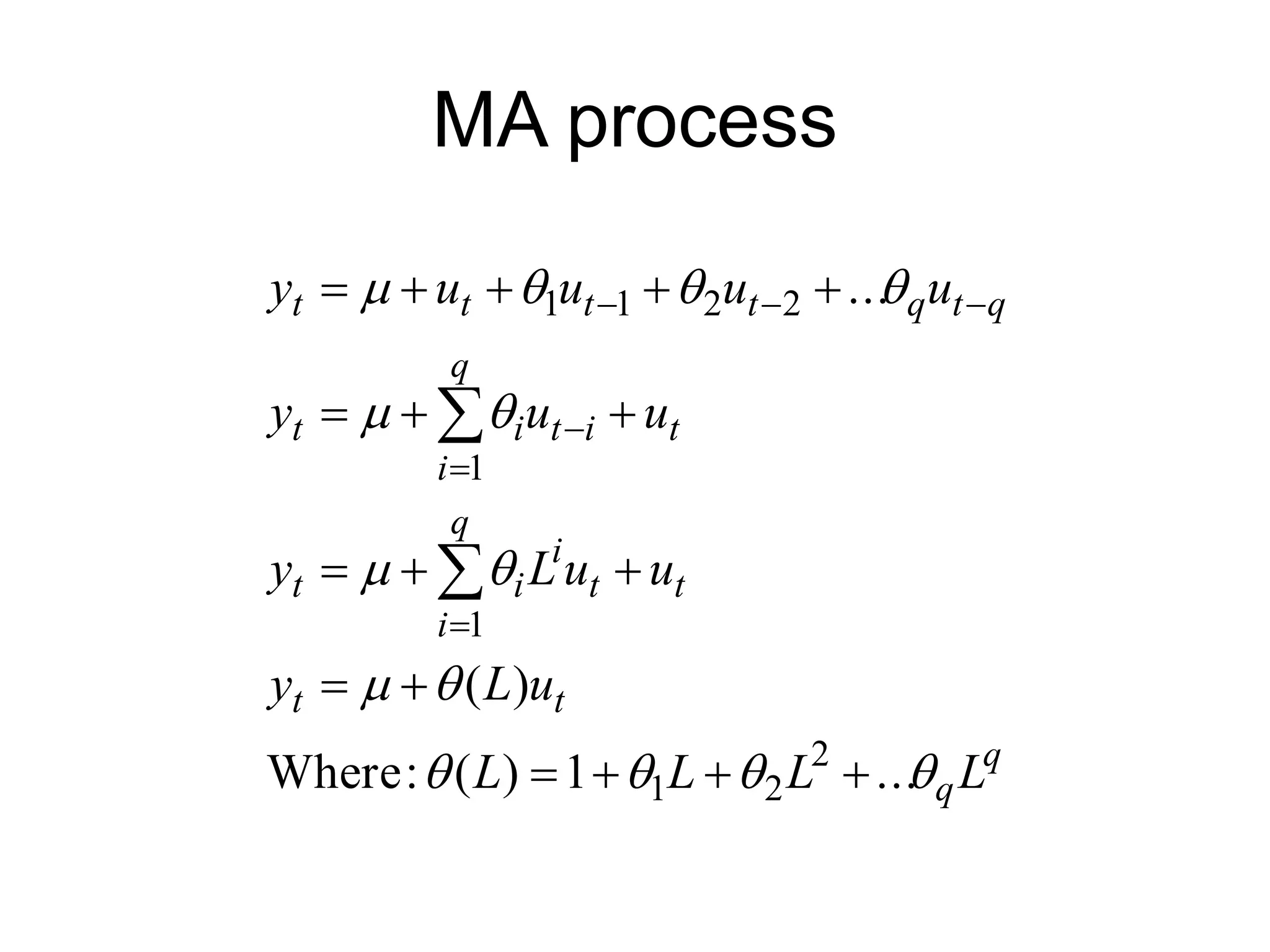

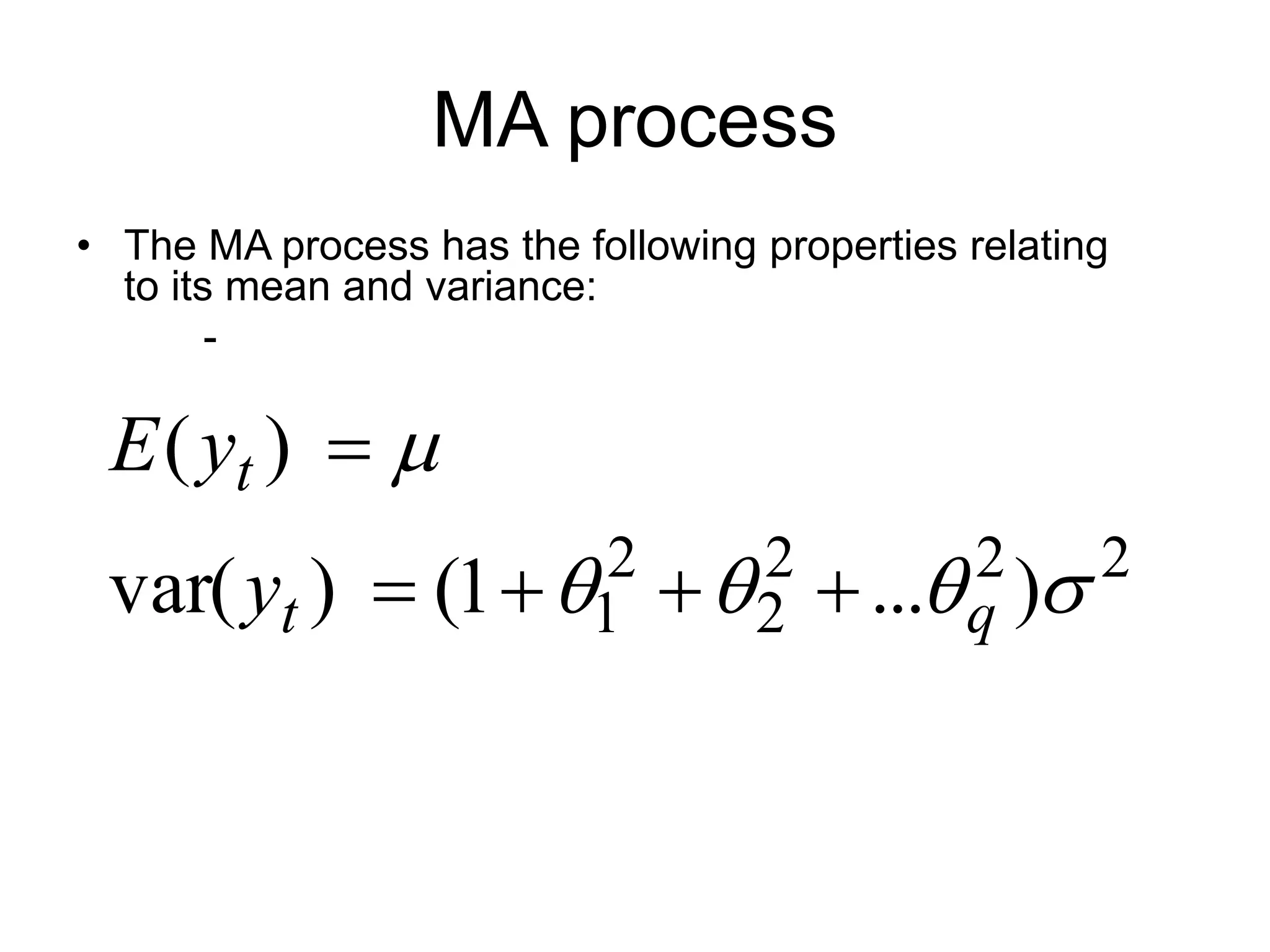

This document discusses stationarity, autocorrelation functions, and autoregressive integrated moving average (ARIMA) models. It defines stationarity and describes how autocorrelation coefficients and the Q-statistic are used to assess whether a series is stationary. An ARIMA model combines autoregressive (AR) terms, which are lagged values of the dependent variable, with moving average (MA) terms, which are lagged error terms; the "integrated" part refers to differencing the series to make it stationary. The document provides examples of AR and MA processes and discusses how to interpret their coefficients.
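As a rough illustration of these ideas, the sketch below simulates an AR(1) and an MA(1) process and computes sample autocorrelation coefficients and a Q-statistic using only NumPy. The function names, the coefficient values (0.7 and 0.5), the sample size, and the choice of the Box-Pierce form of Q (with the Ljung-Box correction as an option) are assumptions made for this example; they are not taken from the document itself.

```python
import numpy as np


def autocorr(x, max_lag):
    """Sample autocorrelation coefficients r_1 .. r_max_lag."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()                      # deviations from the sample mean
    denom = np.dot(d, d)                  # n times the sample variance
    return np.array([np.dot(d[k:], d[:-k]) / denom
                     for k in range(1, max_lag + 1)])


def q_statistic(x, max_lag, ljung_box=False):
    """Box-Pierce Q = n * sum(r_k^2); optionally the Ljung-Box variant.

    Under the null of no autocorrelation, Q is approximately
    chi-square distributed with max_lag degrees of freedom.
    """
    n = len(x)
    r = autocorr(x, max_lag)
    if ljung_box:
        lags = np.arange(1, max_lag + 1)
        return n * (n + 2) * np.sum(r ** 2 / (n - lags))
    return n * np.sum(r ** 2)


def simulate_ar1(phi, n, rng):
    """AR(1): y_t = phi * y_{t-1} + e_t (lagged dependent variable)."""
    e = rng.standard_normal(n)
    y = np.zeros(n)
    for t in range(1, n):
        y[t] = phi * y[t - 1] + e[t]
    return y


def simulate_ma1(theta, n, rng):
    """MA(1): y_t = e_t + theta * e_{t-1} (lagged error term)."""
    e = rng.standard_normal(n + 1)
    return e[1:] + theta * e[:-1]


if __name__ == "__main__":
    rng = np.random.default_rng(0)        # fixed seed, illustrative only
    ar = simulate_ar1(0.7, 5000, rng)     # hypothetical coefficient
    ma = simulate_ma1(0.5, 5000, rng)     # hypothetical coefficient
    print("AR(1) r_1..r_3:", autocorr(ar, 3))
    print("MA(1) r_1..r_3:", autocorr(ma, 3))
    print("AR(1) Q(10):", q_statistic(ar, 10))
```

In theory the AR(1) autocorrelations decay geometrically (r_k close to phi^k), while the MA(1) autocorrelations cut off after lag 1 (r_1 close to theta / (1 + theta^2), later lags close to zero). That contrast is one common way to read the coefficients of the two kinds of process.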