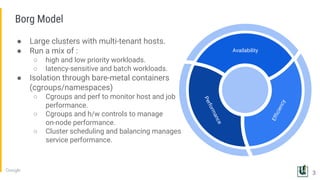

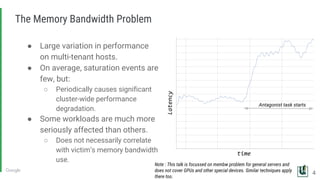

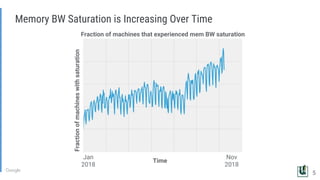

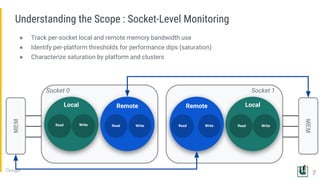

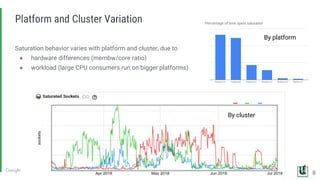

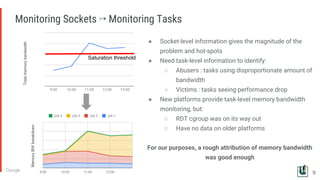

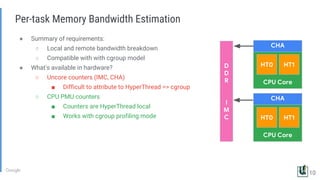

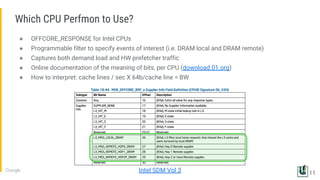

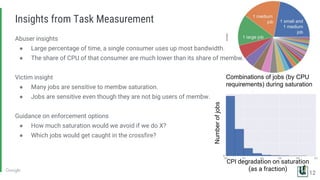

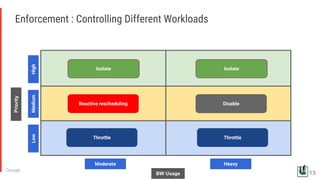

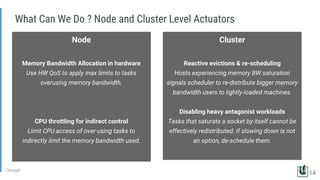

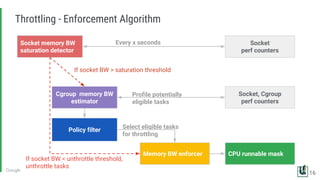

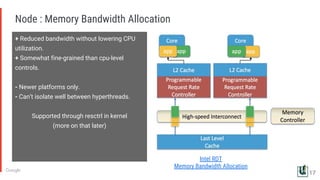

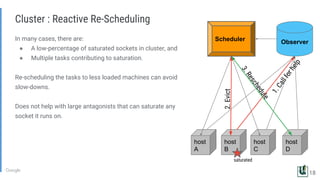

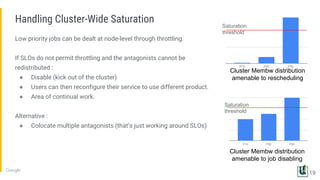

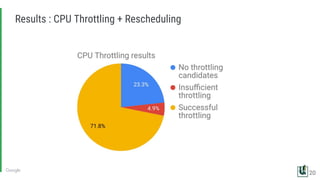

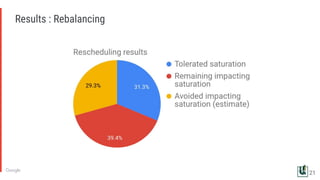

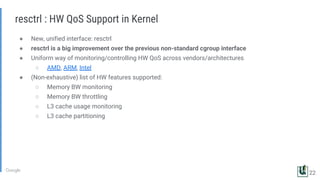

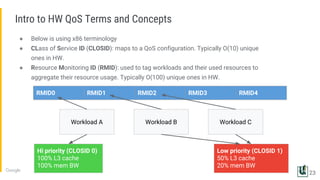

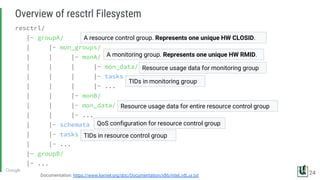

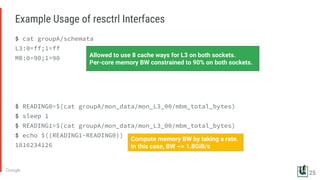

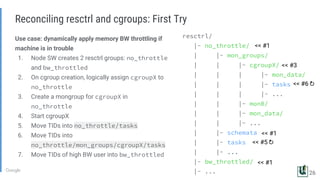

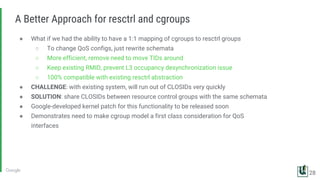

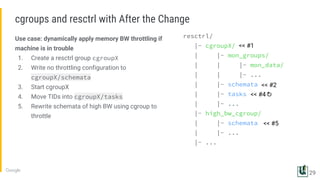

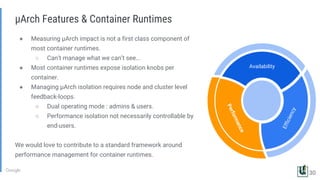

The document discusses the challenges of managing memory bandwidth in large multi-tenant clusters. It focuses on how to monitor and isolate workloads so that one task's memory traffic does not degrade the performance of others. It examines strategies for identifying 'abuser' tasks (those that saturate memory bandwidth) and 'victim' tasks (those whose performance suffers as a result). It then proposes enforcement mechanisms, such as throttling and rescheduling, to relieve memory bandwidth saturation. The use of hardware quality-of-service (QoS) features, exposed on Linux through the resctrl interface, is highlighted as a key innovation for improving memory bandwidth management across different platforms.
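The monitor, identify, and throttle loop summarized above can be sketched against the Linux resctrl filesystem. This is a minimal illustration under stated assumptions, not the document's implementation. It assumes the following:

- resctrl is mounted at `/sys/fs/resctrl` on hardware with memory bandwidth monitoring and allocation support.
- Each workload already has its own control group directory.

The per-domain `mon_data/mon_L3_*/mbm_total_bytes` counters and the `schemata` file are standard resctrl files. The functions `snapshot`, `bandwidth`, `find_abusers`, and `throttle`, and the 50% share threshold, are hypothetical. Two further assumptions apply. First, the running kernel must accept a schemata write that contains only the `MB:` line. Second, the `MB` value is treated as a percentage, which matches Intel MBA; other platforms may interpret it differently.

```python
from pathlib import Path
from typing import Dict, Iterable, List

# Usual resctrl mount point; every function takes an explicit path so the
# sketch can also be exercised against a fake directory tree.
RESCTRL_ROOT = Path("/sys/fs/resctrl")

# Top-level entries that are not user-created control groups.
RESERVED = {"info", "mon_groups", "mon_data"}


def read_group_bytes(group_dir: Path) -> int:
    """Sum mbm_total_bytes over every L3 monitoring domain of one group."""
    total = 0
    for domain in sorted((group_dir / "mon_data").glob("mon_L3_*")):
        value = (domain / "mbm_total_bytes").read_text().strip()
        if value.isdigit():  # skip non-numeric readings such as "Unavailable"
            total += int(value)
    return total


def snapshot(root: Path = RESCTRL_ROOT) -> Dict[str, int]:
    """Read the cumulative byte counter of every control group under root."""
    return {
        g.name: read_group_bytes(g)
        for g in sorted(root.iterdir())
        if g.is_dir() and g.name not in RESERVED
    }


def bandwidth(before: Dict[str, int], after: Dict[str, int],
              seconds: float) -> Dict[str, float]:
    """Turn two counter snapshots taken `seconds` apart into bytes/second."""
    return {
        name: max(0, after[name] - before[name]) / seconds  # clamp negative deltas
        for name in after
        if name in before
    }


def find_abusers(rates: Dict[str, float], share: float = 0.5) -> List[str]:
    """Flag groups consuming more than `share` of total observed bandwidth.

    The 0.5 default is a hypothetical policy knob, not a recommended value.
    """
    total = sum(rates.values())
    if total == 0:
        return []
    return sorted(name for name, rate in rates.items() if rate / total > share)


def throttle(group_dir: Path, domains: Iterable[int], percent: int) -> str:
    """Cap a control group's memory bandwidth by writing an MB schemata line."""
    line = "MB:" + ";".join(f"{d}={percent}" for d in domains)
    (group_dir / "schemata").write_text(line + "\n")
    return line
```

To use the sketch, call `snapshot()` twice a fixed interval apart and pass both results and the interval to `bandwidth`. Then call `throttle(RESCTRL_ROOT / name, domains=[0], percent=20)` for each name returned by `find_abusers`. Writing to a real `schemata` file requires root privileges. The sketch does not attempt victim detection, because bandwidth counters alone do not show which tasks are suffering. It also does not model rescheduling, which would replace the `throttle` call with a request to the cluster scheduler.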