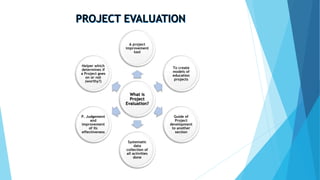

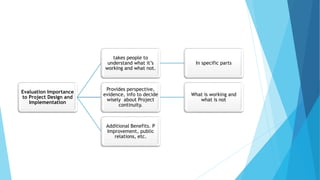

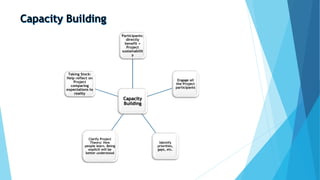

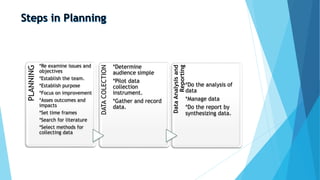

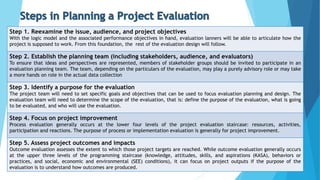

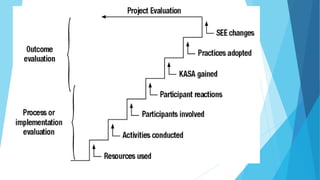

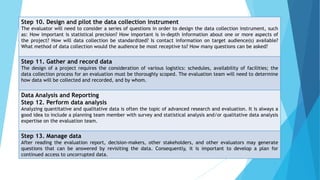

This document provides guidance on project evaluation. It explains what project evaluation is, why it matters in project design and implementation, and what additional benefits it brings, such as project improvement and capacity building. It outlines the evaluation process: planning, data collection, analysis, and reporting. Key steps include examining issues and objectives, establishing a team, identifying the purpose, focusing on improvement, assessing outcomes and impacts, and creating a report to synthesize findings. The goal is to help determine what is and is not working so that the project can be improved.