This document summarizes the challenges and solutions for maintaining large PostgreSQL databases at Emma, including:

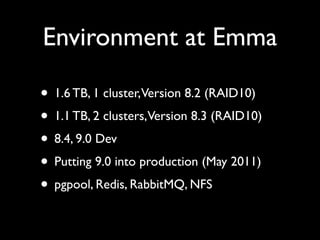

- Maintaining terabytes of data across multiple clusters up to version 9.0

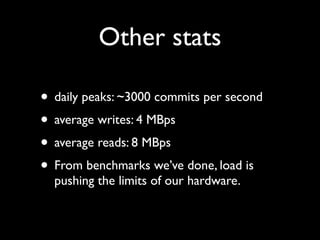

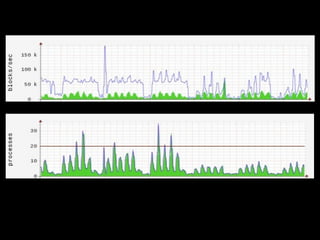

- Facing performance issues when the hardware load was pushed to its limits

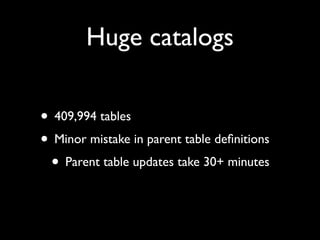

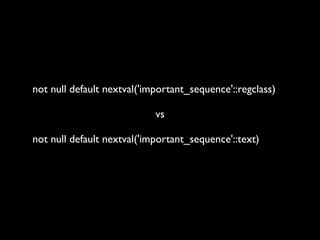

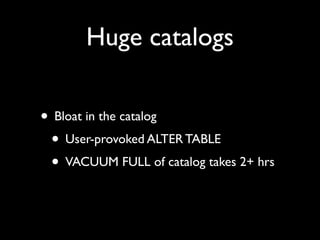

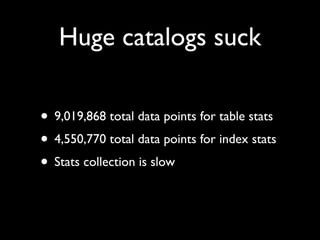

- Dealing with huge catalogs containing millions of data points that caused slow performance

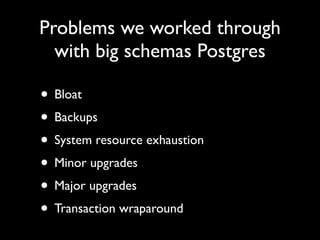

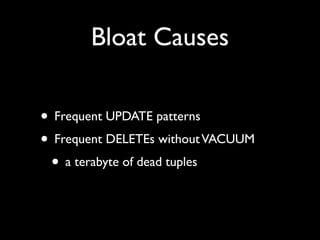

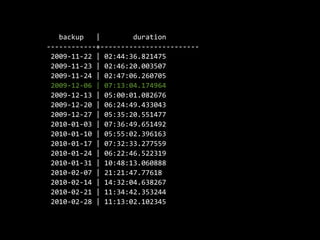

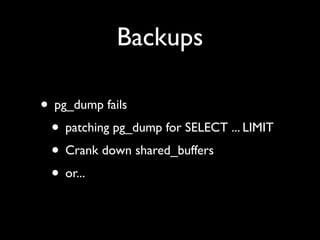

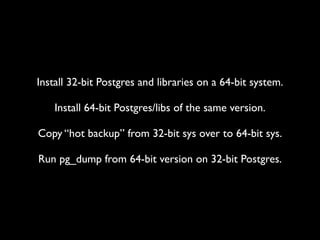

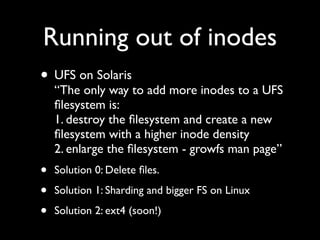

- Addressing problems like bloat, backups that took hours, system resource exhaustion, and transaction wraparound issues

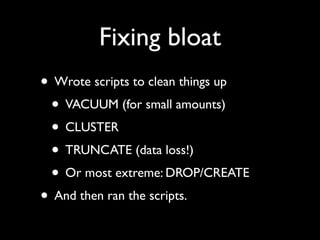

- Implementing solutions such as scripts to clean up bloat, sharding to a Linux filesystem, and increasing autovacuum thresholds