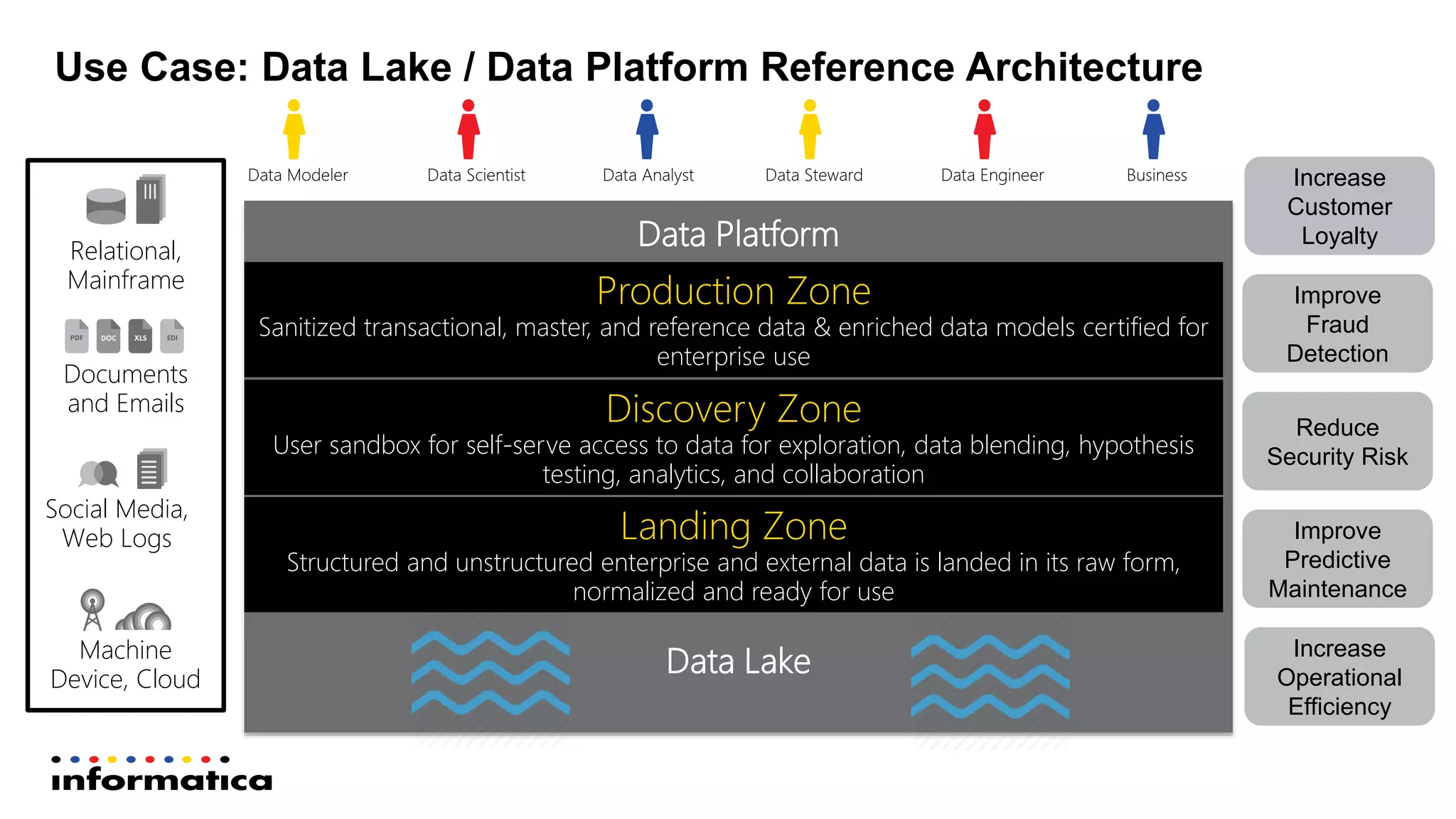

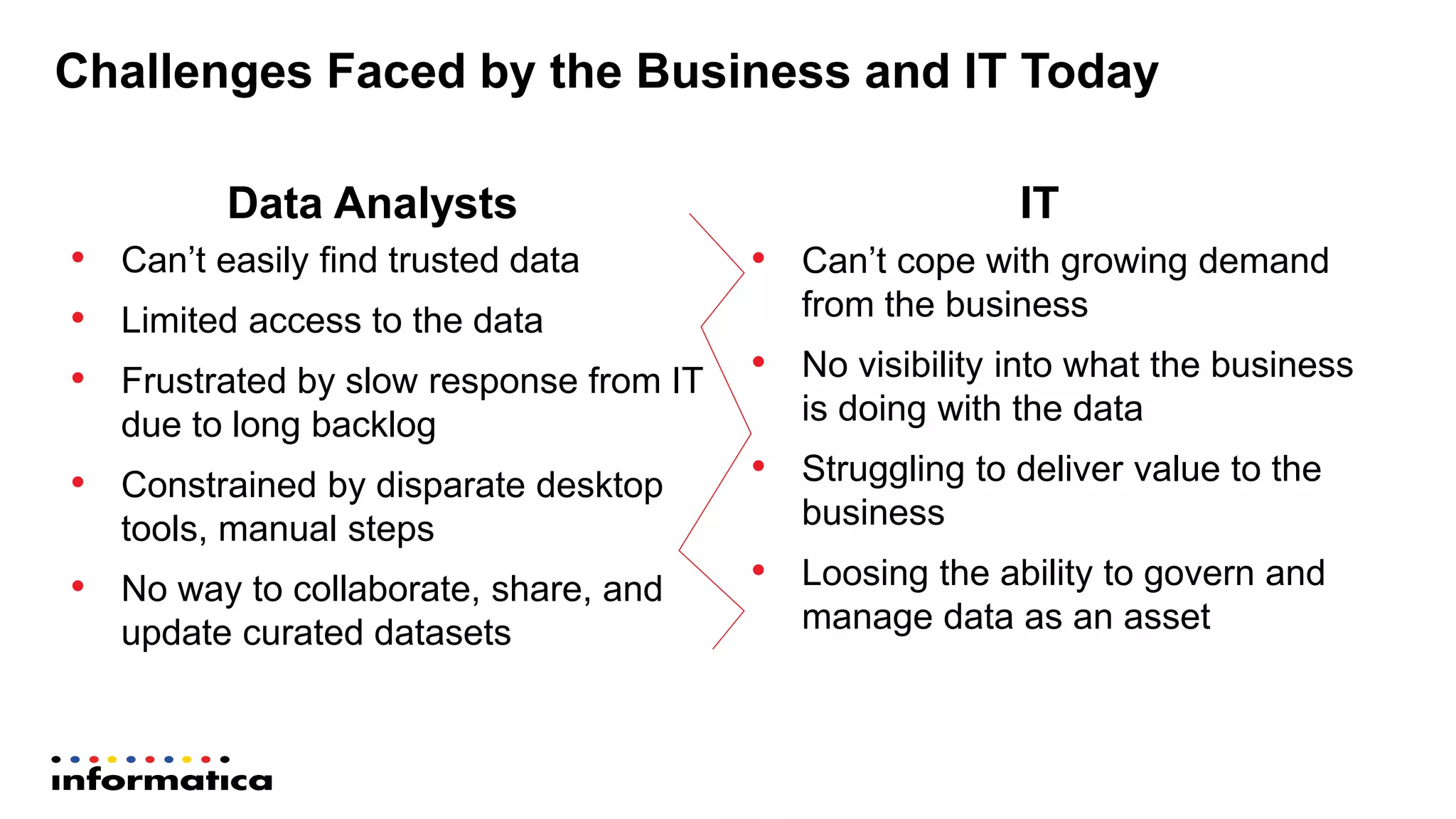

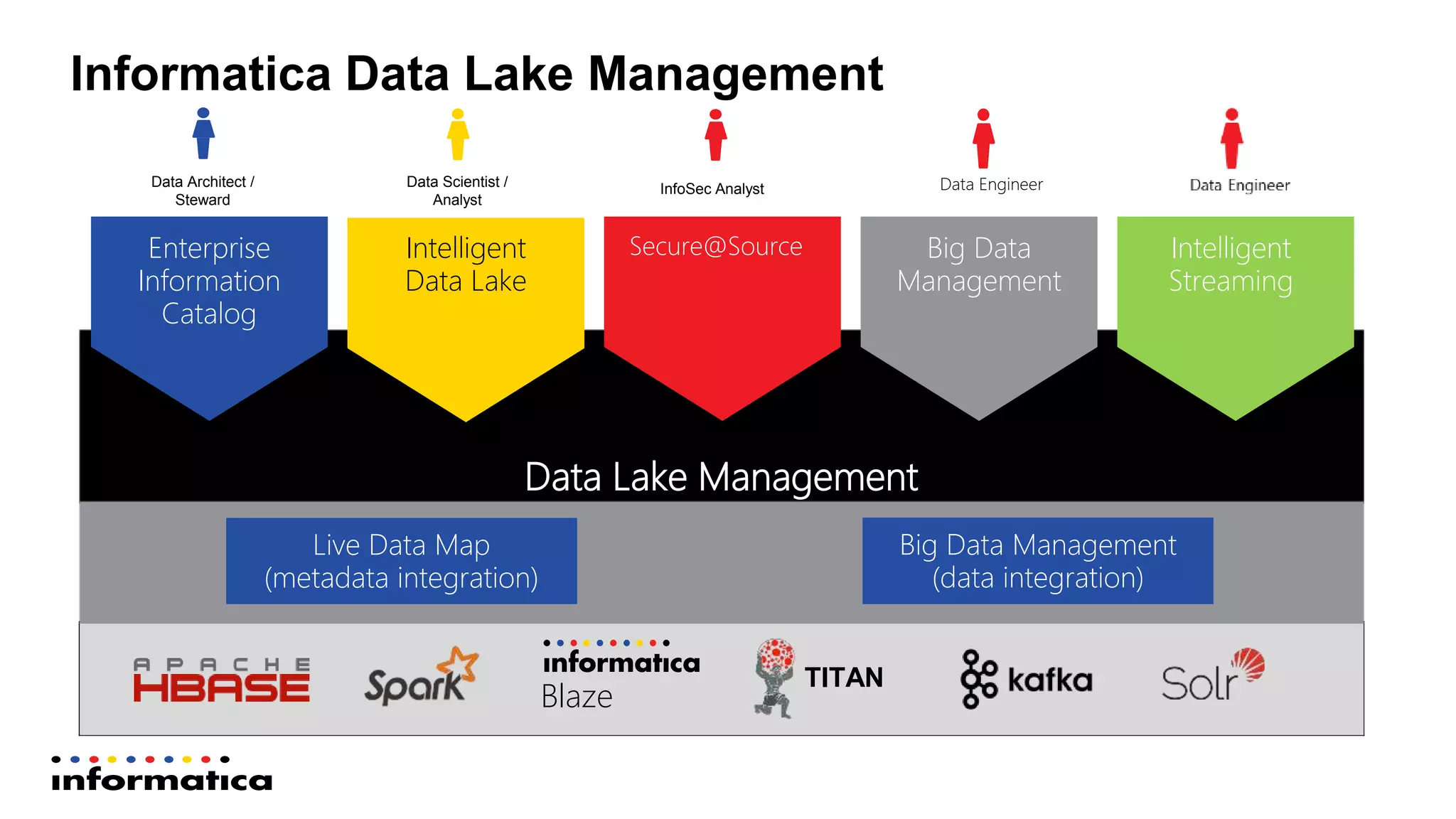

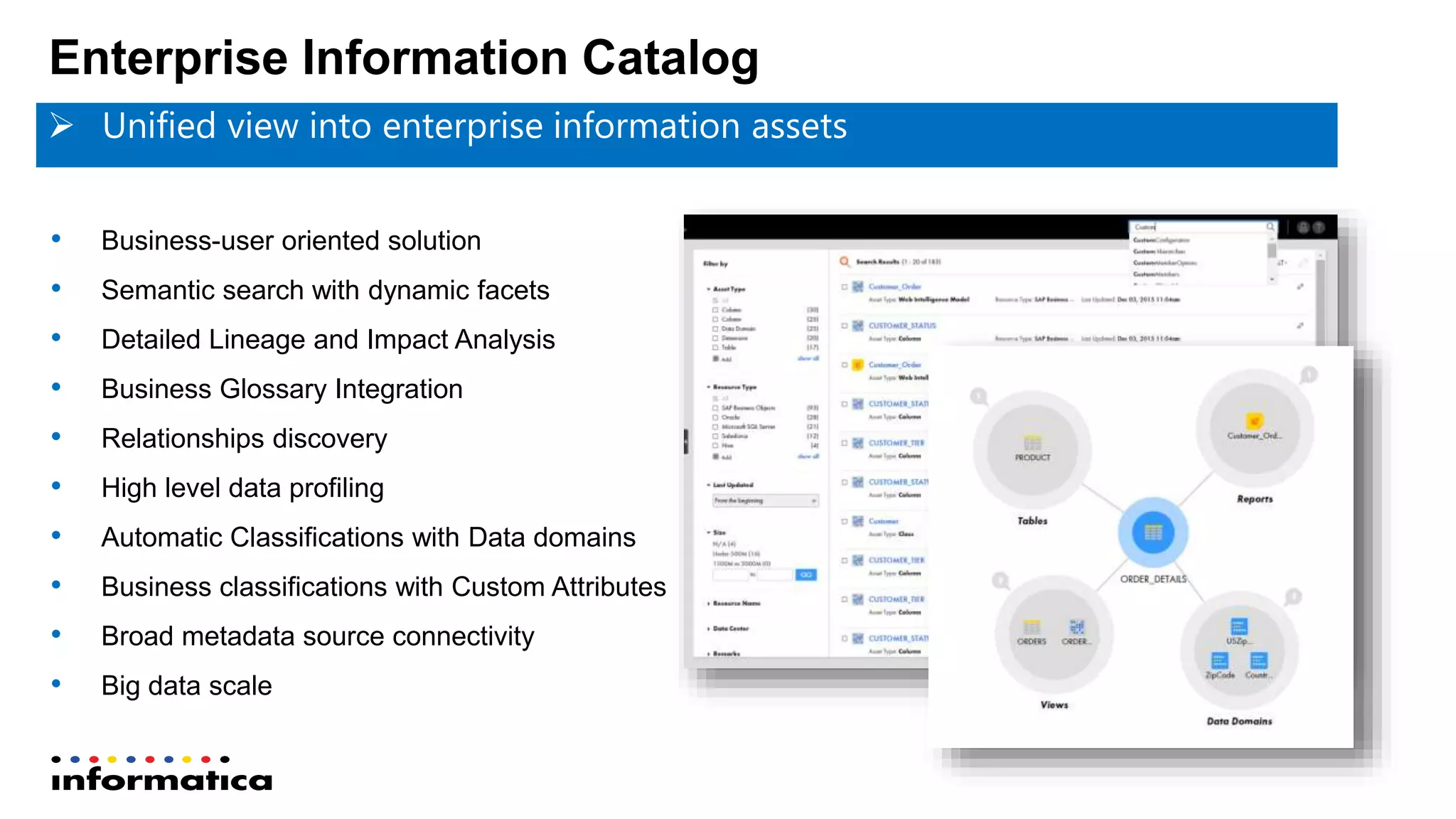

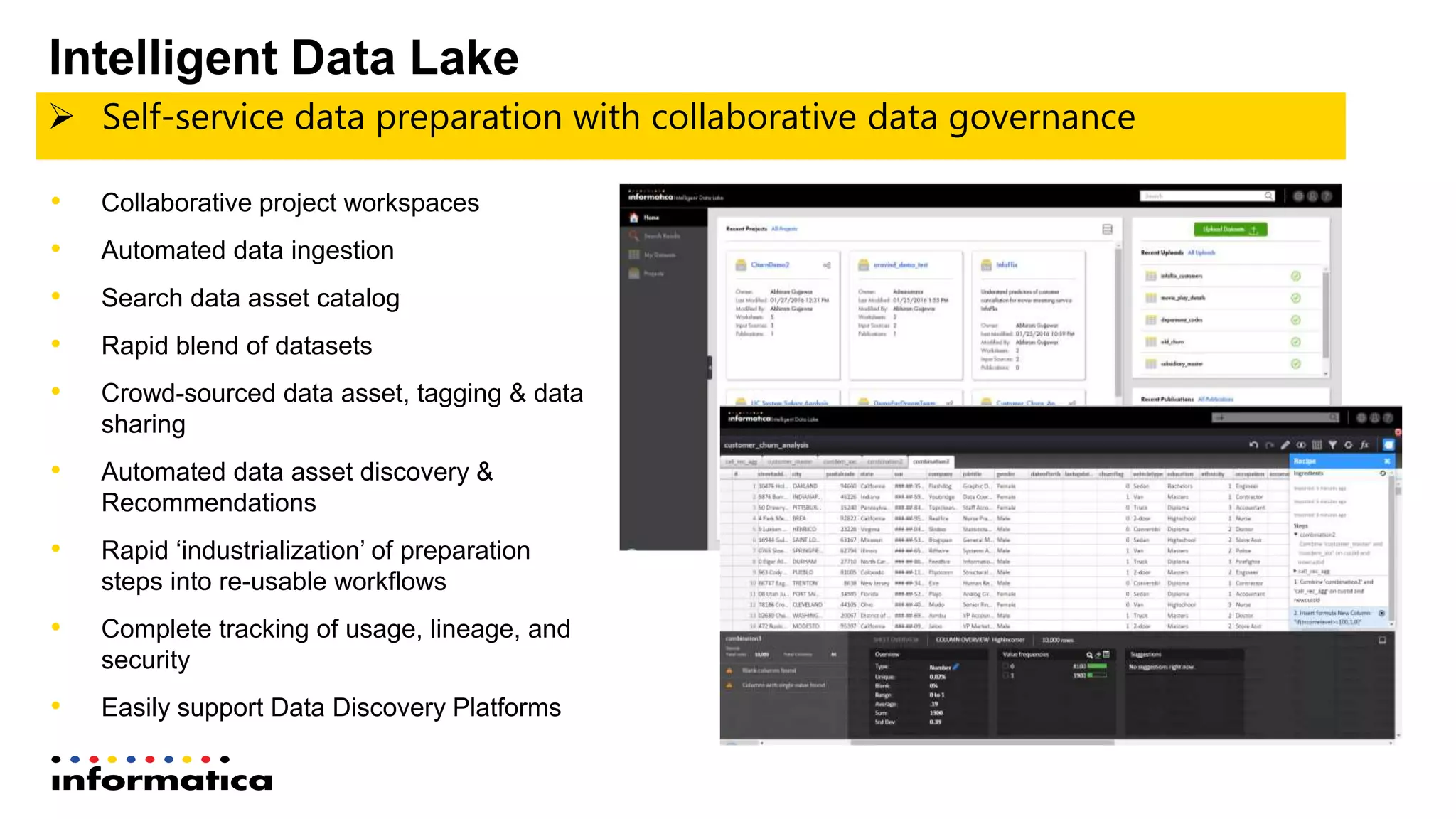

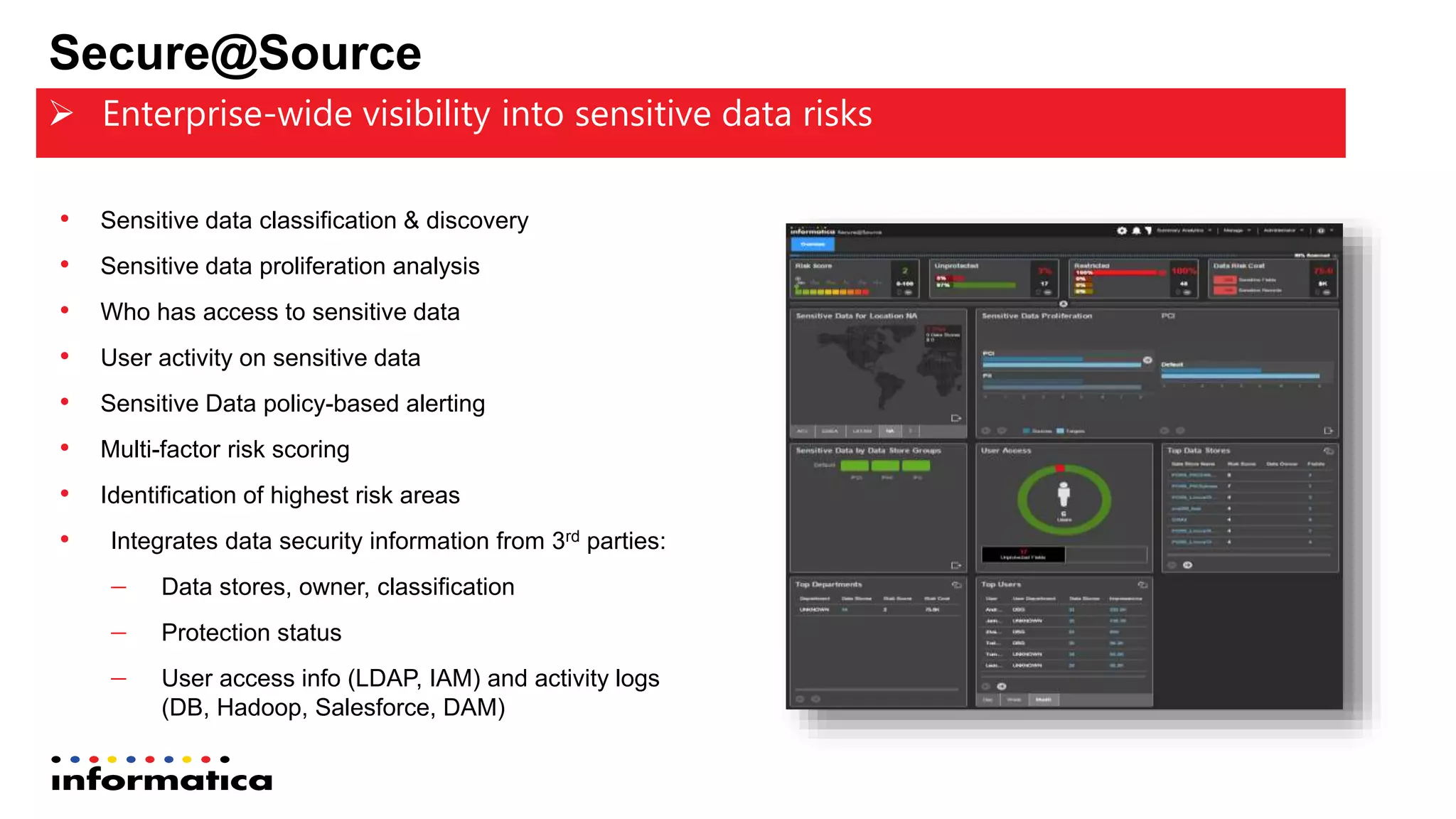

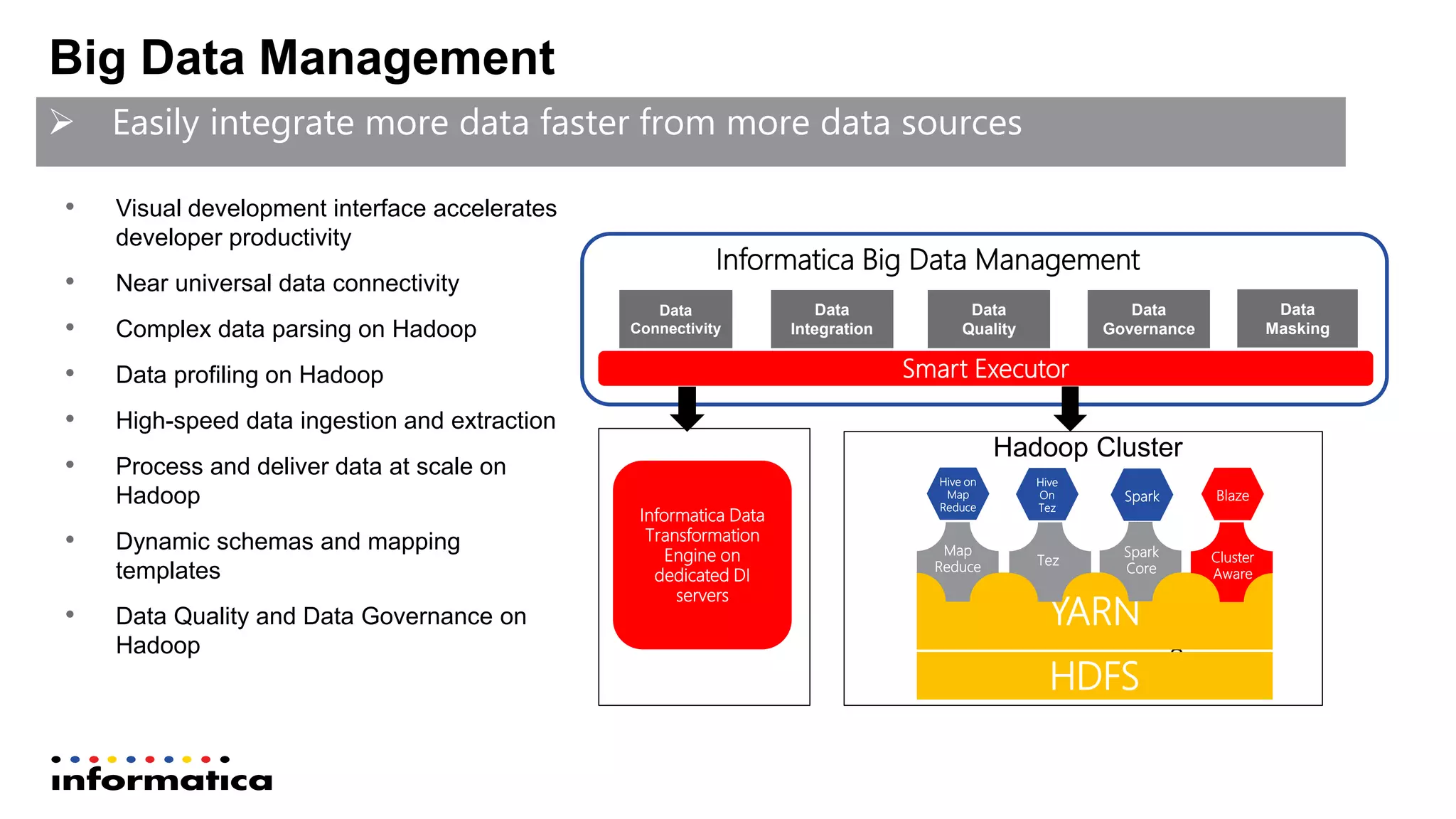

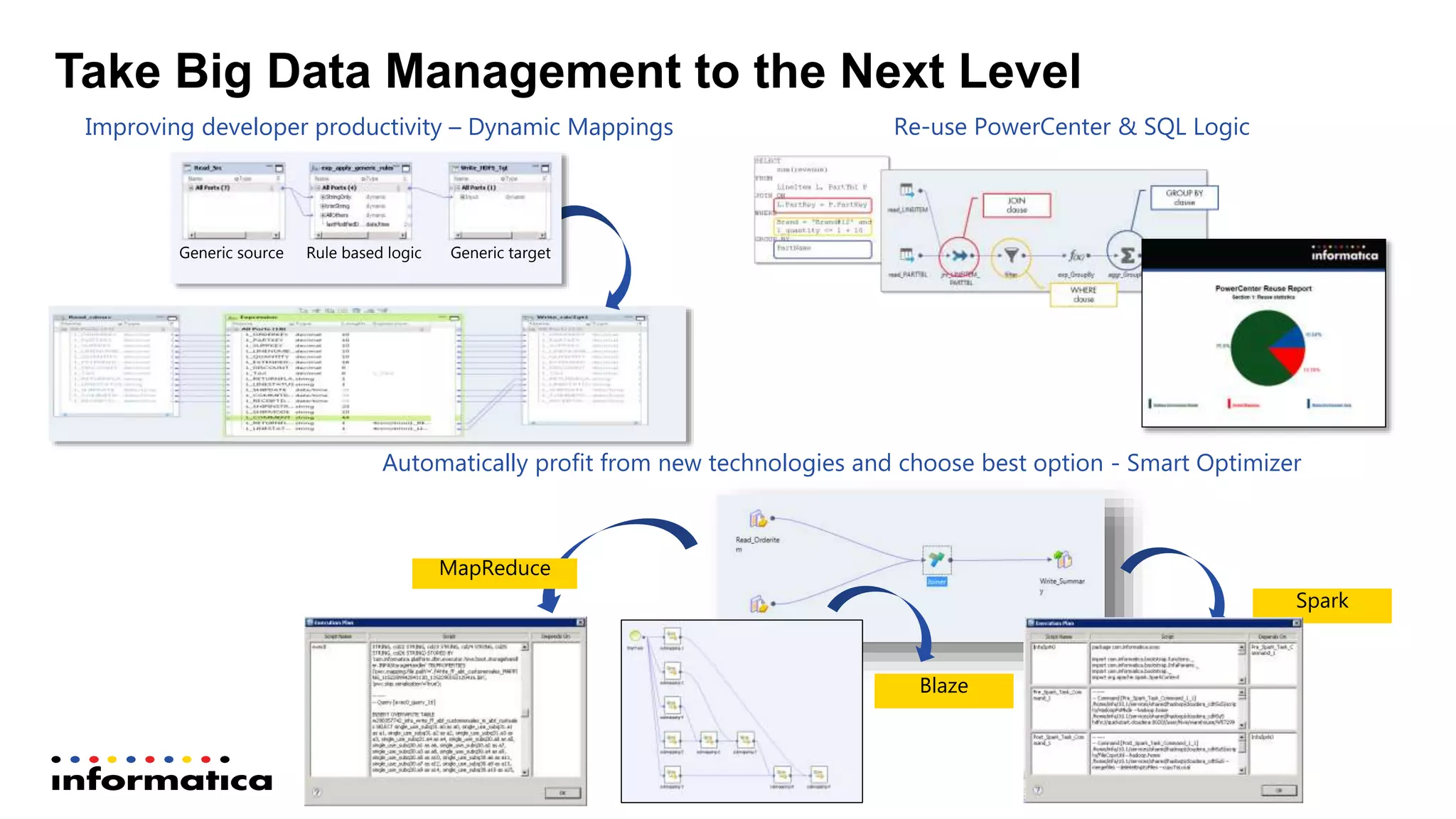

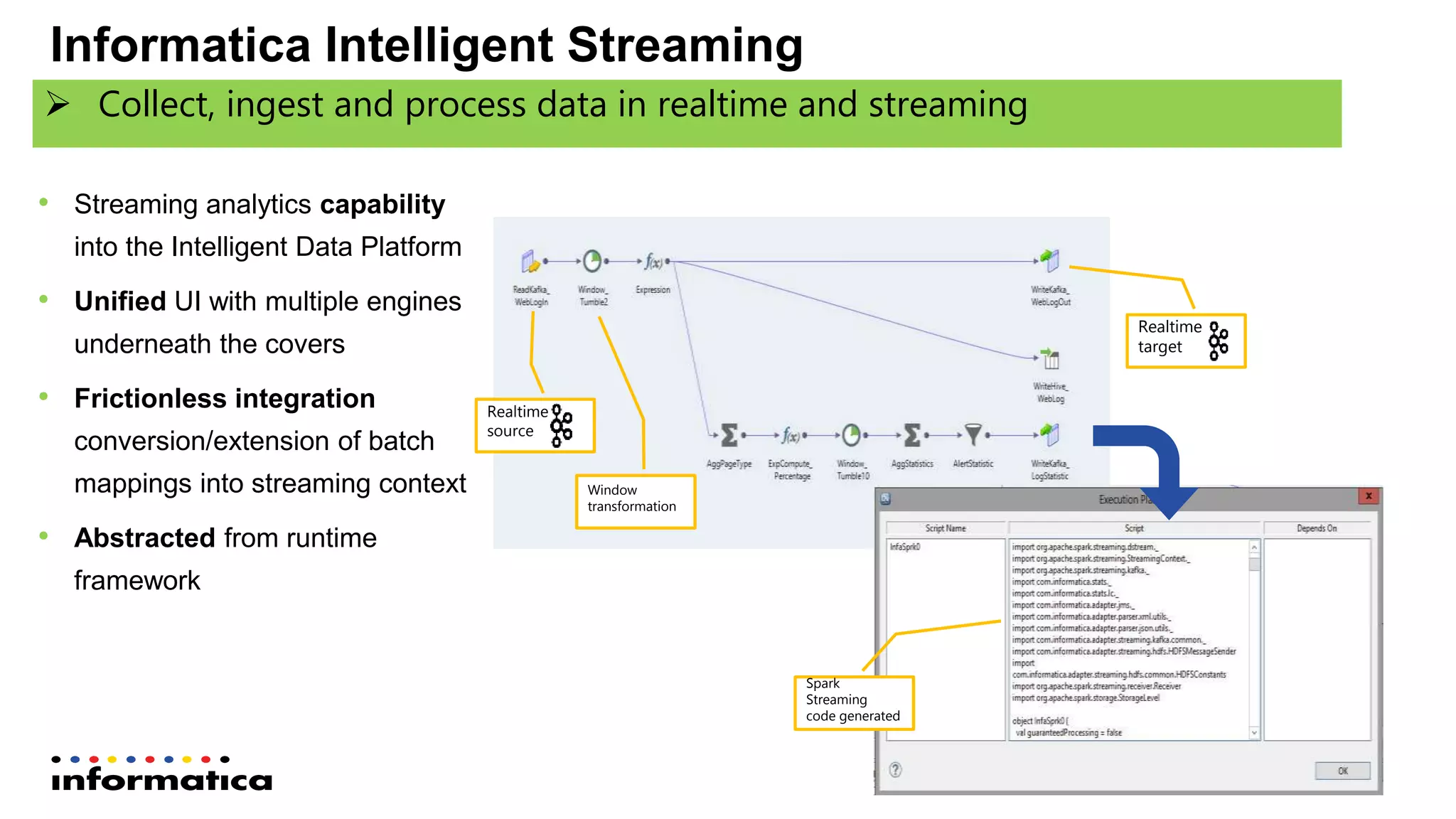

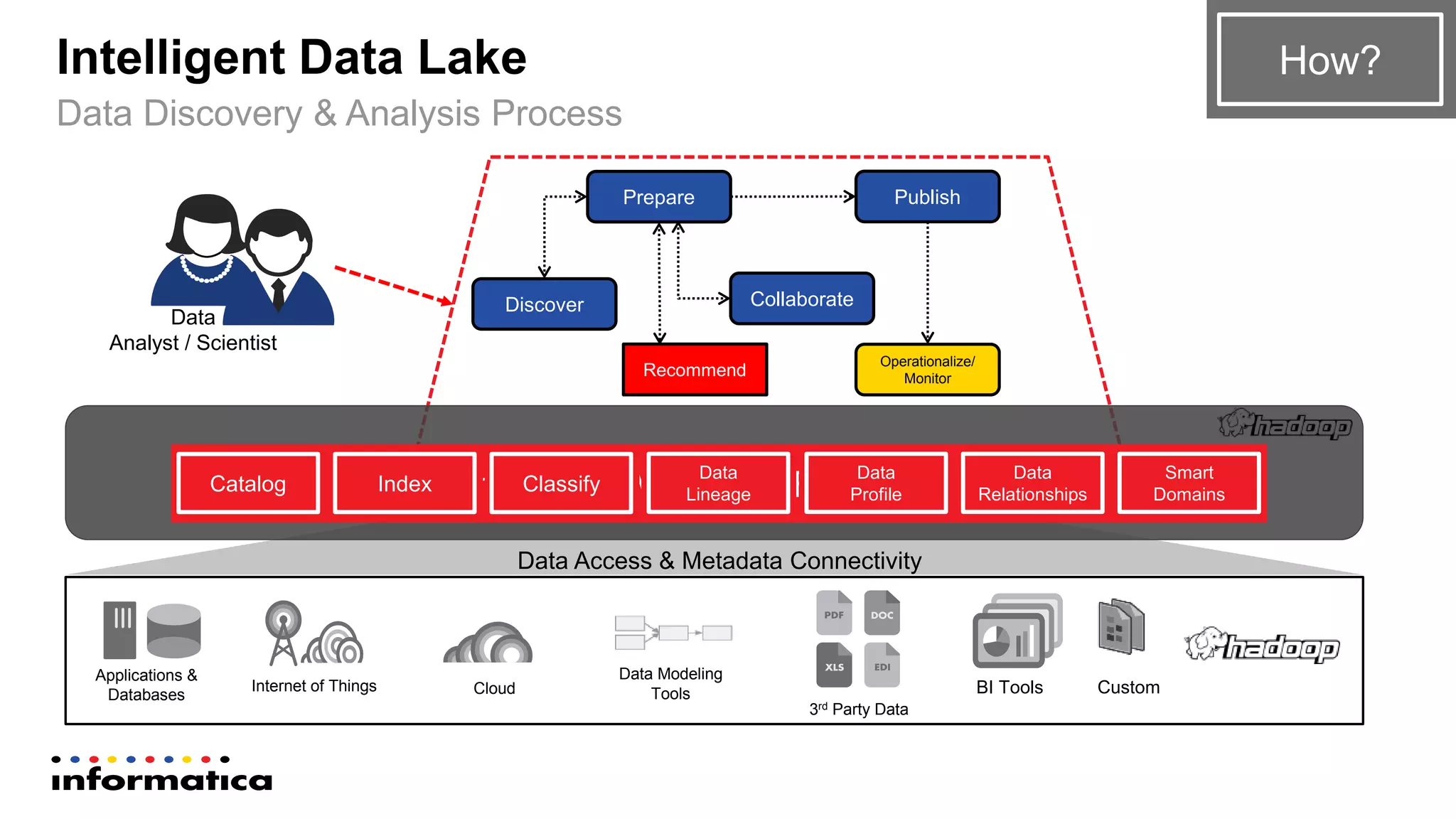

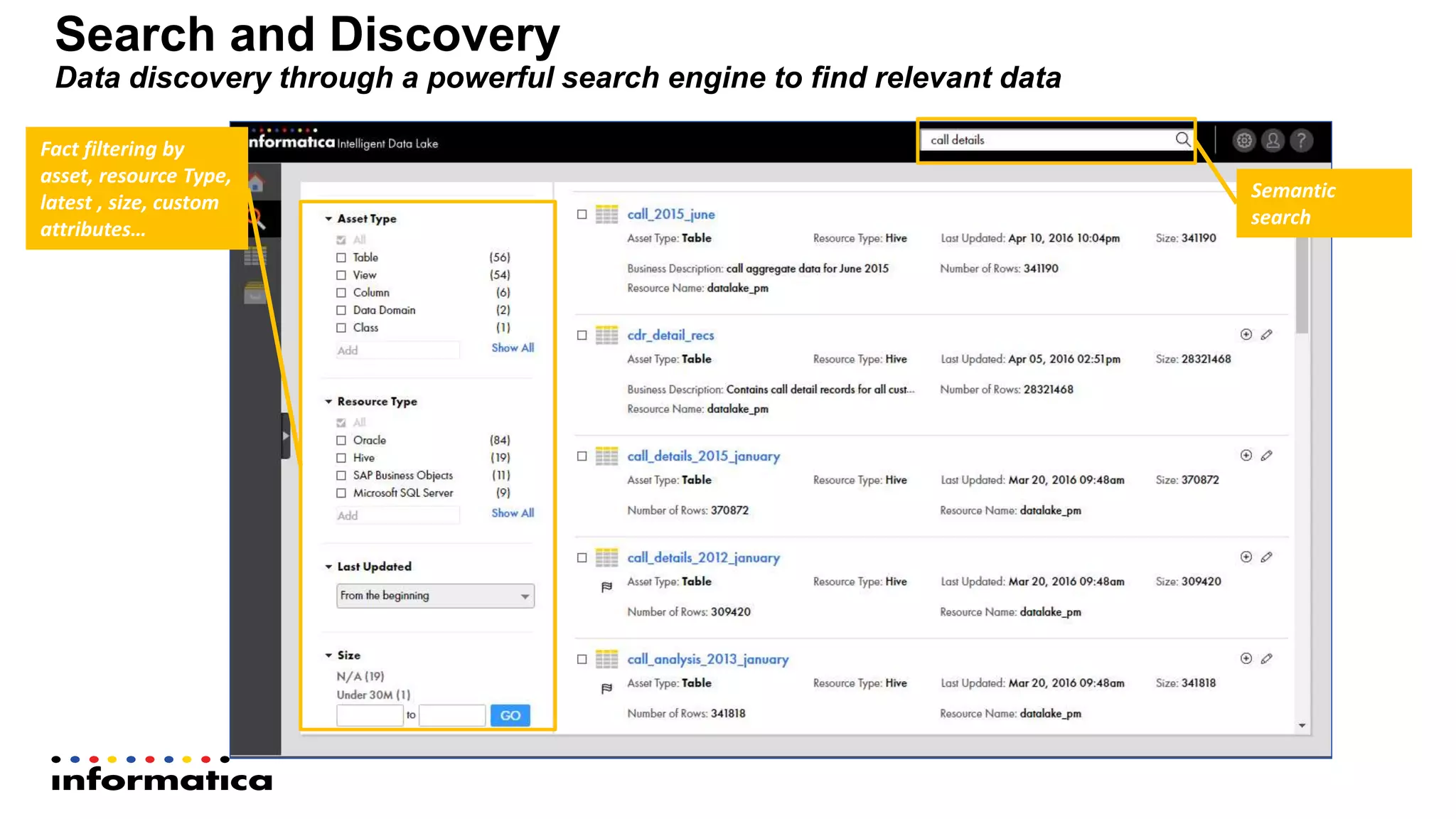

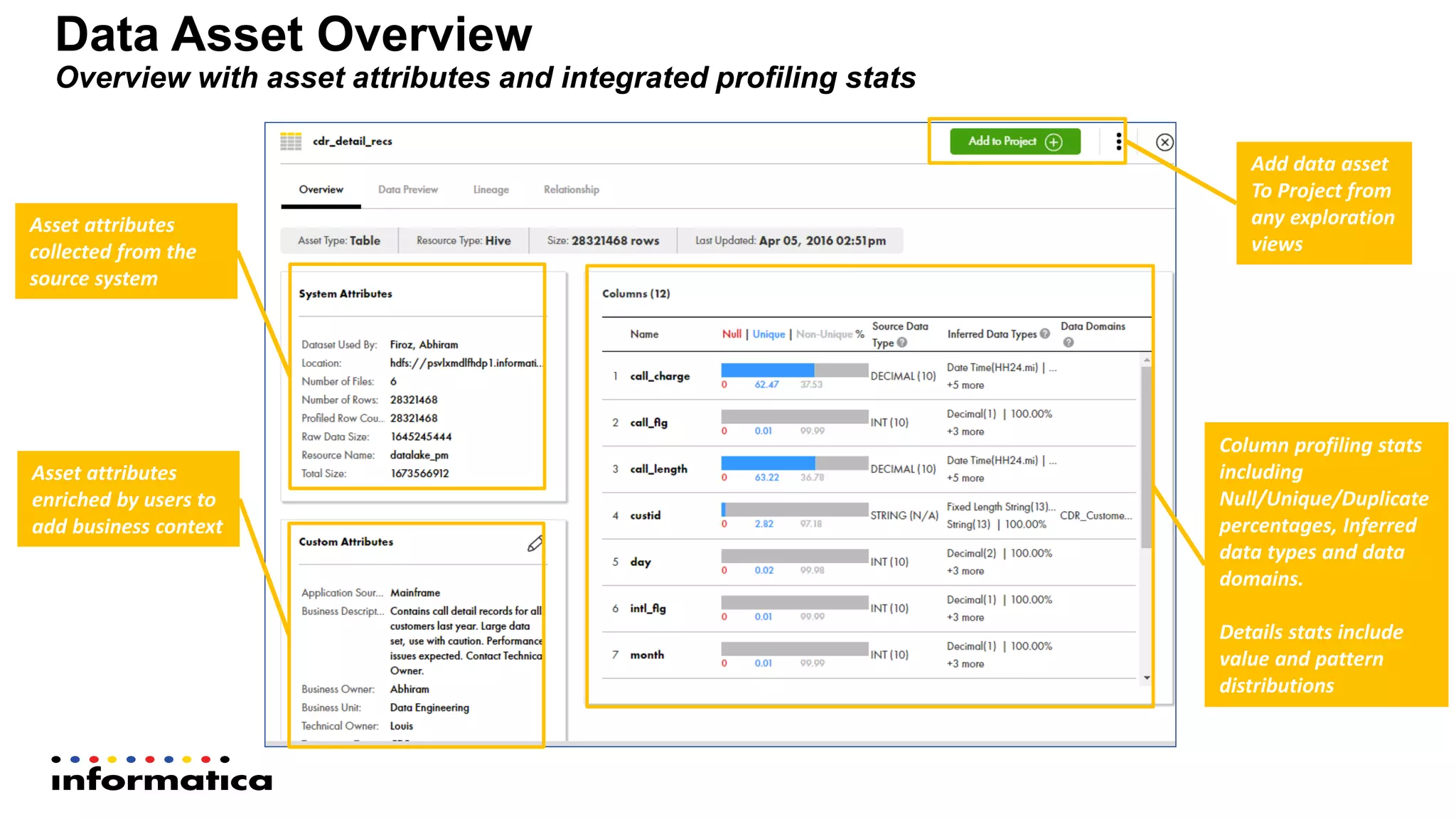

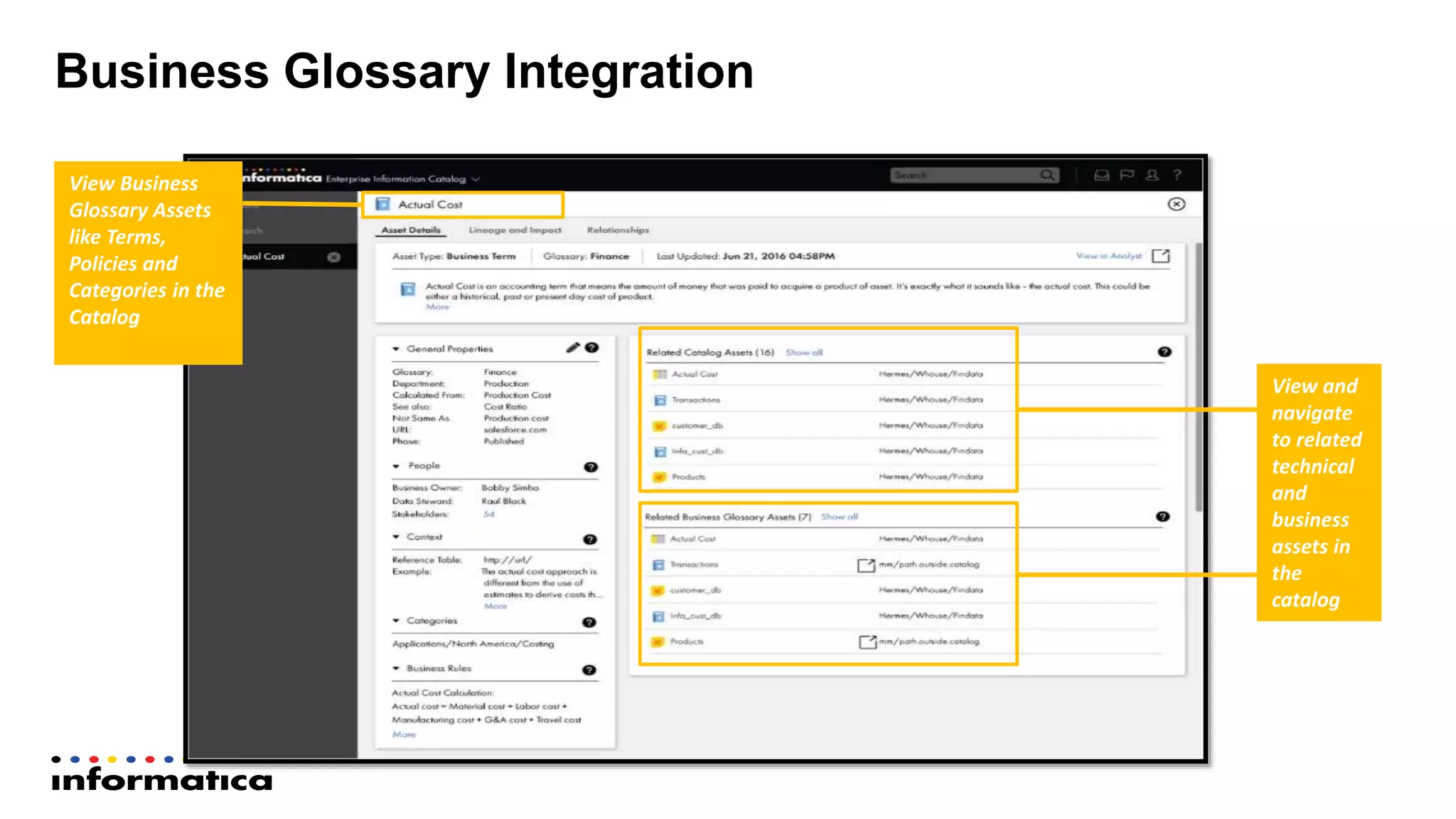

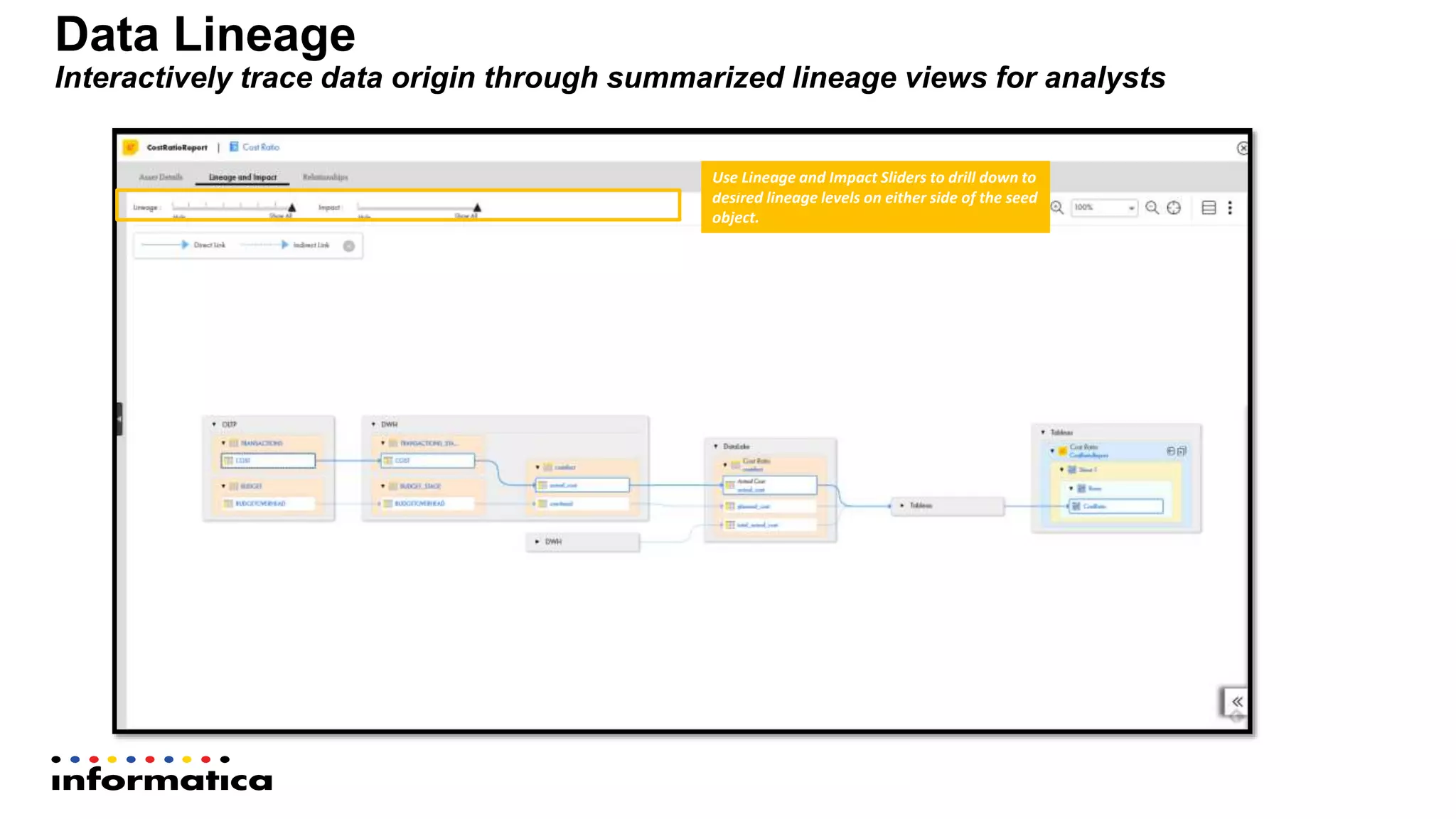

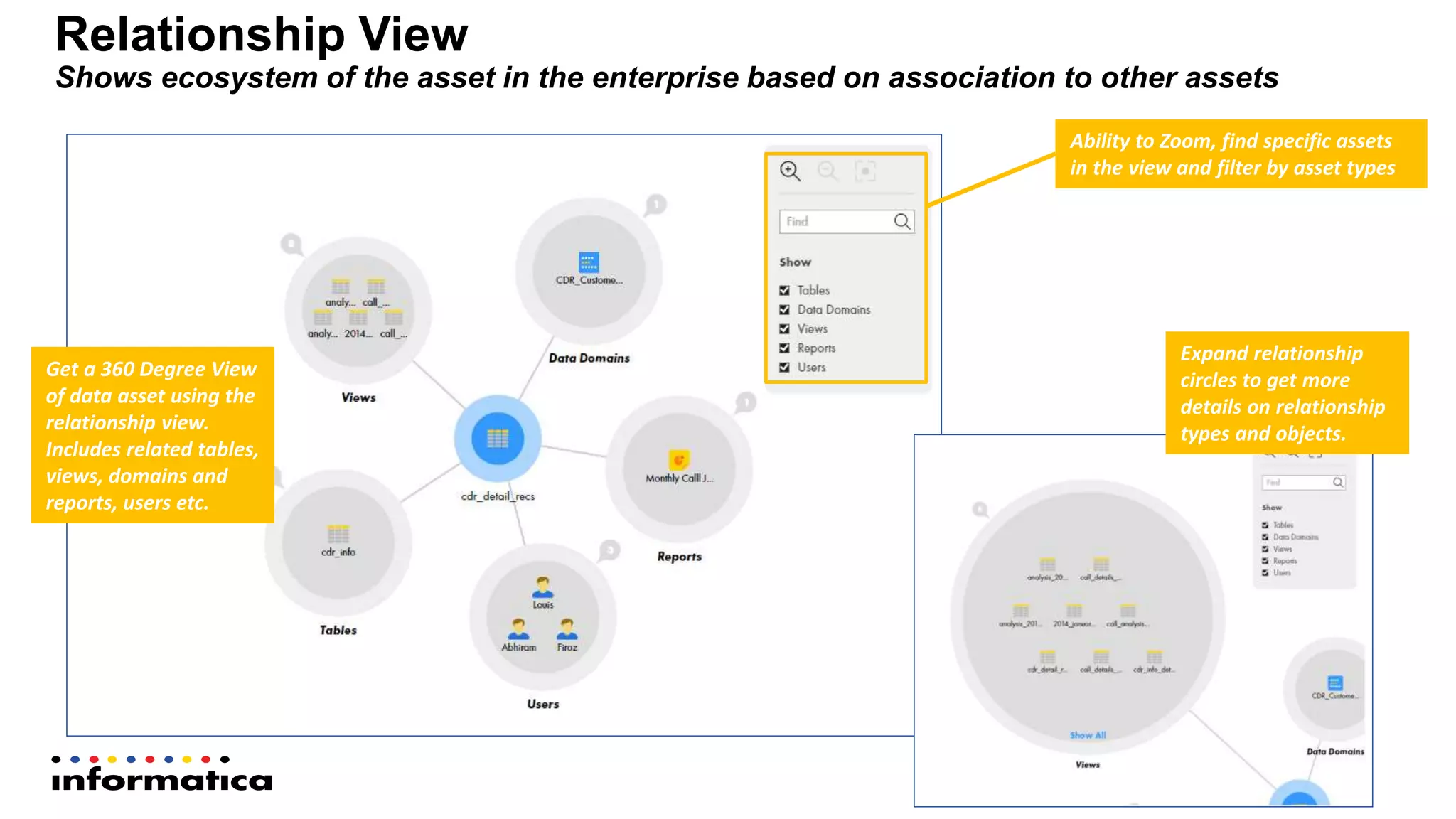

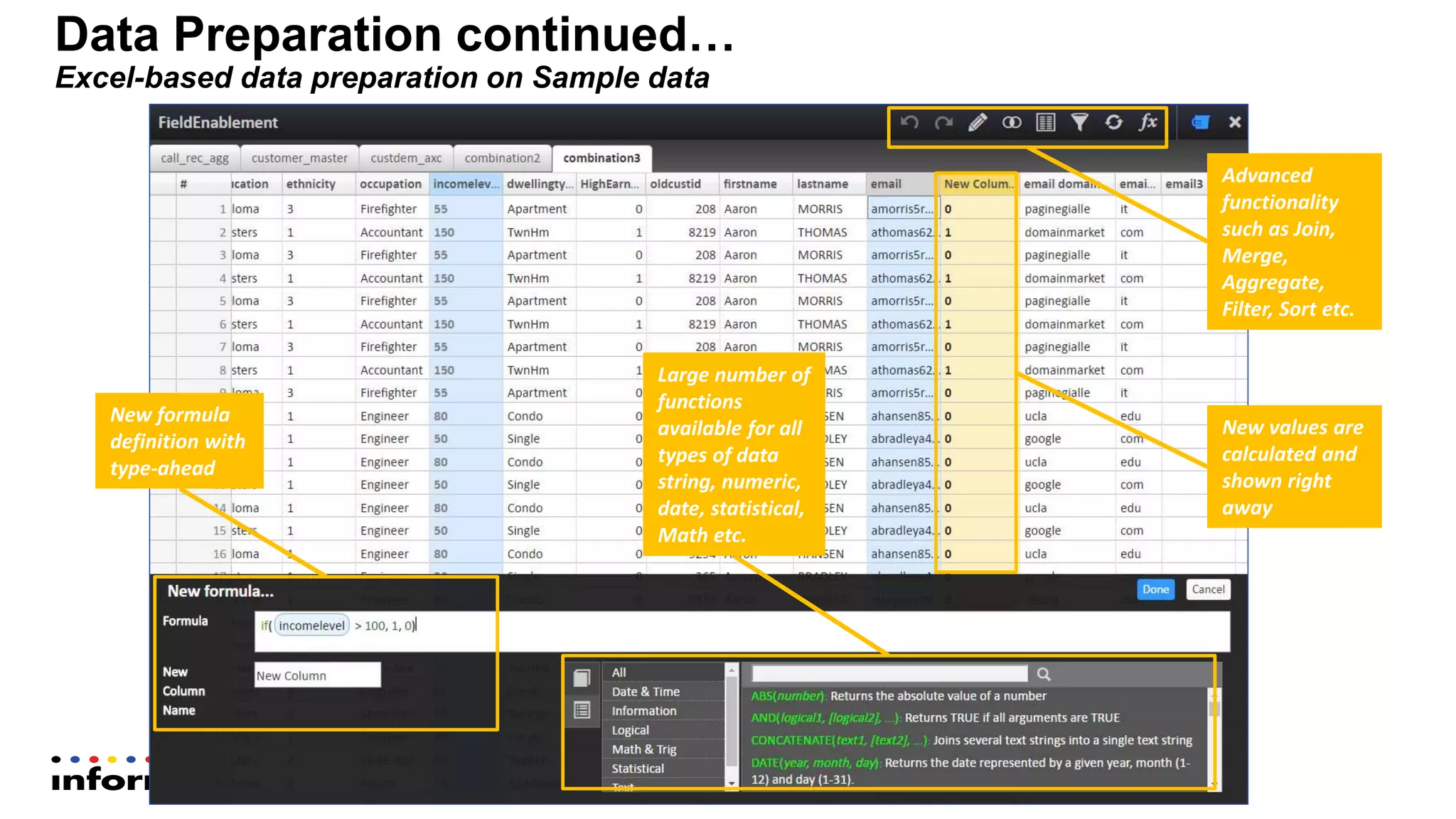

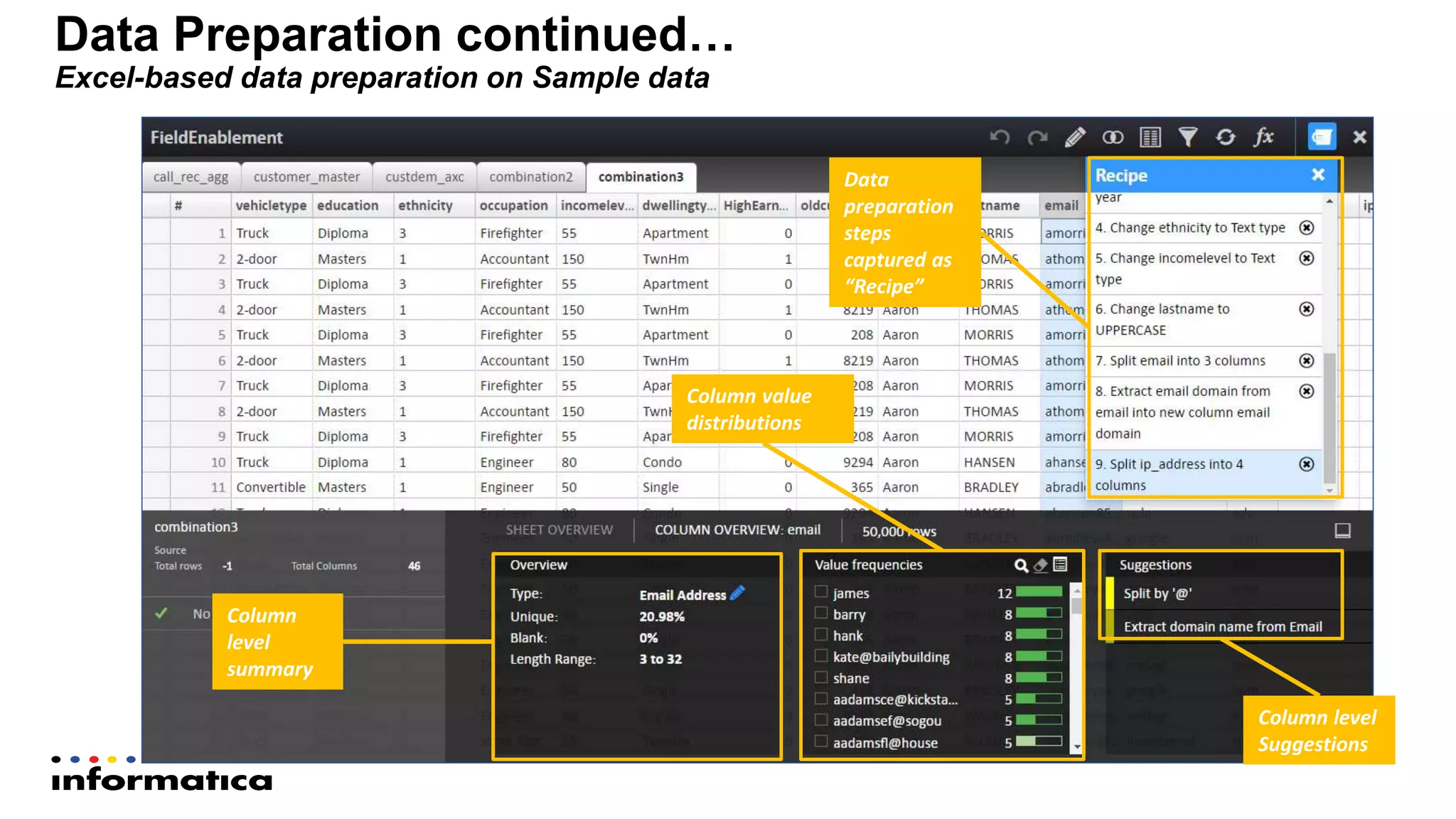

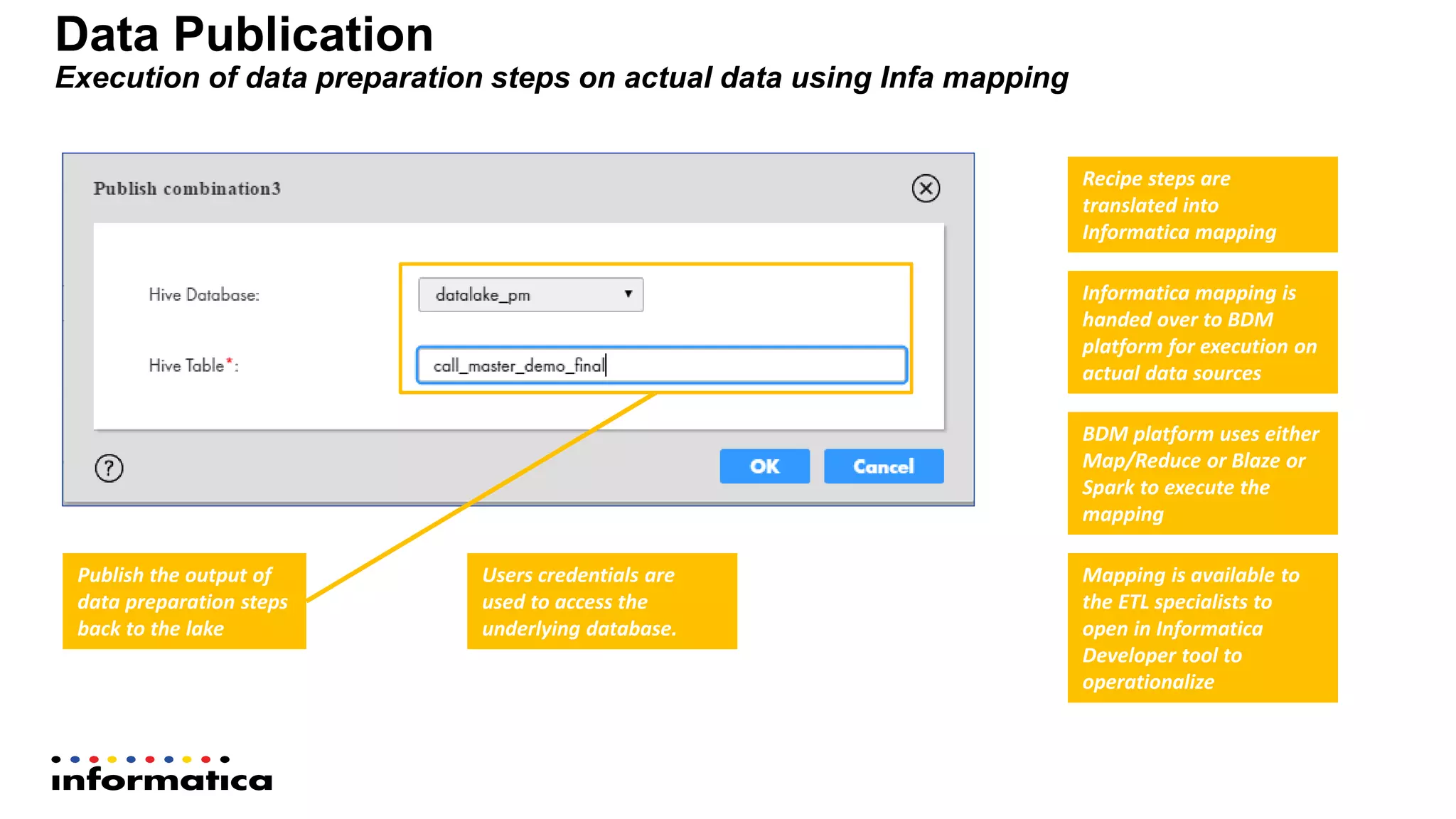

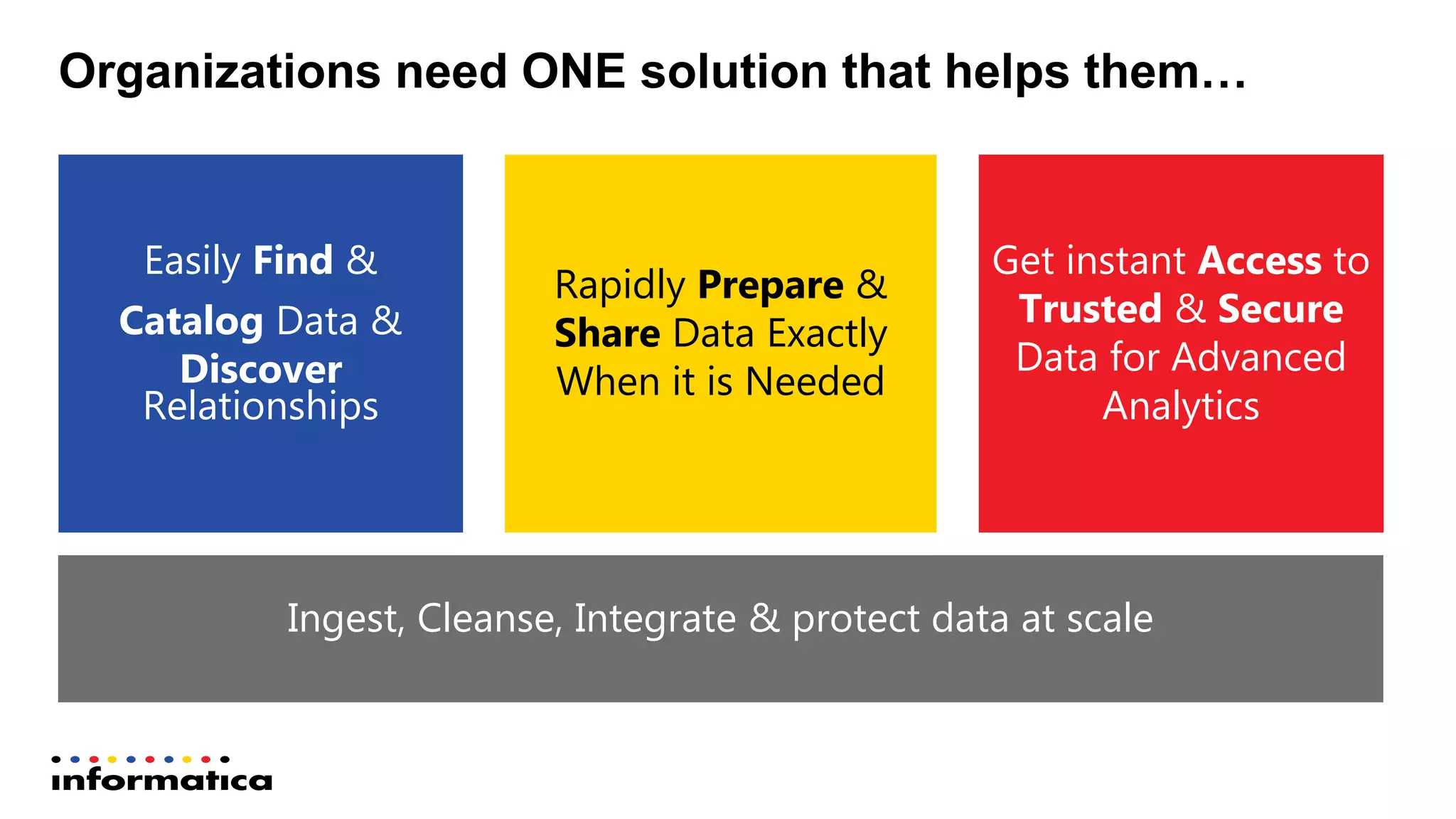

The document outlines the functionality and benefits of Informatica's Intelligent Data Lake, which is designed to give data analysts and data scientists self-service access to data for analytics and collaboration. It emphasizes data management, automated data preparation, and governance, and it addresses common challenges faced by IT and business users. The platform integrates various data sources, improves visibility into data security risks, and supports efficient data discovery and classification.