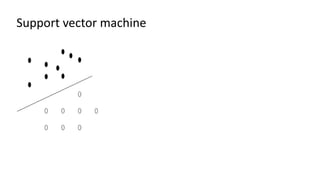

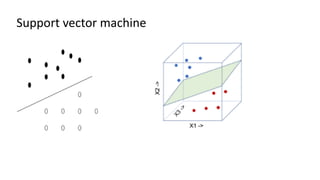

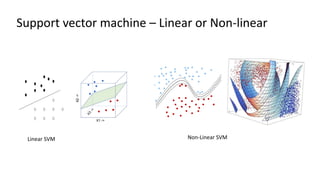

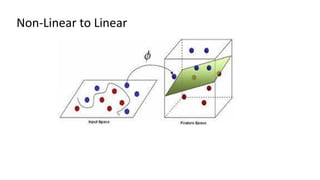

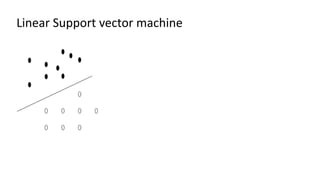

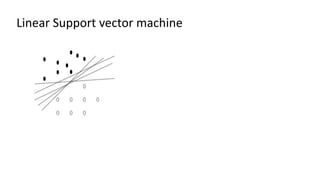

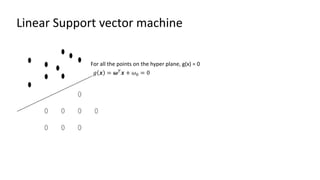

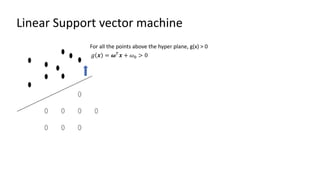

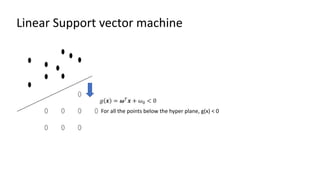

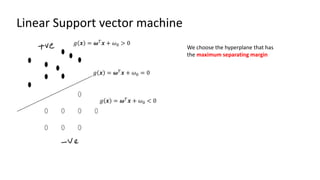

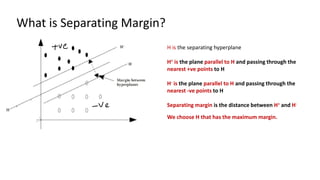

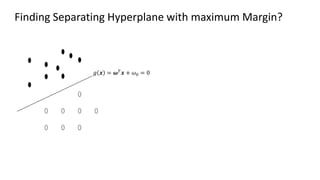

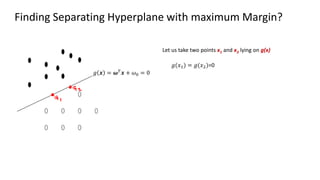

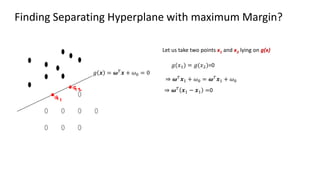

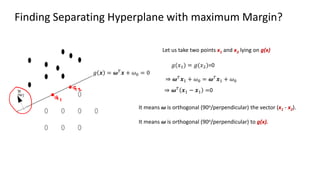

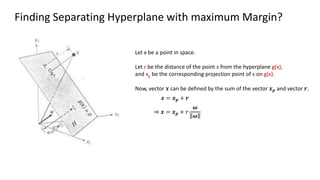

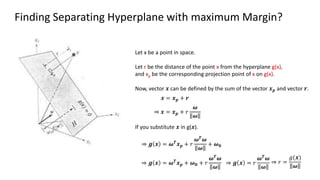

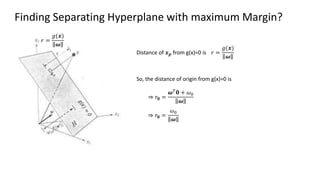

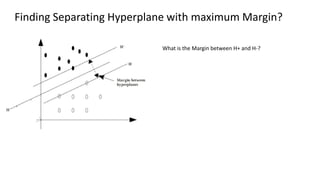

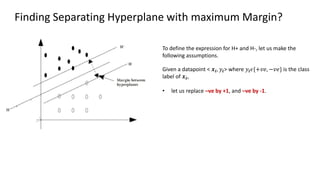

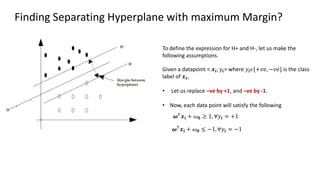

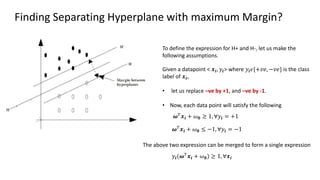

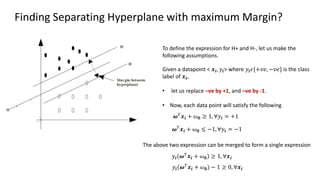

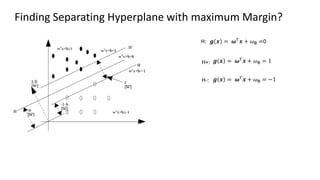

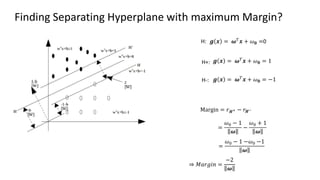

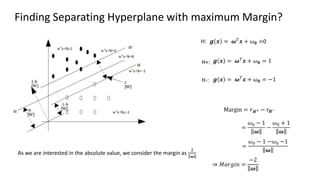

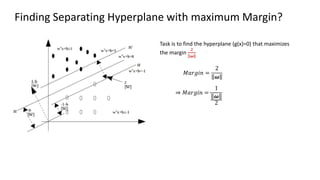

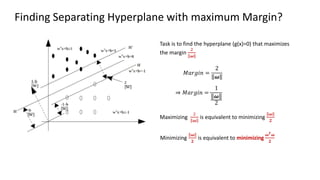

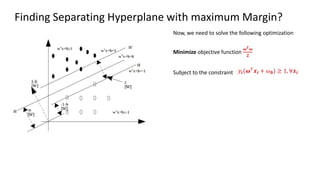

The document discusses support vector machines (SVMs), covering linear and non-linear classification and the concept of the separating hyperplane. It explains how to find the hyperplane with the maximum separating margin, defines key terms such as margin and support vectors, and outlines the optimization problem that determines the classifier's parameters. The document also includes the mathematical formulations and constraints needed to implement an SVM.

![What are the support vectors?

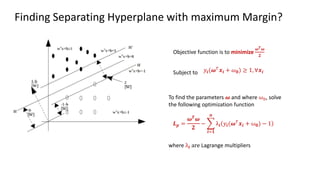

𝑳𝒑 =

𝝎𝑻

𝝎

𝟐

−

𝒊=𝟏

𝒏

λ𝒊 𝑦𝒊(𝝎𝑇𝒙𝒊 + 𝜔𝟎) − 1

[

In order to find the parameters, we need to solve this objective function.

]](https://image.slidesharecdn.com/alesson6-240906144131-385169c6/85/Machine-learning-Support-vector-Machine-case-study-which-implies-35-320.jpg)
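
As a rough illustration of the objective above, the following sketch evaluates the primal Lagrangian for a tiny hand-made dataset and reads off the support vectors as the points whose multipliers are non-zero. The function name `primal_lagrangian`, the toy data, and the chosen values of `w`, `w0`, and `lam` are assumptions made for this example and do not come from the slides.

```python
import numpy as np

def primal_lagrangian(w, w0, lam, X, y):
    """Evaluate L_p = 0.5 * w^T w - sum_i lam_i * (y_i * (w^T x_i + w0) - 1)."""
    margins = y * (X @ w + w0)  # y_i * (w^T x_i + w0) for every sample
    return 0.5 * (w @ w) - np.sum(lam * (margins - 1.0))

# Hypothetical toy data: two points lie exactly on the margin, one lies beyond it.
X = np.array([[1.0, 0.0], [-1.0, 0.0], [3.0, 0.0]])
y = np.array([1.0, -1.0, 1.0])

# Hand-picked parameters (assumed, not fitted) that satisfy the stationarity
# conditions w = sum_i lam_i * y_i * x_i and sum_i lam_i * y_i = 0.
w = np.array([1.0, 0.0])
w0 = 0.0
lam = np.array([0.5, 0.5, 0.0])

value = primal_lagrangian(w, w0, lam, X, y)
support = np.where(lam > 0)[0]  # indices of samples with non-zero multipliers

print("L_p =", value)
print("support vector indices:", support.tolist())
```

In this toy setup only the two points on the margin carry non-zero multipliers, which is the sense in which support vectors alone determine the hyperplane. In practice the multipliers would come from a solver rather than being chosen by hand.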