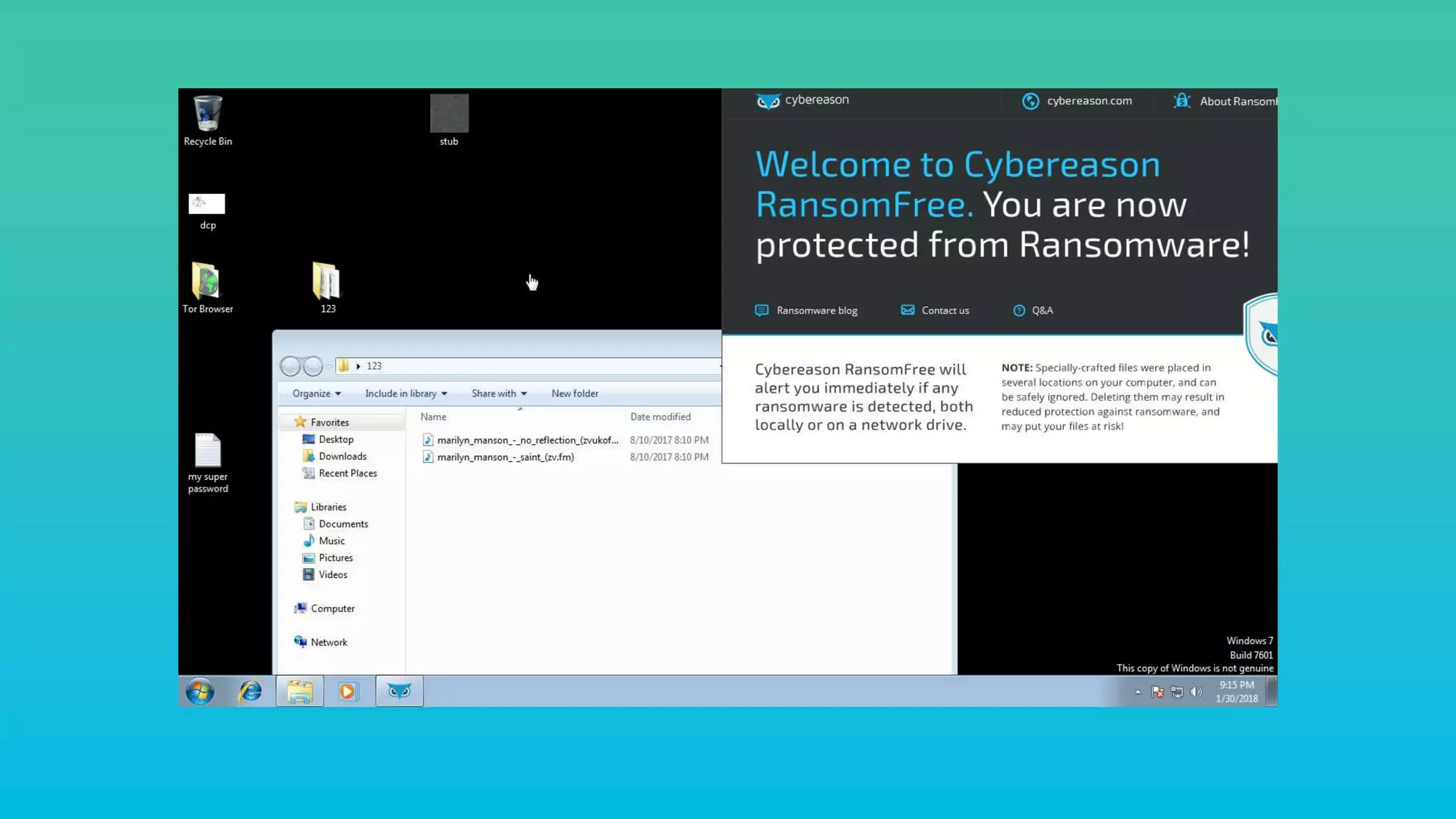

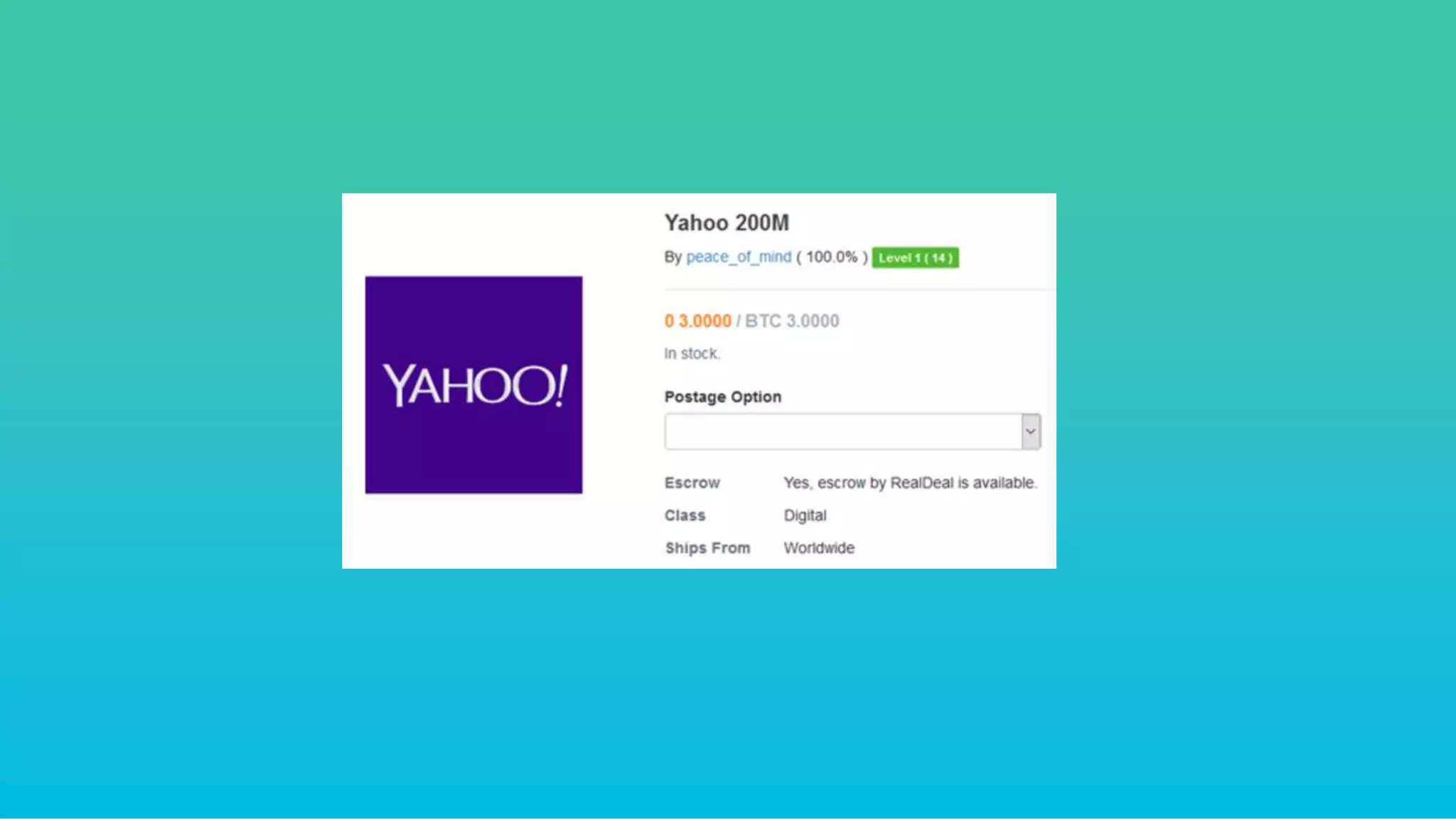

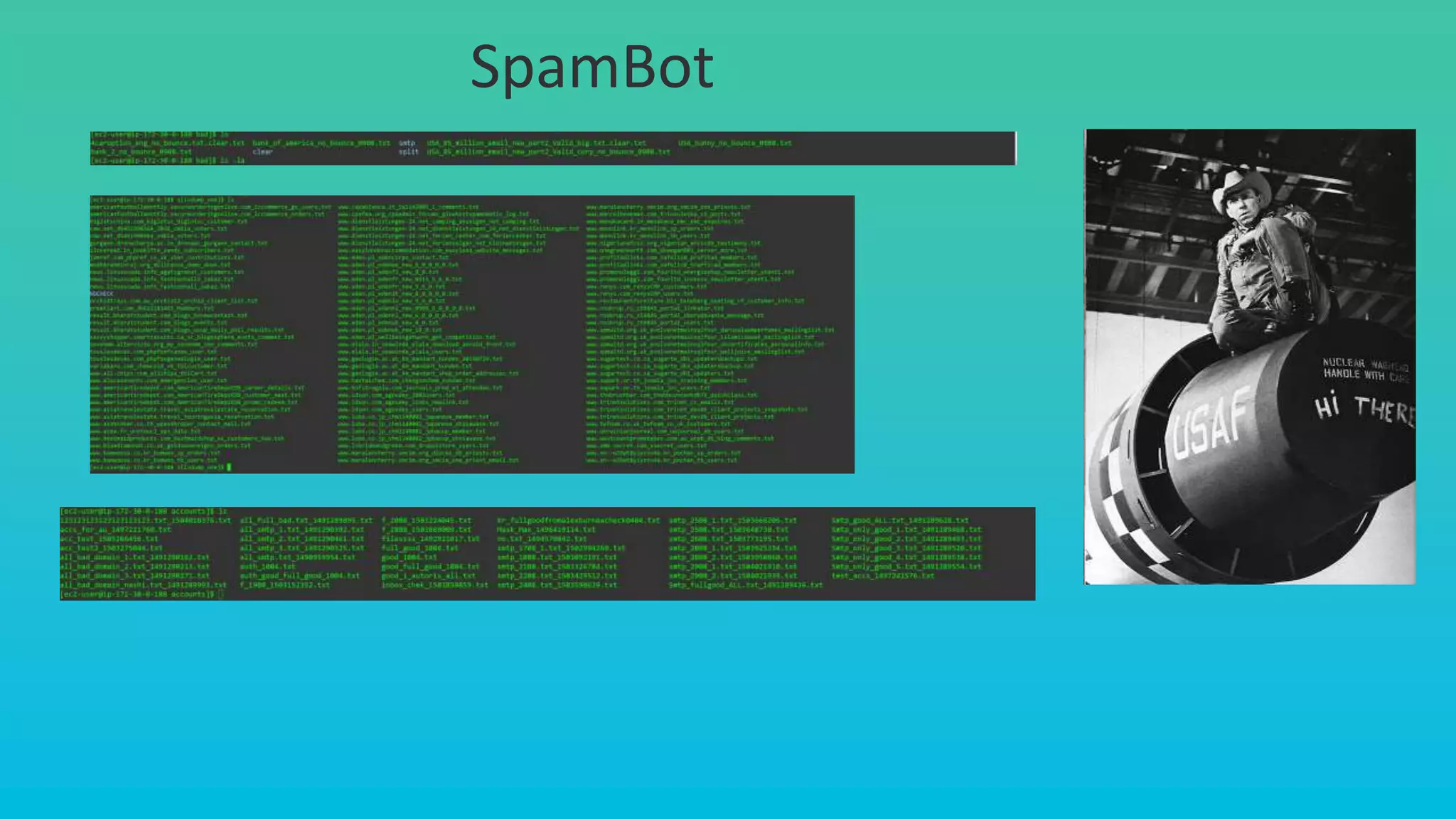

This document provides an overview of InfoArmor's threat intelligence and data ingestion capabilities. It begins with a brief history of InfoArmor and its vision for the future, then describes how threat data is collected from the dark web and other sources through forum scraping, human operatives, and threat actor profiling. It also covers lessons learned from processing more than 1 billion rows of data in databases such as Elasticsearch and MariaDB, cautioning against poor schema design, leaving database connections open, importing too much data at once, and letting malicious scripts into the database. The key takeaways are that data should be ingested and processed incrementally and that remote DBAs can help manage infrastructure challenges.
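The incremental-ingestion takeaway can be illustrated with a minimal Python sketch. It uses the standard-library `sqlite3` module as a stand-in for MariaDB so that it runs anywhere. The `leaks` table, the `(source, email)` record shape, the `batched` and `ingest` helpers, and the 1,000-row batch size are hypothetical choices, not details from the deck. The sketch reflects three of the cautions above: it imports in bounded batches rather than all at once, always closes the connection, and binds scraped text through parameter placeholders. That binding is one common safeguard against hostile input because it prevents SQL injection, although stored content still needs escaping wherever it is later rendered.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

# Hypothetical record shape (source, email); the deck does not specify a schema.
Record = Tuple[str, str]

BATCH_SIZE = 1_000  # assumption: tune to what the target database handles comfortably


def batched(rows: Iterable[Record], size: int) -> Iterator[List[Record]]:
    """Yield successive lists of at most `size` rows without materializing the whole input."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def ingest(rows: Iterable[Record], db_path: str = ":memory:",
           batch_size: int = BATCH_SIZE) -> int:
    """Insert rows in fixed-size batches, committing after each; return the row count."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS leaks ("
            " id INTEGER PRIMARY KEY,"
            " source TEXT NOT NULL,"
            " email TEXT NOT NULL)"
        )
        total = 0
        for chunk in batched(rows, batch_size):
            # `with conn` commits the batch on success and rolls it back on error.
            with conn:
                # Placeholders bind scraped text as data; it is never spliced into the SQL string.
                conn.executemany("INSERT INTO leaks (source, email) VALUES (?, ?)", chunk)
            total += len(chunk)
        return total
    finally:
        conn.close()  # always release the connection, even if a batch fails
```

Because `ingest` accepts any iterable, a caller can pass a generator that reads a large dump line by line, so the full file never has to sit in memory. Committing per batch means a failure part-way through keeps the batches already written; the batch size trades transaction overhead against memory use and lock duration.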

![This product will get you 100.000 United Kingdom "HOTMAIL" Emails Leads. Source: http[:]//6qlocfg6zq2kyacl.onion/viewProduct?offer=857044.38586](https://image.slidesharecdn.com/mariadbsteveclees-180306225536/75/M-18-How-InfoArmor-Harvests-Data-from-the-Underground-Economy-8-2048.jpg)