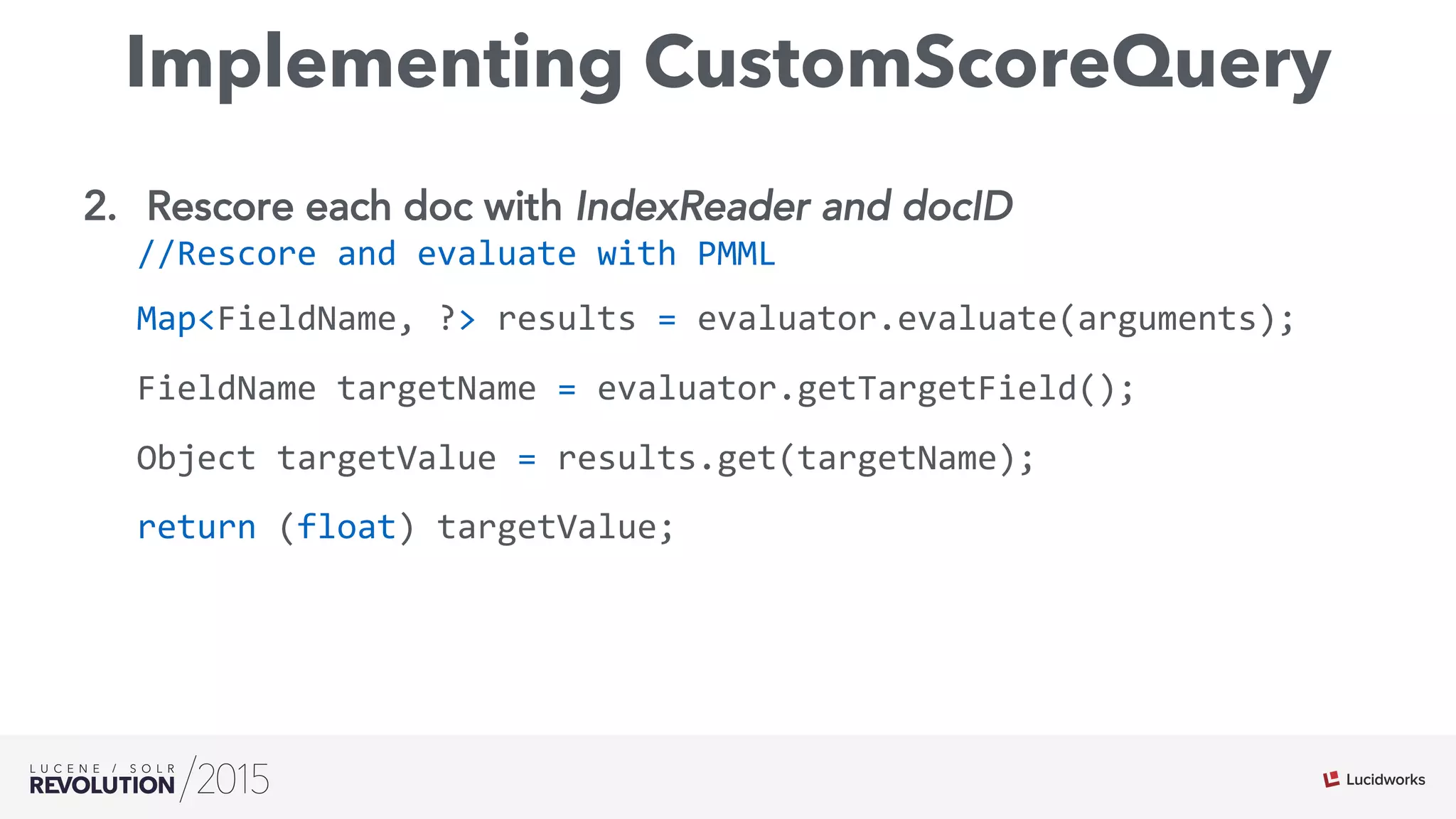

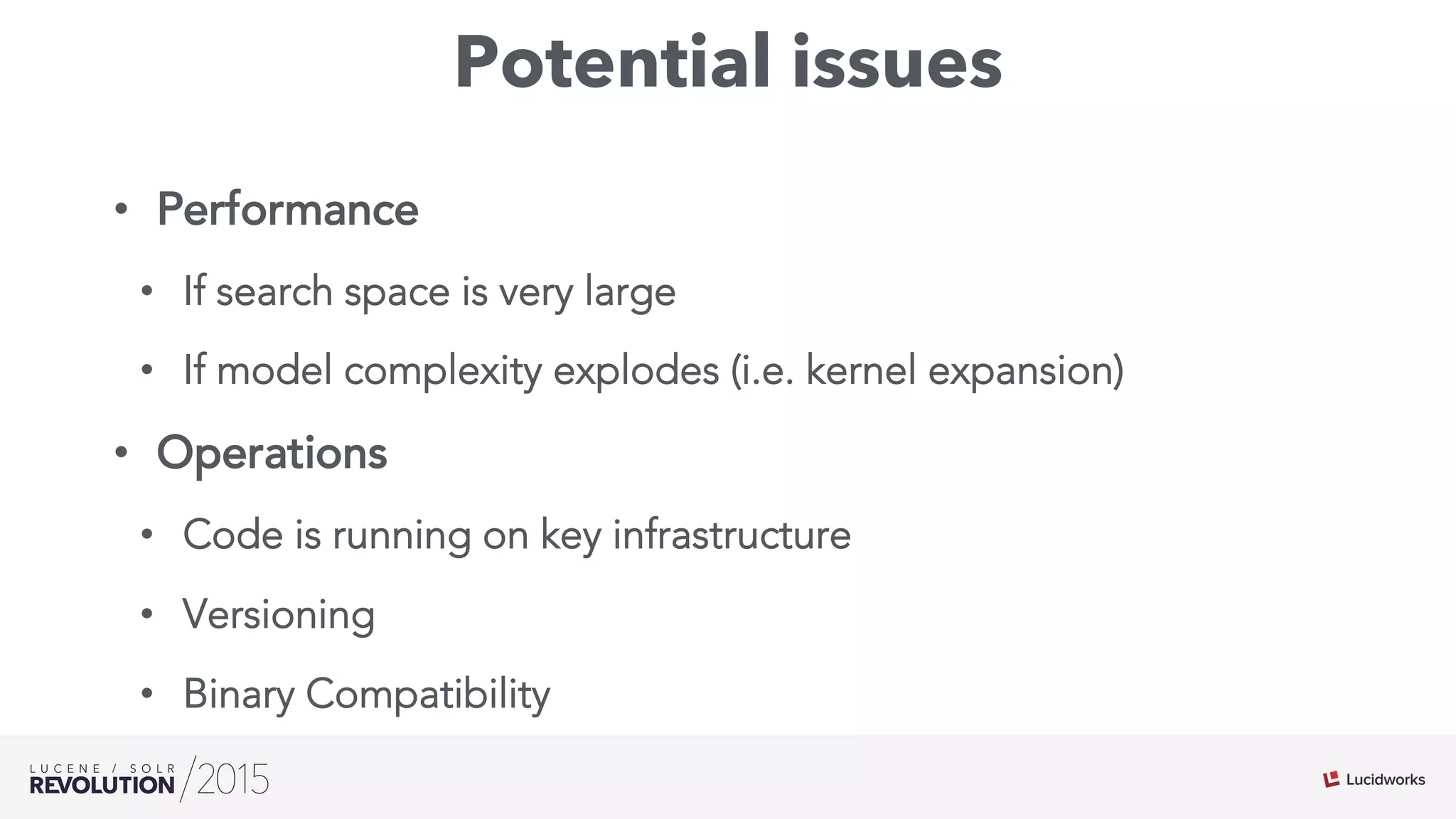

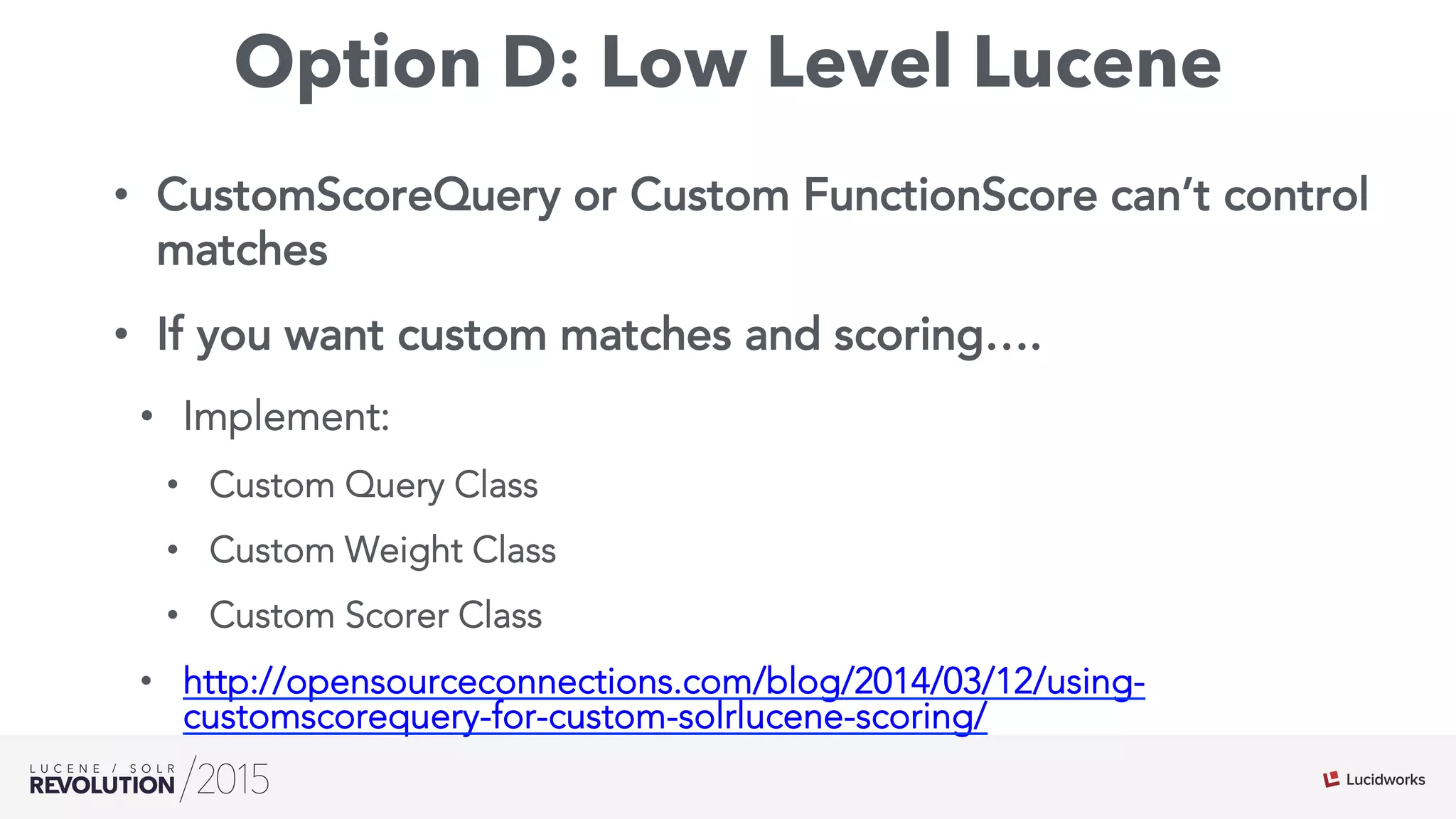

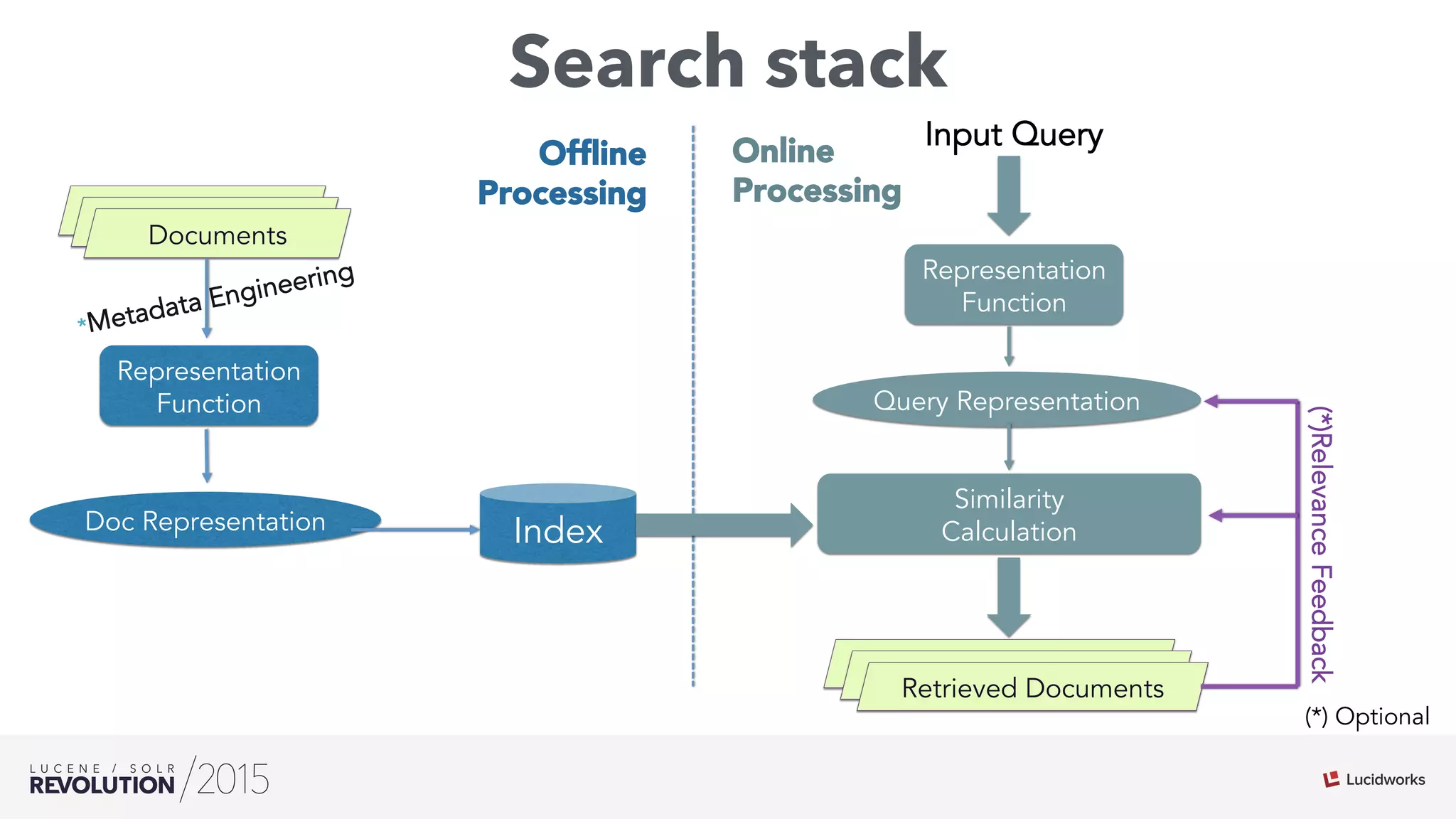

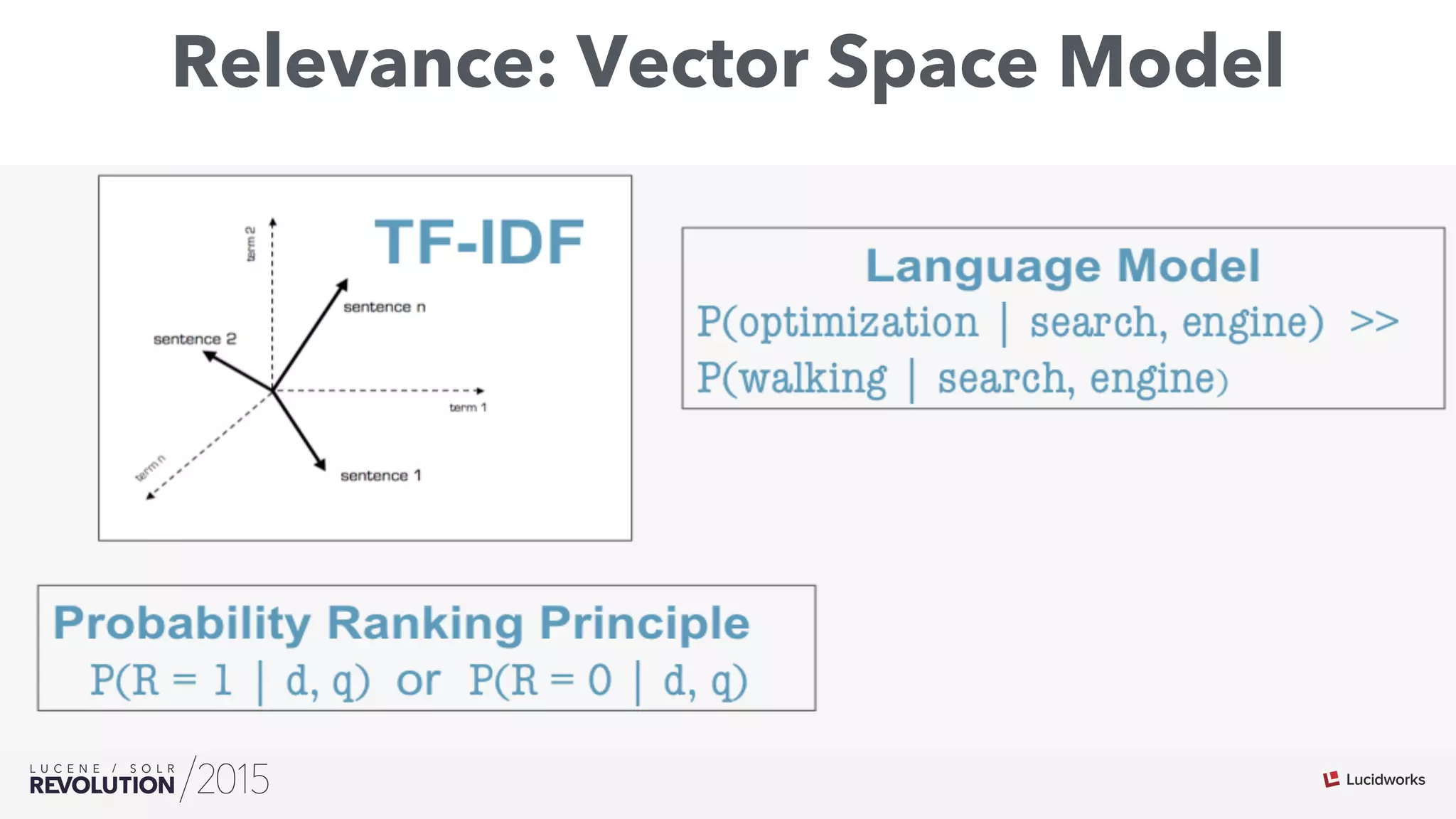

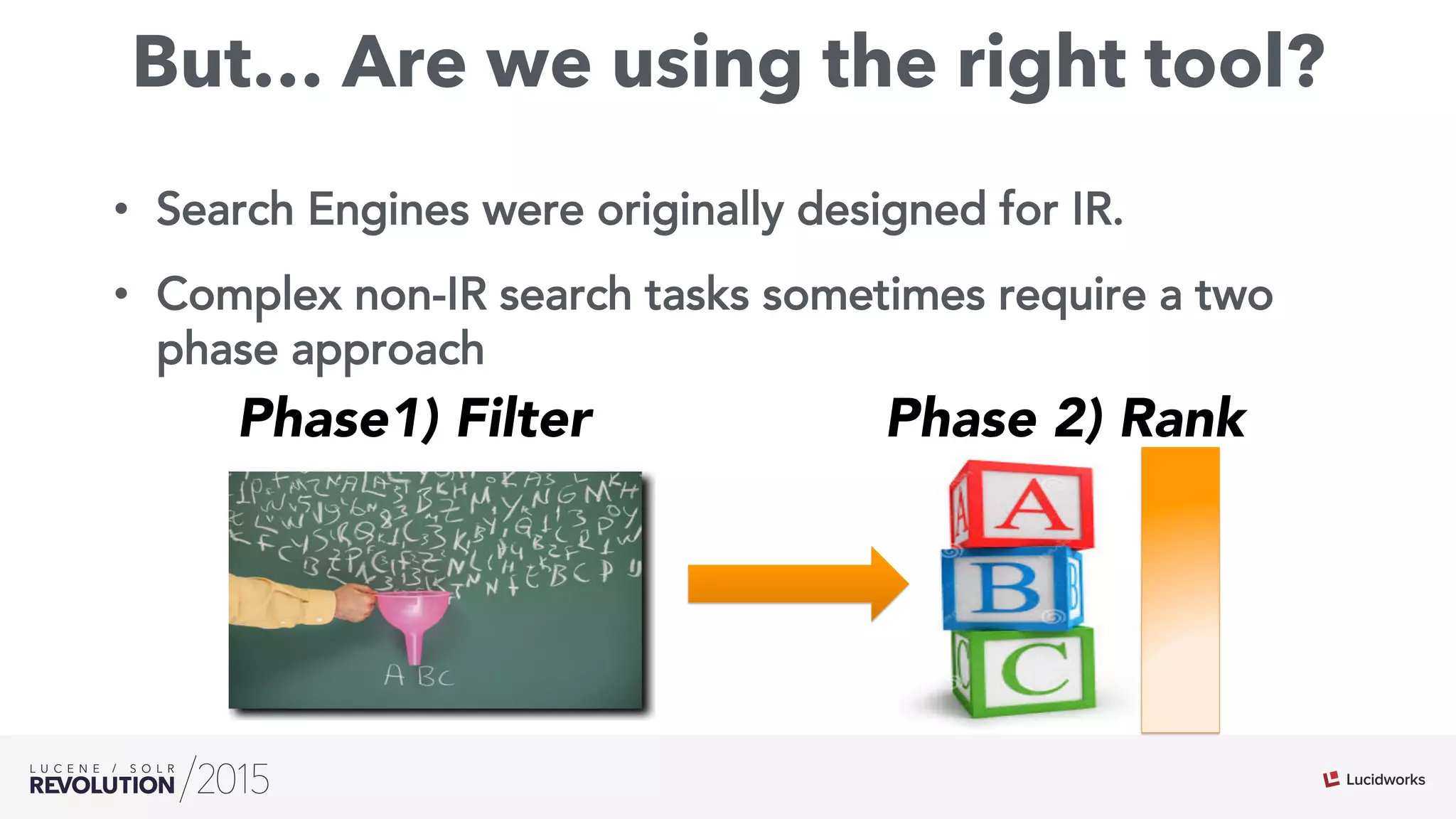

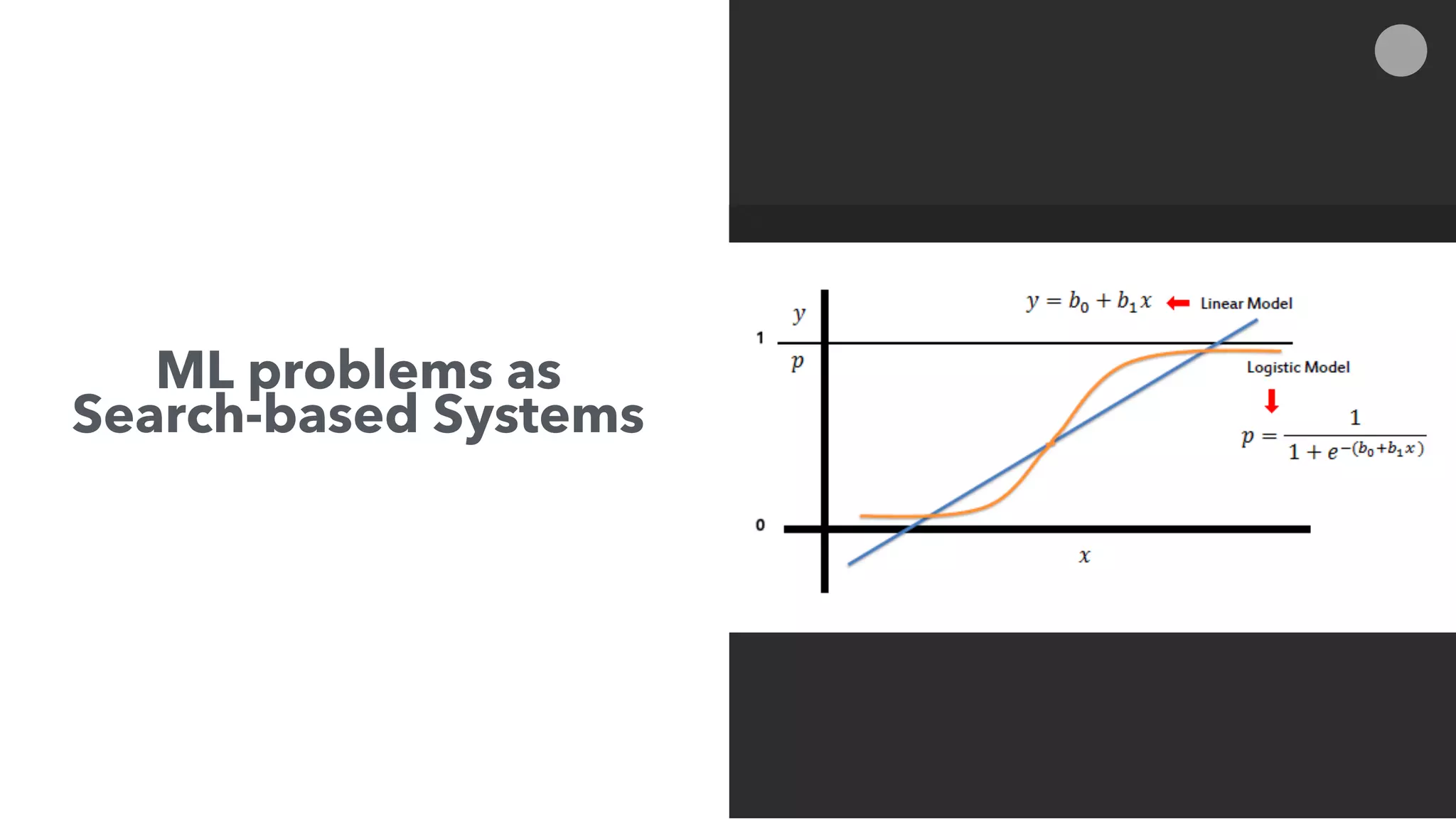

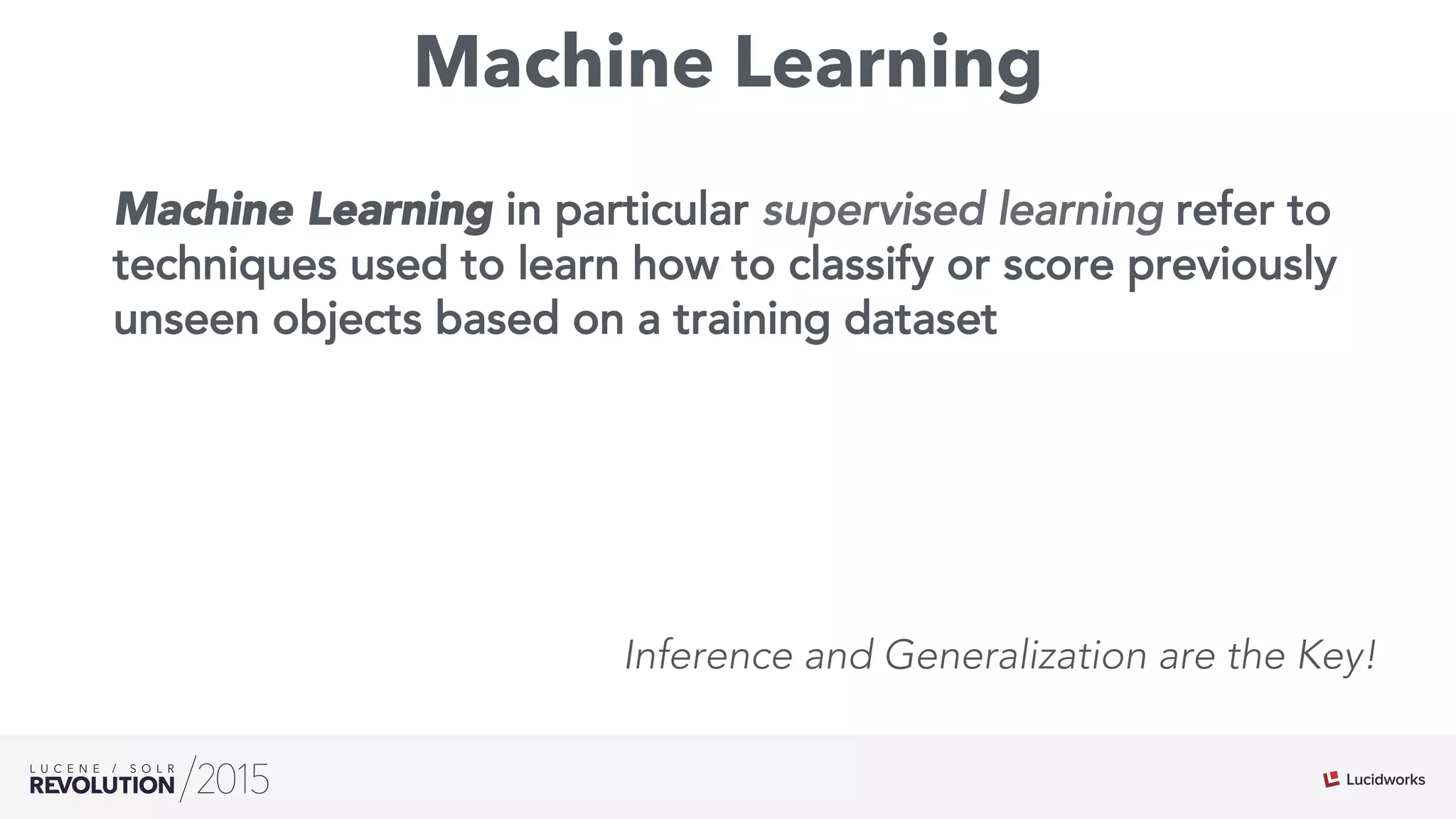

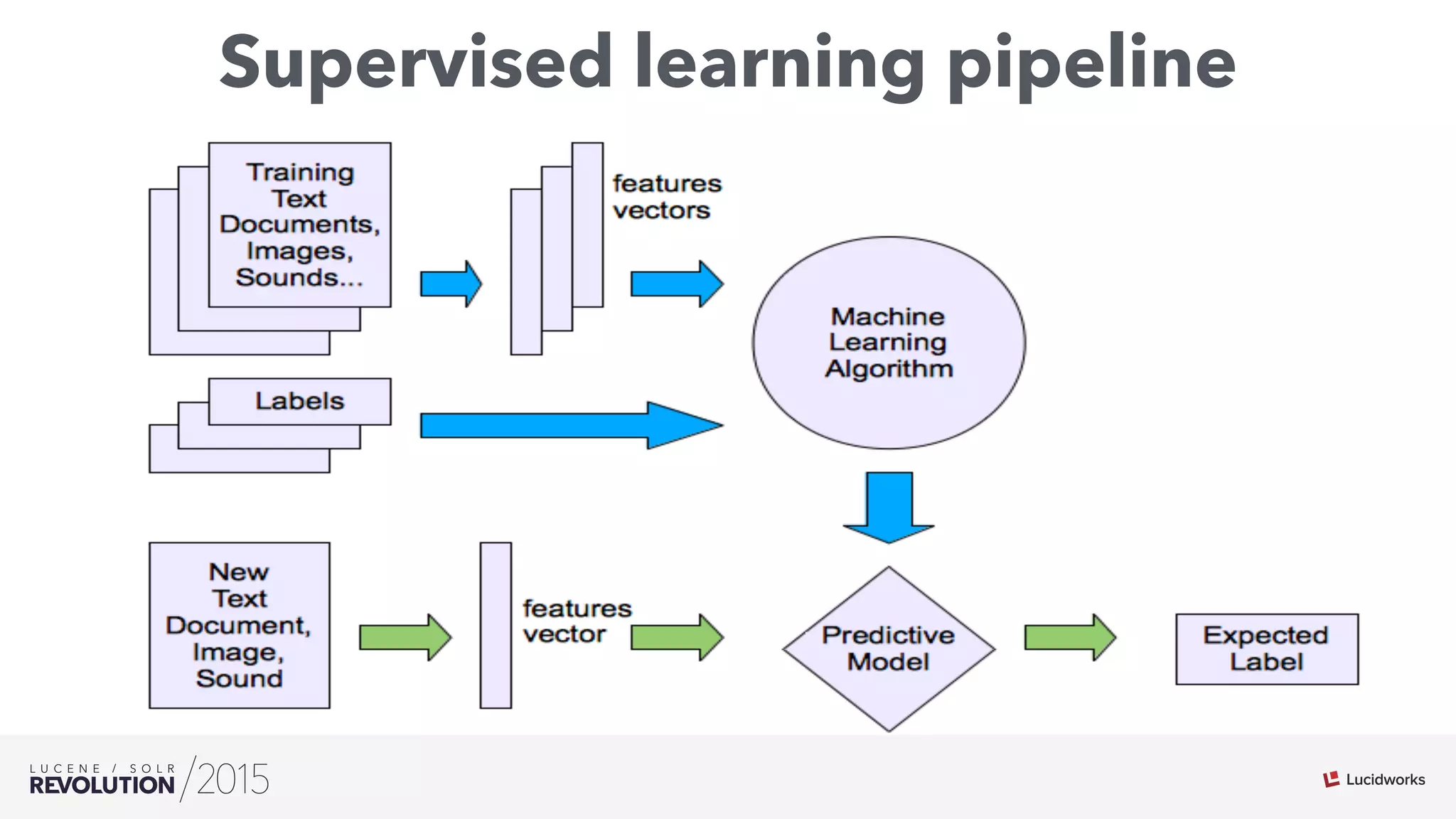

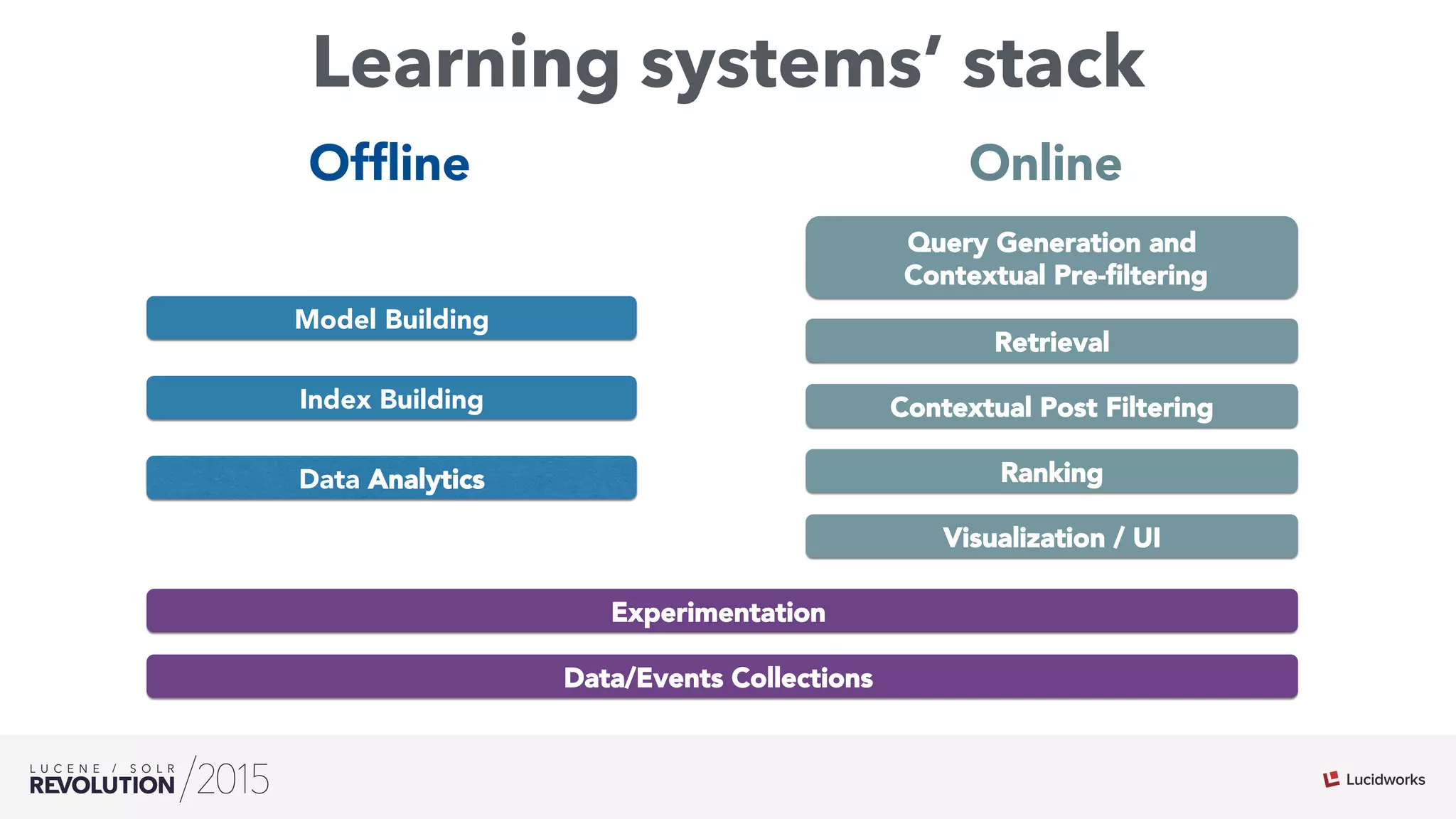

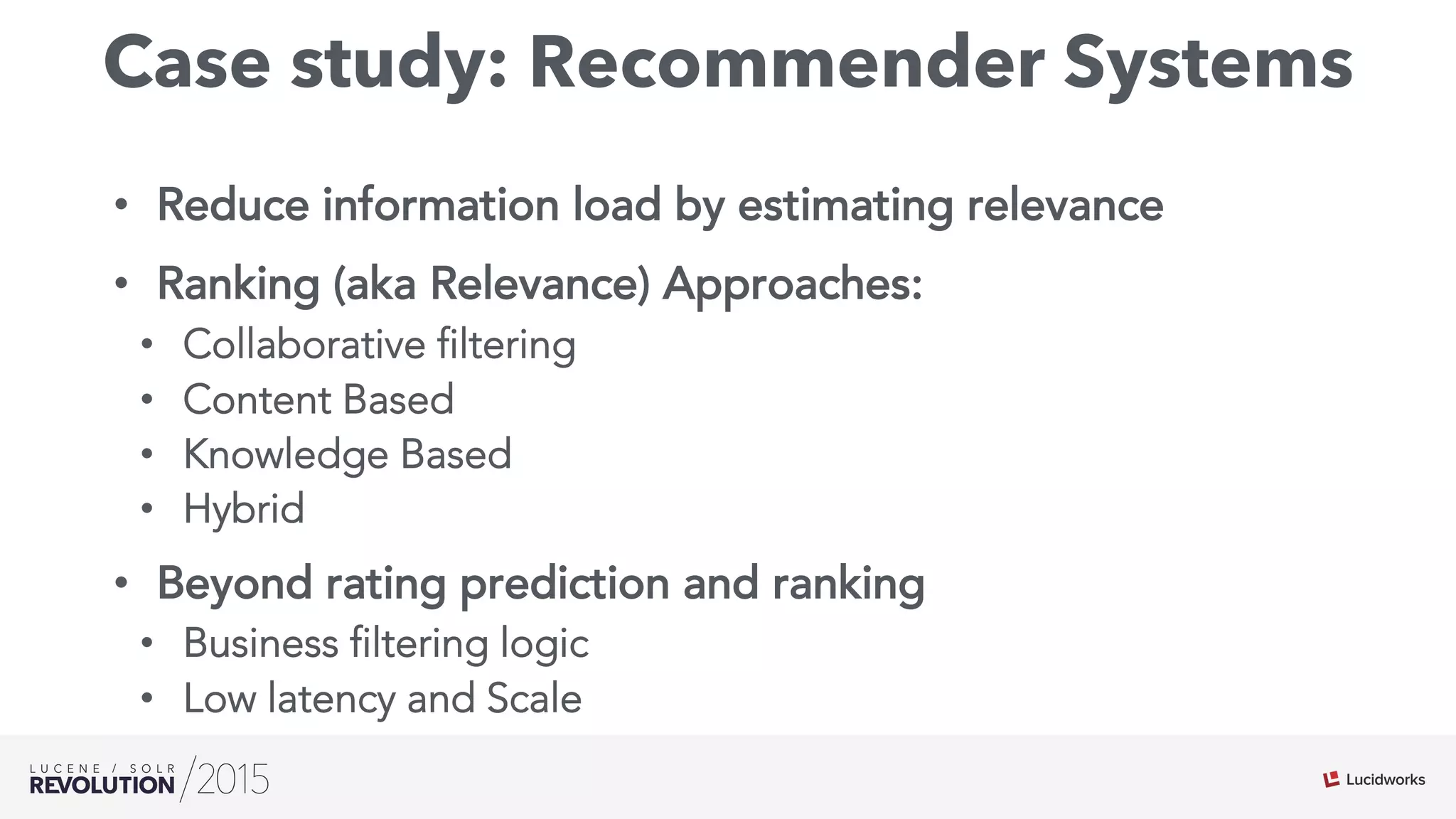

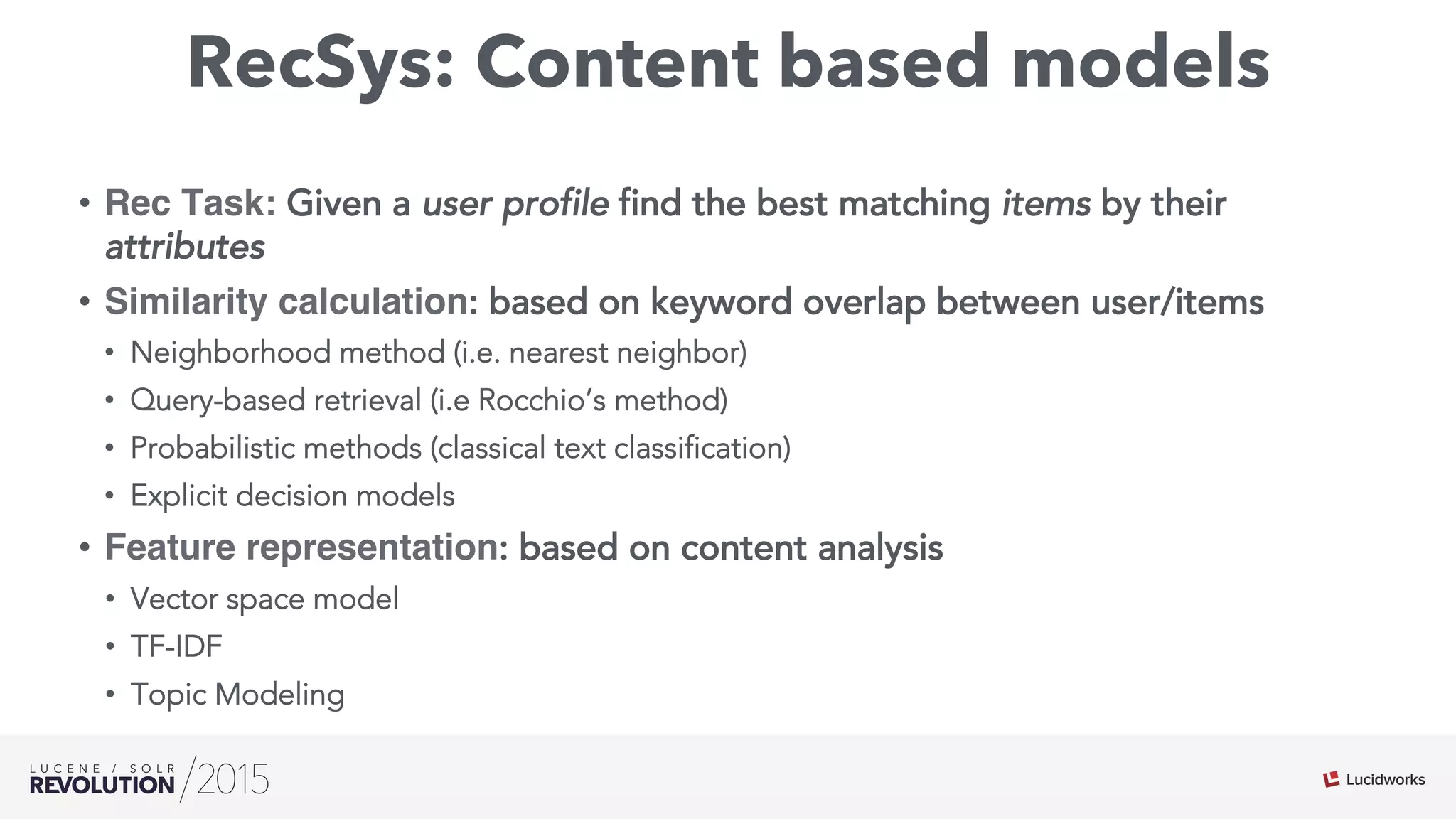

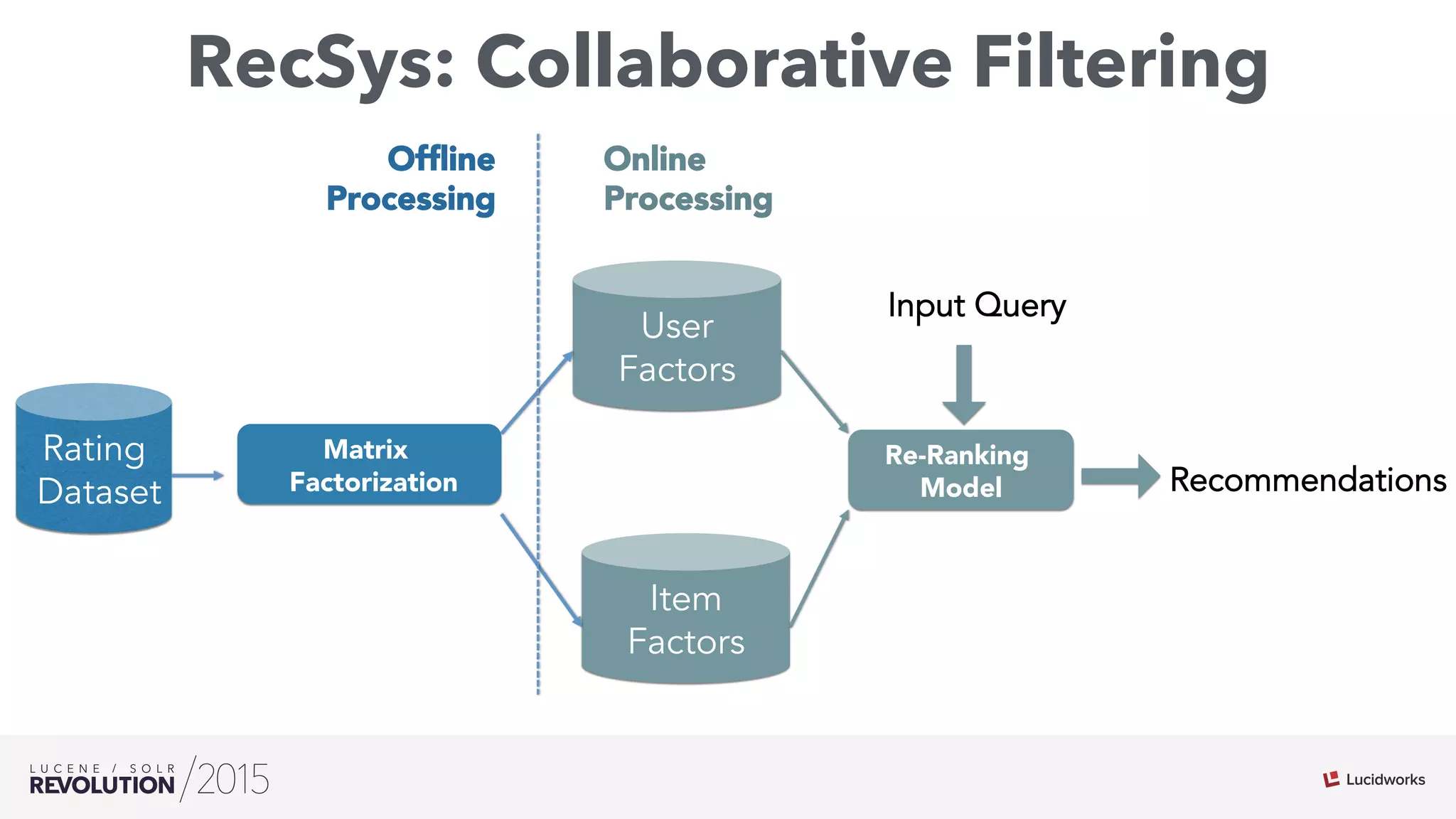

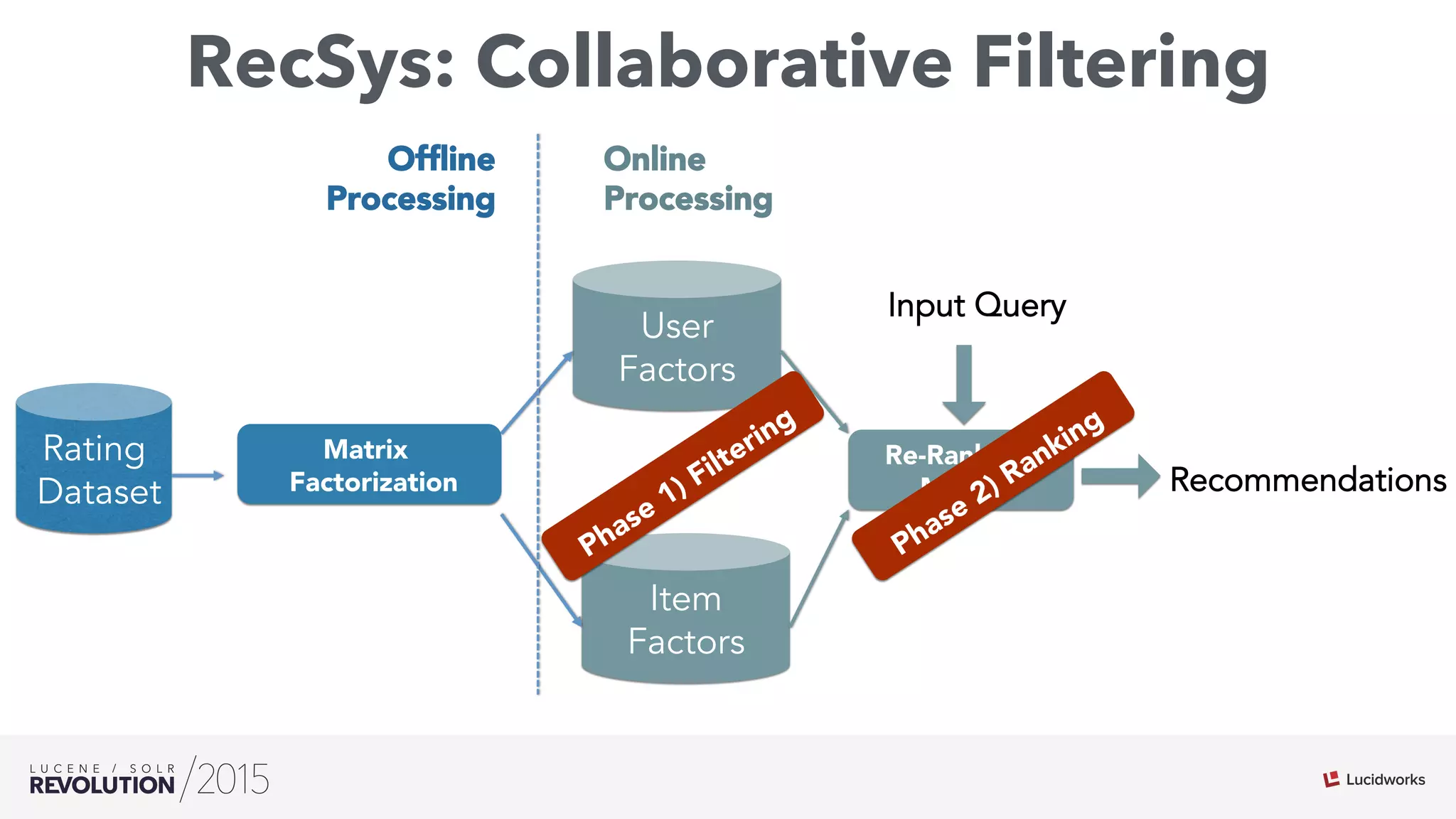

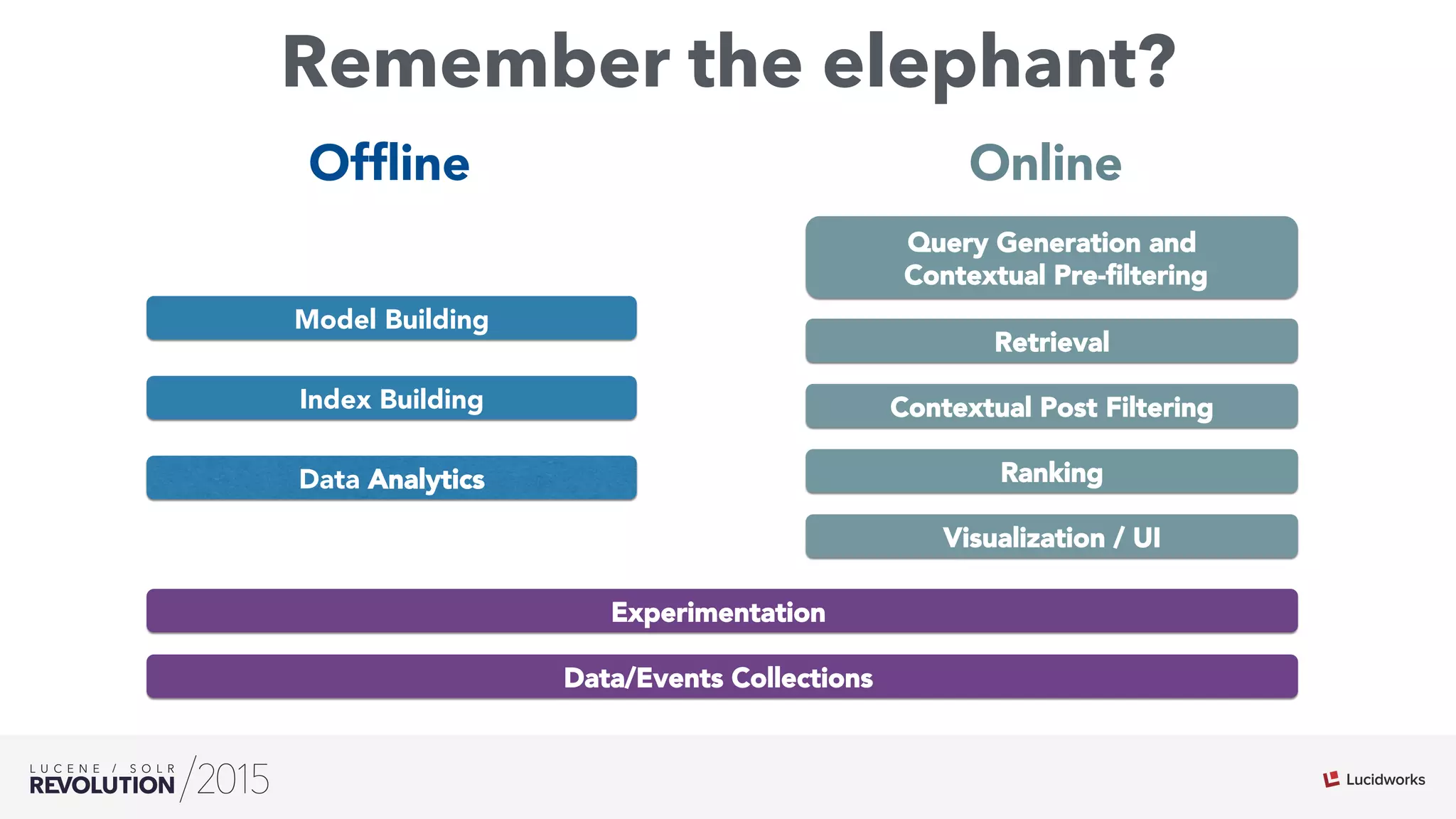

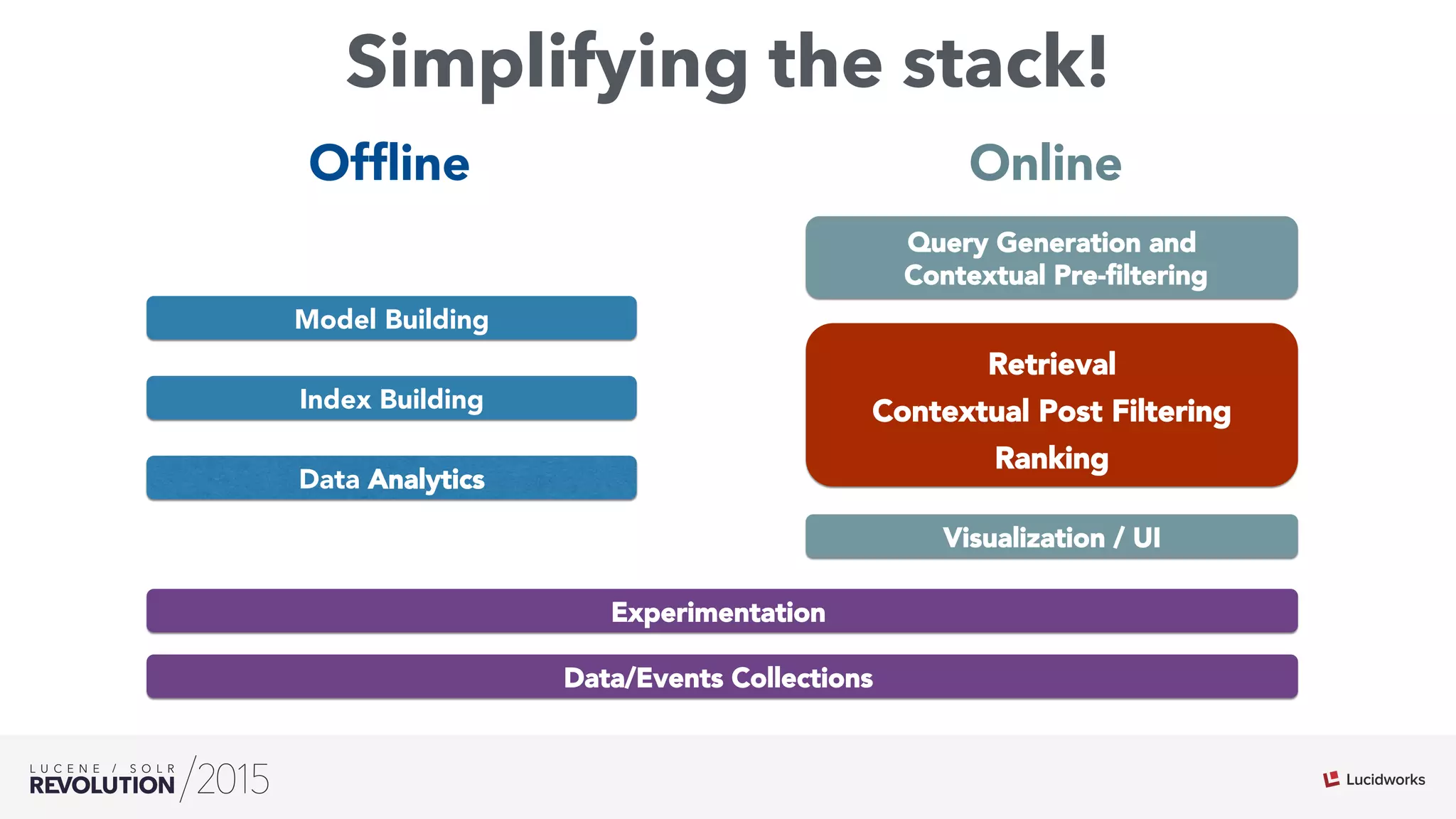

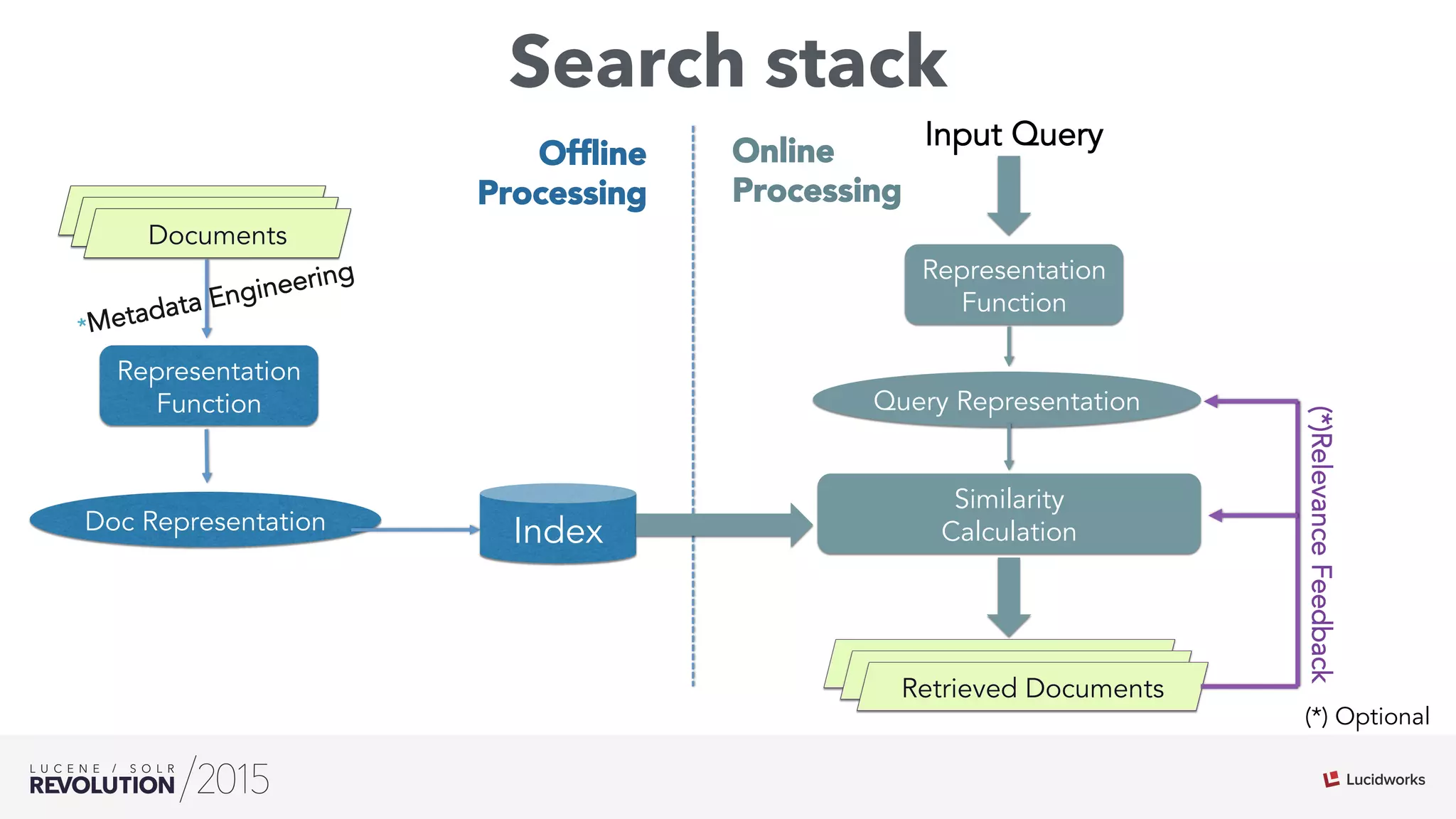

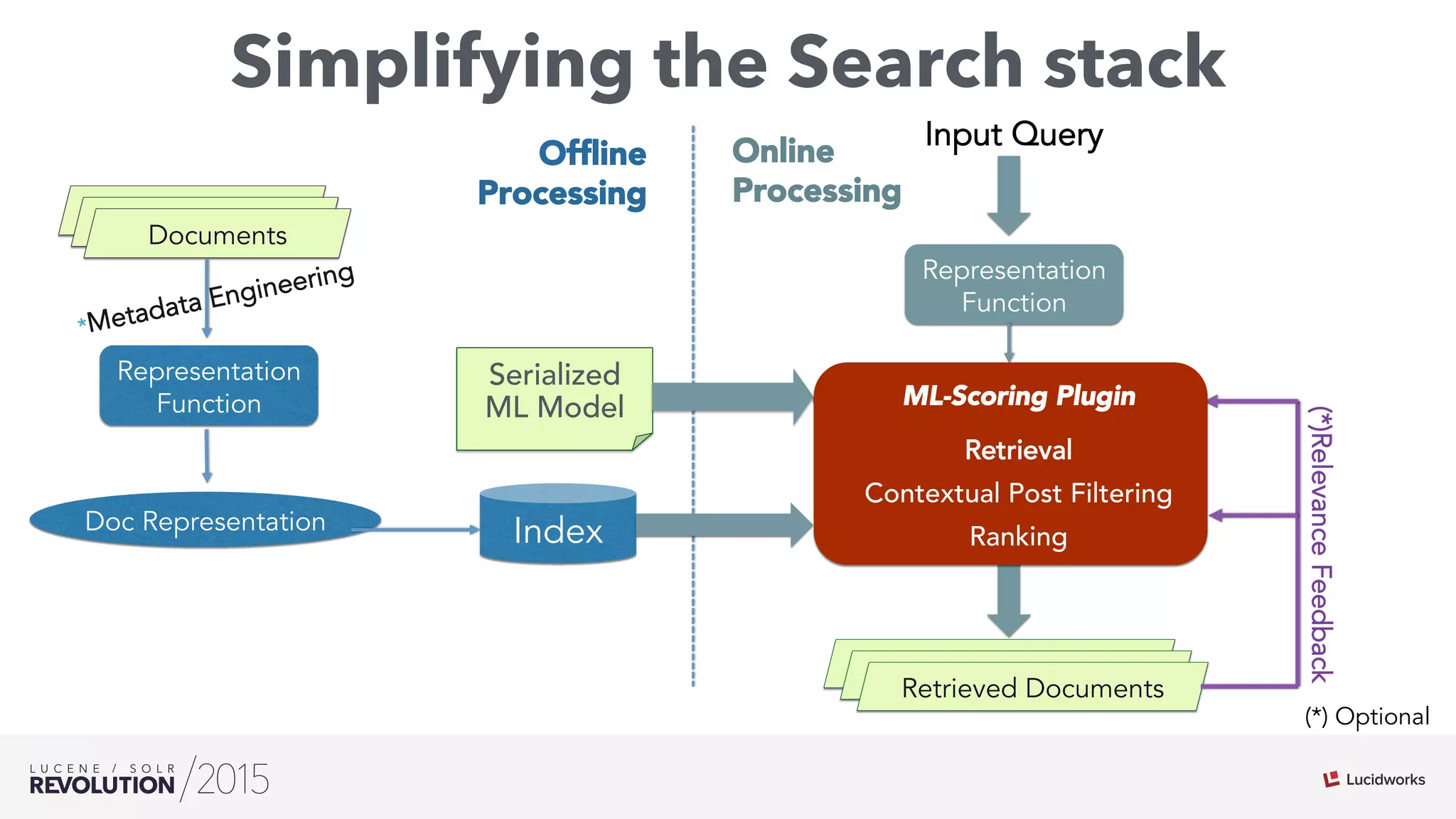

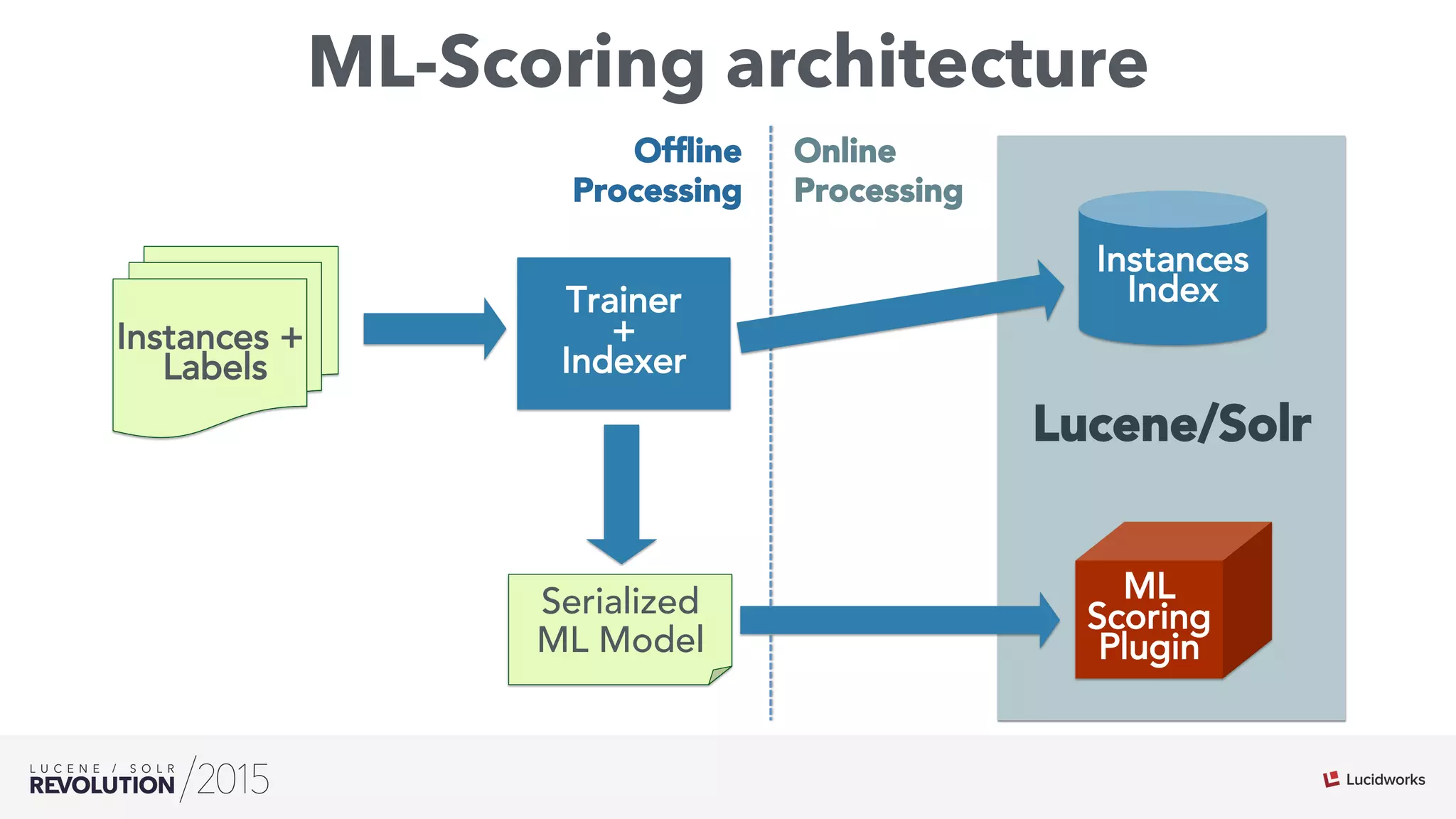

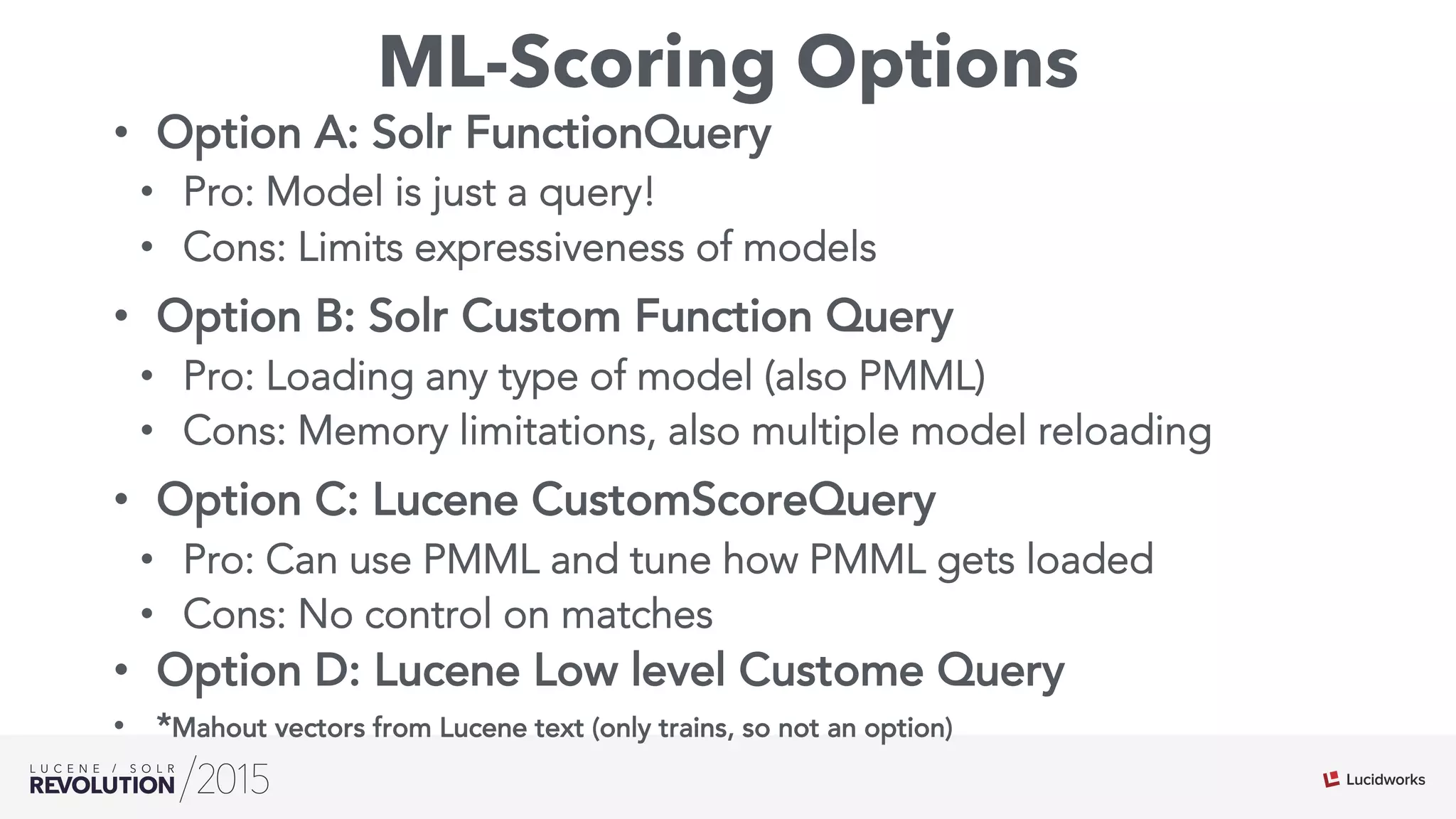

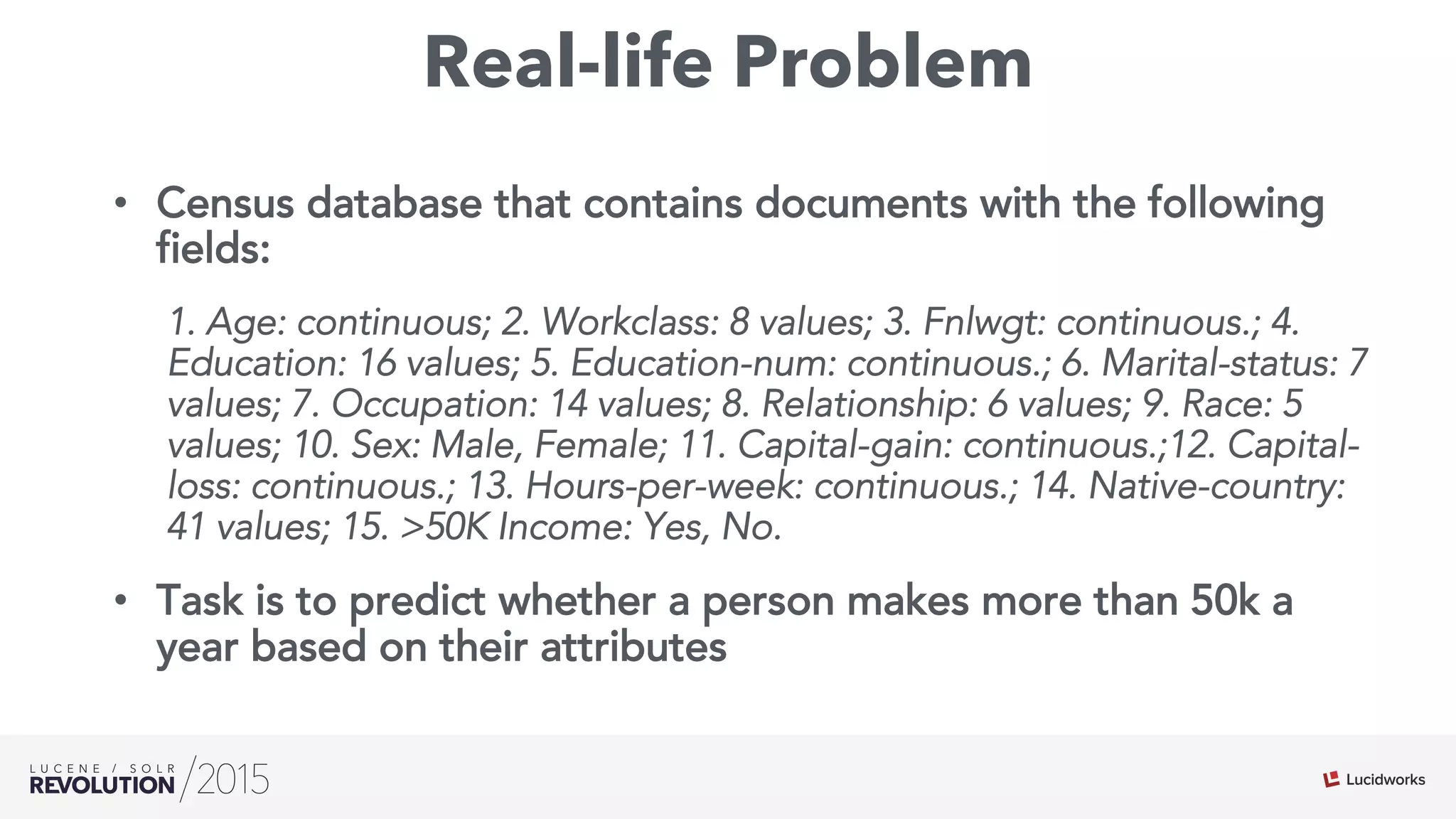

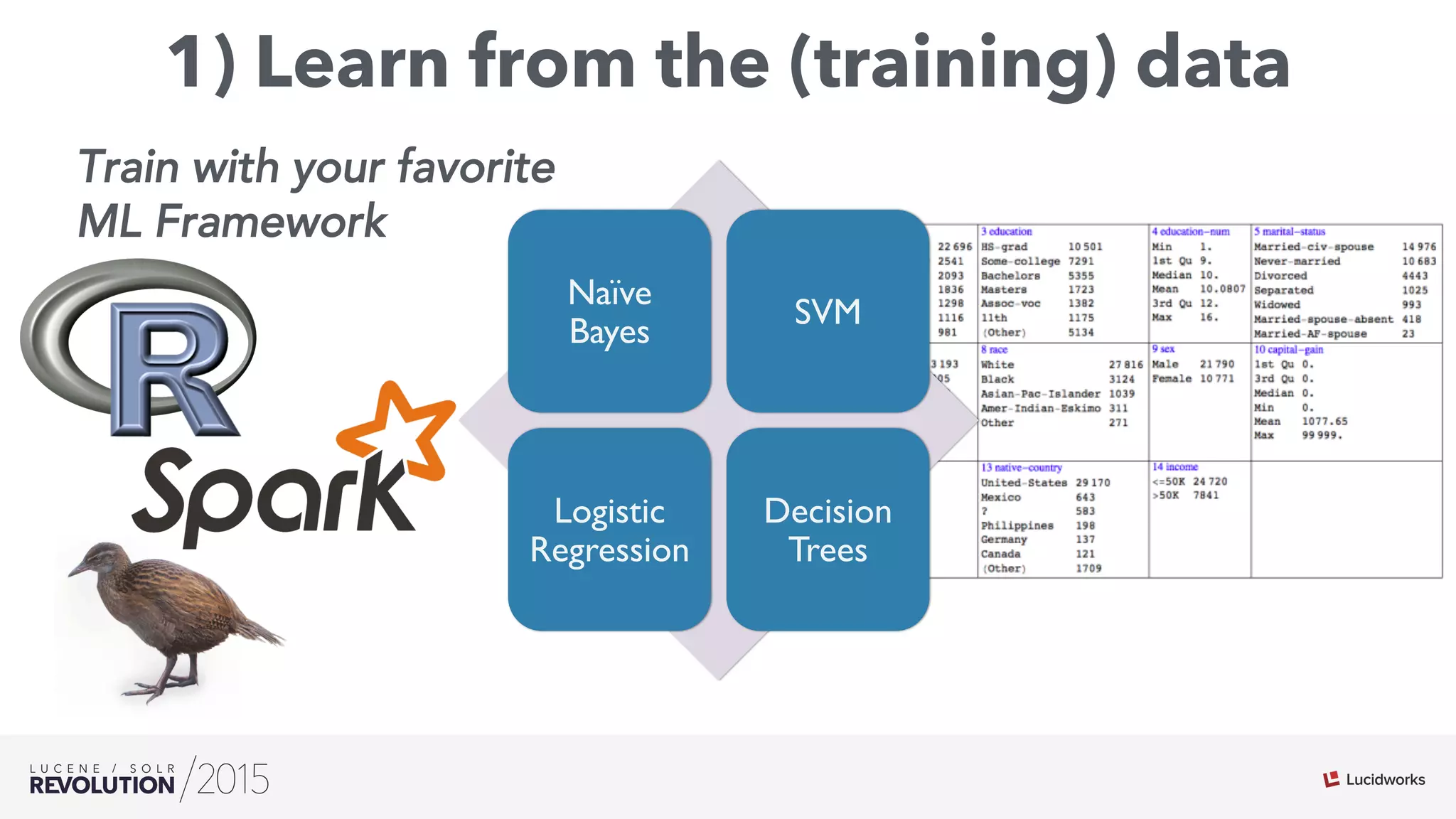

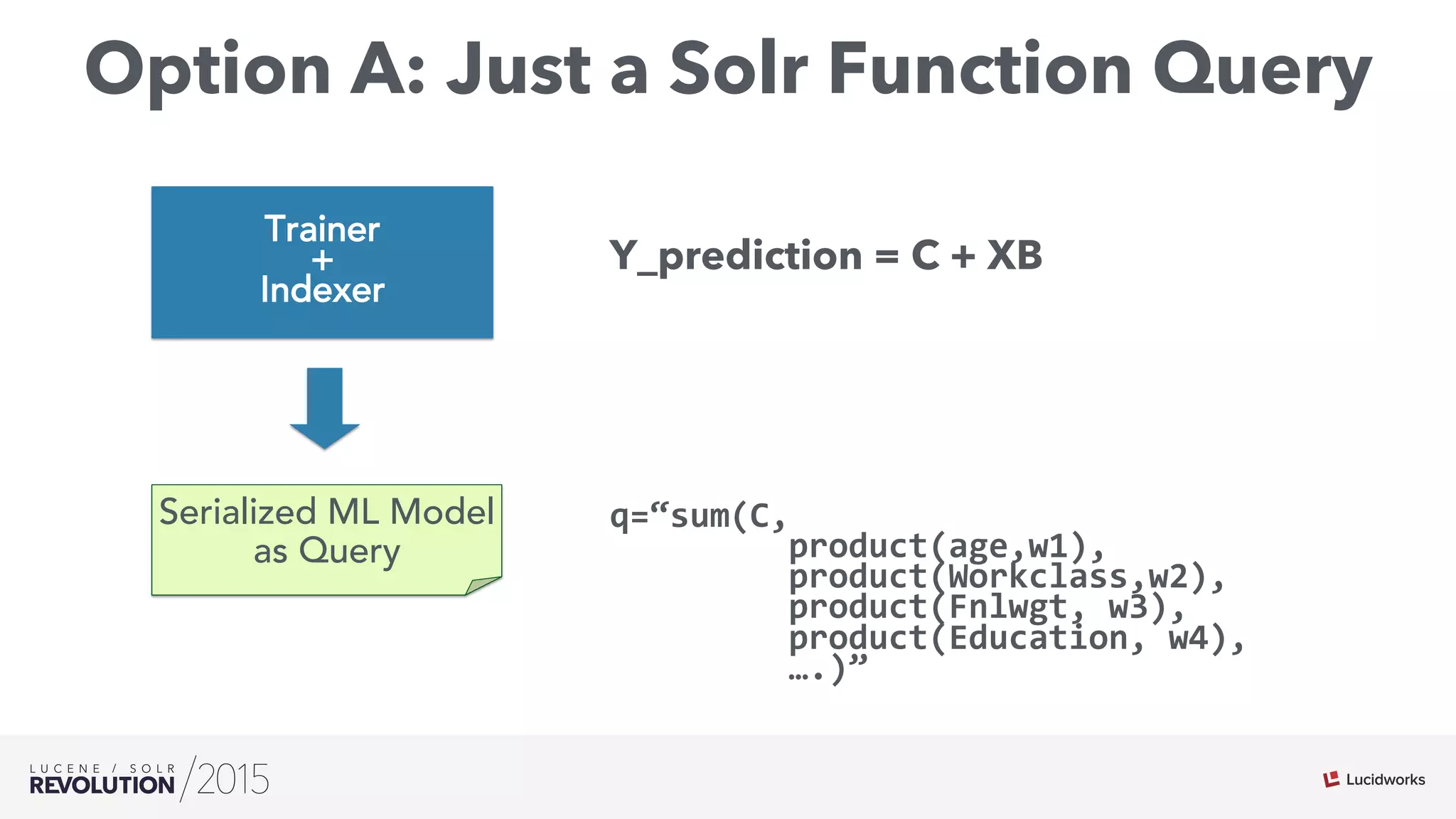

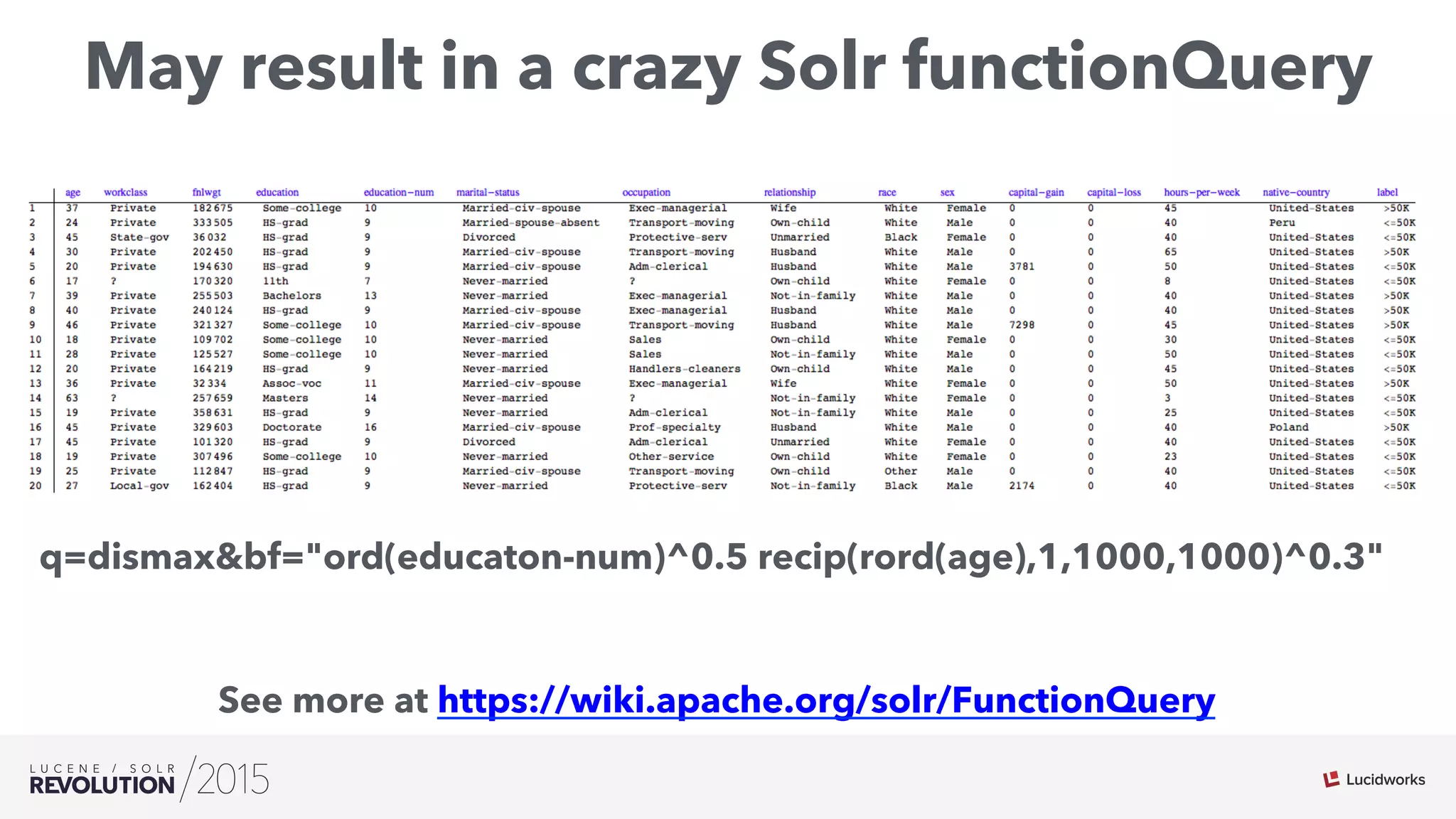

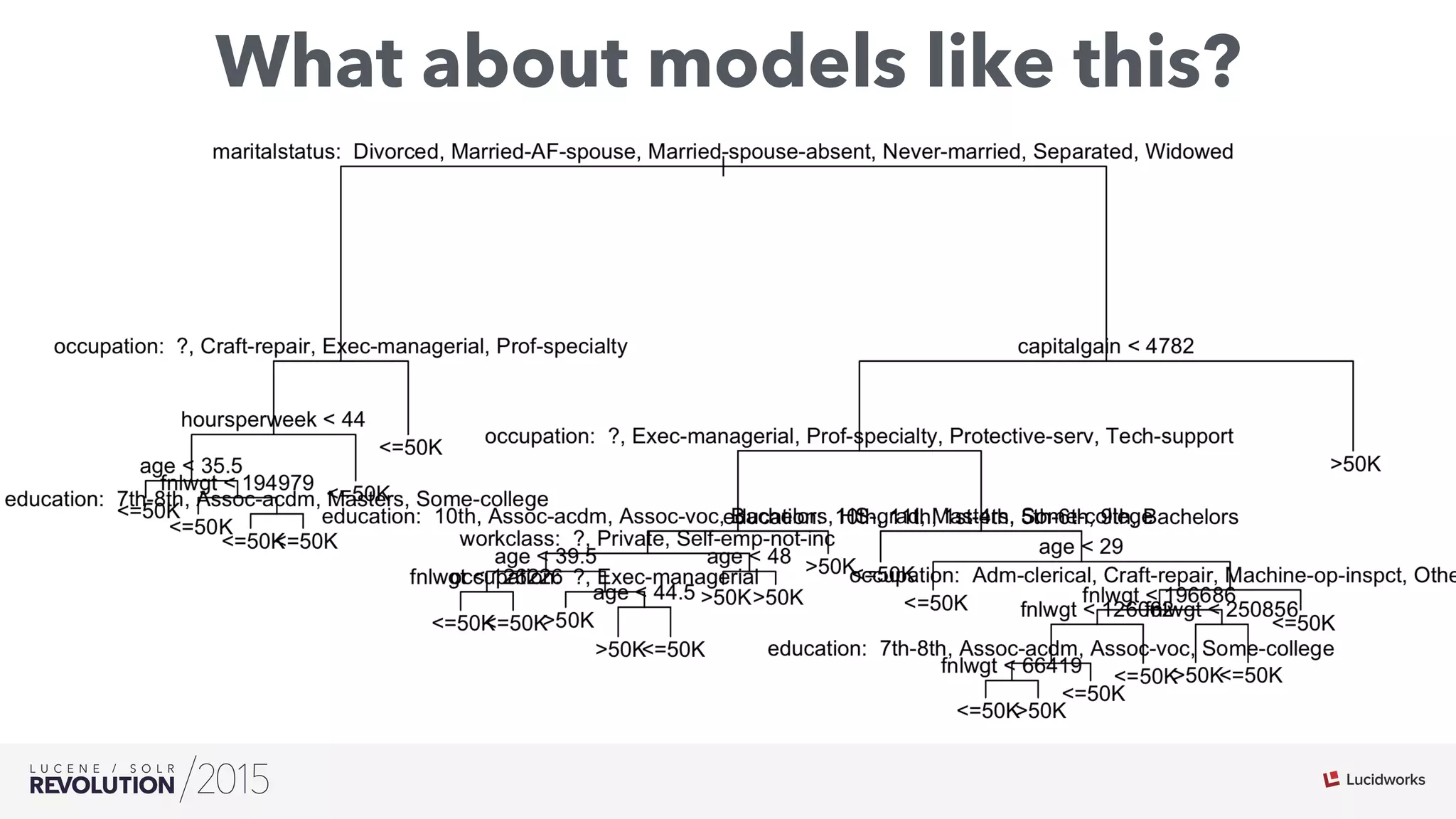

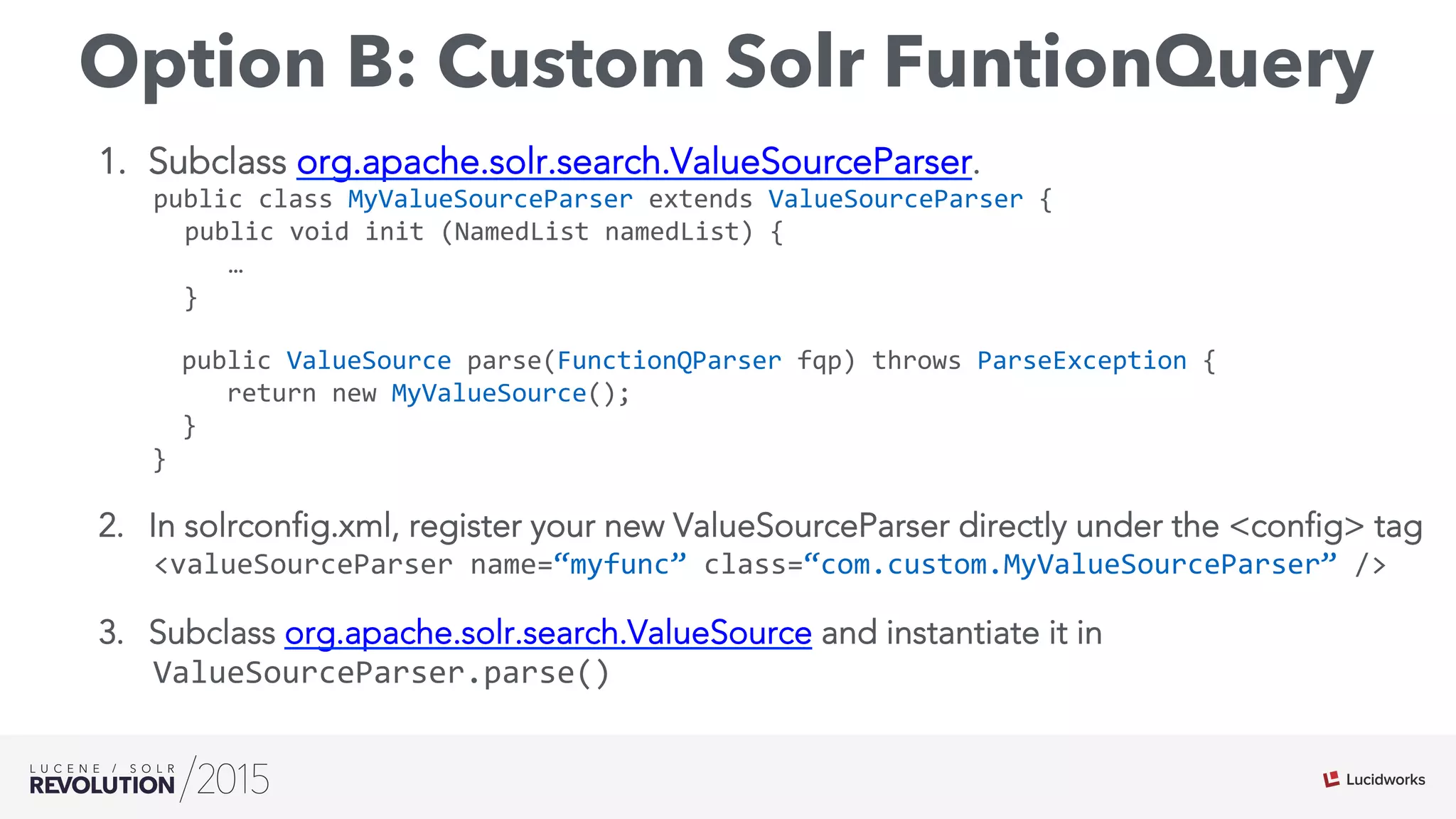

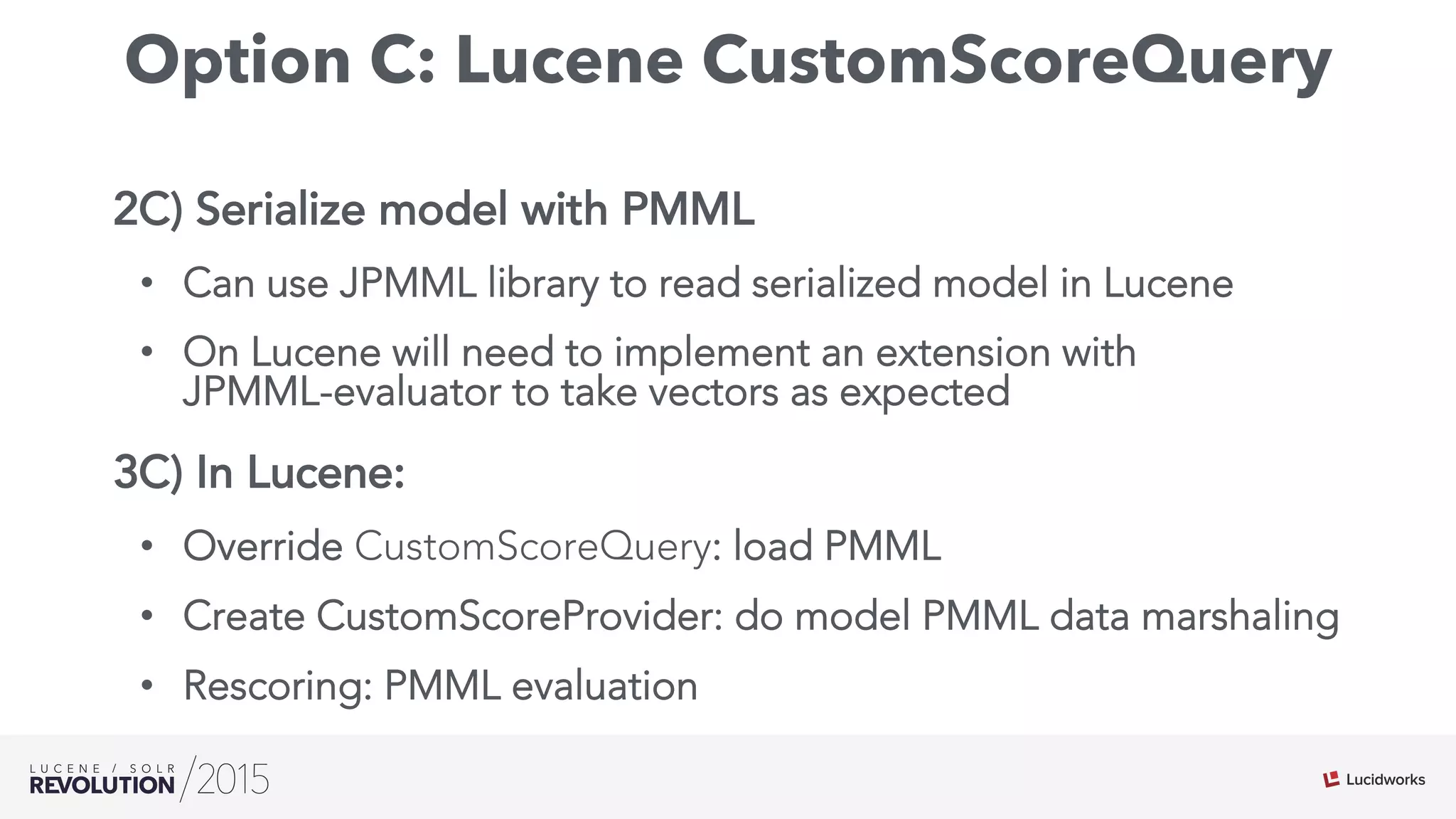

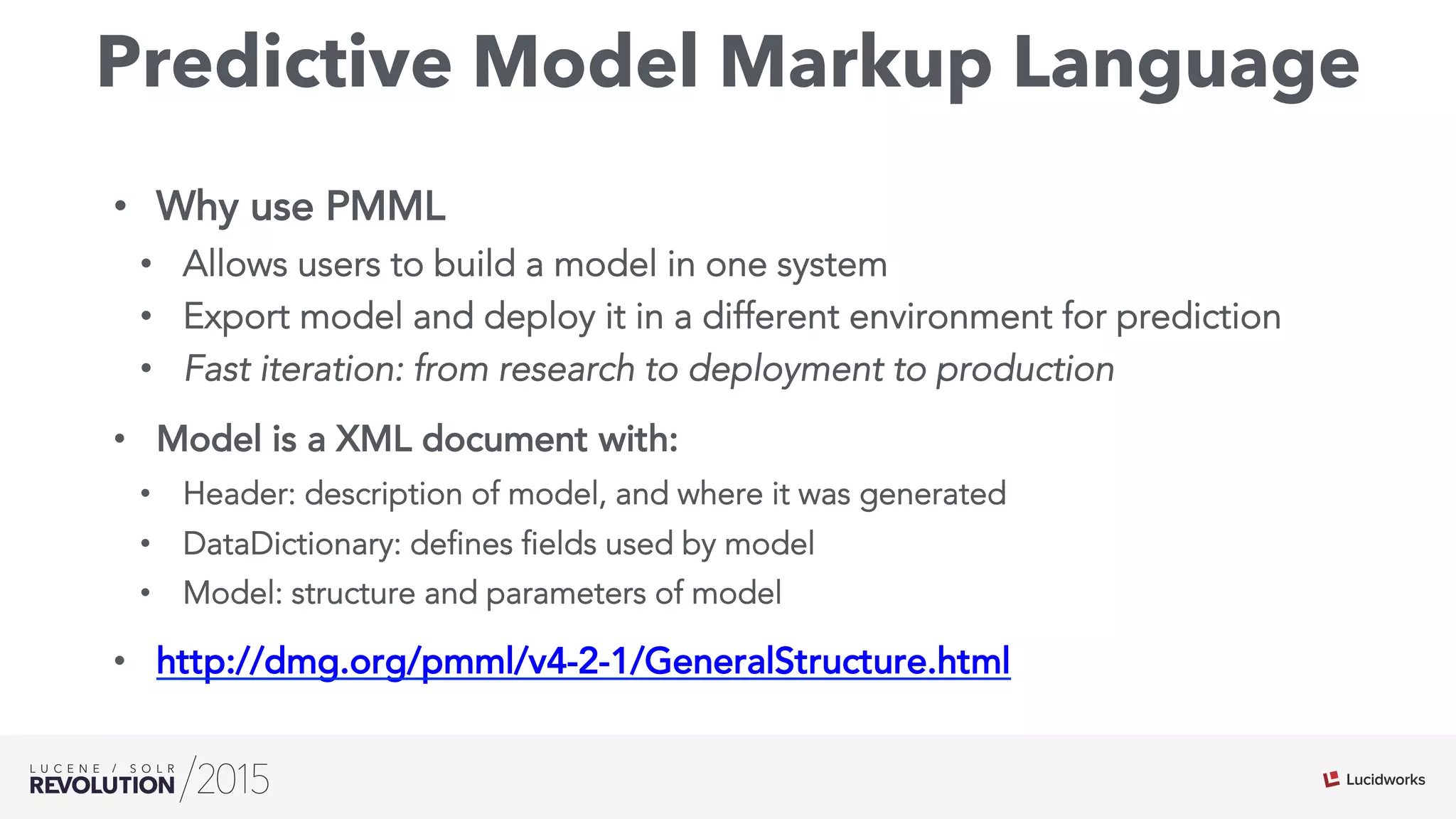

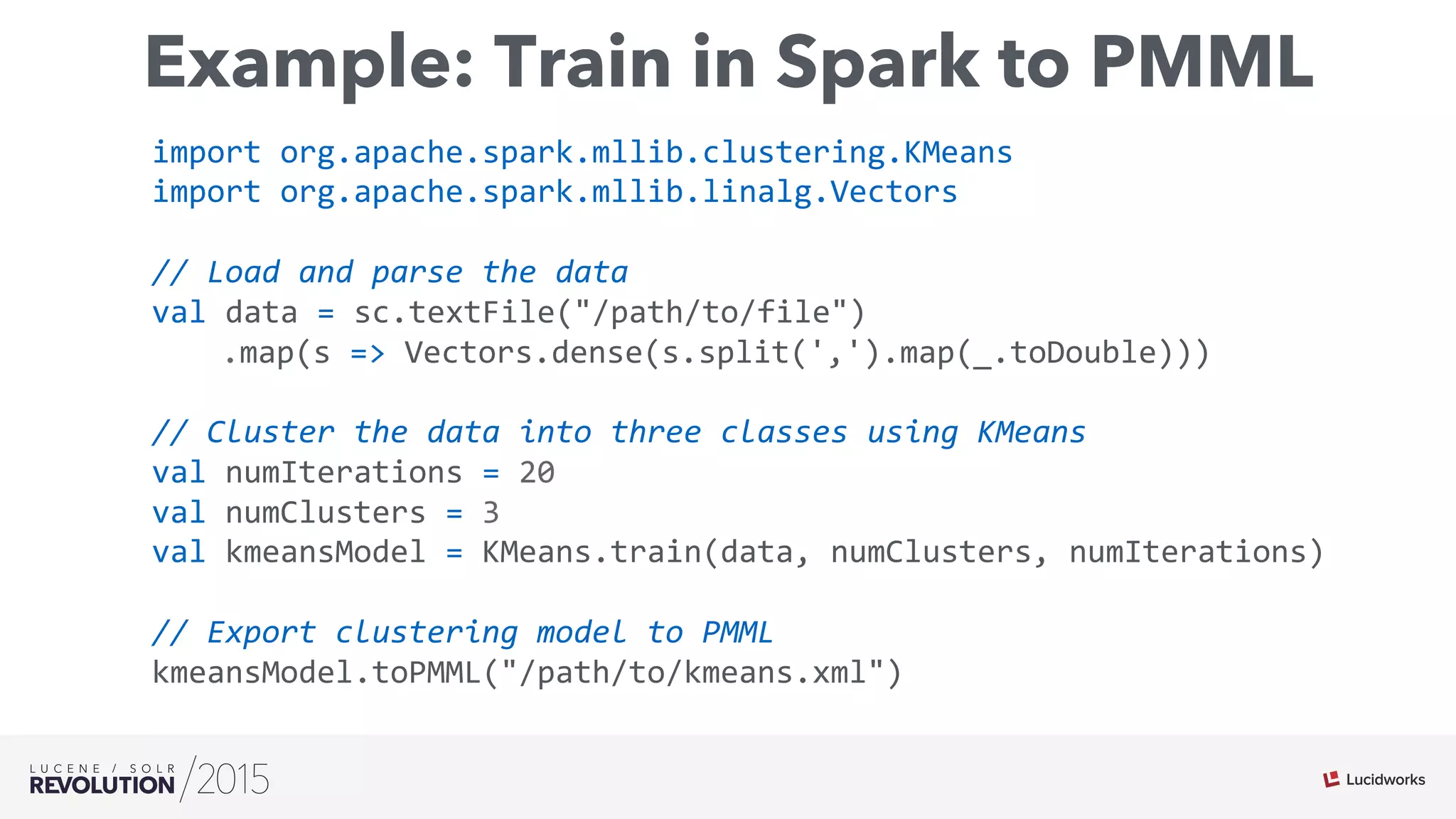

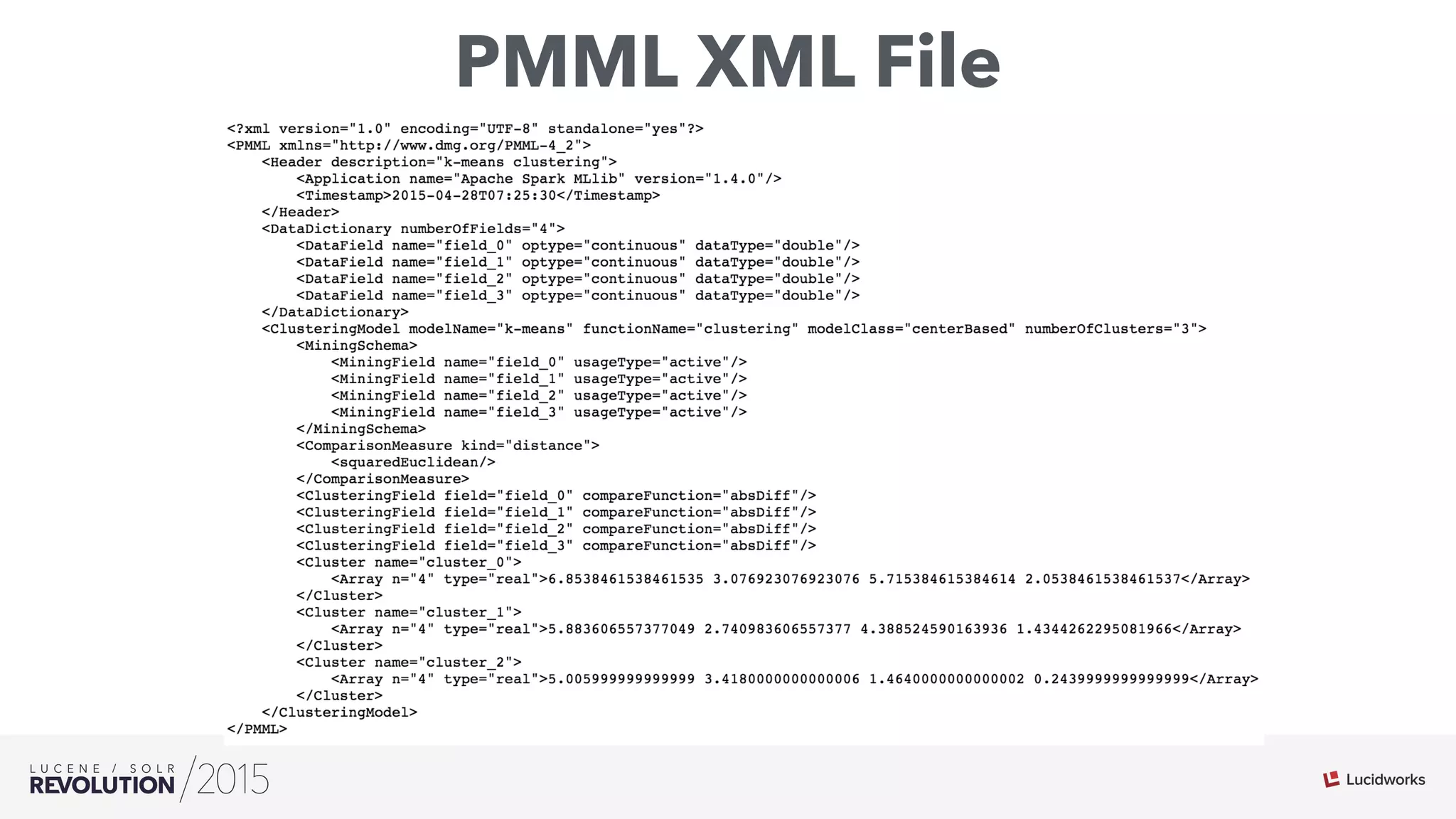

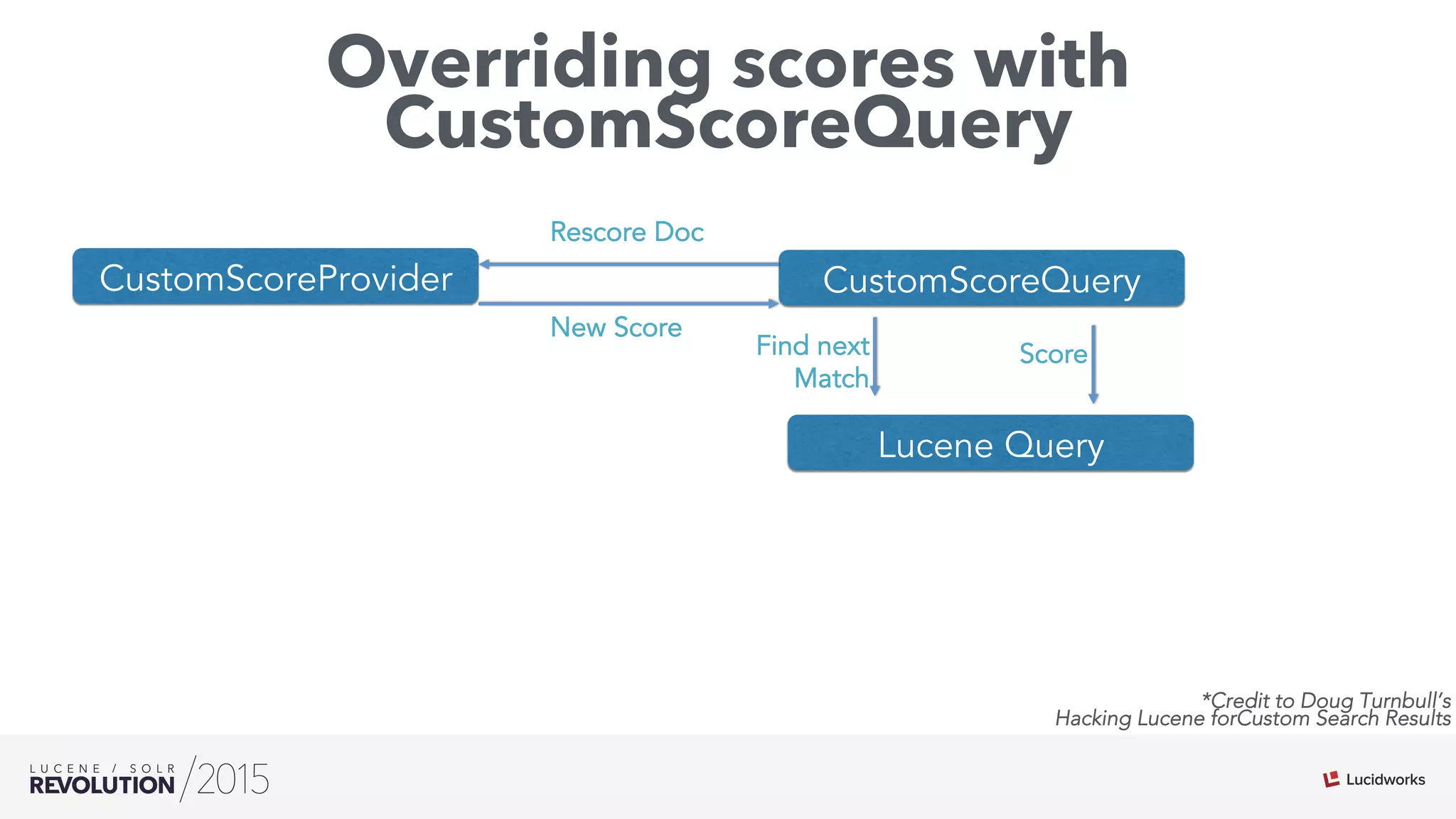

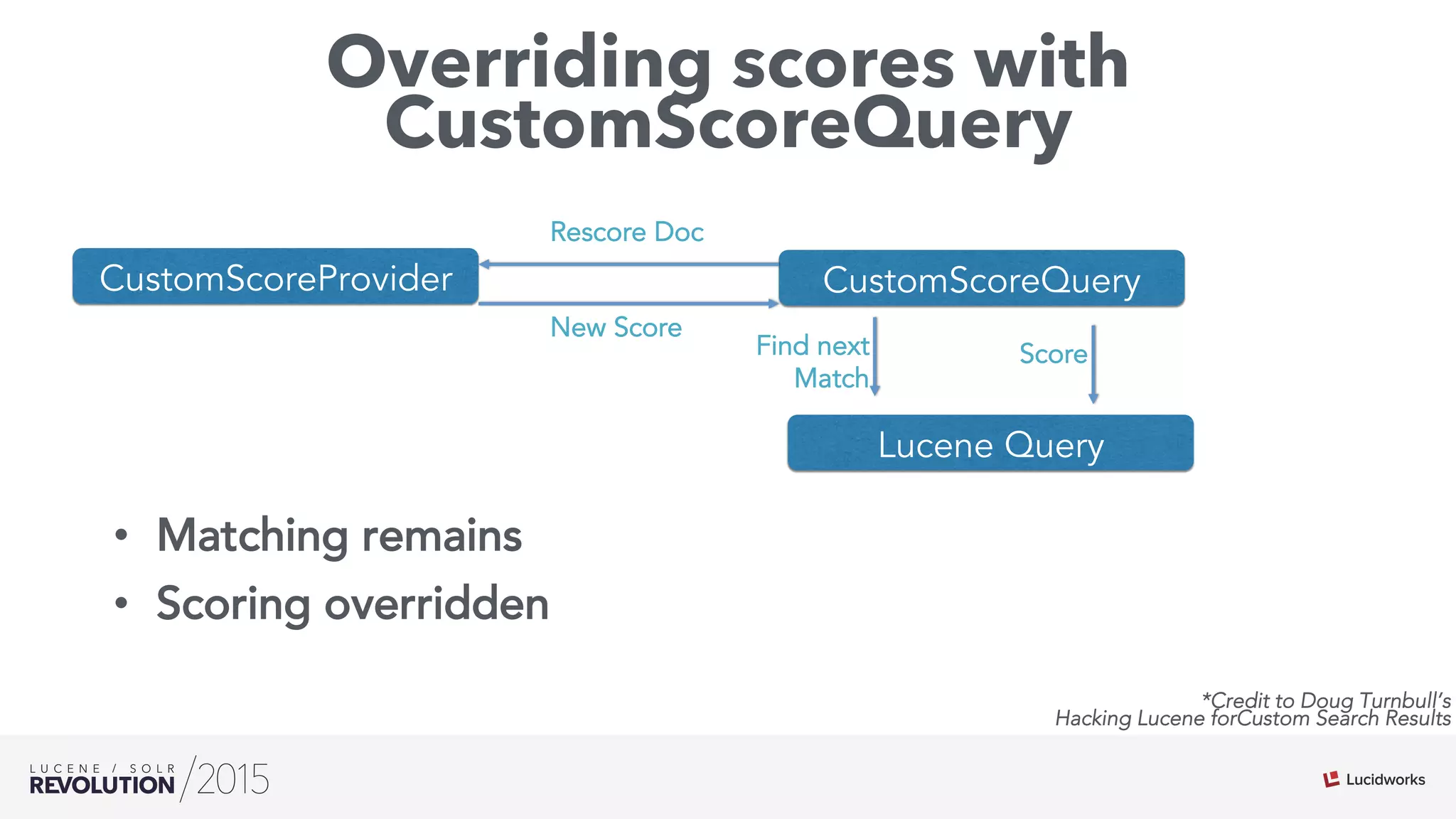

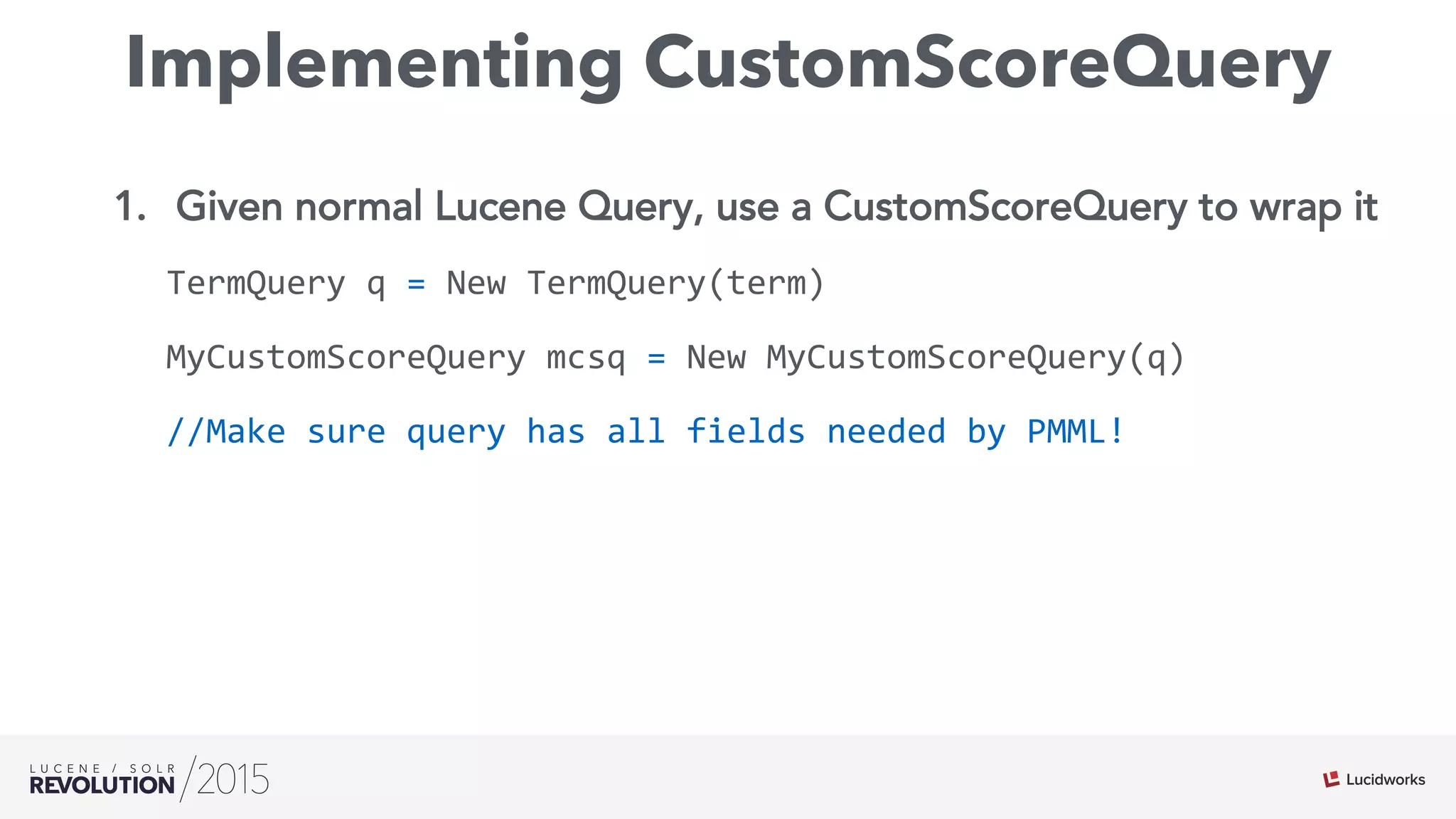

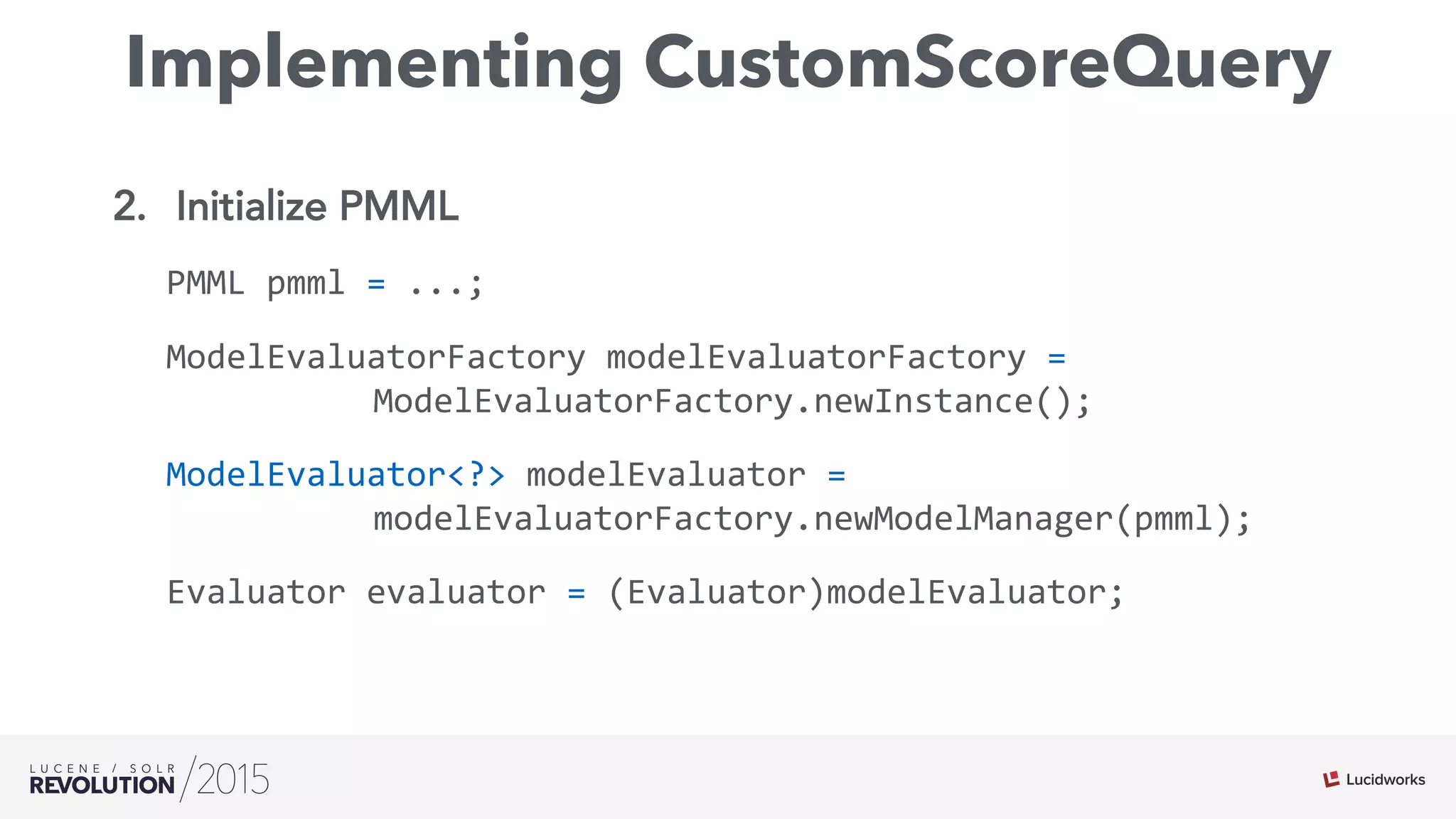

The document discusses the intersection of search technologies and machine learning, highlighting the challenges of scaling learning systems for real-world applications. It presents strategies for integrating machine learning into search systems, such as custom scoring queries and the Predictive Model Markup Language (PMML) for model interoperability. The authors emphasize how hard it is to build learning systems that are both effective and efficient, and they address performance, scalability, and system architecture.

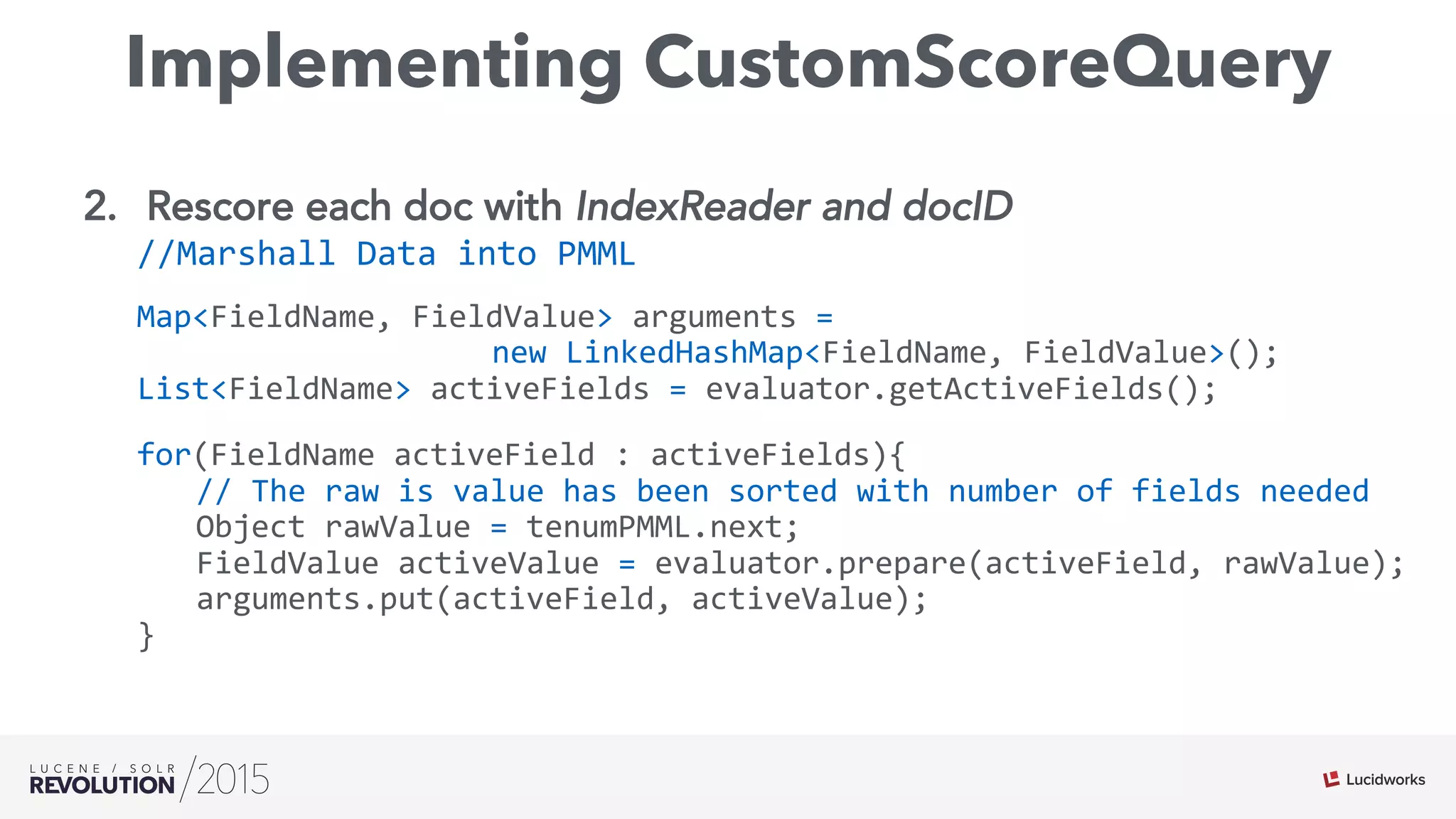

![Implementing CustomScoreQuery
2. Rescore each doc with IndexReader and docID
public float customScore(int doc, float subQueryScore, float valSrcScores[]) throws IOException {
//Lucene reader
IndexReader r = context.reader();
Terms tv = r.getTermVector(doc, _field);
TermsEnum tenum = null;
tenum = tv.iterator(tenum);
//convert the iterator order to fields needed by model
TermsEnum tenumPMML = tenum2PMML(tenum, evaluator.getActiveFields());](https://image.slidesharecdn.com/rev2015hudelgadofinal-151015220607-lva1-app6892/75/Lucene-Solr-Revolution-2015-Where-Search-Meets-Machine-Learning-43-2048.jpg)
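
The slide stops before showing how the reordered terms are scored. The following is a minimal, dependency-free sketch of that idea, not the authors' implementation: a document's term frequencies are rearranged into the field order a model expects, then scored. The class `RescoreSketch`, the methods `toFeatureVector` and `modelScore`, and the linear model itself are assumptions made for illustration. A real implementation would read the terms from Lucene's term vector and delegate scoring to a PMML evaluator, and how the model score is combined with `subQueryScore` is not stated on the slide.

```java
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for the slide's rescoring step.
// No Lucene or PMML classes are used; all names here are illustrative.
class RescoreSketch {

    // Reorders per-document term frequencies into the order of the model's
    // active fields. Terms the model does not use are dropped, and fields
    // missing from the document default to 0.
    static double[] toFeatureVector(Map<String, Integer> termFreqs,
                                    List<String> activeFields) {
        double[] features = new double[activeFields.size()];
        for (int i = 0; i < activeFields.size(); i++) {
            features[i] = termFreqs.getOrDefault(activeFields.get(i), 0);
        }
        return features;
    }

    // Assumed linear model: bias plus the dot product of weights and features.
    // A PMML evaluator could wrap any model type; this is only a placeholder.
    static double modelScore(double[] features, double[] weights, double bias) {
        double score = bias;
        for (int i = 0; i < features.length; i++) {
            score += weights[i] * features[i];
        }
        return score;
    }

    public static void main(String[] args) {
        // Term frequencies as they might come from one document's term vector.
        Map<String, Integer> termFreqs = Map.of("solr", 2, "lucene", 1, "noise", 5);
        // Field order required by the (hypothetical) model.
        List<String> activeFields = List.of("lucene", "search", "solr");

        double[] features = toFeatureVector(termFreqs, activeFields);
        double score = modelScore(features, new double[] {0.5, 1.0, 0.25}, 0.1);
        System.out.println("model score = " + score);
    }
}
```

Keeping the reordering step separate from scoring mirrors the slide's `tenum2PMML` call: the index yields terms in its own iteration order, while the model defines which fields it needs and in what order.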