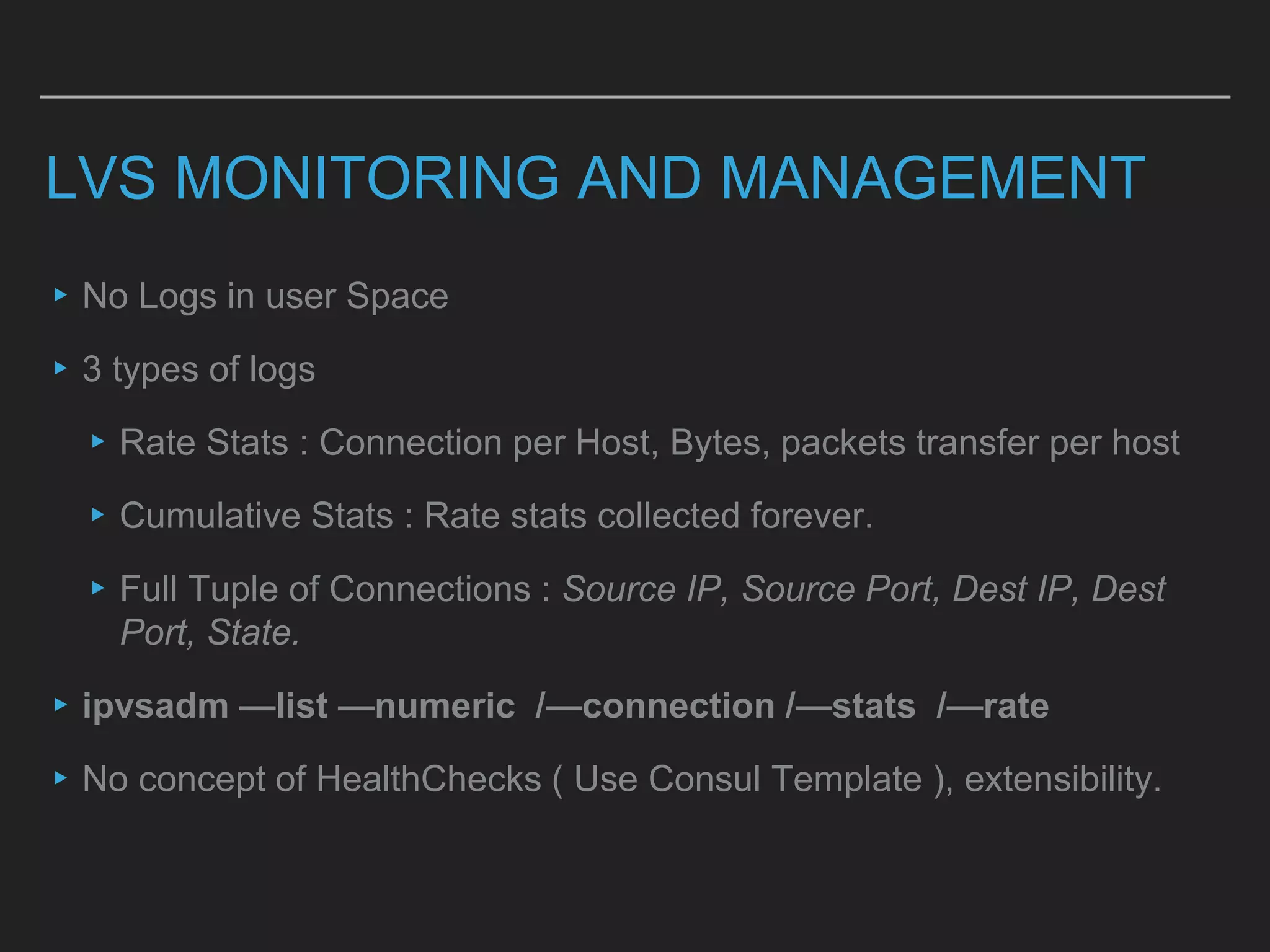

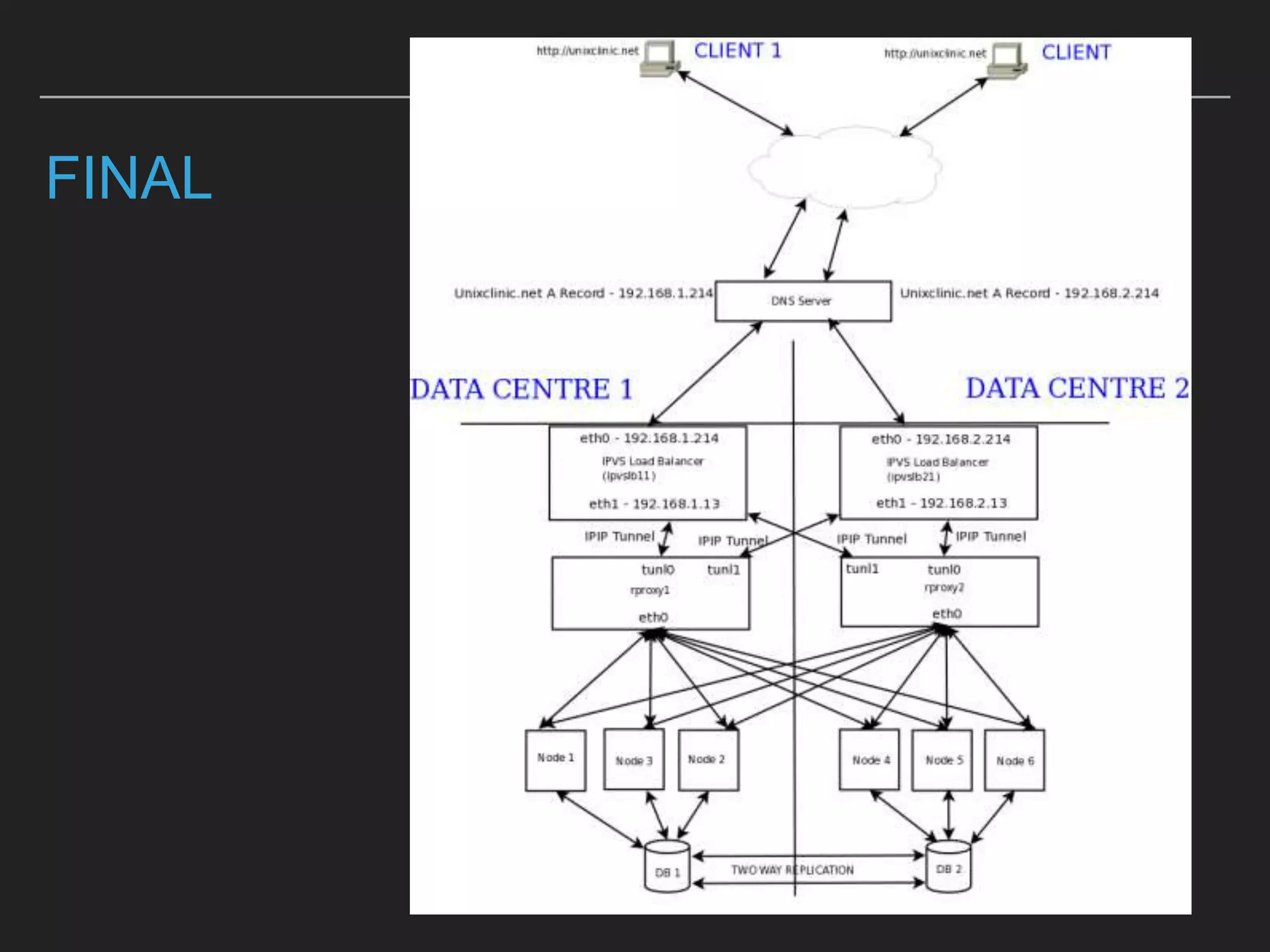

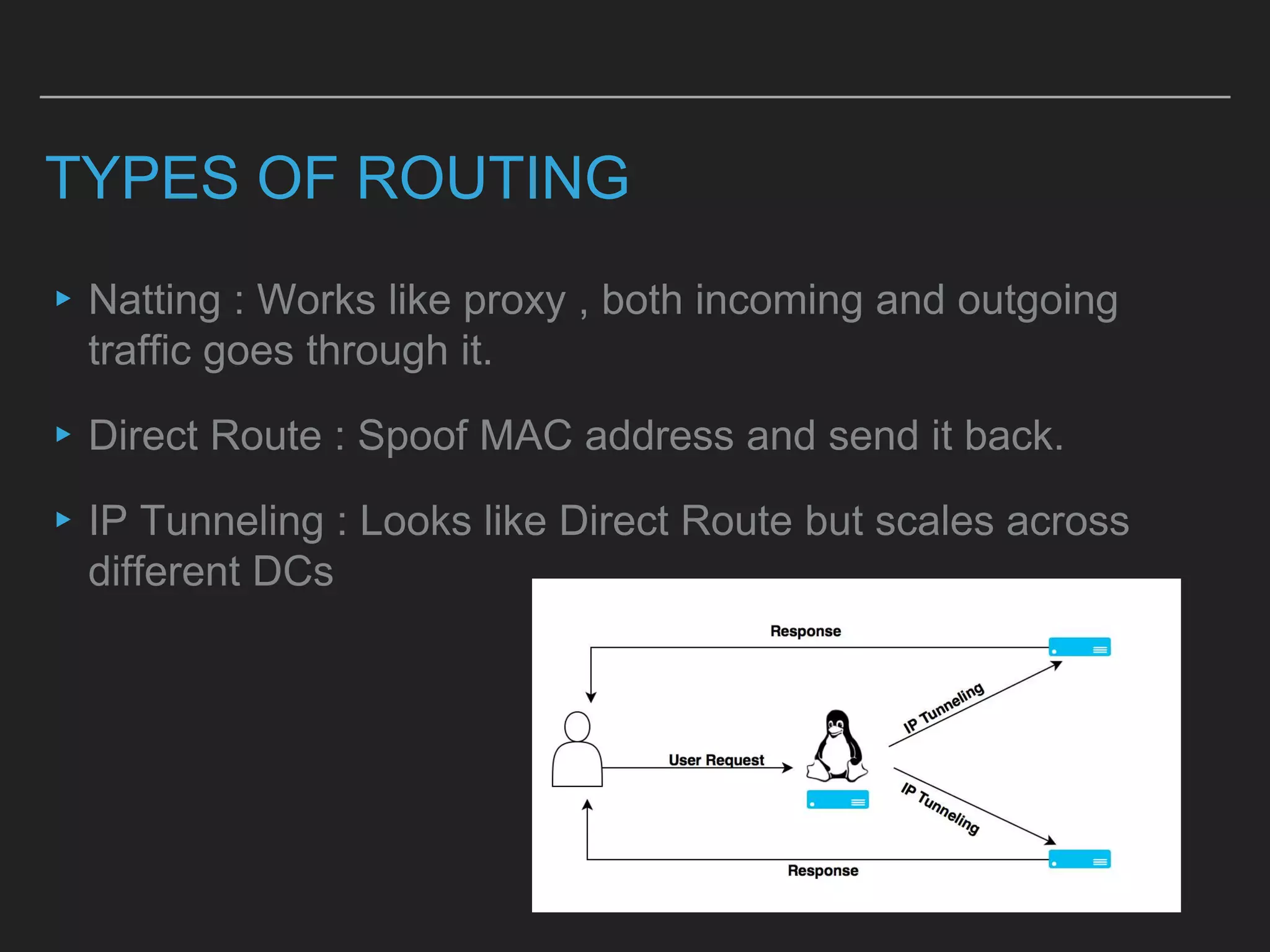

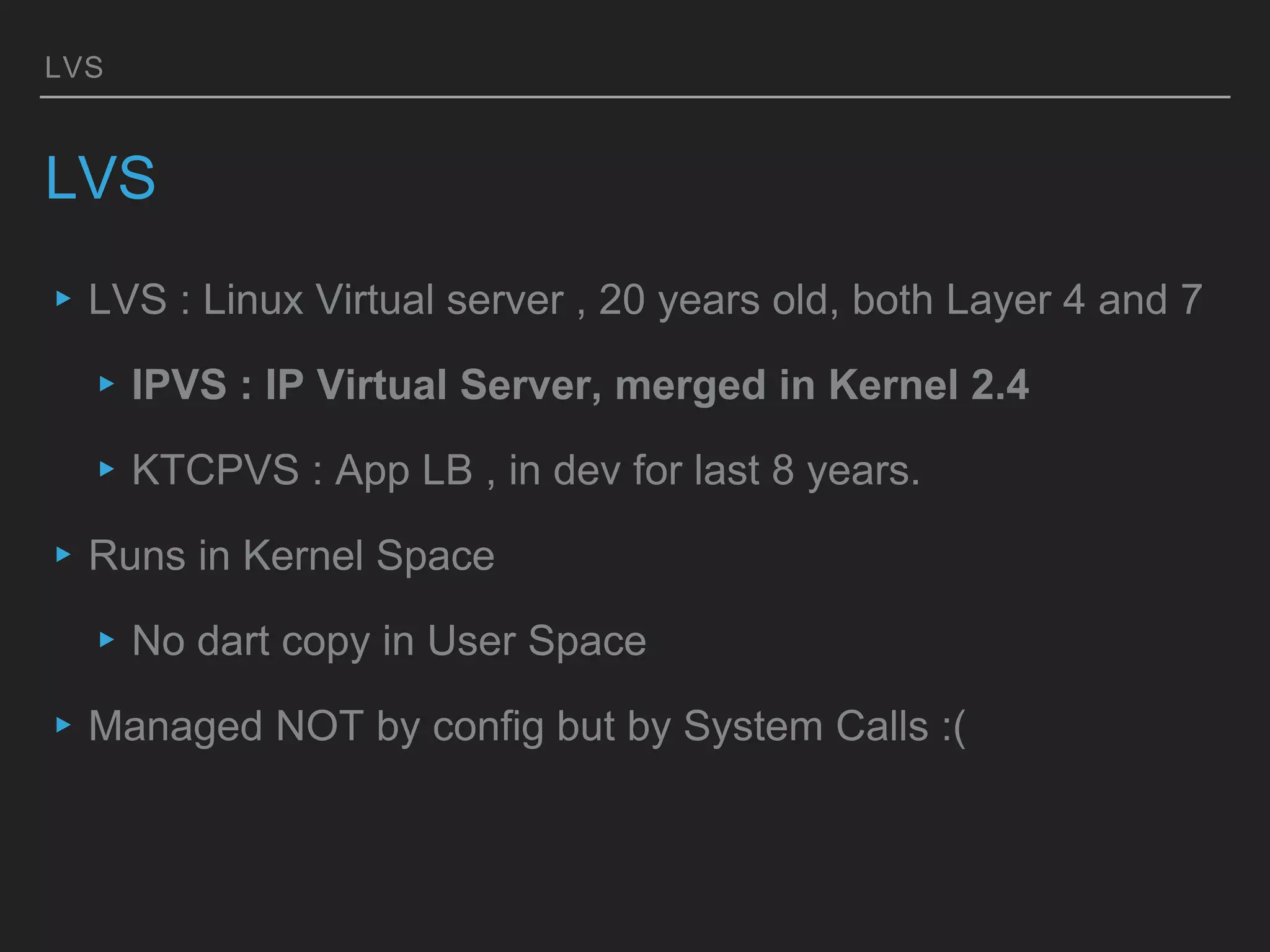

This document surveys load balancing techniques and studies in depth how to scale a load-balancing tier to 80,000 transactions per second (TPS). It evaluates DNS-based, layer 3/4, layer 7, and hybrid approaches. It also walks through setting up Linux Virtual Server (LVS) on a director server and configuring the real servers behind it. Along the way it notes caveats such as disabling reverse path filtering on the real servers and balancing interrupt processing across CPU cores.
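On Linux, interrupt and packet-processing load can be balanced across cores in several ways, for example `irqbalance`, `/proc/irq/<N>/smp_affinity`, or Receive Packet Steering (RPS). The text does not say which one the deck uses. The sketch below assumes RPS. Both `rps_cpus` and `smp_affinity` take a hexadecimal CPU bitmask. The helper names `cpu_mask` and `apply_rps`, and the interface name `eth0` in the usage note, are hypothetical.

```sh
#!/bin/sh
# Sketch (assumption: RPS is the balancing mechanism; the deck may use another).
set -eu

# Print a hex bitmask selecting CPUs 0..n-1 (1 <= n <= 32), e.g. n=8 -> ff.
cpu_mask() {
  n=$1
  if [ "$n" -lt 1 ] || [ "$n" -gt 32 ]; then
    echo "cpu_mask: n must be between 1 and 32" >&2
    return 1
  fi
  bit=$((1 << n))
  printf '%x\n' $((bit - 1))
}

# Write MASK into rps_cpus for every RX queue of IFACE.
# ROOT defaults to /sys; pass a scratch directory to try it without root.
apply_rps() {
  iface=$1 mask=$2 root=${3:-/sys}
  for f in "$root"/class/net/"$iface"/queues/rx-*/rps_cpus; do
    [ -e "$f" ] || continue   # glob matched nothing: no RX queues found
    echo "$mask" > "$f"
  done
}
```

On a host with 8 cores, running `apply_rps eth0 "$(cpu_mask 8)"` as root would write `ff` to each receive queue's `rps_cpus`. The optional third argument exists only so the function can be exercised against a fake directory tree.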

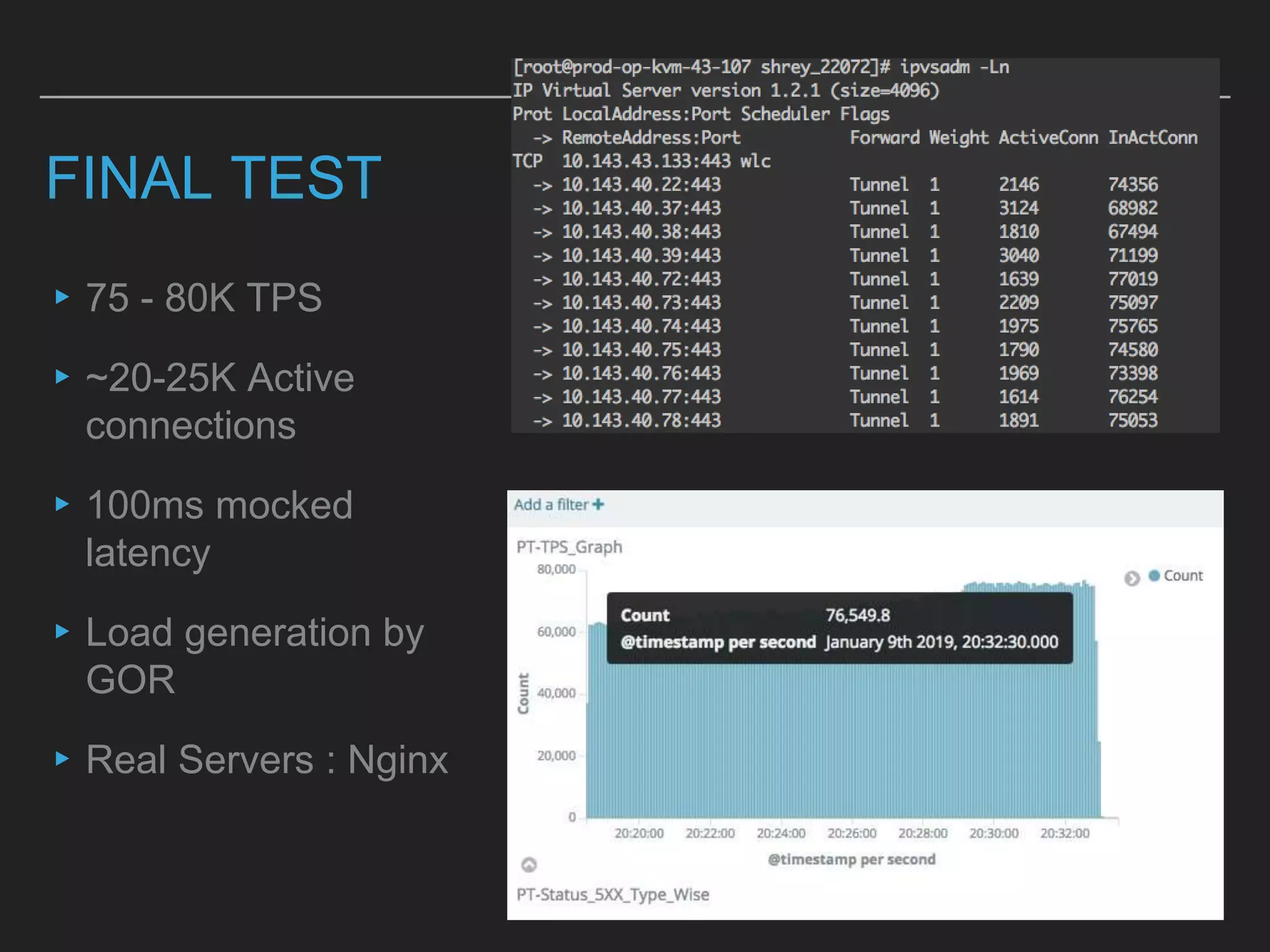

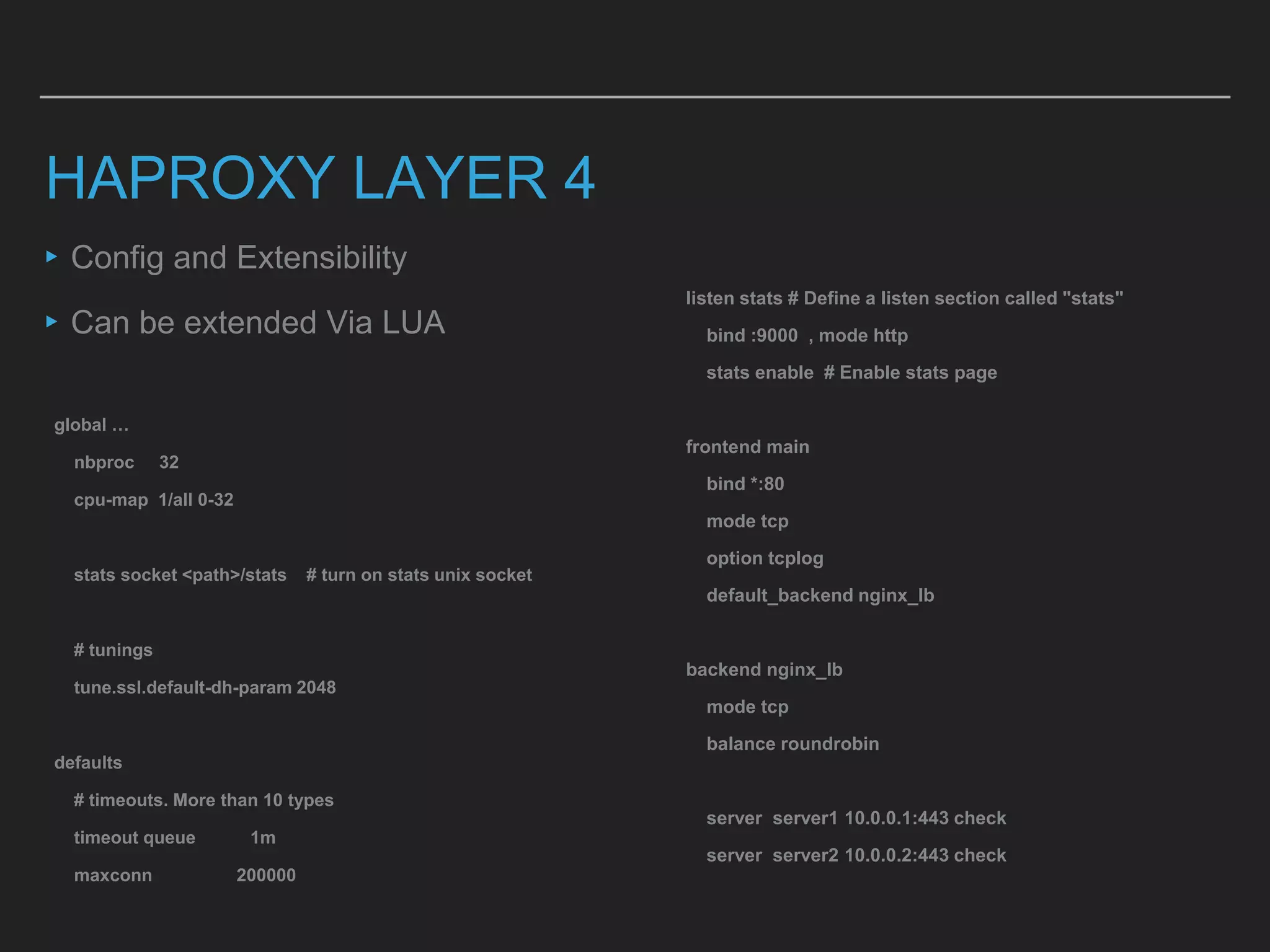

![LVS IMPLEMENTATION STEPS

# SETUP LVS

$ yum -y install ipvsadm

$ touch /etc/sysconfig/ipvsadm

$ systemctl start ipvsadm && systemctl enable ipvsadm

$ echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

$ sysctl -p # load the new setting without a reboot

# CONFIGURE LVS

$ ipvsadm -C # clear tables

# add virtual service [ ipvsadm -A -t (Service IP:Port) -s (Distribution method) ]

$ ipvsadm -A -t 10.143.45.105:80 -s wlc # same VIP as the backends below; wlc = weighted least-connection

# ADD BACKEND SERVERS [ ipvsadm -a -t (Service IP:Port) -r (Real Server's IP:Port) -i ] (-i = IP-in-IP tunneling)

$ ipvsadm -a -t 10.143.45.105:80 -r 10.0.0.1:80 -i

# confirm tables

$ ipvsadm -ln

# ON REAL SERVERS

$ modprobe ipip # loading the ipip module creates the tunl0 device

$ ip addr add <VIP>/32 dev tunl0 brd <VIP>

$ ip link set tunl0 up arp off

# TURN RP FILTER OFF (covered later)

‣ LVS Server Setup on Director

‣ Service Setup

‣ Configure LVS

‣ Real Server Setup](https://image.slidesharecdn.com/loadbalancingppt-190301093723/75/Loadbalancing-In-depth-study-for-scale-80K-TPS-12-2048.jpg)
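The slide's director and real-server commands can be collected into one reusable script. This is a sketch under stated assumptions. The VIP `10.143.45.105`, real server `10.0.0.1`, port 80, the `wlc` scheduler and the `-i` (tunneling) flag come from the slide. The names `RS_LIST`, `DRY_RUN`, `run`, `setup_director` and `setup_real_server` are hypothetical and introduced here. By default the script only prints each command. Setting `DRY_RUN=0` executes the commands, which requires root.

```sh
#!/bin/sh
# Sketch of the slide's LVS-TUN steps as functions (hypothetical names).
# DRY_RUN=1 (default) prints commands; DRY_RUN=0 runs them (needs root).
set -eu

VIP=${VIP:-10.143.45.105}      # virtual service IP from the slide
PORT=${PORT:-80}
SCHED=${SCHED:-wlc}            # weighted least-connection, as on the slide
RS_LIST=${RS_LIST:-"10.0.0.1"} # space-separated real-server IPs (assumed list)

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Director: one virtual service plus one tunneled (-i) real server per RS_LIST entry.
setup_director() {
  run ipvsadm -C
  run ipvsadm -A -t "$VIP:$PORT" -s "$SCHED"
  for rs in $RS_LIST; do
    run ipvsadm -a -t "$VIP:$PORT" -r "$rs:$PORT" -i
  done
  run ipvsadm -ln
}

# Real server: hold the VIP on the IPIP tunnel device and relax reverse path filtering.
setup_real_server() {
  run modprobe ipip
  run ip addr add "$VIP/32" dev tunl0 brd "$VIP"
  run ip link set tunl0 up arp off
  # The kernel applies the larger of conf.all and conf.tunl0, so both must be 0.
  run sysctl -w net.ipv4.conf.all.rp_filter=0
  run sysctl -w net.ipv4.conf.tunl0.rp_filter=0
}
```

Run `setup_director` on the director and `setup_real_server` on each real server. Printing by default makes it possible to review the generated `ipvsadm` lines before they touch a live director.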