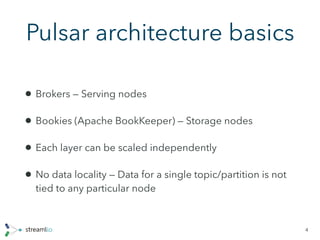

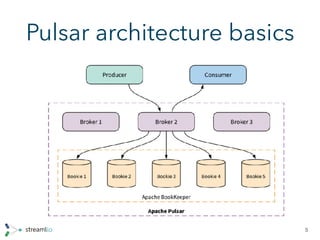

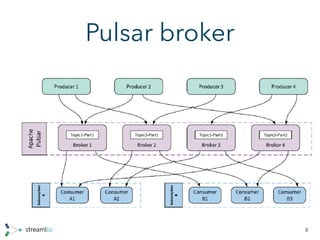

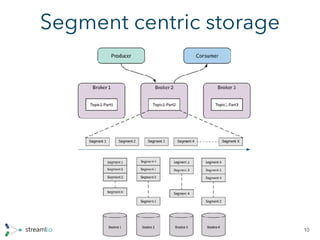

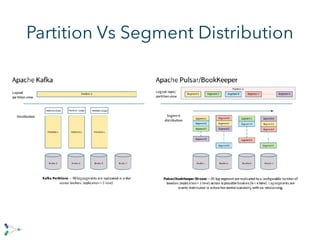

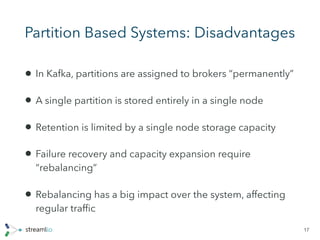

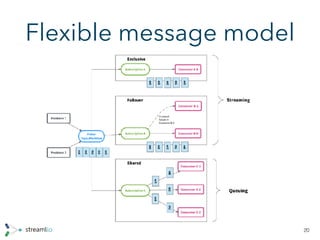

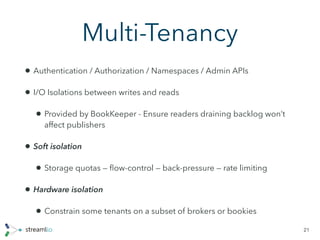

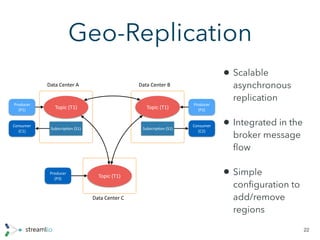

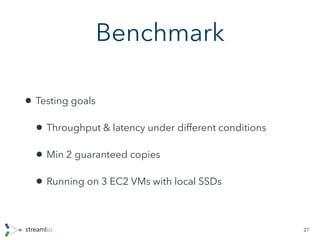

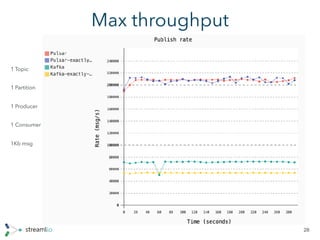

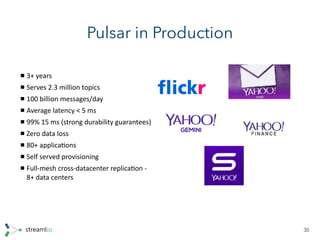

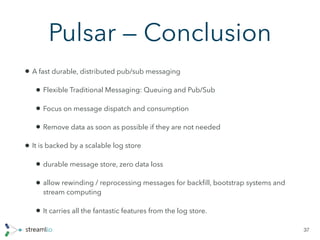

Apache Pulsar is a fast, highly scalable, and flexible pub/sub messaging system. It provides guaranteed message delivery, per-partition ordering, and durability by persisting messages in replicated log storage (Apache BookKeeper). Because serving (brokers) and storage (BookKeeper nodes, or bookies) are separate layers, each can be scaled independently of the other. Pulsar also supports multi-tenancy and geo-replication, and it can sustain throughput of over 1.8 million messages per second on a single partition.
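The sketch below is a toy, in-memory model of the idea behind these guarantees: a partition is an append-only log, and each subscription keeps its own cursor into it. It assumes a single partition and cumulative acknowledgment. `Partition`, `Subscription`, and `redeliver_unacked` are hypothetical names written for illustration. The method names echo Pulsar's consumer vocabulary, but this is not the Pulsar client API. Real Pulsar writes entries to replicated BookKeeper ledgers, not to a Python list.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Partition:
    """Hypothetical stand-in for one topic partition: an append-only log.

    Illustration only; Pulsar itself stores entries in replicated
    BookKeeper ledgers rather than an in-memory list.
    """
    log: List[bytes] = field(default_factory=list)

    def append(self, payload: bytes) -> int:
        # Each message gets the next offset, so append order is read order.
        self.log.append(payload)
        return len(self.log) - 1


@dataclass
class Subscription:
    """Hypothetical per-subscription cursor over a Partition."""
    partition: Partition
    ack_position: int = 0   # every offset below this is acknowledged
    read_position: int = 0  # next offset to hand to the consumer

    def receive(self) -> Optional[Tuple[int, bytes]]:
        # Return the next (offset, payload), or None if caught up.
        if self.read_position >= len(self.partition.log):
            return None
        offset = self.read_position
        self.read_position += 1
        return offset, self.partition.log[offset]

    def acknowledge(self, offset: int) -> None:
        # Cumulative ack (assumed here): covers every offset up to `offset`.
        self.ack_position = max(self.ack_position, offset + 1)

    def redeliver_unacked(self) -> None:
        # Simulate a consumer restart: rewind to the first unacknowledged entry.
        self.read_position = self.ack_position


if __name__ == "__main__":
    topic = Partition()
    for i in range(3):
        topic.append(f"msg-{i}".encode())

    sub = Subscription(topic)
    first = sub.receive()           # (0, b'msg-0')
    sub.acknowledge(first[0])
    sub.receive()                   # (1, b'msg-1'), never acknowledged
    sub.redeliver_unacked()
    print(sub.receive())            # (1, b'msg-1') is delivered again

    other = Subscription(topic)     # an independent subscription
    print(other.receive())          # (0, b'msg-0'): it starts from the beginning
```

Keeping the stored log separate from the per-subscription cursors lets several subscriptions read the same partition independently. It also means an unacknowledged message is still in the log and can be redelivered after a consumer restarts.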