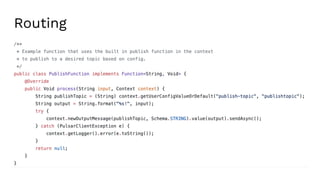

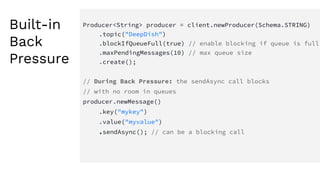

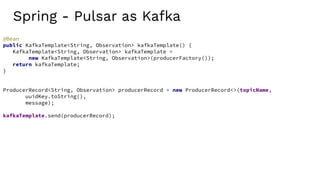

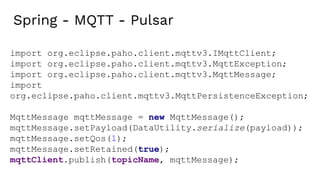

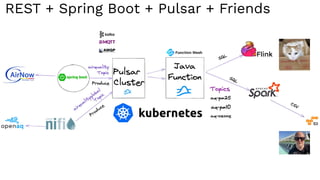

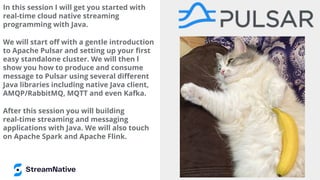

This deck, by Tim Spann, introduces Apache Pulsar development in Java. It outlines Pulsar's key features, architecture, and messaging models; covers setting up a Pulsar cluster and publishing and consuming messages over various protocols; and highlights Pulsar's advantages for real-time messaging and streaming. It also includes coding examples for building Pulsar applications and lists training resources for developers.

![Reader Interface](https://image.slidesharecdn.com/jconf-220927160019-03d8ee78/85/JConf-dev-2022-Apache-Pulsar-Development-101-with-Java-21-320.jpg)

Reader Interface: create a reader that will read from some message between earliest and latest.

```java
byte[] msgIdBytes = ...; // Some byte array
MessageId id = MessageId.fromByteArray(msgIdBytes);
Reader<byte[]> reader = pulsarClient.newReader()
        .topic(topic)
        .startMessageId(id)
        .create();
```
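To make "some message between earliest and latest" concrete, here is a minimal, stdlib-only sketch of a reader over an in-memory log. It is a toy model, not the Pulsar client: `ToyLogReader`, `readAfter`, `EARLIEST`, and the integer positions are hypothetical names standing in for a topic and its `MessageId` values. It assumes the start position is exclusive, so reading resumes with the message after the given one; the real client's behavior should be checked against its documentation.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model only: an in-memory "topic" where a message's position is its list index.
class ToyLogReader {
    // Hypothetical marker meaning "start before the first message".
    static final int EARLIEST = -1;

    private final List<String> log;
    private int next; // index of the next message to return

    // Positions the reader just after startPosition (exclusive start, an assumption).
    ToyLogReader(List<String> log, int startPosition) {
        this.log = log;
        this.next = startPosition + 1;
    }

    boolean hasMessageAvailable() {
        return next < log.size();
    }

    String readNext() {
        return log.get(next++);
    }

    // Convenience helper: drain every message after startPosition.
    static List<String> readAfter(List<String> log, int startPosition) {
        ToyLogReader reader = new ToyLogReader(log, startPosition);
        List<String> out = new ArrayList<>();
        while (reader.hasMessageAvailable()) {
            out.add(reader.readNext());
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> log = List.of("m0", "m1", "m2", "m3");
        System.out.println(readAfter(log, 1));        // [m2, m3]
        System.out.println(readAfter(log, EARLIEST)); // [m0, m1, m2, m3]
    }
}
```

Starting at the last position yields nothing until new messages arrive, which is the "latest" end of the range the slide describes.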

![Pulsar Simple Producer](https://image.slidesharecdn.com/jconf-220927160019-03d8ee78/85/JConf-dev-2022-Apache-Pulsar-Development-101-with-Java-45-320.jpg)

Pulsar Simple Producer

```java
String pulsarKey = UUID.randomUUID().toString();
String OS = System.getProperty("os.name").toLowerCase();
ProducerBuilder<byte[]> producerBuilder = client.newProducer().topic(topic)
        .producerName("demo");
Producer<byte[]> producer = producerBuilder.create();
MessageId msgID = producer.newMessage().key(pulsarKey).value("msg".getBytes())
        .property("device", OS).send();
producer.close();
client.close();
```
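The producer snippet sets three things on each message: a key, a `byte[]` payload, and a `device` property. The sketch below models just those parts with the standard library so the encoding step is visible. `ToyMessage` and its methods are hypothetical and are not Pulsar classes. One deliberate difference from the slide: the payload is encoded with an explicit UTF-8 charset, since `"msg".getBytes()` with no argument uses the JVM's default charset, which may differ between producer and consumer hosts on older JVMs.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.UUID;

// Toy stand-in for the message parts the slide sets: key, payload, properties.
// ToyMessage is a hypothetical type, not part of the Pulsar client.
class ToyMessage {
    final String key;
    final byte[] payload;
    final Map<String, String> properties;

    ToyMessage(String key, byte[] payload, Map<String, String> properties) {
        this.key = key;
        this.payload = payload;
        this.properties = properties;
    }

    // Builds a message the way the slide does: random UUID key, text payload, "device" property.
    static ToyMessage of(String text, String device) {
        String key = UUID.randomUUID().toString();
        byte[] payload = text.getBytes(StandardCharsets.UTF_8); // explicit charset
        return new ToyMessage(key, payload, Map.of("device", device));
    }

    // Decodes the payload with the same charset the sender used.
    String text() {
        return new String(payload, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String os = System.getProperty("os.name").toLowerCase();
        ToyMessage message = ToyMessage.of("msg", os);
        System.out.println(message.key + " -> " + message.text()
                + " (" + message.payload.length + " bytes), device=" + message.properties.get("device"));
    }
}
```

With a `byte[]` producer like the one on the slide, the consumer has to know the encoding out of band, so fixing the charset on both sides avoids surprises with non-ASCII text.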

![Setting Subscription Type (Java)](https://image.slidesharecdn.com/jconf-220927160019-03d8ee78/85/JConf-dev-2022-Apache-Pulsar-Development-101-with-Java-47-320.jpg)

Setting Subscription Type (Java)

```java
Consumer<byte[]> consumer = pulsarClient.newConsumer()
        .topic(topic)
        .subscriptionName("subscriptionName")
        .subscriptionType(SubscriptionType.Shared)
        .subscribe();
```
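As a rough illustration of what a Shared subscription means for consumers, the stdlib-only sketch below spreads messages round-robin over the consumers attached to one subscription, so each message goes to exactly one of them. It is a simplified toy, not the broker's dispatcher: `ToySharedDispatch` and `dispatch` are hypothetical names, and a real broker also accounts for consumer availability, acknowledgements, and redelivery, so the actual distribution need not be strictly round-robin.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model of Shared-subscription delivery: each message goes to exactly one consumer.
class ToySharedDispatch {
    // Assigns messages to consumers in round-robin order and returns what each one received.
    static Map<String, List<String>> dispatch(List<String> consumers, List<String> messages) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("at least one consumer is required");
        }
        Map<String, List<String>> received = new LinkedHashMap<>();
        for (String consumer : consumers) {
            received.put(consumer, new ArrayList<>());
        }
        for (int i = 0; i < messages.size(); i++) {
            String target = consumers.get(i % consumers.size());
            received.get(target).add(messages.get(i));
        }
        return received;
    }

    public static void main(String[] args) {
        List<String> messages = List.of("m0", "m1", "m2", "m3", "m4");
        // prints {c1=[m0, m2, m4], c2=[m1, m3]}
        System.out.println(dispatch(List.of("c1", "c2"), messages));
    }
}
```

Because messages are split across consumers, ordering is only preserved within what a single consumer happens to receive, which is the usual trade-off for scaling out consumption this way.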