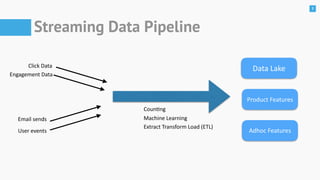

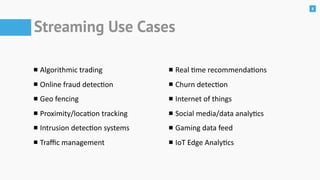

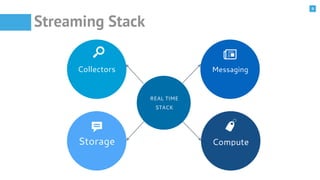

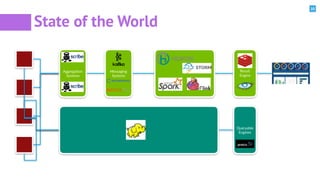

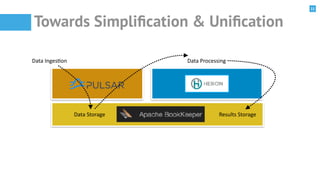

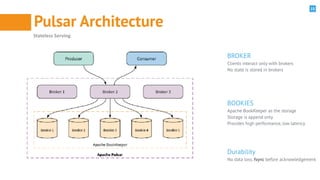

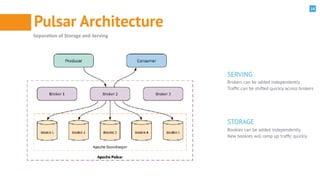

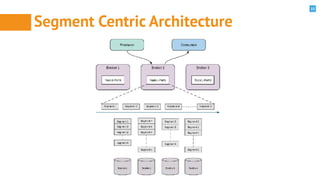

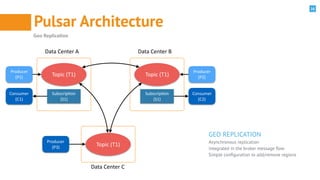

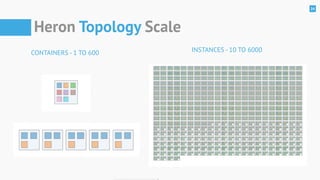

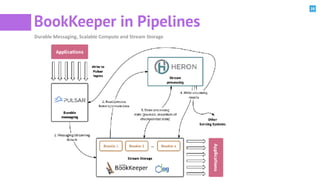

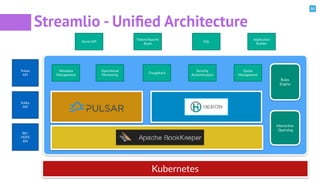

The document discusses the construction of modern data pipelines using Apache Pulsar, Heron, and BookKeeper, focusing on the transition from traditional batch processing to real-time streaming data analysis. It highlights the advantages of using these technologies, such as low latency and high throughput, and presents various use cases including algorithmic trading and online fraud detection. Additionally, it touches on the architecture and operational capabilities of Pulsar and Heron, emphasizing their efficiency and scalability for handling vast amounts of data.

![Slide 3: Increasingly Connected World](https://image.slidesharecdn.com/modern-data-pipelines-index-conference-180226174315/85/Modern-Data-Pipelines-3-320.jpg)

Increasingly Connected World

- Internet of Things: 30 B connected devices by 2020
- Health Care: 153 Exabytes (2013) -> 2314 Exabytes (2020)
- Machine Data: 40% of digital universe by 2020
- Connected Vehicles: data transferred per vehicle per month, 4 MB -> 5 GB
- Digital Assistants (Predictive Analytics): $2B (2012) -> $6.5B (2019) [1] (Siri/Cortana/Google Now)
- Augmented/Virtual Reality: $150B by 2020 [2] (Oculus/HoloLens/Magic Leap)