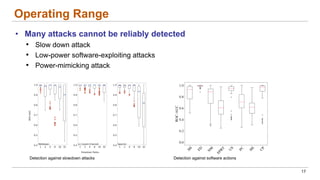

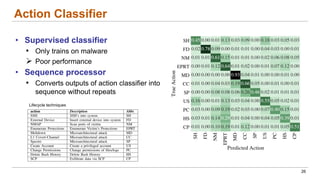

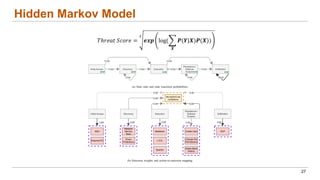

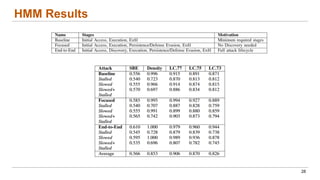

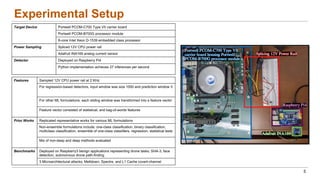

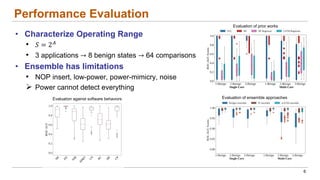

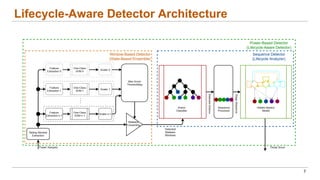

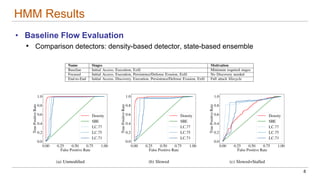

This presentation describes a lifecycle-aware power side-channel malware detection system, designed to address noisy power signals and the limitations of earlier detection methods. It proposes a one-class classification (OCC) pipeline that adapts to the different benign operating states of an embedded system, and it evaluates how well machine learning approaches perform in that setting. Future work includes improving cloud security and developing a multidomain detector that leverages lifecycle awareness.

![Side-Channel

• Implementation-based medium that leaks information

• Not a weakness of the algorithm

• "Flaw" of hardware implementation

• Electromagnetic, power, timing, etc.

• Broad and impactful information

Can be used for attack and defense

• Well-fitted for embedded systems

• Deploy on smart battery

• Orthogonal to other defenses

No HW/SW overhead for target device

Power-based detector flow [Wei et al. '19]](https://image.slidesharecdn.com/cathisalexandermitreattackcon-241113152156-6931ce46/85/Lifecycle-Aware-Power-Side-Channel-Malware-Detection-Alexander-Cathis-2-320.jpg)

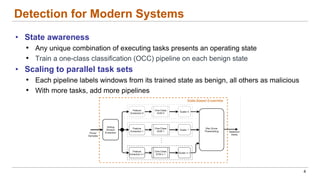

![Key Challenges

• Power-based challenges

• Power signal is noisy

• Large characterization scope increases misclassification

• Attacker can produce power-mimicking malware [Wei et al. '19]

• Prior work limitations

• Evaluation on parallel task sets

• Inappropriate utilization of ML tools

• Lack of rigorous public datasets

➢ Did not evaluate for modern complex systems](https://image.slidesharecdn.com/cathisalexandermitreattackcon-241113152156-6931ce46/85/Lifecycle-Aware-Power-Side-Channel-Malware-Detection-Alexander-Cathis-3-320.jpg)

![Side-Channel

• Implementation-based medium that leaks information

• Not a weakness of the algorithm

• "Flaw" of hardware implementation

• Electromagnetic, power, timing, etc.

Broad attack vector

• Broad and impactful attack vector

• Meltdown, Spectre, SPA, DPA, etc.

Real-world threat

SPA (simple power analysis) attack exploiting a power trace during modular exponentiation [Fujino et al. '17]](https://image.slidesharecdn.com/cathisalexandermitreattackcon-241113152156-6931ce46/85/Lifecycle-Aware-Power-Side-Channel-Malware-Detection-Alexander-Cathis-13-320.jpg)
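
The SPA caption on this slide refers to a well-known leak in textbook square-and-multiply exponentiation: a multiply is executed only for 1 bits of the exponent, so the sequence of operations mirrors the key. The sketch below is a minimal illustration of that idea, not the setup from [Fujino et al. '17]. The `ops` list is an idealized stand-in for what an attacker would read off a power trace, and the function names and sample values are hypothetical.

```python
def square_and_multiply(base, exponent, modulus):
    """Left-to-right binary modular exponentiation that logs its operations."""
    result = 1
    ops = []  # idealized "power trace": 'S' = square, 'M' = multiply
    for bit in bin(exponent)[2:]:
        result = (result * result) % modulus
        ops.append("S")
        if bit == "1":
            # The multiply only happens for 1 bits; this is the leak.
            result = (result * base) % modulus
            ops.append("M")
    return result, ops


def recover_exponent(ops):
    """Rebuild the exponent from the S/M sequence alone."""
    bits = []
    i = 0
    while i < len(ops):
        # Every bit starts with a square; a following multiply marks a 1 bit.
        if i + 1 < len(ops) and ops[i + 1] == "M":
            bits.append("1")
            i += 2
        else:
            bits.append("0")
            i += 1
    return int("".join(bits), 2)


secret = 0b101101  # hypothetical private exponent
value, trace = square_and_multiply(7, secret, 1009)
print("".join(trace))
print(bin(recover_exponent(trace)))
```

Real traces are noisy and need signal processing before the S and M patterns can be separated, which is where defenses such as blinding and randomized execution come in.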

![Power Side-Channel

• Used to steal encryption keys

• Attacks: SPA, DPA

• Defenses: Constant power, blinding, randomized execution

Arms race

• Defense vector

• Utilize leaked information for defense

• Characterize standard operation with ML

• Raise alarm when deviations occur

Out-of-band detection mechanism

Power traces of benign and malicious workloads [Wei et al. '19]](https://image.slidesharecdn.com/cathisalexandermitreattackcon-241113152156-6931ce46/85/Lifecycle-Aware-Power-Side-Channel-Malware-Detection-Alexander-Cathis-14-320.jpg)
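
The defensive use described on this slide (characterize standard operation, then raise an alarm on deviations) can be sketched with a very small one-class model. This is an illustrative assumption, not the detector from the talk: the features (per-window mean and standard deviation), the centroid-distance score, the `margin` parameter, and the synthetic traces are all hypothetical stand-ins for a real ML pipeline and real measurements.

```python
import math
import statistics


def window_features(trace, size):
    """Cut a trace into non-overlapping windows; return (mean, std) per window."""
    feats = []
    for start in range(0, len(trace) - size + 1, size):
        window = trace[start:start + size]
        feats.append((statistics.fmean(window), statistics.pstdev(window)))
    return feats


class CentroidDetector:
    """Toy one-class model (an assumption, not the model from the slides)."""

    def fit(self, benign_feats, margin=1.5):
        # Centroid of the benign feature vectors.
        self.centroid = tuple(statistics.fmean(col) for col in zip(*benign_feats))
        # Threshold: largest benign distance, widened by a safety margin.
        self.threshold = margin * max(self.score(f) for f in benign_feats)
        return self

    def score(self, feat):
        return math.dist(feat, self.centroid)

    def is_anomalous(self, feat):
        return self.score(feat) > self.threshold


# Synthetic benign trace: a periodic load plus small deterministic jitter.
benign = [1.0 + 0.2 * math.sin(2 * math.pi * i / 8) + 0.01 * ((2 * i) % 5 - 2)
          for i in range(400)]
benign_feats = window_features(benign, 8)
detector = CentroidDetector().fit(benign_feats)

# Hypothetical malicious window: the same load with extra power draw.
attack_feat = window_features([x + 0.5 for x in benign[:8]], 8)[0]
print(detector.is_anomalous(attack_feat))
```

Only benign windows are used in `fit`, which matches the out-of-band, anomaly-style framing of the slide: no malware samples are needed to set the threshold.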

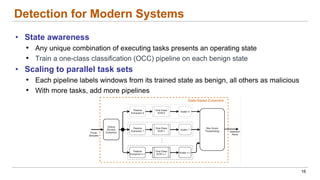

![Detection for Embedded Systems

• Out-of-band deployment

• Deploy on smart battery

• Orthogonal to other defenses

No HW/SW overhead for target device

Power-based detector flow [Wei et al. '19]

• Machine Learning

• Non-deep models have proven results

• Learns periodic behavior of embedded tasks

• Does not train on malware

Well-fitted for embedded systems](https://image.slidesharecdn.com/cathisalexandermitreattackcon-241113152156-6931ce46/85/Lifecycle-Aware-Power-Side-Channel-Malware-Detection-Alexander-Cathis-15-320.jpg)
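
One way to picture "learns periodic behavior of embedded tasks" is to estimate a task's period from the power trace itself and then watch how strongly later traces repeat at that period. The sketch below uses plain autocorrelation for this. It is an assumed illustration rather than the feature set used in the talk, and the trace values, the 20-sample period, and the function names are hypothetical.

```python
def autocorrelation(trace, lag):
    """Normalized autocorrelation of a trace at a given lag (in samples)."""
    mean = sum(trace) / len(trace)
    centered = [x - mean for x in trace]
    num = sum(centered[i] * centered[i + lag] for i in range(len(trace) - lag))
    den = sum(c * c for c in centered)
    return num / den


def dominant_period(trace, max_lag):
    """Lag with the highest autocorrelation, ignoring lag 0."""
    return max(range(1, max_lag + 1), key=lambda lag: autocorrelation(trace, lag))


# Synthetic periodic task: 5 active samples (2.0) then 15 idle samples (1.0).
period_pattern = [2.0] * 5 + [1.0] * 15
trace = period_pattern * 10

learned = dominant_period(trace, 30)
print(learned)

# Hypothetical extra activity injected into one idle phase.
tampered = list(trace)
for i in range(50, 60):
    tampered[i] = 2.0

# The tampered trace repeats less cleanly at the learned period.
print(autocorrelation(trace, learned) > autocorrelation(tampered, learned))
```

A drop in periodicity like this is only one possible feature. A practical detector would combine several features and feed them to a trained model rather than compare two numbers directly.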