Lecture 01

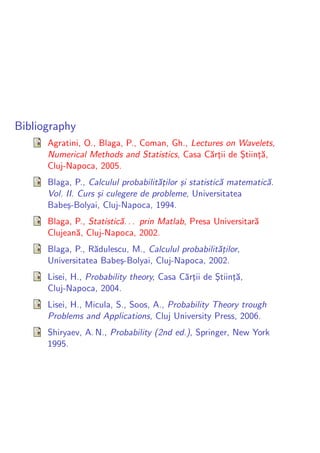

- 2. Probability space. An experiment $E$ produces outcomes (the results of the experiment); the set $\Omega$ of all outcomes is called the sample space.
  Definition. A non-empty family $K \subset \mathcal{P}(\Omega)$ is a σ-algebra (σ-field) if it satisfies the following conditions:
  (1) if $A \in K$, then $\bar{A} \in K$ (contrary event);
  (2) if $A_i \in K$, $i \in I$, with $I$ at most countable, then $\bigcup_{i \in I} A_i \in K$.
  The pair $(\Omega, K)$ is called the space of events (measurable space of events).

- 3. Definition. Let $(\Omega, K)$ be a measurable space of events. The set function $P : K \to \mathbb{R}$ is called a probability if it satisfies the conditions:
  (1) $P(A) \ge 0$ for all $A \in K$;
  (2) $P(\Omega) = 1$, where $\Omega$ is the certain event;
  (3) $P$ is σ-additive, i.e. for any at most countable family of pairwise disjoint (mutually exclusive) events $A_i \in K$, $i \in I$, with $A_i \cap A_j = \emptyset$ (the impossible event) for $i \ne j$, we have
  $$P\left(\bigcup_{i \in I} A_i\right) = \sum_{i \in I} P(A_i).$$
  The triple $(\Omega, K, P)$ is called a probability space.

- 4. Proposition.
  (1) $P(\emptyset) = 0$,
  (2) $P(\bar{A}) = 1 - P(A)$,
  (3) $P(B \setminus A) = P(B) - P(A \cap B)$,
  (4) $P(B \setminus A) = P(B) - P(A)$, if $A \subset B$,
  (5) $P(A) \le P(B)$, if $A \subset B$,
  (6) $P(A \cup B) = P(A) + P(B) - P(A \cap B)$,
  (7) $P\left(\bigcup_{i=1}^{n} A_i\right) \le \sum_{i=1}^{n} P(A_i)$.

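- 5. Property (Poincaré).
  $$P\left(\bigcup_{i=1}^{n} A_i\right) = \sum_{i=1}^{n} P(A_i) - \sum_{\substack{i,j=1 \\ i<j}}^{n} P(A_i \cap A_j) + \sum_{\substack{i,j,k=1 \\ i<j<k}}^{n} P(A_i \cap A_j \cap A_k) - \dots + (-1)^{n-1} P\left(\bigcap_{i=1}^{n} A_i\right).$$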
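The Poincaré formula can be checked on a small finite example. The sketch below assumes a sample space of equally likely outcomes (the integers 1 to 30) and three illustrative events (multiples of 2, 3 and 5); the helper names `prob` and `poincare` are hypothetical, not part of the lecture.

```python
from fractions import Fraction
from itertools import combinations


def prob(event, omega):
    """Classical probability on a finite sample space with equally likely outcomes."""
    return Fraction(len(event), len(omega))


def poincare(events, omega):
    """Right-hand side of the Poincaré (inclusion-exclusion) formula."""
    total = Fraction(0)
    for k in range(1, len(events) + 1):
        sign = (-1) ** (k - 1)  # +, -, +, ... for intersections of 1, 2, 3, ... events
        for group in combinations(events, k):
            total += sign * prob(set.intersection(*group), omega)
    return total


# Assumed example: outcomes 1..30, events "multiple of 2", "of 3", "of 5".
omega = set(range(1, 31))
events = [{w for w in omega if w % d == 0} for d in (2, 3, 5)]

lhs = prob(set.union(*events), omega)  # P(A1 ∪ A2 ∪ A3) computed directly
rhs = poincare(events, omega)          # the alternating sum
print(lhs, rhs)
```

Both sides are computed with exact fractions, so they can be compared with `==` rather than with a tolerance.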

- 6. Conditional Probability. Definition. Let $(\Omega, K, P)$ be a probability space and $B \in K$ an event with $P(B) > 0$. The conditional probability of the event $A$ with respect to the event $B$ is the ratio
  $$P_B(A) = P(A \mid B) = \frac{P(A \cap B)}{P(B)}.$$

- 7. Proposition. Let $(\Omega, K, P)$ be a probability space. Then:
  (1) for $B \in K$ with $P(B) > 0$, the set function $P_B : K \to \mathbb{R}$ defined by
  $$P_B(A) = P(A \mid B) = \frac{P(A \cap B)}{P(B)}, \quad \forall A \in K,$$
  is a probability, and the triple $(\Omega, K, P_B)$ is a probability space;
  (2) for all $A, B \in K$ satisfying $P(A) P(B) > 0$, we have
  $$P(A \cap B) = P(A) P(B \mid A) = P(B) P(A \mid B);$$
  (3) for all $A \in K$ satisfying $0 < P(A) < 1$, we have
  $$P(B) = P(A) P(B \mid A) + P(\bar{A}) P(B \mid \bar{A}), \quad \forall B \in K.$$

- 8. Property (Multiplication Formula for Probabilities). Let $A_i \in K$, $i = 1, \dots, n$, be events satisfying $P\left(\bigcap_{i=1}^{n} A_i\right) > 0$. Then
  $$P\left(\bigcap_{i=1}^{n} A_i\right) = P(A_1) P(A_2 \mid A_1) P(A_3 \mid A_1 \cap A_2) \dots P\left(A_n \,\Big|\, \bigcap_{i=1}^{n-1} A_i\right).$$

- 9. Property (Formula for Total Probability). Let $A \in K$ be an event and $(A_i)_{i \in I}$, $A_i \in K$, a complete set of disjoint events. Then
  $$P(A) = \sum_{i \in I} P(A_i) P(A \mid A_i).$$
  Property (Bayes's Formula). Let $(A_i)_{i \in I}$, $A_i \in K$, be a complete set of disjoint events, and let $A \in K$ be an event with $P(A) > 0$. Then
  $$P(A_i \mid A) = \frac{P(A_i) P(A \mid A_i)}{\sum_{i \in I} P(A_i) P(A \mid A_i)}, \quad \text{for all } i \in I.$$
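The two formulas can be illustrated numerically. The sketch below assumes a made-up partition of three events $A_1, A_2, A_3$ with priors 1/2, 3/10, 1/5 and conditional probabilities $P(A \mid A_i)$ of 1/100, 2/100, 3/100; the numbers and the function names are illustrative assumptions only.

```python
from fractions import Fraction


def total_probability(priors, likelihoods):
    """P(A) = sum_i P(A_i) P(A | A_i) over a complete set of disjoint events."""
    return sum(p * l for p, l in zip(priors, likelihoods))


def bayes(priors, likelihoods):
    """Posterior probabilities P(A_i | A) for every i, by Bayes's formula."""
    p_a = total_probability(priors, likelihoods)
    return [p * l / p_a for p, l in zip(priors, likelihoods)]


# Hypothetical data: P(A_i) and P(A | A_i) for i = 1, 2, 3.
priors = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
likelihoods = [Fraction(1, 100), Fraction(2, 100), Fraction(3, 100)]

p_a = total_probability(priors, likelihoods)
posteriors = bayes(priors, likelihoods)
print(p_a, posteriors)
```

The denominator in Bayes's formula is exactly the total probability $P(A)$, which is why `bayes` reuses `total_probability`; the posteriors sum to 1.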

- 10. Independence. Definition. The events $A, B \in K$ are called independent if
  $$P(A \cap B) = P(A) P(B).$$
  Property. Let $(\Omega, K, P)$ be a probability space and $A, B \in K$ two events.
  (1) If $P(A) = 0$ or $P(A) = 1$, then the events $A$ and $B$ are independent.
  (2) If the events $A$ and $B$ are independent, then $P(A \mid B) = P(A)$ and $P(B \mid A) = P(B)$, whenever these conditional probabilities are defined.
  (3) If the events $A$ and $B$ are independent, then the pairs of events $(A, \bar{B})$, $(\bar{A}, B)$ and $(\bar{A}, \bar{B})$ are independent.

- 11. Definition. The events $(A_i)_{i = 1, \dots, n}$ are called independent if, for all $1 \le i_1 < i_2 < \dots < i_k \le n$ and $k = 2, \dots, n$, the following relations are satisfied:
  $$P(A_{i_1} \cap A_{i_2} \cap \dots \cap A_{i_k}) = P(A_{i_1}) P(A_{i_2}) \dots P(A_{i_k}).$$
  Definition. Let $(\Omega, K, P)$ be a probability space. The events of the family $(A_i)_{i \in I}$, $A_i \in K$, are called independent if
  $$P\left(\bigcap_{j \in J} A_j\right) = \prod_{j \in J} P(A_j)$$
  for all finite subsets of indices $J \subset I$.
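The definition asks for the product rule on every sub-collection, not only on pairs. A standard illustration, sketched here under the assumption of two fair coin tosses, uses the events A = "first toss is heads", B = "second toss is heads", C = "the two tosses differ"; the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product

# Sample space of two fair coin tosses, all four outcomes equally likely.
omega = list(product("HT", repeat=2))

events = {
    "A": lambda w: w[0] == "H",   # first toss is heads
    "B": lambda w: w[1] == "H",   # second toss is heads
    "C": lambda w: w[0] != w[1],  # the two tosses differ
}


def prob(predicate):
    """Probability of the event described by a predicate on outcomes."""
    return Fraction(sum(1 for w in omega if predicate(w)), len(omega))


def product_rule_holds(names):
    """Check P(intersection of the named events) == product of their probabilities."""
    joint = prob(lambda w: all(events[n](w) for n in names))
    expected = Fraction(1)
    for n in names:
        expected *= prob(events[n])
    return joint == expected


pairwise = all(product_rule_holds(pair) for pair in combinations(events, 2))
triple = product_rule_holds(("A", "B", "C"))
mutually_independent = pairwise and triple
print(pairwise, triple, mutually_independent)
```

Every pair satisfies the product rule, but the triple does not, since $A \cap B \cap C$ is empty while the product of the probabilities is 1/8. So the three events are pairwise independent without being independent in the sense of the definition.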

- 12. Random Variables. Let $(\Omega, K, P)$ be a probability space.
  Definition. A real function $X : \Omega \to \mathbb{R}$ is called a random variable if
  $$X^{-1}((-\infty, x)) = (X < x) = \{\omega \in \Omega \mid X(\omega) < x\} \in K, \quad \text{for all } x \in \mathbb{R}.$$
  Remarks. If $|X(\Omega)|$ denotes the cardinality of the range of the random variable $X$, then $X$ is called:
  a discrete random variable, if $|X(\Omega)| \le \aleph_0$, i.e. the range is a countable (denumerable) set;
  a simple random variable, if $|X(\Omega)| < \aleph_0$, i.e. the range is a finite set;
  a continuous random variable, if $|X(\Omega)| = \aleph$ (the cardinality of the continuum), i.e. the range is a non-denumerable (uncountable) set.

- 13. Random Vector. Definition. A real-valued vector function $X = (X_1, \dots, X_n) : \Omega \to \mathbb{R}^n$ is a random vector ($n$-dimensional random vector) if
  $$(X < x) = (X_1 < x_1, \dots, X_n < x_n) = \{\omega \in \Omega \mid X_1(\omega) < x_1, \dots, X_n(\omega) < x_n\} \in K, \quad \text{for all } x \in \mathbb{R}^n.$$

- 14. Distribution Function. Let us consider the probability space $(\Omega, K, P)$ and a random variable $X : \Omega \to \mathbb{R}$.
  Definition. The real function $F : \mathbb{R} \to \mathbb{R}$ defined by
  $$F(x) = P(X < x), \quad \forall x \in \mathbb{R}, \qquad (1)$$
  is called the (cumulative) distribution function of $X$.
  Remark. Some books use this definition of the distribution function; others (and Matlab) use the definition
  $$F(x) = P(X \le x), \quad \forall x \in \mathbb{R}.$$

- 15. Proposition. Let $X$ be a random variable with the corresponding distribution function $F$. Then we have:
  (1) $0 \le F(x) \le 1$, for all $x \in \mathbb{R}$;
  (2) for all $a, b \in \mathbb{R}$, $a < b$,
  $$P(a \le X < b) = F(b) - F(a),$$
  $$P(a < X < b) = F(b) - F(a) - P(X = a),$$
  $$P(a < X \le b) = F(b) - F(a) - P(X = a) + P(X = b),$$
  $$P(a \le X \le b) = F(b) - F(a) + P(X = b);$$
  (3) for all $x_1, x_2 \in \mathbb{R}$, $x_1 < x_2$, $F(x_1) \le F(x_2)$ ($F$ is a nondecreasing function);
  (4) $\lim_{x \to -\infty} F(x) = F(-\infty) = 0$;
  (5) $\lim_{x \to +\infty} F(x) = F(+\infty) = 1$;
  (6) for all $x \in \mathbb{R}$, $\lim_{y \nearrow x} F(y) = F(x - 0) = F(x)$ ($F$ is left-continuous).
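The interval formulas in (2) depend on the convention $F(x) = P(X < x)$. The sketch below checks them on an assumed example, a fair die with values 1 to 6, and also evaluates the alternative convention $P(X \le x)$ mentioned earlier; the function names are illustrative.

```python
from fractions import Fraction

# Assumed example: a fair die, X takes the values 1..6 with probability 1/6 each.
pmf = {k: Fraction(1, 6) for k in range(1, 7)}


def point_prob(x):
    """P(X = x)."""
    return pmf.get(x, Fraction(0))


def F(x):
    """Distribution function with the lecture's convention F(x) = P(X < x)."""
    return sum((p for v, p in pmf.items() if v < x), Fraction(0))


def F_right(x):
    """Alternative convention P(X <= x)."""
    return sum((p for v, p in pmf.items() if v <= x), Fraction(0))


a, b = 2, 5
p_half_open = F(b) - F(a)                                    # P(a <= X < b)
p_open = F(b) - F(a) - point_prob(a)                         # P(a < X < b)
p_left_open = F(b) - F(a) - point_prob(a) + point_prob(b)    # P(a < X <= b)
p_closed = F(b) - F(a) + point_prob(b)                       # P(a <= X <= b)
print(p_half_open, p_open, p_left_open, p_closed, F(5), F_right(5))
```

At a jump point the two conventions differ by exactly $P(X = x)$, which is the correction term appearing in the formulas above.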

- 16. Remark. If $F$ is piecewise constant, then the points of discontinuity $x_i$, $i \in I$, are the values of a discrete random variable $X$, and the corresponding jump sizes are given by the probability distribution of $X$,
  $$p_i = P(X = x_i), \quad i \in I.$$

- 17. Definition. Let $X = (X_1, \dots, X_n)$ be a random vector. The real function $F : \mathbb{R}^n \to \mathbb{R}$ defined by
  $$F(x) = F(x_1, \dots, x_n) = P(X_1 < x_1, \dots, X_n < x_n), \quad \forall x \in \mathbb{R}^n,$$
  is called the (cumulative) distribution function of the random vector $X$.
  Definition. Let $X = (X_1, \dots, X_n)$ be a random vector. All distribution functions of the random vectors $(X_{i_1}, \dots, X_{i_k})$, $1 \le i_1 < \dots < i_k \le n$, $k = 1, \dots, n - 1$, are called marginal distribution functions of the random vector $X$.
  Property. If $F$ is the distribution function of the random vector $X$, then the distribution function of the random vector $(X_{i_1}, \dots, X_{i_k})$ is given by
  $$F_{i_1, \dots, i_k}(x_{i_1}, \dots, x_{i_k}) = \lim_{\substack{x_j \to \infty \\ j \ne i_1, \dots, i_k}} F(x_1, \dots, x_n).$$

- 18. Probability Density Function. Definition. A random variable $X$ is called (absolutely) continuous if the corresponding distribution function $F$ is absolutely continuous, i.e. there exists a function $f : \mathbb{R} \to \mathbb{R}$ such that
  $$F(x) = \int_{-\infty}^{x} f(t)\,dt, \quad \text{for all } x \in \mathbb{R}.$$
  The function $f$ is called the (probability) density function.
  Proposition. If the random variable $X$ is continuous, with distribution function $F$ and density function $f$, then:
  (1) $f(x) \ge 0$, for all $x \in \mathbb{R}$;
  (2) $F'(x) = f(x)$, a.e. (almost everywhere) on $\mathbb{R}$;
  (3) for $a < b$, $P(a \le X < b) = \int_{a}^{b} f(x)\,dx$;
  (4) $\int_{-\infty}^{+\infty} f(x)\,dx = 1$.

- 19. Remarks. Let $X$ be a continuous random variable. Then $P(X = a) = 0$ for each $a \in \mathbb{R}$. It follows, in this case, that
  $$P(a \le X < b) = P(a < X \le b) = P(a < X < b) = P(a \le X \le b) = \int_{a}^{b} f(x)\,dx.$$
  If the continuous random variable $X$ has the distribution function $F$ and the density function $f$, then we have successively
  $$f(x) = F'(x) = \lim_{\Delta x \to 0} \frac{F(x + \Delta x) - F(x)}{\Delta x} = \lim_{\Delta x \to 0} \frac{P(x \le X < x + \Delta x)}{\Delta x}.$$
  Therefore, for small values of $\Delta x$, we have
  $$P(x \le X < x + \Delta x) \approx f(x)\,\Delta x.$$

- 20. Definition. A random vector $X = (X_1, \dots, X_n)$ is called (absolutely) continuous if the corresponding distribution function $F$ is absolutely continuous, i.e. there exists a function $f : \mathbb{R}^n \to \mathbb{R}$, called the (probability) density function, such that
  $$F(x) = F(x_1, \dots, x_n) = \int_{-\infty}^{x_1} \dots \int_{-\infty}^{x_n} f(t_1, \dots, t_n)\,dt_1 \dots dt_n,$$
  for all $x = (x_1, \dots, x_n) \in \mathbb{R}^n$.
  Proposition. Let $X = (X_1, \dots, X_n)$ be a continuous random vector with distribution function $F$ and density function $f$. Then:
  (1) $f(x) \ge 0$, for all $x \in \mathbb{R}^n$;
  (2) $\dfrac{\partial^n F(x_1, \dots, x_n)}{\partial x_1 \dots \partial x_n} = f(x_1, \dots, x_n)$, a.e. (almost everywhere) on $\mathbb{R}^n$;
  (3) if $D \subset \mathbb{R}^n$, we have $P(X \in D) = \int_{D} f(x)\,dx$.

- 21. Definition. Let $X = (X_1, \dots, X_n)$ be a continuous random vector. All density functions of the random vectors $(X_{i_1}, \dots, X_{i_k})$, $1 \le i_1 < \dots < i_k \le n$, $k = 1, \dots, n - 1$, are called marginal density functions of $X$.
  Property. Let $f$ be the density function of the continuous random vector $X$. Then the density function of the random vector $(X_{i_1}, \dots, X_{i_k})$ is given by
  $$f_{i_1, \dots, i_k}(x_{i_1}, \dots, x_{i_k}) = \int \dots \int_{\mathbb{R}^{n-k}} f(x_1, \dots, x_n)\,dx_{j_1} \dots dx_{j_{n-k}},$$
  where $\{j_1, \dots, j_{n-k}\} = \{1, \dots, n\} \setminus \{i_1, \dots, i_k\}$.
  Remark. The density function of $X_i$ is given by
  $$f_i(x_i) = \int \dots \int_{\mathbb{R}^{n-1}} f(x_1, \dots, x_n)\,dx_1 \dots dx_{i-1}\,dx_{i+1} \dots dx_n, \quad x_i \in \mathbb{R}.$$

- 22. Independence. Definition. Let $X = (X_1, \dots, X_n)$ be a random vector with distribution function $F$. The random variables $X_1, \dots, X_n$ are independent if
  $$F(x) = F(x_1, \dots, x_n) = F_{X_1}(x_1) \dots F_{X_n}(x_n),$$
  for all $x = (x_1, \dots, x_n) \in \mathbb{R}^n$, where $F_{X_i}$ is the distribution function of the random variable $X_i$.

- 23. Remarks. If $X = (X_1, \dots, X_n)$ is a discrete random vector, then the random variables $X_1, \dots, X_n$ are independent if and only if
  $$P(X_1 = x_1, \dots, X_n = x_n) = P(X_1 = x_1) \dots P(X_n = x_n),$$
  for all $x_i \in X_i(\Omega)$, $i = 1, \dots, n$.
  If $X = (X_1, \dots, X_n)$ is a continuous random vector, then the random variables $X_1, \dots, X_n$ are independent if and only if the density function of the random vector $X$ satisfies the relation
  $$f(x) = f(x_1, \dots, x_n) = f_{X_1}(x_1) \dots f_{X_n}(x_n),$$
  for all $x = (x_1, \dots, x_n) \in \mathbb{R}^n$, where $f_{X_i}$ is the density function of the random variable $X_i$.

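- 24. Proposition. If the continuous random vector $(X, Y)$ has the density function $f$, then the density functions of the sum, product and ratio of the two random variables are, respectively:
  $$f_{X+Y}(x) = \int_{-\infty}^{+\infty} f(u, x - u)\,du, \quad x \in \mathbb{R},$$
  $$f_{XY}(x) = \int_{-\infty}^{+\infty} f\left(u, \frac{x}{u}\right) \frac{du}{|u|}, \quad x \in \mathbb{R},$$
  $$f_{X/Y}(x) = \int_{-\infty}^{+\infty} f(xu, u)\,|u|\,du, \quad x \in \mathbb{R}.$$
  If the random variables $X$ and $Y$ are independent, then
  $$f_{X+Y}(x) = \int_{-\infty}^{+\infty} f_X(u) f_Y(x - u)\,du, \quad x \in \mathbb{R},$$
  $$f_{XY}(x) = \int_{-\infty}^{+\infty} f_X(u) f_Y\left(\frac{x}{u}\right) \frac{du}{|u|}, \quad x \in \mathbb{R},$$
  $$f_{X/Y}(x) = \int_{-\infty}^{+\infty} f_X(xu) f_Y(u)\,|u|\,du, \quad x \in \mathbb{R}.$$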
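As a rough numerical check of the sum formula in the independent case, the sketch below assumes $X$ and $Y$ are independent and uniform on $[0, 1]$ and approximates the convolution integral with a midpoint rule. The function names and the choice of 10,000 subintervals are illustrative assumptions; the result should match the known triangular density of $X + Y$ on $[0, 2]$.

```python
def uniform_pdf(x, a=0.0, b=1.0):
    """Density of the uniform distribution on [a, b]."""
    return 1.0 / (b - a) if a <= x <= b else 0.0


def sum_density(f_x, f_y, x, lo, hi, steps=10_000):
    """Midpoint-rule approximation of the integral of f_x(u) * f_y(x - u) over [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        u = lo + (i + 0.5) * h  # midpoint of the i-th subinterval
        total += f_x(u) * f_y(x - u)
    return h * total


# f_X vanishes outside [0, 1], so integrating u over [0, 1] is enough here.
d_half = sum_density(uniform_pdf, uniform_pdf, 0.5, 0.0, 1.0)
d_one = sum_density(uniform_pdf, uniform_pdf, 1.0, 0.0, 1.0)
d_three_halves = sum_density(uniform_pdf, uniform_pdf, 1.5, 0.0, 1.0)
print(d_half, d_one, d_three_halves)
```

The three values approximate the triangular density at 0.5, 1 and 1.5, that is 0.5, 1 and 0.5.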

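- 25. Discrete Uniform Distribution. The random variable $X$ has the discrete uniform distribution when its distribution is
  $$X \begin{pmatrix} x \\ \frac{1}{N} \end{pmatrix}_{x = 1, \dots, N}, \qquad f(x) = f(x; N) = \frac{1}{N}, \quad x = 1, \dots, N,$$
  where $N \in \mathbb{N}$. The distribution function is
  $$F(x) = F(x; N) = \begin{cases} 0, & \text{if } x \le 1, \\ \dfrac{k}{N}, & \text{if } k < x \le k + 1,\ k = 1, \dots, N - 1, \\ 1, & \text{if } x > N. \end{cases}$$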

- 26. [Figure: the distribution of the random variable $X$, $f(x) = 1/N$ for $x = 1, \dots, N$, and the graph of the distribution function $F$, a step function taking the values $1/N, 2/N, \dots, (N-1)/N, 1$.]

- 27. Binomial Distribution. The random variable $X$ has the binomial distribution, denoted $B(n, p)$, when its distribution is
  $$X \begin{pmatrix} x \\ f(x) \end{pmatrix}_{x = 0, \dots, n}, \qquad f(x) = f(x; n, p) = \binom{n}{x} p^x q^{n-x}, \quad x = 0, \dots, n,$$
  with $p \in (0, 1)$ and $q = 1 - p$. The random variable $X$ represents the number of successes in $n$ independent trials of an experiment.
  The binomial distribution was discovered by James Bernoulli and presented in his book Ars Conjectandi (1713). Pascal considered the particular case $p = \frac{1}{2}$.
  The distribution function is given by
  $$F(x) = F(x; n, p) = \begin{cases} 0, & \text{if } x \le 0, \\ \sum_{i=0}^{k-1} \binom{n}{i} p^i q^{n-i}, & \text{if } k - 1 < x \le k,\ k = 1, \dots, n, \\ 1, & \text{if } x > n. \end{cases}$$
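A minimal sketch of the two binomial formulas, using only the Python standard library. The function names are illustrative, and the distribution function follows the lecture's convention $F(x) = P(X < x)$, so it differs at integer points from tools that use $P(X \le x)$.

```python
import math


def binomial_pmf(x, n, p):
    """f(x; n, p) = C(n, x) p^x q^(n - x), for x = 0, ..., n."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)


def binomial_cdf_strict(x, n, p):
    """F(x; n, p) = P(X < x): sum of the probabilities of all values strictly below x."""
    return sum(binomial_pmf(i, n, p) for i in range(n + 1) if i < x)


# Example with the parameters B(5, 0.4).
n, p = 5, 0.4
pmf_at_2 = binomial_pmf(2, n, p)          # C(5, 2) * 0.4^2 * 0.6^3
cdf_at_2 = binomial_cdf_strict(2, n, p)   # f(0) + f(1)
print(pmf_at_2, cdf_at_2)
```

For $k - 1 < x \le k$ the filter `i < x` keeps exactly the terms $i = 0, \dots, k - 1$, which matches the middle branch of the formula.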

- 28. In the figure, the vertical bars represent the probabilities of the binomial distribution $B(5, 0.4)$; the corresponding distribution function is shown alongside.
  [Figure: $f(x) = \binom{n}{x} p^x q^{n-x}$ for $x = 0, \dots, n$, and $F(x)$, both plotted for $x$ from 0 to 5.]

- 29. Poisson Distribution. The random variable $X$ has the Poisson distribution, denoted $Po(\lambda)$, when its distribution is given by
  $$X \begin{pmatrix} x \\ f(x) \end{pmatrix}_{x = 0, 1, 2, \dots}, \quad \text{where } f(x) = f(x; \lambda) = \frac{\lambda^x}{x!} e^{-\lambda} \text{ and } \lambda > 0.$$
  A random variable $X$ with the Poisson distribution gives the number of occurrences of a fixed event in a time interval, over a distance, on an area, and so on.
  Poisson (1837) proved that this distribution is a limit case of the binomial distribution. Namely, when $p = p(n)$ and $np \to \lambda$ as $n \to \infty$, one obtains
  $$f(x; n, p) = \binom{n}{x} p^x q^{n-x} \longrightarrow \frac{\lambda^x}{x!} e^{-\lambda}.$$
  Note that for large values of $n$, $p$ has to be small in order to keep the product $np$ constant (equal to $\lambda$). Thus the probability $p$ of the considered event is small when $n$ is large. This is why the Poisson distribution is called the distribution of rare events.

- 30. The figure illustrates this remark. It compares the binomial distribution $B(100, 0.1)$ with the corresponding Poisson distribution $Po(10)$, chosen because $np = 10$.
  [Figure: probabilities of the two distributions; legend: binomial law, Poisson law.]
  We also recall a known result that connects the Poisson distribution and the exponential distribution: when the Poisson distribution gives the number of occurrences in a time interval, the exponential distribution gives the length of the interval between two successive occurrences of the event.
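The comparison between $B(100, 0.1)$ and $Po(10)$ can be reproduced numerically. This is a small sketch with illustrative function names; it reports the largest pointwise gap between the two probability functions rather than drawing the plot.

```python
import math


def binomial_pmf(x, n, p):
    """Binomial probability C(n, x) p^x (1 - p)^(n - x)."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)


def poisson_pmf(x, lam):
    """Poisson probability lam^x e^(-lam) / x!."""
    return lam**x * math.exp(-lam) / math.factorial(x)


n, p = 100, 0.1
lam = n * p  # Poisson parameter matching np = 10

# Largest absolute difference between the two laws over x = 0, ..., n.
max_gap = max(abs(binomial_pmf(x, n, p) - poisson_pmf(x, lam)) for x in range(n + 1))
poisson_mass = sum(poisson_pmf(x, lam) for x in range(n + 1))
print(max_gap, poisson_mass)
```

The gap is small compared with the peak probabilities (roughly 0.13 in the figure's scale), which is the numerical content of the rare-events approximation for these parameters.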

- 31. Uniform distribution. The random variable $X$ has a uniform distribution on the interval $[a, b]$, denoted $U(a, b)$, when the density function is
  $$f(x) = f(x; a, b) = \begin{cases} \dfrac{1}{b - a}, & \text{if } x \in [a, b], \\ 0, & \text{if } x \notin [a, b]. \end{cases} \qquad (2)$$
  The distribution function of $X$ is
  $$F(x) = F(x; a, b) = \int_{-\infty}^{x} f(t; a, b)\,dt = \begin{cases} 0, & \text{if } x < a, \\ \dfrac{x - a}{b - a}, & \text{if } a \le x \le b, \\ 1, & \text{if } x > b. \end{cases}$$
  The name reflects the fact that, for subintervals of $[a, b]$ with the same length $\ell$, the probability that $X$ belongs to such a subinterval is $\ell / (b - a)$.

- 32. The figure contains the graphs of the density function and the distribution function.
  [Figure: density $f(x)$, equal to $1/(b-a)$ on $[a, b]$, and distribution function $F(x)$, rising from 0 at $a$ to 1 at $b$.]
  We remark that the distribution function is continuous. Thus, the values $x = a$ and $x = b$ in formula (2) can be attached to the cases $F(x) = 0$ and $F(x) = 1$, respectively. It follows that the density function can be considered non-zero on $[a, b]$, $(a, b]$, $[a, b)$ or $(a, b)$. In all cases we have the uniform distribution $U(a, b)$.

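- 33. Normal Distribution. The random variable $X$ has the normal distribution (Gauss-Laplace distribution) with parameters $\mu \in \mathbb{R}$ and $\sigma > 0$, denoted $N(\mu, \sigma)$, when the density function is
  $$f(x) = f(x; \mu, \sigma) = \frac{1}{\sigma \sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}, \quad \text{for all } x \in \mathbb{R}.$$
  The corresponding distribution function is given by
  $$F(x) = F(x; \mu, \sigma) = \int_{-\infty}^{x} f(t; \mu, \sigma)\,dt = \frac{1}{\sigma \sqrt{2\pi}} \int_{-\infty}^{x} e^{-\frac{(t - \mu)^2}{2\sigma^2}}\,dt, \quad \text{for all } x \in \mathbb{R}.$$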

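- 34. The graphs of the density function and the distribution function are given in the figure. The curve representing the graph of the density function $f$ is called the Gauss curve.
  [Figure: density $f(x)$ with the levels $f_m$ and $f_i$ marked, and distribution function $F(x)$ with the levels $F(\mu - \sigma)$, $F(\mu) = 0.5$, $F(\mu + \sigma)$ marked; $x$-axis ticks at $\mu - \sigma$, $\mu$, $\mu + \sigma$.]
  The maximum of $f$ is attained at $x = \mu$, with $f_m = \dfrac{1}{\sigma \sqrt{2\pi}}$. The inflexion points of $f$ are $x = \mu \pm \sigma$, with the corresponding value $f(\mu \pm \sigma) = f_i = \dfrac{1}{\sigma \sqrt{2\pi e}}$.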

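- 35. The distribution function of the standard normal distribution, $N(0, 1)$, is
  $$\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} e^{-\frac{t^2}{2}}\,dt, \quad x \in \mathbb{R},$$
  and is called the Laplace function. We remark that the function
  $$\tilde{\Phi}(x) = \frac{1}{\sqrt{2\pi}} \int_{0}^{x} e^{-\frac{t^2}{2}}\,dt, \quad x \in \mathbb{R},$$
  is also called the Laplace function. The two Laplace functions are related by
  $$\Phi(x) = \frac{1}{2} + \tilde{\Phi}(x).$$
  Using the two Laplace functions we have:
  $$F(x; \mu, \sigma) = \int_{-\infty}^{x} f(t; \mu, \sigma)\,dt = \frac{1}{\sigma \sqrt{2\pi}} \int_{-\infty}^{x} e^{-\frac{(t - \mu)^2}{2\sigma^2}}\,dt = \Phi\left(\frac{x - \mu}{\sigma}\right) = \frac{1}{2} + \tilde{\Phi}\left(\frac{x - \mu}{\sigma}\right).$$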
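Both Laplace functions can be evaluated with the error function from the Python standard library, using the standard identity $\Phi(x) = \frac{1}{2}\left(1 + \operatorname{erf}(x / \sqrt{2})\right)$. The sketch below is one possible way to do it; the function names are illustrative.

```python
import math


def laplace(x):
    """Phi(x): distribution function of N(0, 1), expressed through erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def laplace_tilde(x):
    """Phi~(x): the integral from 0 to x, so that Phi(x) = 1/2 + Phi~(x)."""
    return 0.5 * math.erf(x / math.sqrt(2.0))


def normal_cdf(x, mu, sigma):
    """F(x; mu, sigma) = Phi((x - mu) / sigma)."""
    return laplace((x - mu) / sigma)


# P(mu - sigma <= X <= mu + sigma) for an assumed N(10, 2) variable.
within_one_sigma = normal_cdf(12.0, 10.0, 2.0) - normal_cdf(8.0, 10.0, 2.0)
print(laplace(0.0), laplace(1.96), within_one_sigma)
```

Because the normal distribution is continuous, the choice between $P(X < x)$ and $P(X \le x)$ makes no difference here.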

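- 36. $\chi^2$ distribution (Helmert-Pearson). The random variable $X$ has the $\chi^2$ distribution, or Helmert-Pearson distribution, denoted $\chi^2(n)$, when the density function is
  $$f(x) = f(x; n) = \begin{cases} \dfrac{1}{2^{\frac{n}{2}}\, \Gamma\left(\frac{n}{2}\right)}\, x^{\frac{n}{2} - 1} e^{-\frac{x}{2}}, & \text{if } x > 0, \\ 0, & \text{if } x \le 0, \end{cases}$$
  where $n \in \mathbb{N}$ represents the number of degrees of freedom.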

- 37. The figure contains the graphs of the density function of the $\chi^2(n)$ distribution for some values of the parameter $n$.
  [Figure: densities $f(x; 5)$, $f(x; 10)$, $f(x; 20)$ for $n = 5, 10, 20$; $x$-axis marks at 3, 8, 18.]
  Property. If the random variables $X_1, \dots, X_n$ are independent, each of them having the same normal distribution with parameters $\mu = 0$ and $\sigma = 1$, then the random variable
  $$Y_n = \sum_{k=1}^{n} X_k^2$$
  is $\chi^2(n)$ distributed.
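The property can be explored by simulation. The sketch below draws sums of squares of independent $N(0, 1)$ variables and compares the sample mean and variance with the values $n$ and $2n$, which are the known mean and variance of $\chi^2(n)$. The seed, the sample size and the function name are arbitrary illustrative choices, and a simulation of this kind only suggests the result, it does not prove it.

```python
import random


def chi_square_sample(n, rng):
    """One draw of Y_n = X_1^2 + ... + X_n^2 with X_k independent N(0, 1)."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n))


rng = random.Random(12345)  # fixed seed so that the run is repeatable
n = 5
draws = [chi_square_sample(n, rng) for _ in range(20_000)]

sample_mean = sum(draws) / len(draws)
sample_var = sum((d - sample_mean) ** 2 for d in draws) / (len(draws) - 1)
print(sample_mean, sample_var)  # expected to be close to n = 5 and 2n = 10
```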

- 38. $t$ distribution (Student). The random variable $X$ is $t$-distributed (Student distributed), denoted $T(n)$, when it has the density function
  $$f(x) = f(x; n) = \frac{\Gamma\left(\frac{n + 1}{2}\right)}{\sqrt{n\pi}\, \Gamma\left(\frac{n}{2}\right)} \left(1 + \frac{x^2}{n}\right)^{-\frac{n + 1}{2}}, \quad x \in \mathbb{R},$$
  where $n \in \mathbb{N}$ represents the number of degrees of freedom.
  Gosset (1908) discovered this distribution. The result was not published right away; it later appeared under the pseudonym Student.
  For $n = 1$ one obtains the Cauchy distribution:
  $$f(x) = \frac{1}{\pi (1 + x^2)}, \quad x \in \mathbb{R}.$$

- 39. The figure contains the graphs of the density of $T(n)$ for some values of $n$.
  [Figure: densities $f(x)$ for $n = 1$, $n = 3$, $n = 20$, plotted for $x$ from $-5$ to $5$.]
  Property. If the random variables $X$ and $Y$ are independent, $X$ has the standard normal distribution $N(0, 1)$, and $Y$ has the $\chi^2$ distribution with $n$ degrees of freedom, then the random variable
  $$T = \frac{X}{\sqrt{Y / n}}$$
  has the Student distribution with $n$ degrees of freedom.

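- 40. $F$ distribution (Fisher–Snedecor). The random variable $X$ has the $F$ (Fisher-Snedecor) distribution, denoted $F(m, n)$, when the density function is
  $$f(x) = f(x; m, n) = \begin{cases} \dfrac{\Gamma\left(\frac{m + n}{2}\right)}{\Gamma\left(\frac{m}{2}\right) \Gamma\left(\frac{n}{2}\right)} \left(\dfrac{m}{n}\right)^{\frac{m}{2}} x^{\frac{m}{2} - 1} \left(1 + \dfrac{m}{n}\, x\right)^{-\frac{m + n}{2}}, & x > 0, \\ 0, & x \le 0, \end{cases}$$
  where $m, n \in \mathbb{N}$ represent the numbers of degrees of freedom.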

- 41. The figure contains graphs of the density function of $F(m, n)$ for some values of the parameters $m$ and $n$.
  [Figure: densities $f(x)$ for $(m, n) = (4, 2)$, $(3, 10)$, $(7, 10)$.]
  Property. If the random variables $X$ and $Y$ are independent, with $\chi^2(m)$ and $\chi^2(n)$ distributions respectively, then the random variable
  $$F = \frac{X / m}{Y / n}$$
  is $F(m, n)$ distributed.

- 42. Multidimensional normal distribution. The random vector $X = (X_1, \dots, X_n)$ has the $n$-dimensional (nondegenerate) normal distribution, denoted $N(\mu, V)$, when the density function is
  $$f(x) = f(x_1, \dots, x_n) = f(x; \mu, V) = \frac{1}{(2\pi)^{\frac{n}{2}} [\det(V)]^{\frac{1}{2}}} \exp\left(-\frac{1}{2} (x - \mu)^{\top} V^{-1} (x - \mu)\right),$$
  for all $x \in \mathbb{R}^n$. Here $V$ is a positive definite matrix of order $n$, and $\mu \in \mathbb{R}^n$.
  When $n = 2$, the density function of the random vector $(X, Y)$ with the two-dimensional normal distribution can be put in the form
  $$f(x, y) = \frac{1}{2\pi \sigma_1 \sigma_2 \sqrt{1 - r^2}} \exp\left\{-\frac{1}{2(1 - r^2)} \left[\frac{(x - \mu_1)^2}{\sigma_1^2} - 2r\, \frac{(x - \mu_1)(y - \mu_2)}{\sigma_1 \sigma_2} + \frac{(y - \mu_2)^2}{\sigma_2^2}\right]\right\},$$
  for $(x, y) \in \mathbb{R}^2$.

- 43. The figure represents the graph of the density function of the two-dimensional normal distribution with $\mu_1 = \mu_2 = 0$, $\sigma_1 = 1$, $\sigma_2 = 2$ and $r = -0.5$.
  [Figure: surface plot of $f(x, y)$ over the $(x, y)$ plane.]
  Property. If the random vector $(X, Y)$ is two-dimensional normally distributed, $N(\mu_1, \mu_2; \sigma_1, \sigma_2; r)$, then the components $X$ and $Y$ of the random vector are normally distributed: $N(\mu_1, \sigma_1)$ and $N(\mu_2, \sigma_2)$ respectively.

- 44. Conditional distribution. Let $(X, Y)$ be a two-dimensional random vector with distribution function $F$.
  Definition. The conditional distribution function of the random variable $X$ with respect to the random variable $Y$ is the function $F_{X|Y} : \mathbb{R} \to \mathbb{R}$ given by
  $$F_{X|Y}(x \mid y) = P(X < x \mid Y = y), \quad \forall x \in \mathbb{R},\ y \in \mathbb{R} \text{ fixed}.$$
  Remark. We can rewrite
  $$F_{X|Y}(x \mid y) = \lim_{h \searrow 0} P(X < x \mid y \le Y < y + h),$$
  and, using the definition of conditional probability,
  $$F_{X|Y}(x \mid y) = \lim_{h \searrow 0} \frac{P(X < x,\ y \le Y < y + h)}{P(y \le Y < y + h)} = \lim_{h \searrow 0} \frac{F(x, y + h) - F(x, y)}{F_Y(y + h) - F_Y(y)}.$$

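- 45. Let $(X, Y)$ be a discrete random vector with the distribution
  $$\begin{array}{c|ccc} X \backslash Y & \cdots & y_j & \cdots \\ \hline \vdots & & \vdots & \\ x_i & \cdots & p_{ij} & \cdots \\ \vdots & & \vdots & \end{array}$$
  with $(x_i, y_j) \in \mathbb{R} \times \mathbb{R}$, $(i, j) \in I \times J$.
  Definition. The conditional distribution of the random variable $X$ with respect to the random variable $Y$ is given by
  $$p_{i|j} = P(X = x_i \mid Y = y_j) = \frac{P(X = x_i, Y = y_j)}{P(Y = y_j)} = \frac{p_{ij}}{p_{\cdot j}}, \quad (i, j) \in I \times J,$$
  where
  $$p_{\cdot j} = P(Y = y_j) = \sum_{i \in I} p_{ij}, \quad j \in J.$$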

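- 46. We remark that the formula
  $$p_{i \cdot} = P(X = x_i) = \sum_{j \in J} p_{\cdot j}\, p_{i|j}, \quad \text{for all } i \in I,$$
  holds. It represents the formula for total probability. We also have Bayes's formula:
  $$p_{i|j} = \frac{p_{ij}}{\sum_{i \in I} p_{ij}} = \frac{p_{i \cdot}\, p_{j|i}}{\sum_{i \in I} p_{i \cdot}\, p_{j|i}}, \quad (i, j) \in I \times J.$$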

- 47. Let $(X, Y)$ be a two-dimensional continuous random vector with density function $f$.
  Definition. The conditional density function of the random variable $X$ with respect to the random variable $Y$ is the function given by
  $$f_{X|Y}(x \mid y) = \begin{cases} \dfrac{f(x, y)}{f_Y(y)}, & \text{if } f_Y(y) \ne 0, \\ 0, & \text{if } f_Y(y) = 0. \end{cases}$$
  The formula for total probability and Bayes's formula hold:
  $$f_X(x) = \int_{-\infty}^{+\infty} f_Y(y)\, f_{X|Y}(x \mid y)\,dy, \quad \forall x \in \mathbb{R},$$
  $$f_{X|Y}(x \mid y) = \frac{f_X(x)\, f_{Y|X}(y \mid x)}{\int_{\mathbb{R}} f_X(x)\, f_{Y|X}(y \mid x)\,dx}, \quad \forall (x, y) \in \mathbb{R} \times \mathbb{R}.$$

- 48. Example. Let $(X, Y)$ be a two-dimensional normally distributed random vector, $N(\mu_1, \mu_2; \sigma_1, \sigma_2; r)$. The components $X$ and $Y$ are normally distributed: $N(\mu_1, \sigma_1)$ and $N(\mu_2, \sigma_2)$ respectively. The conditional densities of $X$ with respect to $Y$ and of $Y$ with respect to $X$ are:
  $$f_{X|Y}(x \mid y) = \frac{1}{\sigma_1 \sqrt{2\pi (1 - r^2)}}\, e^{-\frac{(x - m_1(y))^2}{2(1 - r^2)\sigma_1^2}}, \quad \forall (x, y) \in \mathbb{R} \times \mathbb{R},$$
  $$f_{Y|X}(y \mid x) = \frac{1}{\sigma_2 \sqrt{2\pi (1 - r^2)}}\, e^{-\frac{(y - m_2(x))^2}{2(1 - r^2)\sigma_2^2}}, \quad \forall (y, x) \in \mathbb{R} \times \mathbb{R},$$
  where
  $$m_1(y) = \mu_1 + r\, \frac{\sigma_1}{\sigma_2} (y - \mu_2), \qquad m_2(x) = \mu_2 + r\, \frac{\sigma_2}{\sigma_1} (x - \mu_1).$$
  We remark that the two conditional densities correspond to the normal distributions $N\left(m_1(y), \sigma_1 \sqrt{1 - r^2}\right)$ and $N\left(m_2(x), \sigma_2 \sqrt{1 - r^2}\right)$ respectively.
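The example can be checked numerically by comparing the ratio $f(x, y) / f_Y(y)$ with the normal density $N\left(m_1(y), \sigma_1\sqrt{1 - r^2}\right)$. The sketch below uses the parameter values $\mu_1 = \mu_2 = 0$, $\sigma_1 = 1$, $\sigma_2 = 2$, $r = -0.5$ that appear in the lecture's figures; the evaluation point and the function names are arbitrary illustrative choices.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma), with sigma the standard deviation."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma**2)) / (sigma * math.sqrt(2 * math.pi))


def bivariate_normal_pdf(x, y, mu1, mu2, s1, s2, r):
    """Density of the two-dimensional normal distribution N(mu1, mu2; s1, s2; r)."""
    zx, zy = (x - mu1) / s1, (y - mu2) / s2
    q = (zx * zx - 2 * r * zx * zy + zy * zy) / (1 - r * r)
    return math.exp(-q / 2) / (2 * math.pi * s1 * s2 * math.sqrt(1 - r * r))


def conditional_x_given_y(y, mu1, mu2, s1, s2, r):
    """Parameters (m1(y), s1 * sqrt(1 - r^2)) of the conditional law of X given Y = y."""
    return mu1 + r * s1 / s2 * (y - mu2), s1 * math.sqrt(1 - r * r)


mu1, mu2, s1, s2, r = 0.0, 0.0, 1.0, 2.0, -0.5
x, y = 0.3, 2.0  # arbitrary evaluation point

m1, sd1 = conditional_x_given_y(y, mu1, mu2, s1, s2, r)
ratio = bivariate_normal_pdf(x, y, mu1, mu2, s1, s2, r) / normal_pdf(y, mu2, s2)
direct = normal_pdf(x, m1, sd1)
print(m1, sd1, ratio, direct)
```

The two printed densities agree up to rounding, as the formula for $f_{X|Y}$ predicts.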

- 49. The figure presents the graphs of the two conditional density functions for the two-dimensional normal distribution with $\mu_1 = \mu_2 = 0$, $\sigma_1 = 1$, $\sigma_2 = 2$, $r = -0.5$, for three values of each conditioning component: $X = -2, 0, 2$ and $Y = -2, 0, 2$.
  [Figure: conditional densities $f_{X|Y}(x \mid y)$ for $Y = -2, 0, 2$ and $f_{Y|X}(y \mid x)$ for $X = -2, 0, 2$.]