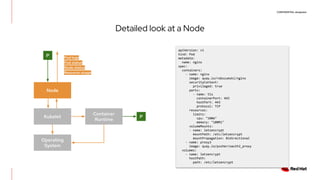

The document describes how to run and deploy a standalone kubelet without a Kubernetes control plane, covering its prerequisites and capabilities. It explains the architecture, the deployment considerations, and the pros and cons of the approach, particularly for edge computing. It includes example configurations for a minimal deployment, along with forward-looking recommendations for using Ignition configs with cloud providers.

The Standalone Kubelet

```
$ systemctl cat kubelet
# /etc/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet Server
After=crio.service
Requires=crio.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/bin/kubelet \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBELET_API_SERVER \
    $KUBELET_ADDRESS \
    $KUBELET_PORT \
    $KUBELET_HOSTNAME \
    $KUBE_ALLOW_PRIV \
    $KUBELET_ARGS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

$ cat /etc/kubernetes/kubelet
###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=127.0.0.1"

# The port for the info server to serve on
# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=127.0.0.1"

# Add your own!
KUBELET_ARGS="--cgroup-driver=systemd --fail-swap-on=false \
    --pod-manifest-path=/etc/kubernetes/manifests \
    --container-runtime=remote \
    --container-runtime-endpoint=unix:///var/run/crio/crio.sock \
    --runtime-request-timeout=10m"
```

![The Standalone Kubelet](https://image.slidesharecdn.com/kubeletwnokubernetes7-9-20-200709153601/85/Kubelet-with-no-Kubernetes-Masters-DevNation-Tech-Talk-14-320.jpg)
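Most of the flags in `KUBELET_ARGS` also have equivalents in a kubelet configuration file (a `KubeletConfiguration` object passed with `--config`). The helper below is a minimal sketch that writes one. The function name `write_kubelet_config` and the file name `kubelet-config.yaml` are hypothetical, and the sketch assumes a kubelet new enough to accept the `containerRuntimeEndpoint` field. One difference matters when there is no API server: with a config file, webhook authentication is enabled by default, and a standalone kubelet has nothing to send those webhook requests to. The sketch therefore disables the webhook and uses `AlwaysAllow` authorization, as the flag defaults do. `--hostname-override` stays a flag, and `--container-runtime=remote` has no config field.

```shell
#!/usr/bin/env bash
# write_kubelet_config [DIR]
# Hypothetical helper: writes DIR/kubelet-config.yaml (DIR defaults to /etc/kubernetes)
# containing the KubeletConfiguration equivalent of the KUBELET_ARGS flags above.
write_kubelet_config() {
  local config_dir="${1:-/etc/kubernetes}"
  mkdir -p "$config_dir" || return 1
  # Quoted 'EOF' so nothing in the YAML is expanded by the shell.
  cat > "$config_dir/kubelet-config.yaml" <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# --address=127.0.0.1
address: 127.0.0.1
# --cgroup-driver=systemd
cgroupDriver: systemd
# --fail-swap-on=false
failSwapOn: false
# --pod-manifest-path=/etc/kubernetes/manifests
staticPodPath: /etc/kubernetes/manifests
# --container-runtime-endpoint (assumption: only newer kubelets have this field)
containerRuntimeEndpoint: unix:///var/run/crio/crio.sock
# --runtime-request-timeout=10m
runtimeRequestTimeout: 10m
# No API server to delegate to: turn off the webhook that a config
# file enables by default, and allow all requests.
authentication:
  webhook:
    enabled: false
authorization:
  mode: AlwaysAllow
EOF
}
```

On the node, one would run `write_kubelet_config` as root, set `KUBELET_ARGS="--config=/etc/kubernetes/kubelet-config.yaml"` in `/etc/kubernetes/kubelet`, and run `systemctl restart kubelet`. Command-line flags take precedence over values in the file, so any flags left in place simply override it. On older kubelets that lack the `containerRuntimeEndpoint` field, drop that line and keep `--container-runtime=remote --container-runtime-endpoint=unix:///var/run/crio/crio.sock` as flags.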

Running a Container

Deployment:

```
$ scp pod.yaml root@fedora:/etc/kubernetes/manifests
```

List running workloads:

```
$ ssh root@fedora
$ crictl ps
CONTAINER IMAGE STATE
proxy3 quay.io/pusher/oauth2_proxy@sha256:b5c44a0aba0e146a776a6a2a07353a3dde3ee78230ebfc56bc973e37ec68e425 Running
nginx7 quay.io/robszumski/nginx-for-drone@sha256:aee669959c886caaf7fa0c4d11ff35f645b68e0b3eceea1280ff1221d88aac36 Running
cncjs9 quay.io/robszumski/cncjs@sha256:3d11bc247c023035f2f2c22ba4fa13c5c43d7c28d8f87588c0f7bdfd3b82121c Running
transcode15 quay.io/robszumski/rtsp-to-mjpg@sha256:52dd81db58e5e7c9433da0eedb1c02074114459d4802addc08c7fe8f418aead5 Running
```

Stream logs:

```
$ crictl logs 86fadc1aee09c
[2020/07/05 20:15:46] [oauthproxy.go:252] mapping path "/" => upstream "http://192.168.7.62:8080/"
[2020/07/05 20:15:46] [http.go:57] HTTP: listening on :4180
[2020/07/05 20:18:45] [google.go:270] refreshed access token Session{email:xxxx@robszumski.com user:168129640651108868061 PreferredUsername: token:true id_token:true created:2020-07-05 16:49:23.445238687 +0000 UTC expires:2020-07-05 21:18:44 +0000 UTC refresh_token:true} (expired on 2020-07-05 20:08:08 +0000 UTC)
173.53.xx.xxx - xxxx@robszumski.com [2020/07/05 20:18:44] xxxx.robszumski.com GET - "/oauth2/auth" HTTP/1.0 "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.129 Safari/537.36" 202 0 1.221
```

![Running a Container](https://image.slidesharecdn.com/kubeletwnokubernetes7-9-20-200709153601/85/Kubelet-with-no-Kubernetes-Masters-DevNation-Tech-Talk-16-320.jpg)
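Deployment here is just a file copy: the kubelet watches its `--pod-manifest-path` directory and runs any Pod manifest that appears there as a static pod. Below is a minimal sketch of writing such a manifest directly on the node. `write_static_pod`, the pod name, and the example image are illustrative assumptions, not the contents of the talk's `pod.yaml`. The sketch writes to a hidden temporary file and then renames it into place. It relies on the kubelet skipping dot-files in that directory, so a half-written manifest is never picked up.

```shell
#!/usr/bin/env bash
# write_static_pod NAME IMAGE [DIR]
# Hypothetical helper: writes a minimal single-container Pod manifest to DIR/NAME.yaml.
# DIR defaults to the --pod-manifest-path used above. NAME must be a valid
# Kubernetes object name (lowercase, DNS-style); this function does not check it.
write_static_pod() {
  local name="${1:-}" image="${2:-}"
  local dir="${3:-/etc/kubernetes/manifests}"
  if [ -z "$name" ] || [ -z "$image" ]; then
    echo "usage: write_static_pod NAME IMAGE [DIR]" >&2
    return 2
  fi
  mkdir -p "$dir" || return 1
  # Hidden temp file in the same directory, so the final mv is an atomic rename.
  local tmpfile
  tmpfile="$(mktemp "$dir/.${name}.XXXXXX")" || return 1
  cat > "$tmpfile" <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  containers:
  - name: ${name}
    image: ${image}
EOF
  mv "$tmpfile" "$dir/${name}.yaml"
}
```

For example, running `write_static_pod web docker.io/library/nginx:stable` as root on the node should, once the image is pulled, produce a new entry in `crictl pods` and `crictl ps`. `crictl logs -f <container-id>` then follows the container's output. Deleting `/etc/kubernetes/manifests/web.yaml` makes the kubelet stop the pod again.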