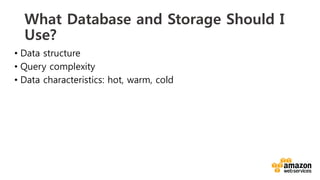

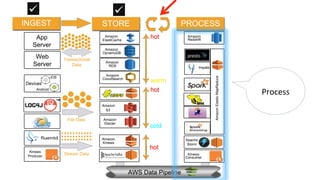

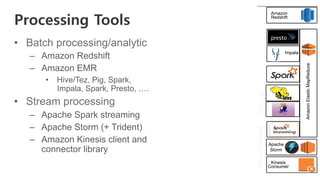

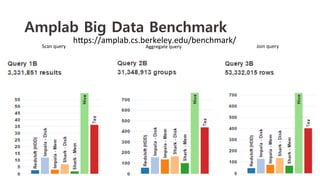

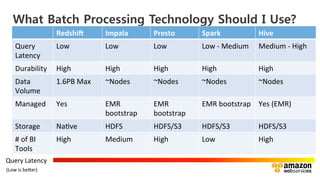

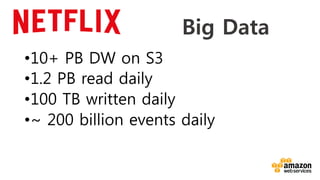

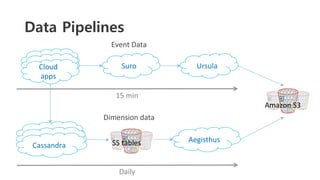

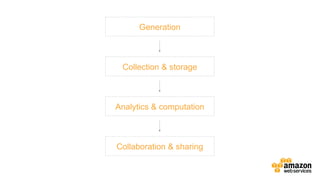

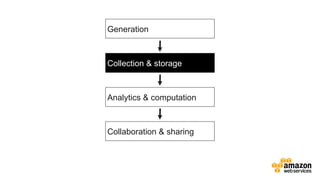

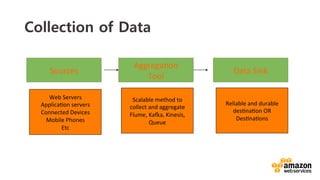

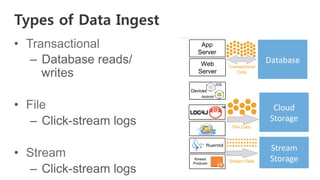

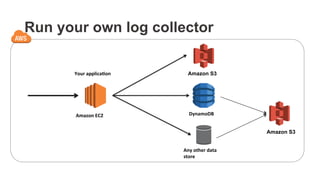

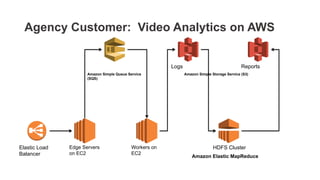

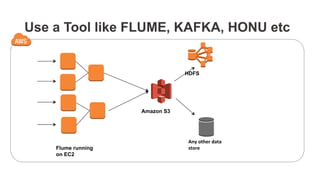

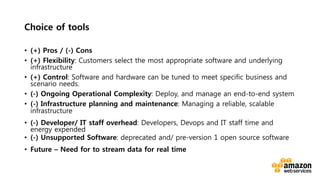

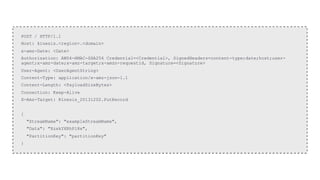

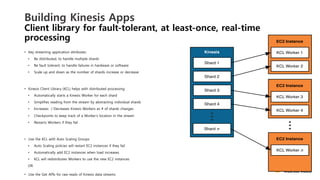

The document outlines a webinar on starting big data projects using Amazon Web Services (AWS) tools such as Elastic MapReduce, Redshift, and Kinesis. It covers various AWS big data components, data collection and storage methods, and analytics strategies, providing insights into real-time data processing and successful use cases, particularly for video analytics. The presentation emphasizes the importance of selecting the right tools at the right scale and time for effective big data solutions.

![Data

Sources

App.4

[Machine

Learning]

AWS

Endpoint

App.1

[Aggregate

&

De-‐Duplicate]

Data

Sources

Data

Sources

Data

Sources

App.2

[Metric

Extrac0on]

S3

DynamoDB

Redshift

App.3

[Sliding

Window

Analysis]

Data

Sources

Availability

Zone

Shard

1

Shard

2

Shard

N

Availability

Zone

Availability

Zone

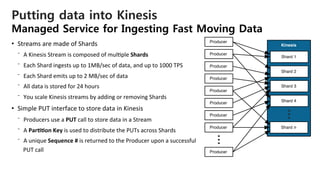

Introducing Amazon Kinesis

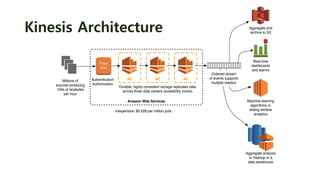

Managed Service for Real-Time Processing of Big Data

EMR](https://image.slidesharecdn.com/krwebinar2015yourfirstbigdataonaws-150712121920-lva1-app6892/85/AWS-AWS-2015-32-320.jpg)
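The diagram shows a stream split into shards. Kinesis routes each record by MD5-hashing its partition key to a 128-bit integer and sending it to the shard whose hash key range contains that value. The sketch below models only that routing locally. It assumes the shards split the key space evenly, and the function names (`even_shard_ranges`, `hash_key`, `shard_for`) are hypothetical illustrations, not part of any AWS SDK.

```python
import hashlib
from typing import List, Tuple

# Partition keys are MD5-hashed to 128-bit integers, so the key space is [0, 2**128).
HASH_KEY_SPACE = 2 ** 128


def even_shard_ranges(shard_count: int) -> List[Tuple[int, int]]:
    """Split the hash key space into contiguous, inclusive ranges, one per shard.

    Assumption (hypothetical helper): shards divide the key space evenly.
    After resharding, real shard ranges can be uneven.
    """
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    step = HASH_KEY_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * step
        # The last shard absorbs any remainder so the ranges cover the whole space.
        end = HASH_KEY_SPACE - 1 if i == shard_count - 1 else (i + 1) * step - 1
        ranges.append((start, end))
    return ranges


def hash_key(partition_key: str) -> int:
    """MD5-hash a partition key and read the digest as a big-endian 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def shard_for(partition_key: str, ranges: List[Tuple[int, int]]) -> int:
    """Return the index of the shard whose hash key range contains the key's hash."""
    h = hash_key(partition_key)
    for index, (start, end) in enumerate(ranges):
        if start <= h <= end:
            return index
    raise RuntimeError("hash key not covered by any shard range")


if __name__ == "__main__":
    ranges = even_shard_ranges(3)
    for key in ["user-1", "user-2", "user-1"]:
        print(key, "-> shard", shard_for(key, ranges))
```

Because the mapping is deterministic, every record with the same partition key (such as the hypothetical `user-1` above) lands on the same shard, which keeps per-key ordering within that shard. Using keys with many distinct values spreads the load across all shards.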

![Amazon Kinesis: Key Developer Benefits](https://image.slidesharecdn.com/krwebinar2015yourfirstbigdataonaws-150712121920-lva1-app6892/85/AWS-AWS-2015-37-320.jpg)

- Easy Administration: Managed service for real-time streaming data collection, processing, and analysis. Simply create a new stream, set the desired level of capacity, and let the service handle the rest.
- Real-time Performance: Perform continual processing on streaming big data. Processing latencies fall to a few seconds, compared with the minutes or hours associated with batch processing.
- High Throughput, Elastic: Seamlessly scale to match your data throughput rate and volume. You can easily scale up to gigabytes per second. The service will scale up or down based on your operational or business needs.
- S3, EMR, Storm, Redshift, & DynamoDB Integration: Reliably collect, process, and transform all of your data in real time and deliver it to the AWS data stores of your choice, with connectors for S3, Redshift, and DynamoDB.
- Build Real-time Applications: Client libraries that enable developers to design and operate real-time streaming data processing applications.
- Low Cost: Cost-efficient for workloads of any scale. You can get started by provisioning a small stream, and pay low hourly rates only for what you use.
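In a Kinesis stream, the "desired level of capacity" is a number of shards. The sketch below is a rough sizing estimate. It assumes the per-shard limits of 1 MB/s or 1,000 records/s for writes, plus 2 MB/s for reads shared by all consumers (verify these against the current AWS documentation). `shards_needed` is a hypothetical helper, not part of any AWS SDK.

```python
import math

# Assumed per-shard limits for Kinesis Data Streams (check current AWS docs).
WRITE_MB_PER_SHARD = 1.0        # write throughput per shard, MB/s
WRITE_RECORDS_PER_SHARD = 1000  # write records per shard, records/s
READ_MB_PER_SHARD = 2.0         # read throughput per shard, shared by consumers, MB/s


def shards_needed(avg_record_kb: float, records_per_sec: float, consumers: int = 1) -> int:
    """Estimate how many shards a workload needs under the assumed limits."""
    if avg_record_kb <= 0 or records_per_sec < 0 or consumers < 1:
        raise ValueError("invalid workload description")
    write_mb_per_sec = avg_record_kb * records_per_sec / 1024
    # Every consumer reads the full stream, so total read volume scales with consumers.
    read_mb_per_sec = write_mb_per_sec * consumers
    by_write_bytes = write_mb_per_sec / WRITE_MB_PER_SHARD
    by_write_records = records_per_sec / WRITE_RECORDS_PER_SHARD
    by_read_bytes = read_mb_per_sec / READ_MB_PER_SHARD
    # The tightest of the three limits decides; a stream always has at least one shard.
    return max(1, math.ceil(max(by_write_bytes, by_write_records, by_read_bytes)))


if __name__ == "__main__":
    print(shards_needed(2, 3000, consumers=2))
    print(shards_needed(0.5, 4000))
```

For example, `shards_needed(2, 3000, consumers=2)` returns 6 because about 5.86 MB/s of writes (and the matching reads for two consumers) exceeds five shards' capacity. `shards_needed(0.5, 4000)` returns 4 because the 1,000 records/s write limit dominates even though the byte volume is small.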