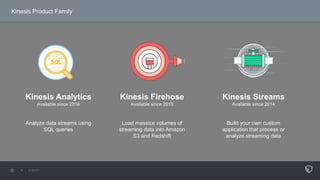

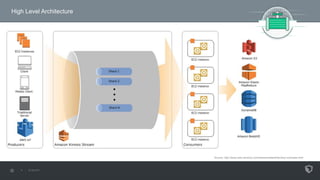

- Amazon Kinesis is an AWS platform for collecting and processing streaming data in real time. It comprises three services: Kinesis Streams, Kinesis Firehose, and Kinesis Analytics.

- Kinesis Streams lets users build custom applications that process or analyze streaming data. It is a high-throughput, low-latency service for real-time processing of large, distributed data streams.

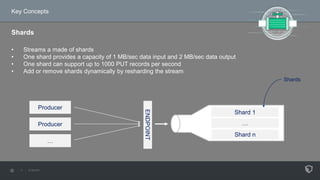

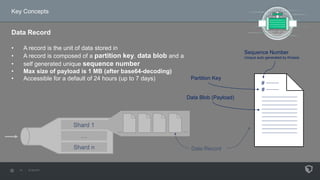

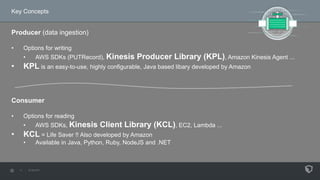

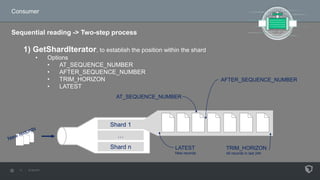

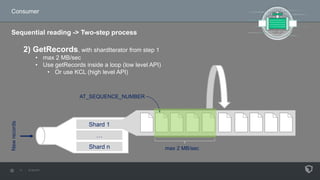

- Key concepts of Kinesis Streams: shards, which partition the streaming data; producers, which ingest data into the stream; data records, the unit of stored data; and consumers, which read and process the streaming data.
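
To make these four concepts concrete, here is a minimal in-memory sketch in Python. It is a toy model, not the AWS SDK: the names `Shard`, `make_shards`, `put_record`, and `read_shard` are hypothetical and exist only for illustration. The one behavior it mirrors from the real service is routing, where the MD5 hash of a record's partition key is read as a 128-bit integer and the record goes to the shard whose hash key range contains that value. The sequence numbers here are simple per-shard counters, which is a simplification.

```python
import hashlib
from dataclasses import dataclass, field

HASH_SPACE = 2 ** 128  # MD5 digests are 128 bits wide


@dataclass
class Shard:
    """One partition of the stream, owning an inclusive hash key range."""
    shard_id: str
    starting_hash_key: int
    ending_hash_key: int
    records: list = field(default_factory=list)


def make_shards(count):
    """Split the 128-bit hash space into `count` contiguous ranges."""
    size = HASH_SPACE // count
    shards = []
    for i in range(count):
        start = i * size
        # The last shard absorbs any remainder so the whole space is covered.
        end = HASH_SPACE - 1 if i == count - 1 else start + size - 1
        shards.append(Shard(f"shardId-{i:012d}", start, end))
    return shards


def hash_key(partition_key):
    """Map a partition key to a 128-bit integer via MD5."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def put_record(shards, partition_key, data):
    """Producer side: append a data record to the shard that owns its key."""
    h = hash_key(partition_key)
    for shard in shards:
        if shard.starting_hash_key <= h <= shard.ending_hash_key:
            shard.records.append({
                "partition_key": partition_key,
                "data": data,
                # Simplified: a per-shard counter stands in for the sequence number.
                "sequence_number": len(shard.records),
            })
            return shard.shard_id
    raise ValueError("no shard covers this hash key")


def read_shard(shard):
    """Consumer side: read a shard's data payloads in arrival order."""
    return [record["data"] for record in shard.records]


if __name__ == "__main__":
    stream = make_shards(2)
    for i in range(3):
        shard_id = put_record(stream, "sensor-1", f"reading-{i}".encode())
        print("sensor-1 ->", shard_id)
    for shard in stream:
        print(shard.shard_id, read_shard(shard))
```

Because routing depends only on the partition key, every record with the same key lands in the same shard and is read back in the order it was written. Ordering across different shards is not modeled here.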