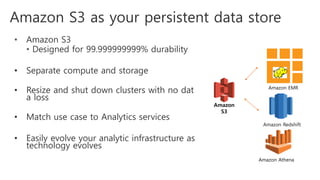

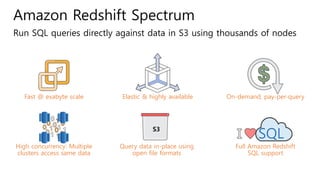

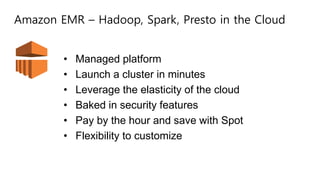

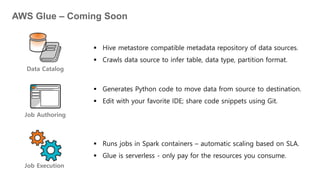

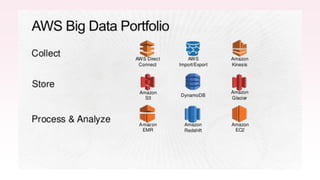

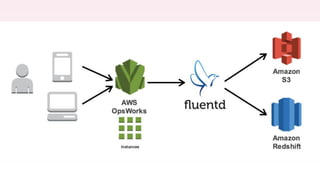

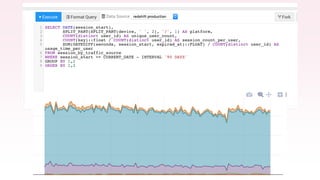

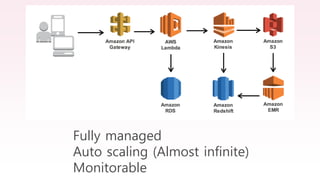

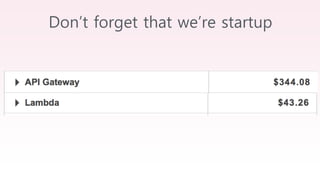

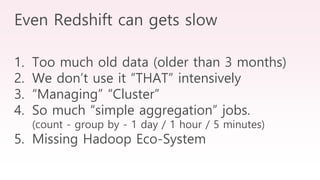

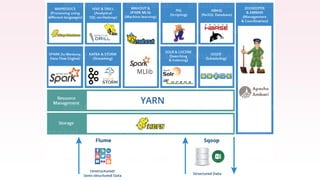

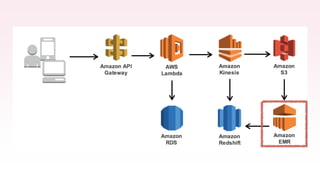

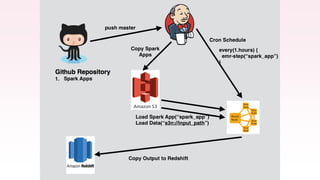

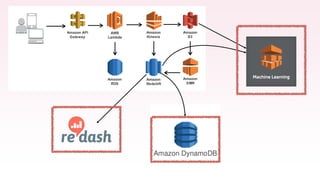

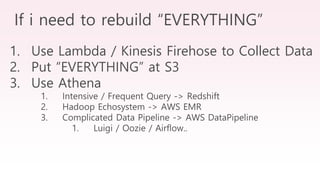

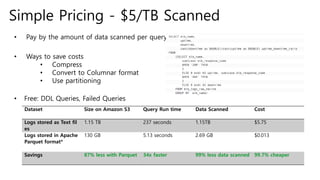

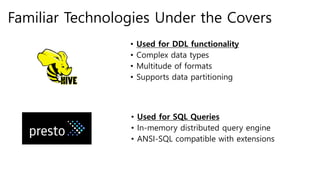

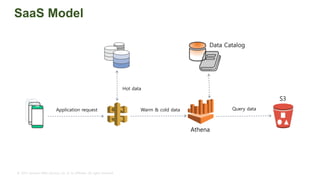

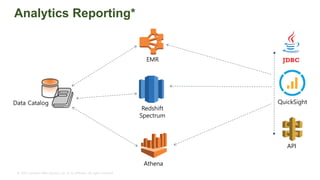

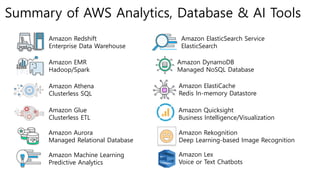

The document provides an overview of AWS big data and analytics services, focusing on Amazon Athena, Amazon EMR, and Amazon Redshift, which support data ingestion, storage, querying, and analysis. It covers features such as serverless architecture, scalability, cost management, and support for different data formats and structures. It also emphasizes managing big data efficiently while maintaining security and compliance.