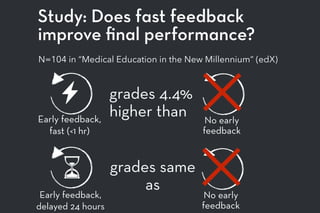

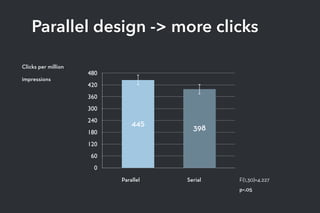

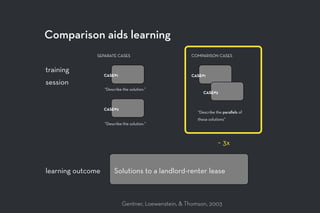

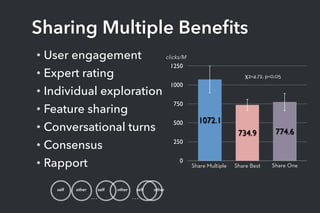

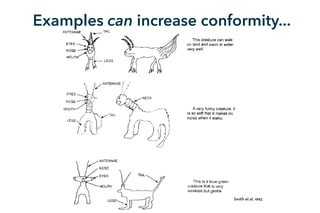

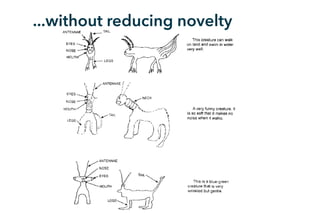

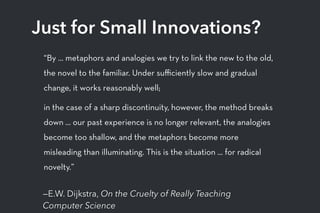

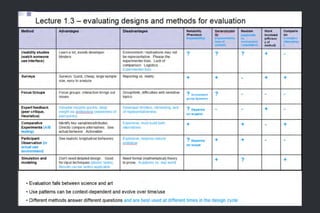

The document discusses the challenges and potential of scaling design education, emphasizing the need for practical theory and empirical approaches. It explores the concepts of peer assessment, diversity in learning, and the role of prototyping in enhancing creative outcomes. Various studies and examples illustrate how engagement and immediate feedback can improve learning experiences in large-scale settings.

![Students with novel answers sometimes penalized unfairly. “damn peer review - it was a bunch of [students] just making things fit into a rubric - checking off a check sheet - like talking about dog poop. what is this world coming to?” -A student in a peer-assessed class](https://image.slidesharecdn.com/klemmer-presentation-150330115127-conversion-gate01/85/Design-at-Large-Integrating-Teaching-and-Experiments-Online-featuring-Scott-Klemmer-34-320.jpg)

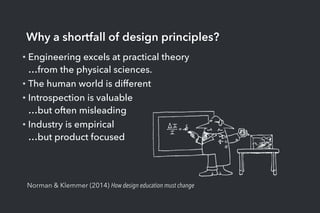

![Why diversity? Different professional knowledge, educational systems, and cultural values. Information [Tudge ’08]. Cognition [Gurin et al. ’02] [Nemeth ’86] [Schwartz et al ’04]. From passivity to active, effortful, conscious thinking](https://image.slidesharecdn.com/klemmer-presentation-150330115127-conversion-gate01/85/Design-at-Large-Integrating-Teaching-and-Experiments-Online-featuring-Scott-Klemmer-45-320.jpg)