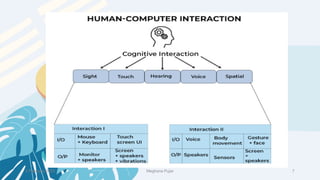

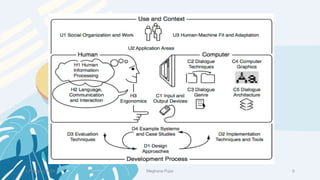

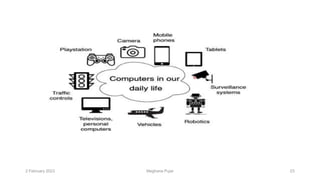

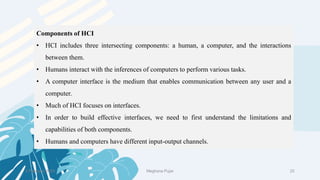

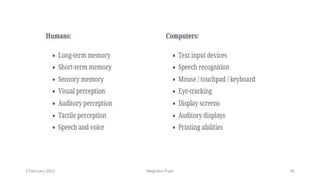

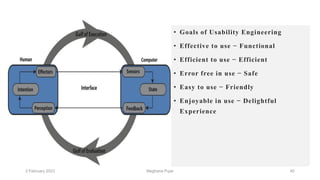

This document introduces human-computer interaction (HCI). It states the course objectives: understanding design and evaluation methods for user-friendly interfaces, and understanding tools such as sensors and augmented reality. Key aspects of HCI include the user, the task, the interface, and the context of use. Examples include IoT devices, eye-tracking technology, and speech recognition.

![Objective](https://image.slidesharecdn.com/it351mid-230327143611-1634cd57/85/IT351_Mid-pdf-170-320.jpg)

- The GOMS family consists of four models:
  - Keystroke Level Model (KLM)
  - Original GOMS proposed by Card, Moran and Newell, popularly known as CMN-GOMS
  - Natural GOMS Language (NGOMSL)
  - Cognitive Perceptual Motor GOMS (CPM-GOMS), also known as Critical Path Method GOMS

![Another Example](https://image.slidesharecdn.com/it351mid-230327143611-1634cd57/85/IT351_Mid-pdf-253-320.jpg)

Goal: Close window

- [Select Goal: Use menu method
  - Operator: Move mouse to File menu
  - Operator: Pull down File menu
  - Operator: Click over Close option
- Goal: Use Ctrl+F4 method
  - Operator: Press Ctrl and F4 keys together]
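To make the example concrete, the Python sketch below writes the two methods for the "Close window" goal as sequences of Keystroke Level Model operators and sums their times. The operator times are the commonly cited KLM estimates from Card, Moran and Newell. The exact operator sequences, the placement of a single mental operator M at the start of each method, and the selection rule `select_method` are hypothetical modeling choices, not part of the slide.

```python
# Hypothetical sketch: the "Close window" goal expressed as two GOMS methods,
# each estimated with Keystroke Level Model (KLM) operator times.

# Commonly cited KLM operator times in seconds (Card, Moran and Newell).
# K assumes an average skilled typist; real values vary from user to user.
KLM_TIMES = {
    "K": 0.20,  # press a key
    "P": 1.10,  # point with the mouse to a target
    "B": 0.10,  # press or release a mouse button
    "H": 0.40,  # home hands between keyboard and mouse
    "M": 1.35,  # mental preparation
}

# Assumed operator sequences (where M goes is a modeling choice).
METHODS = {
    # M, move to File menu (P), pull it down (B B), point at Close (P), click (B B)
    "menu": ["M", "P", "B", "B", "P", "B", "B"],
    # M, then press Ctrl and F4 (two keystrokes, K K)
    "ctrl_f4": ["M", "K", "K"],
}


def klm_time(operators: list[str]) -> float:
    """Sum the KLM times of an operator sequence, in seconds."""
    return sum(KLM_TIMES[op] for op in operators)


def select_method(knows_shortcut: bool) -> str:
    """Hypothetical selection rule: use the shortcut if the user knows it."""
    return "ctrl_f4" if knows_shortcut else "menu"


for name, ops in METHODS.items():
    print(f"{name}: {klm_time(ops):.2f} s")
```

Under these assumptions the menu method comes to about 3.95 s and the Ctrl+F4 method to about 1.75 s; different operator sequences or operator times would change both estimates.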

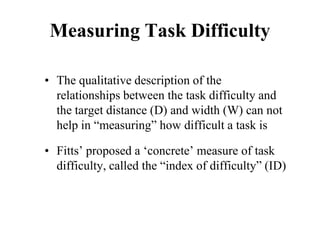

![Measuring Task Difficulty](https://image.slidesharecdn.com/it351mid-230327143611-1634cd57/85/IT351_Mid-pdf-275-320.jpg)

- From the analysis of empirical data, Fitts proposed the following relationship between the index of difficulty ID, the distance D to the target, and the target width W:

$$ID = \log_2\left(\frac{D}{W} + 1\right) \quad \text{(unit: bits)}$$

(Note: the above formulation is not the one Fitts originally proposed. It is a refinement of the original formulation over time. Since it is the most common formulation of ID, we shall follow it rather than the original one.)
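A minimal Python sketch of the index-of-difficulty formula. The function name `index_of_difficulty` is hypothetical, and D and W are assumed to be in the same units (for example, pixels), since only their ratio enters the formula.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts' index of difficulty, ID = log2(D/W + 1), in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


print(index_of_difficulty(300, 20))  # D/W = 15, so ID = log2(16) = 4.0 bits
print(index_of_difficulty(600, 40))  # doubling both D and W leaves ID at 4.0
print(index_of_difficulty(140, 20))  # D/W = 7, so ID = log2(8) = 3.0 bits
```

The second call shows that ID depends only on the ratio D/W: a target twice as far away but twice as wide is equally difficult under this formulation.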

![Cross-Entropy Based Similarity Measure](https://image.slidesharecdn.com/it351mid-230327143611-1634cd57/85/IT351_Mid-pdf-337-320.jpg)

- Let X be a random variable that can take any character as its value.
- Further, let P be the probability distribution function of X [i.e., $P(x_i) = P(X = x_i)$].
- We can calculate the "entropy", a statistical measure, of P as follows:

$$H(P) = -\sum_i P(x_i) \log_2 P(x_i)$$
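A minimal Python sketch of the entropy formula for a character distribution. It assumes P is estimated from the relative frequency of each character in a sample string; that estimation step and the function name `char_entropy` are illustrative assumptions. The slide's title refers to a cross-entropy based similarity measure, but this sketch covers only the entropy H(P) defined on the slide.

```python
import math
from collections import Counter


def char_entropy(text: str) -> float:
    """Entropy H(P) = -sum_i P(x_i) log2 P(x_i) of the characters in text, in bits.

    P(x_i) is estimated as (count of x_i) / len(text).
    """
    if not text:
        raise ValueError("text must be non-empty")
    counts = Counter(text)
    n = len(text)
    # Only characters that actually occur are summed, so 0 * log2(0) never arises.
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


print(char_entropy("aabb"))  # two equally likely characters: 1.0
print(char_entropy("abcd"))  # four equally likely characters: 2.0
```

A string made of a single repeated character has entropy 0, and entropy grows as the characters become more numerous and more evenly distributed.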