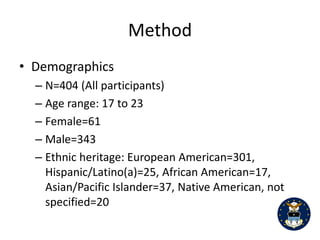

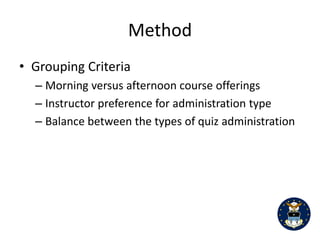

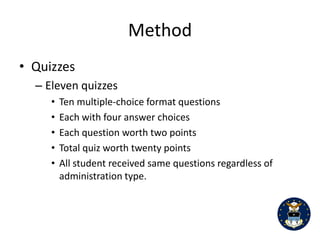

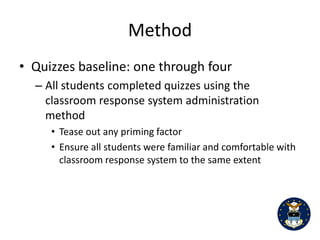

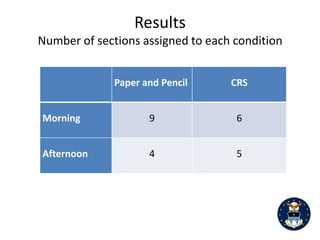

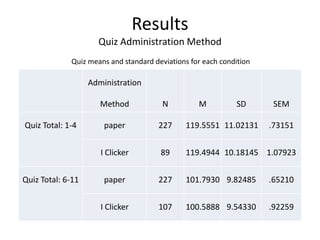

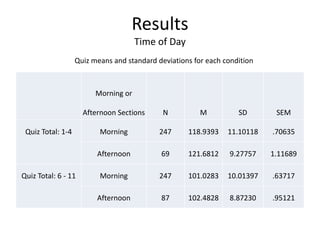

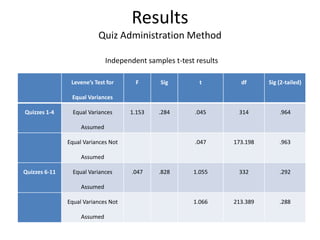

This study investigates whether student performance differs between quizzes administered with paper and pencil and quizzes administered through a classroom response system (CRS), and whether quiz timing (morning vs. afternoon) affects scores. The results show no significant differences in student performance between the two quiz methods or between the two times of day. Both administration methods can therefore be used interchangeably without affecting quiz scores, so instructors can choose a method based on preference without adversely affecting student outcomes.