The document discusses research, carried out with several collaborating institutions, on brain-inspired AI algorithms and on machine learning for improving mental health diagnostics and treatment, particularly for conditions such as schizophrenia and addiction. A focus is on machine learning applications in neuroimaging and on cognitive load assessment from EEG data. These include predictive models such as schizophrenia classifiers (86-93% accuracy) and EEG-based cognitive load prediction (91% accuracy), and their relevance to mental state recognition. The research emphasizes the importance of interpretability in AI models and explores adaptive representation learning inspired by neurogenesis.

![Mental State Recognition to Improve Mental Function

Detecting emotional & cognitive changes to predict responses to different types of input, e.g. music, video, news, ads, emails (both for mental health and for neuromarketing)

Safety: detecting changes in a driver’s alertness level (drowsiness, microsleeps) to prevent accidents

Computational psychiatry: a data-analytic approach to diagnosis based on objective measurements (the new Research Domain Criteria (RDoC) initiative by NIMH)

Our current focus: schizophrenia, addiction, Huntington’s, Alzheimer’s, Parkinson’s

“Psychiatric research is in crisis” [Wiecki et al. 2015]

AI 2 Brain:

Health & Productivity: mental-state-sensitive software monitoring cognitive load, focus/attention, and stress/anxiety](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-4-2048.jpg)

![Overview: Machine Learning in Neuroimaging

“Statistical biomarkers”:

[Cecchi et al, NIPS 2009]

[Rish et al, PLOS One, 2013]

[Carroll et al, Neuroimage 2009]

[Scheinberg&Rish, ECML 2010]

Schizophrenia classification: 86% to 93% accuracy

[Rish et al, Brain Informatics 2010]

[Rish et al, SPIE Med.Imaging 2012]

[Cecchi et al, PLOS Comp Bio 2012]

Cognitive state prediction in videogames: 70-95%

Pain perception: 70-80%, distributed activation patterns

[Honorio et al, AISTATS 2012]

[Rish et al, SPIE Med.Imaging 2016]

Cocaine addiction: evaluating potential treatments

[Bashivan et al, ICLR 2016]

EEG cognitive load prediction: 91% accuracy with recurrent ConvNets

Figure: a predictive model separating samples (+ and -) into “mental disorder” vs. “healthy”](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-5-2048.jpg)

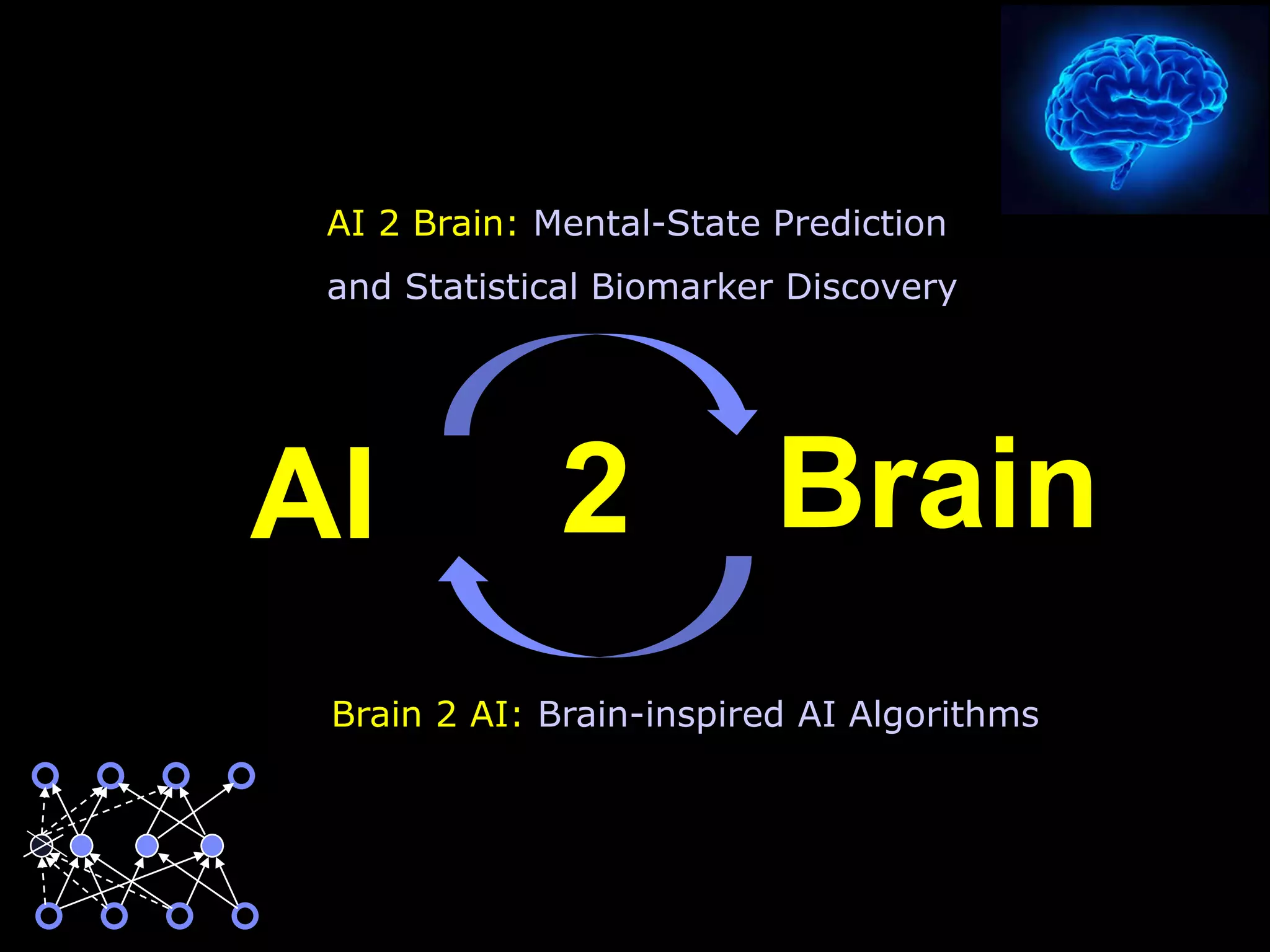

![MPH: A Stimulant for a Stimulant?

A potential therapeutic agent for cocaine use disorder (CUD)? (e.g., similar to using the nicotine patch for nicotine addiction or methadone for heroin addiction)

Methylphenidate Hydrochloride (MPH)

• Common ADHD treatment (Ritalin)

• Similarity to cocaine:

• chemical structure

• mechanism of action (blocks dopamine transporter)

• Difference: slower rate of clearance (90 vs 20 min), and thus lower dependence and abuse potential

• MPH has shown positive behavioral effects on CUD subjects [Levin 2007]

• MPH tends to normalize both task-related [Goldstein 2010] and resting-state functional activity in certain areas [Konova 2013]](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-7-2048.jpg)

![Resting-state Functional MRI

Image courtesy of the fMRI Research Center at Columbia University

Resting-state fMRI experiment: MPH vs. placebo [Konova et al 2013]

Features: functional network degrees

• Network link (i,j): exists if the correlation between the BOLD signals of voxels i and j exceeds a given threshold (e.g., > 0.6); a voxel's degree is its number of links

• Feature selection: univariate ranking based on p-values; multiple subsets of the top K features, with increasing K, are used to train classifiers](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-8-2048.jpg)
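The degree features and the univariate ranking described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline. The input layout (time points × voxels) and the names `degree_features`, `t_statistics`, `rank_features` and `top_k_subsets` are hypothetical. The choice of a pooled two-sample t-test as the source of the p-values is also an assumption, since the slide does not name the test.

```python
import numpy as np

def degree_features(bold, threshold=0.6):
    """Degree of every voxel in a thresholded functional network.

    bold: array of shape (n_timepoints, n_voxels) for one subject/session.
    A link (i, j) exists when corr(bold[:, i], bold[:, j]) > threshold.
    """
    corr = np.corrcoef(bold, rowvar=False)          # (n_voxels, n_voxels)
    adjacency = corr > threshold                    # NaN (constant voxel) compares False
    np.fill_diagonal(adjacency, False)              # ignore self-correlation
    return adjacency.sum(axis=1)                    # degree = number of links per voxel

def t_statistics(X, y):
    """Pooled-variance two-sample t statistic per feature (class 1 vs class 0)."""
    a, b = X[y == 1], X[y == 0]
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * a.var(axis=0, ddof=1)
                  + (n2 - 1) * b.var(axis=0, ddof=1)) / (n1 + n2 - 2)
    se = np.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    with np.errstate(divide="ignore", invalid="ignore"):
        t = (a.mean(axis=0) - b.mean(axis=0)) / se
    return np.nan_to_num(t, nan=0.0)                # 0/0 (constant feature) -> no evidence

def rank_features(X, y):
    """Feature indices ordered from smallest to largest two-sided p-value.

    All features share the same degrees of freedom, so sorting by |t|
    (descending) gives the same order as sorting by p-value.
    """
    return np.argsort(-np.abs(t_statistics(X, y)), kind="stable")

def top_k_subsets(X, y, ks):
    """Yield (k, X restricted to the k best-ranked features) for increasing k."""
    order = rank_features(X, y)
    for k in sorted(ks):
        yield k, X[:, order[:k]]
```

Every feature is tested on the same two groups, so all t statistics share one number of degrees of freedom. Ranking by |t| therefore reproduces the p-value ranking without needing a t-distribution CDF. To avoid optimistic error estimates, the ranking would normally be recomputed on the training portion of each cross-validation fold rather than on the full data set.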

![Classification Results: MPH Normalizes CUD’s Networks

[Rish, Bashivan, Cecchi, Goldstein, SPIE 2016]

MPH ‘normalizes’ CUD networks:

CUD subjects are harder to discriminate from controls (10-20% increase in classification error) under MPH than under placebo

MPH has a stronger effect on CUD subjects:

the MPH (M2) vs. placebo (P2) condition is much easier to discriminate for CUD subjects than for controls

Leave-one-out CV with Nearest Neighbor (NN), Linear SVM, Decision Tree (DT), Random Forest (RF), Logistic Regression (LR), Naïve Bayes (NB), Linear Discriminant Analysis (LDA)](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-9-2048.jpg)
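The leave-one-out protocol above can be illustrated with the simplest listed classifier, 1-nearest-neighbor. The sketch assumes Euclidean distance and uses hypothetical function names. The other classifiers on the slide (SVM, DT, RF, LR, NB, LDA) would plug in through the `predict` argument. In a full library setting, scikit-learn's `LeaveOneOut` splitter provides the same protocol.

```python
import numpy as np

def nearest_neighbor_predict(X_train, y_train, x):
    """1-NN: return the label of the training sample closest to x (Euclidean)."""
    distances = np.linalg.norm(X_train - x, axis=1)
    return y_train[np.argmin(distances)]        # ties go to the first closest sample

def leave_one_out_error(X, y, predict=nearest_neighbor_predict):
    """Fraction of samples misclassified when each one is held out in turn."""
    n = len(y)
    mistakes = 0
    for i in range(n):
        train = np.arange(n) != i               # boolean mask that drops sample i
        if predict(X[train], y[train], X[i]) != y[i]:
            mistakes += 1
    return mistakes / n

# Hypothetical toy data: two well-separated groups of 1-D features
X = np.array([[0.0], [0.1], [10.0], [10.1]])
y = np.array([0, 0, 1, 1])
print(leave_one_out_error(X, y))  # 0.0
```

On the slide's reading, a higher leave-one-out error for CUD vs. controls under MPH than under placebo means the two groups' network features have become harder to tell apart.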

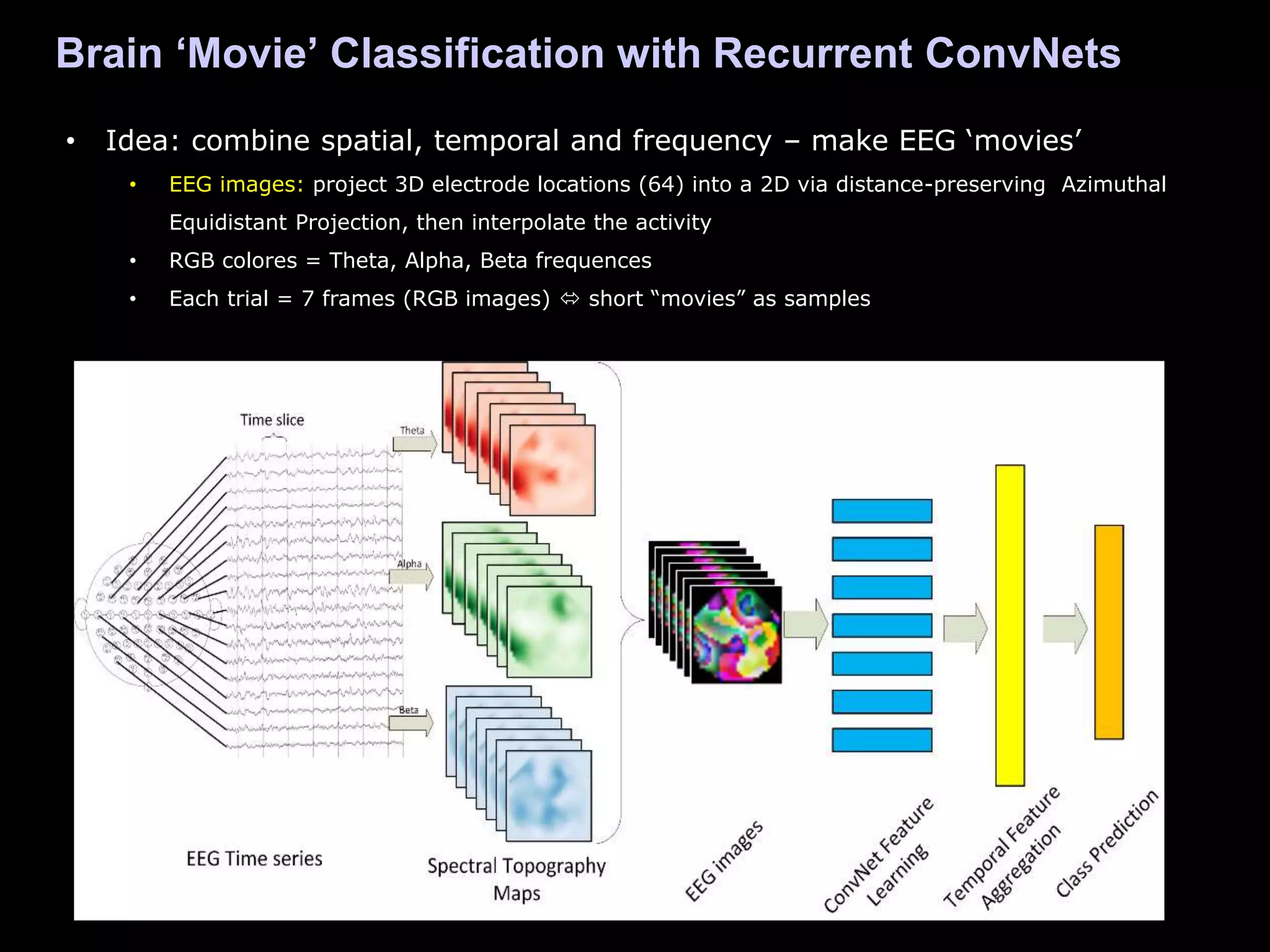

![Example 2: Working Memory Load Classification from EEG

EEG Experiment:

64-electrode EEG

Working Memory task, 4 levels of difficulty: 2, 4, 6, or 8 symbols to remember

13 subjects, 240 trials each (= 3120 trials)

[Bashivan, Rish, Yeasin, Codella, ICLR 2016]

Classification Problem:

given the time series recorded during each trial of the WM task, predict the WM load level

Data Samples: 2670 correctly answered trials (a subset of total 3120)

Feature Extraction: FFT to find spectral power within each electrode in three frequency bands: theta (4-8 Hz), alpha (8-13 Hz), and beta (13-30 Hz)

Evaluation: leave-one-subject-out (i.e., 13-fold) CV](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-10-2048.jpg)
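Here is a minimal sketch of the feature extraction and the evaluation split described above. It assumes each trial is stored as an (electrodes × samples) array with a known sampling rate `fs`. The band edges come from the slide. Several details are assumptions: treating each band as half-open (so 8 Hz counts as alpha and 13 Hz as beta), summing FFT power over the bins, and the function names.

```python
import numpy as np

# Band edges from the slide; half-open intervals [lo, hi) are an assumption.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}

def band_powers(trial, fs):
    """Spectral power per electrode in the theta, alpha and beta bands.

    trial: array (n_electrodes, n_samples); fs: sampling rate in Hz.
    Returns an array (n_electrodes, 3) with columns ordered theta, alpha, beta.
    """
    power = np.abs(np.fft.rfft(trial, axis=-1)) ** 2      # power spectrum per electrode
    freqs = np.fft.rfftfreq(trial.shape[-1], d=1.0 / fs)   # bin frequencies in Hz
    columns = []
    for lo, hi in BANDS.values():
        in_band = (freqs >= lo) & (freqs < hi)
        columns.append(power[:, in_band].sum(axis=-1))     # total power in the band
    return np.stack(columns, axis=-1)

def leave_one_subject_out(subject_ids):
    """Yield (train_idx, test_idx), holding out all trials of one subject per fold."""
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        yield (np.flatnonzero(subject_ids != subject),
               np.flatnonzero(subject_ids == subject))

fs = 500                                          # hypothetical sampling rate
t = np.arange(fs) / fs                            # 1 s of samples
trial = np.vstack([np.sin(2 * np.pi * 10 * t),    # electrode 0: 10 Hz (alpha)
                   np.sin(2 * np.pi * 6 * t)])    # electrode 1: 6 Hz (theta)
print(band_powers(trial, fs).argmax(axis=1))      # [1 0]
```

With one subject ID per trial, `leave_one_subject_out` yields one fold per subject, i.e. 13 folds for this data set. The three band powers presumably form the three channels of the 32 x 32 input image used later. How electrodes are mapped to pixels is not described on these slides, so it is not sketched here.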

![Baseline: Non-temporal Approach with ConvNets

• FFT over the complete trial: a single image for each trial

• VGG-style ConvNets [Simonyan & Zisserman, 2015]

• Conv layers with 3 x 3 receptive fields

ConvNet Configurations (input: 32 x 32 3-channel image; Conv3-N = 3 x 3 convolution with N filters):

| Architecture | Stack 1 | Stack 2 | Stack 3 | Number of parameters | Test Error (%) |
|---|---|---|---|---|---|
| A | 2 x Conv3-32, maxpool | - | - | ~10k | 13.05 |
| B | 2 x Conv3-32, maxpool | 2 x Conv3-64, maxpool | - | ~65.5k | 13.17 |
| C | 2 x Conv3-32, maxpool | 2 x Conv3-64, maxpool | Conv3-128, maxpool | ~139.4k | 13.91 |
| D | 4 x Conv3-32, maxpool | 2 x Conv3-64, maxpool | Conv3-128, maxpool | ~158k | 12.39 |

• 4 architectures of increasing depth; deeper is (slightly) better](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-12-2048.jpg)
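As a sanity check on the ConvNet configurations, count only the weights and biases of the 3 x 3 convolutional layers. This is an assumption: any fully connected layers are excluded, and maxpool layers have no parameters. Under it, the approximate parameter counts on the slide are reproduced. The layouts assumed are:

- A: two Conv3-32.
- B: two Conv3-32, then two Conv3-64.
- C: as B plus one Conv3-128.
- D: four Conv3-32, two Conv3-64, one Conv3-128.

The helper names are hypothetical.

```python
def conv3_params(in_channels, out_channels):
    """Weights (3 x 3 x in x out) plus one bias per output filter."""
    return 3 * 3 * in_channels * out_channels + out_channels

def conv_stack_params(filters_per_layer, in_channels=3):
    """Total parameters of a sequence of 3 x 3 conv layers (maxpool adds none)."""
    total = 0
    for out_channels in filters_per_layer:
        total += conv3_params(in_channels, out_channels)
        in_channels = out_channels              # next layer sees this layer's filters
    return total

CONFIGS = {
    "A": [32, 32],
    "B": [32, 32, 64, 64],
    "C": [32, 32, 64, 64, 128],
    "D": [32, 32, 32, 32, 64, 64, 128],
}

for name, layers in CONFIGS.items():
    print(name, conv_stack_params(layers))
# A 10144
# B 65568
# C 139424
# D 157920
```

The totals round to ~10k, ~65.5k, ~139.4k and ~158k. That agreement is what pins down the layer layout of each architecture; D has 7 conv layers, matching the "best ConvNet (7-layer)" reused in the recurrent models.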

![Adding Time is Better: Recurrent ConvNets

Best result: 8.9% error discriminating among 4 levels of cognitive load, achieved by recurrent ConvNets with LSTM + time convolution

• EEG time series for each trial split into 7 windows (0.5 sec); FFT on each time window to get an image as before

• Best ConvNet (7-layer) used as C component

• All 7 ConvNets share parameters

• Video classification architectures from [Ng et al, CVPR 2015]:

• Temporal Maxpool: max pool over time frames

• Temporal Convolution: 1D convolution over time frames

• LSTM: sequence mapping over time frames

• Mixed LSTM/1D-Conv: combination of both LSTM and 1D-Conv architectures

| Architecture | Test Error (%) | Validation Error (%) | Number of parameters |
|---|---|---|---|
| RBF SVM | 15.34 | - | - |
| L1-logistic regression | 15.32 | - | - |
| Random Forest | 12.59 | - | - |
| DBN | 14.96 | 8.37 | 1.02 mil |
| ConvNet+Maxpool | 14.80 | 8.48 | 1.21 mil |
| ConvNet+1D-Conv | 11.32 | 9.28 | 441 k |
| ConvNet+LSTM | 10.54 | 6.10 | 1.34 mil |
| ConvNet+LSTM/1D-Conv | 8.89 | 8.39 | 1.62 mil |

[Bashivan, Rish, Yeasin, Codella, ICLR 2016]](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-13-2048.jpg)
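The temporal variants above can be sketched with NumPy, assuming each trial is an (electrodes × samples) array. The key ideas are two:

- One set of encoder weights is reused for all 7 windows (parameter sharing).
- The resulting sequence of per-window feature vectors is then aggregated over time, either by a max over frames or by a 1D convolution along the time axis.

Several parts are hypothetical simplifications. The encoder is a linear map with ReLU standing in for the ConvNet. The per-window feature is a crude mean-square power instead of the FFT image. The LSTM variants are not shown. All function names are hypothetical.

```python
import numpy as np

def split_into_windows(trial, fs, n_windows=7, window_sec=0.5):
    """Cut a (n_electrodes, n_samples) trial into consecutive, non-overlapping windows."""
    w = int(round(window_sec * fs))
    if trial.shape[-1] < n_windows * w:
        raise ValueError("trial is shorter than n_windows * window_sec")
    return [trial[:, i * w:(i + 1) * w] for i in range(n_windows)]

def shared_frame_encoder(frame_features, W):
    """Hypothetical stand-in for the ConvNet: the SAME weights W encode every frame."""
    return np.maximum(frame_features @ W, 0.0)               # linear map + ReLU

def temporal_maxpool(frames):
    """(n_frames, d) -> (d,): element-wise max over time frames."""
    return frames.max(axis=0)

def temporal_conv1d(frames, kernel):
    """'Valid' 1D convolution over time (cross-correlation, as in most DL libraries).

    frames: (n_frames, d_in); kernel: (k, d_in, d_out) -> (n_frames - k + 1, d_out).
    """
    k = kernel.shape[0]
    steps = frames.shape[0] - k + 1
    return np.stack([np.einsum("kd,kde->e", frames[t:t + k], kernel)
                     for t in range(steps)])

# Hypothetical example: 64 electrodes, 3.5 s at 100 Hz -> 7 windows of 0.5 s
rng = np.random.default_rng(0)
trial = rng.standard_normal((64, 350))
windows = split_into_windows(trial, fs=100)
features = np.stack([(win ** 2).mean(axis=1) for win in windows])   # (7, 64) per-window power
W = rng.standard_normal((64, 16))                # one weight matrix shared by all frames
frames = shared_frame_encoder(features, W)       # (7, 16)
pooled = temporal_maxpool(frames)                # (16,)
convolved = temporal_conv1d(frames, rng.standard_normal((3, 16, 8)))  # (5, 8)
print(frames.shape, pooled.shape, convolved.shape)   # (7, 16) (16,) (5, 8)
```

Sharing `W` across frames keeps the encoder's parameter count independent of the number of windows; only the temporal aggregation layer adds parameters.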

![Interpretability via Deconvolution

Code: https://github.com/pbashivan/EEGLearn

Using the deconvnet of [Zeiler et al] to map features back to the brain images

Back projections: maps obtained by applying the deconvnet to a feature map, displaying the structures in the input image that excite that particular feature map

Some of these features correspond to well-known electrophysiological markers of cognitive load:

First-layer features: one (1st stack, kernel 7) captured widespread theta activity and another (1st stack, kernel 23) frontal beta activity

Second- and third-layer features: frontal theta/beta (2nd stack, kernel 7; 3rd stack, kernels 60 and 112) as well as parietal alpha (2nd stack, kernel 29)

Frontal theta and beta activity, as well as parietal alpha, are the most prominent markers of cognitive/memory load in the neuroscience literature [Bashivan et al., 2015; Jensen et al., 2002; Onton et al., 2005; Tallon-Baudry et al., 1999]

Input EEG images: top 9 images with highest feature activations across the training set

Figure panels: Layer 4, Layer 6, Layer 7](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-14-2048.jpg)
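Selecting the "top 9 images with highest feature activations" is a simple ranking step. This sketch assumes the feature map's responses to all training inputs are available as one array. It scores each input by its maximum activation over spatial positions, which is also an assumption. The function name is hypothetical, and the deconvnet back-projection itself is not shown.

```python
import numpy as np

def top_activating_inputs(activations, k=9):
    """Indices of the k inputs that most strongly activate one feature map.

    activations: array (n_inputs, H, W) with the feature map's response to each
    training input; each input is scored by its maximum activation.
    """
    scores = activations.reshape(len(activations), -1).max(axis=1)
    return np.argsort(scores)[::-1][:k]          # highest scores first

rng = np.random.default_rng(0)
acts = rng.random((100, 8, 8))        # hypothetical: 100 training images, 8 x 8 feature map
print(top_activating_inputs(acts))    # indices of the 9 strongest inputs
```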

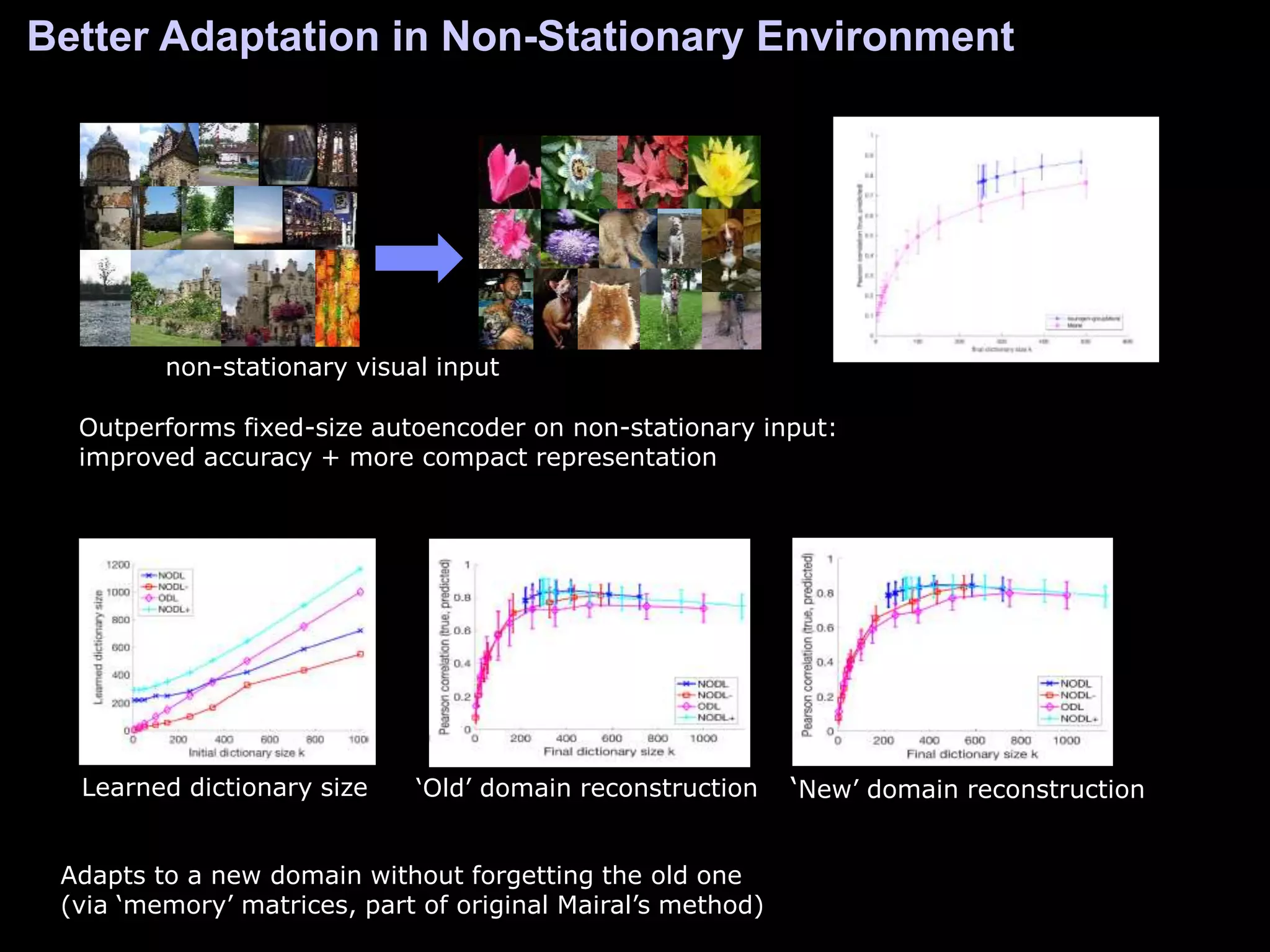

![Brain 2 AI:

Adult Neurogenesis: Inspiration for Adaptive Representation Learning

• Predominant in the dentate gyrus of the hippocampus and in the olfactory bulb (pictured: olfactory bulb, dentate gyrus)

• Current theories: the hippocampus functions as an autoencoder to evoke memories; a similar encoding function is suggested in the olfactory bulb

• Our computational model: sparse linear autoencoder (online dictionary learning of Mairal et al) + dynamic addition (birth) and deletion (death) of hidden nodes

[Garg, Rish, Cecchi, Lozano, ICLR 2017]

Figure: a data matrix of n samples by p variables is approximated by sparse representations over a dictionary of m basis vectors. Viewed as an autoencoder, input $x$ is encoded by the hidden nodes $c$ and reconstructed as the output $x'$, with the link weights forming the ‘dictionary’ $D$: $x' = Dc \approx x$, where $D \in \mathbb{R}^{p \times m}$ and $c \in \mathbb{R}^{m}$ is sparse](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-15-2048.jpg)
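The model on the slide is a sparse linear autoencoder trained by online dictionary learning, plus birth and death of hidden nodes. It can be sketched as follows. This is an illustration under stated assumptions, not the algorithm of Garg et al.:

- Sparse codes are computed with ISTA.
- The dictionary update follows the block-coordinate step from Mairal et al.'s online dictionary learning.
- Birth rule (hypothetical): add `n_births` atoms copied from the worst-reconstructed residuals when a batch's relative error exceeds `birth_error`.
- Death rule (hypothetical): remove atoms unused in the current batch.

All names and thresholds are hypothetical.

```python
import numpy as np

def sparse_codes(D, X, lam=0.1, n_iter=200):
    """ISTA for min_C 0.5 * ||X - C D^T||_F^2 + lam * ||C||_1.

    D: (p, m) dictionary with one basis vector per column; X: (n, p) samples.
    Returns C: (n, m), one sparse code (hidden-node activity) per sample.
    """
    L = np.linalg.norm(D, 2) ** 2 + 1e-12                      # Lipschitz constant of the gradient
    C = np.zeros((X.shape[0], D.shape[1]))
    for _ in range(n_iter):
        Z = C - ((C @ D.T - X) @ D) / L                         # gradient step on the squared error
        C = np.sign(Z) * np.maximum(np.abs(Z) - lam / L, 0.0)   # soft-thresholding (L1 prox)
    return C

def update_dictionary(D, A, B):
    """One block-coordinate pass of Mairal et al.'s online dictionary update.

    A = sum of c c^T and B = sum of x c^T over all samples seen so far.
    """
    for j in range(D.shape[1]):
        if A[j, j] > 1e-10:                                     # skip atoms with no usage yet
            u = D[:, j] + (B[:, j] - D @ A[:, j]) / A[j, j]
            D[:, j] = u / max(np.linalg.norm(u), 1.0)           # keep each atom in the unit ball
    return D

def neurogenesis_dictionary_learning(batches, n_atoms=5, lam=0.1,
                                     birth_error=0.3, n_births=2, seed=0):
    """Online dictionary learning with hypothetical birth/death rules for atoms."""
    rng = np.random.default_rng(seed)
    p = batches[0].shape[1]
    D = rng.standard_normal((p, n_atoms))
    D /= np.linalg.norm(D, axis=0)
    A, B = np.zeros((n_atoms, n_atoms)), np.zeros((p, n_atoms))
    for X in batches:
        C = sparse_codes(D, X, lam)
        R = X - C @ D.T                                         # reconstruction residual
        if np.linalg.norm(R) / np.linalg.norm(X) > birth_error:
            # Birth: new atoms copied from the worst-reconstructed residuals.
            worst = np.argsort(np.linalg.norm(R, axis=1))[-n_births:]
            new = R[worst].T
            new = new / (np.linalg.norm(new, axis=0) + 1e-12)
            k = new.shape[1]
            D = np.hstack([D, new])
            A = np.pad(A, ((0, k), (0, k)))
            B = np.pad(B, ((0, 0), (0, k)))
            C = sparse_codes(D, X, lam)                         # re-encode with the grown dictionary
        A += C.T @ C
        B += X.T @ C
        D = update_dictionary(D, A, B)
        # Death: drop atoms that no sample in this batch used (keep at least one).
        alive = np.abs(C).sum(axis=0) > 0
        if alive.any():
            D, A, B = D[:, alive], A[np.ix_(alive, alive)], B[:, alive]
    return D

# Hypothetical non-stationary stream: the generating basis changes halfway through.
rng = np.random.default_rng(1)
old_basis, new_basis = rng.standard_normal((10, 3)), rng.standard_normal((10, 3))
stream = [rng.standard_normal((30, 3)) @ old_basis.T for _ in range(3)]
stream += [rng.standard_normal((30, 3)) @ new_basis.T for _ in range(3)]
D = neurogenesis_dictionary_learning(stream)
print(D.shape)    # (10, number of surviving atoms)
```

The point of birth is that a fixed-size dictionary fitted to the old regime reconstructs the new regime poorly. Growing atoms from the poorly explained residuals gives the model capacity where the error is. Death keeps the dictionary from accumulating atoms that no longer explain any data.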

![References

[Garg, Rish, Cecchi, Lozano 2016; submitted] S. Garg, I. Rish, G. Cecchi, A. Lozano. Neurogenesis-inspired Dictionary Learning: Online Model Adaptation in a Changing World. Submitted to ICLR 2017.

[Bashivan et al, ICLR 2016] P. Bashivan, I. Rish, M. Yeasin, N. Codella. Learning Representations from EEG with Deep Recurrent-Convolutional Neural Networks. ICLR 2016: International Conference on Learning Representations.

[Bashivan et al, 2015] Mental State Recognition via Wearable EEG. Proc. of the MLINI-2015 workshop at NIPS 2015.

[Heisig et al, 2014] S. Heisig, G. Cecchi, R. Rao, I. Rish. Augmented Human: Human OS for Improved Mental Function. AAAI 2014 Workshop on Cognitive Computing and Augmented Human Intelligence.

[Neuropsychopharmacology, 2014] G. Bedi, G. A. Cecchi, D. Fernandez Slezak, F. Carrillo, M. Sigman, H. de Wit. A Window into the Intoxicated Mind? Speech as an Index of Psychoactive Drug Effects. Neuropsychopharmacology, 2014.

[NPJ 2015] G. Bedi, F. Carrillo, G. A. Cecchi, D. F. Slezak, M. Sigman, N. B. Mota, S. Ribeiro, D. C. Javitt, M. Copelli, C. M. Corcoran. Automated analysis of free speech predicts psychosis onset in high-risk youths. NPJ Schizophrenia, 2015.

[PLoS ONE, 2013] I. Rish, G. Cecchi, B. Thyreau, B. Thirion, M. Plaze, M.-L. Paillere-Martinot, C. Martelli, J.-L. Martinot, J.-B. Poline. Schizophrenia as a Network Disease: Disruption of Emergent Brain Function in Patients with Auditory Hallucinations. PLoS ONE 8(1), e50625, 2013.

[PLoS One, 2012] N. B. Mota, N. A. P. Vasconcelos, N. Lemos, A. C. Pieretti, O. Kinouchi, G. A. Cecchi, M. Copelli, S. Ribeiro. Speech Graphs Provide a Quantitative Measure of Thought Disorder in Psychosis. PLoS ONE, 2012.

[Rish et al, SPIE 2016] I. Rish, P. Bashivan, G. A. Cecchi, R. Z. Goldstein. Evaluating Effects of Methylphenidate on Brain Activity in Cocaine Addiction: A Machine-Learning Approach. SPIE Medical Imaging, 2016.

[SPIE Med.Imaging 2012] I. Rish, G. A. Cecchi, K. Heuton, M. N. Baliki, A. V. Apkarian. Sparse regression analysis of task-relevant information distribution in the brain. SPIE Medical Imaging, 2012.

[AISTATS 2012] J. Honorio, D. Samaras, I. Rish, G. A. Cecchi. Variable Selection for Gaussian Graphical Models. AISTATS, 2012.

[PLoS Comp Bio 2012] G. A. Cecchi, L. Huang, J. A. Hashmi, M. Baliki, M. V. Centeno, I. Rish, A. V. Apkarian. Predictive Dynamics of Human Pain Perception. PLoS Computational Biology 8(10), e1002719, 2012.

[Brain Informatics 2010] I. Rish, G. Cecchi, M. N. Baliki, A. V. Apkarian. Sparse Regression Models of Pain Perception. Proc. of Brain Informatics (BI-2010), Toronto, Canada, August 2010.

[NeuroImage, 2009] M. K. Carroll, G. A. Cecchi, I. Rish, R. Garg, A. R. Rao. Prediction and interpretation of distributed neural activity with sparse models. NeuroImage 44(1), 112-122, 2009.

[NIPS, 2009] G. A. Cecchi, I. Rish, B. Thyreau, B. Thirion, M. Plaze, M.-L. Paillere-Martinot, C. Martelli, J.-L. Martinot, J.-B. Poline. Discriminative network models of schizophrenia. Advances in Neural Information Processing Systems (NIPS 2009), pp. 252-260, 2009.](https://image.slidesharecdn.com/irinamlconfnyc2017rish-170324185639/75/Irina-Rish-Researcher-IBM-Watson-at-MLconf-NYC-2017-18-2048.jpg)