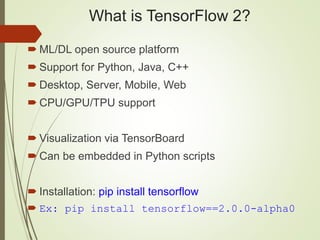

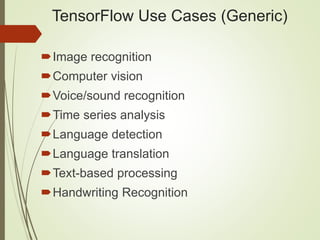

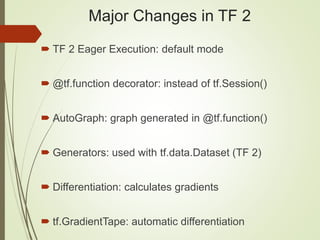

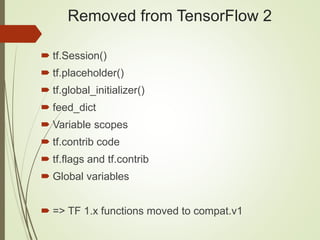

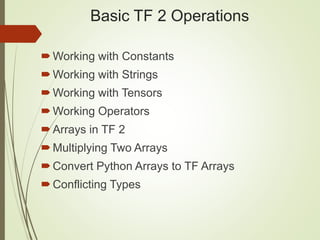

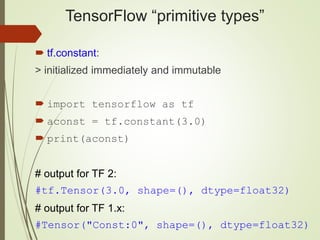

This document is an introductory overview of TensorFlow 2. It discusses the major changes from TensorFlow 1.x, such as eager execution and the tf.function decorator, and covers working with tensors, arrays, datasets, and loops. It also demonstrates common operations such as arithmetic, reshaping, and normalization, and closes with a brief introduction to Keras and neural networks in TensorFlow 2.
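
The common operations named above (arithmetic, reshaping, normalization) can be sketched in a few lines. The input values and the choice of min-max scaling are assumptions made only for illustration; the slides may use different data or a different normalization.

```python
import tensorflow as tf

# Hypothetical 2x3 input, chosen only for illustration.
x = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])

# Element-wise arithmetic runs immediately under eager execution.
y = x * 2.0 + 1.0  # [[3, 5, 7], [9, 11, 13]]

# Reshape 2x3 into 3x2; the element count (6) must stay the same.
reshaped = tf.reshape(y, (3, 2))

# Min-max normalization (an assumed choice): rescale values into [0, 1].
y_min = tf.reduce_min(y)
y_max = tf.reduce_max(y)
normalized = (y - y_min) / (y_max - y_min)

print(reshaped.numpy())
print(normalized.numpy())
```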

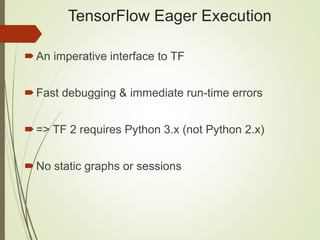

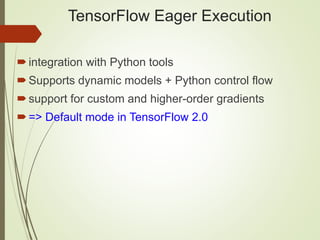

![TensorFlow Eager Execution

import tensorflow as tf

x = [[2.]]

# m = tf.matmul(x, x) also works; it is an alias of tf.linalg.matmul

m = tf.linalg.matmul(x, x)

print("m:",m)

print("m:",m.numpy())

#Output:

#m: tf.Tensor([[4.]], shape=(1, 1), dtype=float32)

#m: [[4.]]](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-17-320.jpg)
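
For comparison with the eager call on this slide, here is a minimal sketch of the same multiplication wrapped in tf.function. The function name square_matrix is hypothetical; the point is only that the decorated function is traced into a graph on its first call and returns the same value as the eager version.

```python
import tensorflow as tf

@tf.function  # traces the Python function into a graph on first call
def square_matrix(a):
    return tf.linalg.matmul(a, a)

x = tf.constant([[2.0]])

eager_result = tf.linalg.matmul(x, x)  # evaluated immediately (eager)
graph_result = square_matrix(x)        # evaluated through the traced graph

print("eager:", eager_result.numpy())
print("graph:", graph_result.numpy())
```

Both calls return an ordinary tensor, so .numpy() works on either result.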

![tf.data.Dataset.from_tensors()

import tensorflow as tf

#combine the input into one element

t1 = tf.constant([[1, 2], [3, 4]])

ds1 = tf.data.Dataset.from_tensors(t1)

# output: [[1, 2], [3, 4]]](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-33-320.jpg)

![tf.data.Dataset.from_tensor_slices()

import tensorflow as tf

#separate element for each item

t2 = tf.constant([[1, 2], [3, 4]])

ds1 = tf.data.Dataset.from_tensor_slices(t2)

# output: [1, 2], [3, 4]](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-34-320.jpg)
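
The difference between the two constructors is easiest to see by iterating both datasets built from the same tensor. This is a small sketch; the variable names are illustrative and not taken from the slides.

```python
import tensorflow as tf

t = tf.constant([[1, 2], [3, 4]])

# from_tensors: the whole tensor becomes a single dataset element.
whole = tf.data.Dataset.from_tensors(t)

# from_tensor_slices: one element per slice along the first axis.
rows = tf.data.Dataset.from_tensor_slices(t)

whole_elems = [e.numpy().tolist() for e in whole]
row_elems = [e.numpy().tolist() for e in rows]

print(len(whole_elems), whole_elems)  # 1 element of shape (2, 2)
print(len(row_elems), row_elems)      # 2 elements of shape (2,)
```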

![TF 1.x map() and take()

import tensorflow as tf # tf 1.12.0

tf.enable_eager_execution()

ds = tf.data.TextLineDataset("file.txt")

ds = ds.map(lambda line: tf.string_split([line]).values)

try:

    for value in ds.take(2):

        print("value:",value)

except tf.errors.OutOfRangeError:

    pass](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-39-320.jpg)
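
A rough TF 2 counterpart of the TF 1.x snippet might look like the sketch below. It assumes tf.strings.split in place of tf.string_split, drops the enable_eager_execution() call (eager mode is the TF 2 default), and writes a hypothetical two-line temporary file so the example is self-contained. It is not necessarily the code used in the deck.

```python
import os
import tempfile

import tensorflow as tf

# Hypothetical input file, created only so the sketch runs on its own.
path = os.path.join(tempfile.mkdtemp(), "file.txt")
with open(path, "w") as f:
    f.write("this is file line #1\nthis is file line #2\n")

ds = tf.data.TextLineDataset(path)

# Splitting a scalar string on whitespace yields a 1-D tensor of tokens.
ds = ds.map(lambda line: tf.strings.split(line))

# Iterating a finite dataset simply stops at the end, so no try/except
# around OutOfRangeError is needed here.
tokens = [[t.decode("utf-8") for t in value.numpy()] for value in ds.take(2)]
print(tokens)
```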

![TF 2 map() and take(): output

('value:', <tf.Tensor: id=16, shape=(5,),

dtype=string, numpy=array(['this', 'is',

'file', 'line', '#1'], dtype=object)>)

('value:', <tf.Tensor: id=18, shape=(5,),

dtype=string, numpy=array(['this', 'is',

'file', 'line', '#2'], dtype=object)>)](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-41-320.jpg)

![TF 2 map() and take()

import tensorflow as tf

import numpy as np

x = np.array([[1],[2],[3],[4]])

# make a ds from a numpy array

ds = tf.data.Dataset.from_tensor_slices(x)

ds = ds.map(lambda x: x*2).map(lambda x: x+1).map(lambda x: x**3)

for value in ds.take(4):

    print("value:",value)](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-42-320.jpg)

![TF 2 map() and take(): output

value: tf.Tensor([27], shape=(1,), dtype=int64)

value: tf.Tensor([125], shape=(1,), dtype=int64)

value: tf.Tensor([343], shape=(1,), dtype=int64)

value: tf.Tensor([729], shape=(1,), dtype=int64)](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-43-320.jpg)
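
The three chained map() calls compute ((x * 2) + 1) ** 3 for each element, so they can also be written as a single map. The sketch below is one possible restatement, not the deck's code, and collects the results into a plain list to make the values easy to check.

```python
import numpy as np
import tensorflow as tf

x = np.array([[1], [2], [3], [4]])
ds = tf.data.Dataset.from_tensor_slices(x)

# One fused transformation, equivalent to the three chained maps:
# 1 -> 3 -> 27, 2 -> 5 -> 125, 3 -> 7 -> 343, 4 -> 9 -> 729
ds = ds.map(lambda v: (v * 2 + 1) ** 3)

values = [v.numpy().tolist() for v in ds]
print(values)
```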

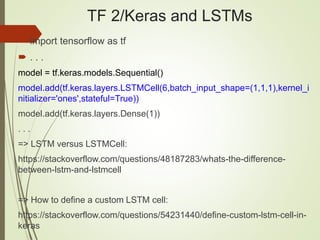

![TF 2/Keras and MLPs

import tensorflow as tf

. . .

model = tf.keras.models.Sequential([

    tf.keras.layers.Dense(10, input_dim=num_pixels, activation='relu'),

    tf.keras.layers.Dense(30, activation='relu'),

    tf.keras.layers.Dense(10, activation='relu'),

    tf.keras.layers.Dense(num_classes, activation='softmax')

])

# compile() is a separate call on the model, not an entry in the layer list

model.compile(tf.keras.optimizers.Adam(learning_rate=0.01),

    loss='categorical_crossentropy', metrics=['accuracy'])](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-63-320.jpg)

![TF 2/Keras and MLPs

import tensorflow as tf

. . .

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Dense(10, input_dim=num_pixels,

activation='relu'))

model.add(tf.keras.layers.Dense(30, activation='relu'))

model.add(tf.keras.layers.Dense(10, activation='relu'))

model.add(tf.keras.layers.Dense(num_classes,

activation='softmax'))

model.compile(tf.keras.optimizers.Adam(learning_rate=0.01),

loss='categorical_crossentropy', metrics=['accuracy'])](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-64-320.jpg)
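
To show the MLP end to end, the sketch below fits the same layer stack on random stand-in data. The values num_pixels = 784 and num_classes = 10 are assumptions (the slides elide them), the data is synthetic, and tf.keras.Input is used in place of input_dim; the run only demonstrates the compile, fit, and predict calls, not a meaningful accuracy.

```python
import numpy as np
import tensorflow as tf

# Assumed values: 28x28 images flattened to 784 features, 10 classes.
num_pixels, num_classes = 784, 10

model = tf.keras.models.Sequential([
    tf.keras.Input(shape=(num_pixels,)),
    tf.keras.layers.Dense(10, activation="relu"),
    tf.keras.layers.Dense(30, activation="relu"),
    tf.keras.layers.Dense(10, activation="relu"),
    tf.keras.layers.Dense(num_classes, activation="softmax"),
])
model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),
              loss="categorical_crossentropy", metrics=["accuracy"])

# Random stand-in data with one-hot labels (not a real dataset).
rng = np.random.default_rng(0)
x_train = rng.random((64, num_pixels)).astype("float32")
labels = rng.integers(0, num_classes, size=64)
y_train = tf.keras.utils.to_categorical(labels, num_classes)

history = model.fit(x_train, y_train, epochs=1, batch_size=16, verbose=0)

# Each output row is a probability distribution over the classes.
probs = model.predict(x_train[:4], verbose=0)
print(probs.shape)
```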

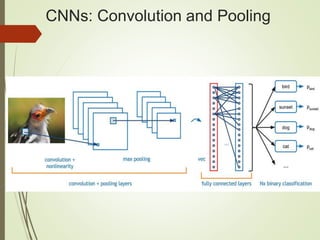

![TF 2/Keras and CNNs

import tensorflow as tf

. . .

model = tf.keras.models.Sequential([

    tf.keras.layers.Conv2D(30, (5,5), input_shape=(28,28,1), activation='relu'),

    tf.keras.layers.MaxPooling2D(pool_size=(2, 2)),

    tf.keras.layers.Flatten(),

    tf.keras.layers.Dense(500, activation='relu'),

    tf.keras.layers.Dropout(0.5),

    tf.keras.layers.Dense(num_classes, activation='softmax')

])](https://image.slidesharecdn.com/gdgsf-tf2-191120070051/85/Introduction-to-TensorFlow-2-and-Keras-66-320.jpg)
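
A quick way to sanity-check the CNN is to push a random batch through it and look at the output shape and parameter count. The sketch assumes num_classes = 10 (the slide does not give it) and uses tf.keras.Input rather than input_shape; the shape comments follow from the default valid padding and unit strides.

```python
import tensorflow as tf

num_classes = 10  # assumed; not specified on the slide

model = tf.keras.models.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(30, (5, 5), activation="relu"),  # -> (24, 24, 30)
    tf.keras.layers.MaxPooling2D(pool_size=(2, 2)),         # -> (12, 12, 30)
    tf.keras.layers.Flatten(),                              # -> 4320 features
    tf.keras.layers.Dense(500, activation="relu"),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(num_classes, activation="softmax"),
])

# Random batch of two 28x28 single-channel images (stand-in data).
batch = tf.random.uniform((2, 28, 28, 1))

# training=False disables dropout for this forward pass.
probs = model(batch, training=False)

print(probs.shape)
print(model.count_params())
```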