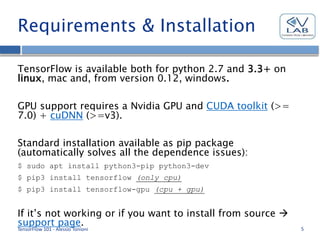

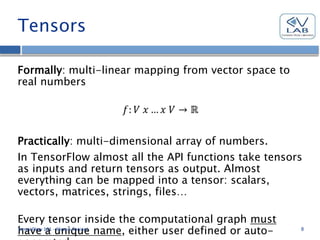

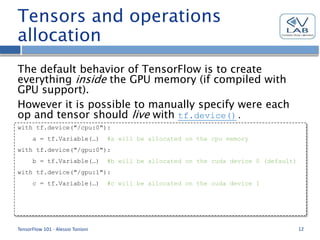

This document introduces TensorFlow, an open source software library for numerical computation using data flow graphs. A graph is composed of nodes, which are operations on data, and edges, which are the multidimensional data arrays (tensors) passed between operations. The document also lists pros and cons of TensorFlow and covers higher-level APIs, requirements and installation, program structure, tensors, variables, operations, and other key concepts.

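![TensorFlow Graph

TensorFlow 101 - Alessio Tonioni 7

Figure: data flow graph in which X [32,128] and W [128,10] feed a multiplication node (*), whose [32,10] result is added (+) to b [10] to give y.](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-7-320.jpg)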
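The shapes in the graph can be checked with a small sketch. It uses plain NumPy rather than TensorFlow, and the random values are arbitrary placeholders; only the names `X`, `W`, `b`, `y` and the shapes come from the slide.

```python
import numpy as np

rng = np.random.default_rng(0)

# Shapes taken from the figure; the values are arbitrary (an assumption).
X = rng.normal(size=(32, 128))   # mini-batch of 32 examples, 128 features each
W = rng.normal(size=(128, 10))   # weights mapping 128 features to 10 outputs
b = rng.normal(size=(10,))       # one bias per output

product = X @ W                  # the "*" node: [32,128] x [128,10] -> [32,10]
y = product + b                  # the "+" node: b is broadcast over the 32 rows

print(product.shape, y.shape)
```

The inner dimensions (128) must agree for the multiplication, and the bias length must match the last dimension of the product for the addition to broadcast.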

![Tensors

Three main types of tensors in TensorFlow (tensors_type.ipynb):

• tf.constant(): tensor with a constant, immutable value. After creation it has a shape, a datatype and a value.

#graph definition

a = tf.constant([[1,0],[0,1]], name="const_1")

…

#graph evaluation inside session

#constants are automatically initialized

a_val = sess.run(a)

#a_val is a numpy array with shape=(2,2)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-9-320.jpg)

![Tensors

• tf.Variable(): tensor with variable (and trainable) values. At creation time it can have a shape, a data type and an initial value. The value can be changed only by other TensorFlow ops. All variables must be initialized inside a session before use.

#graph definition

a = tf.Variable([[1,0],[0,1]], trainable=False, name="var_1")

…

#graph evaluation inside session

#manual initialization

sess.run(tf.global_variables_initializer())

a_val = sess.run(a)

#a_val is a numpy array with shape=(2,2)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-10-320.jpg)

![Tensors

• tf.placeholder(): dummy tensor node. Like a variable, it has a shape and a datatype, but it is not trainable and has no initial value. A placeholder is a bridge between Python and TensorFlow: it takes a value only when it is populated inside a session through the feed_dict argument of the session.run() method.

#graph definition

a = tf.placeholder(tf.int64, shape=(2,2), name="placeholder_1")

…

#graph evaluation inside session

#a takes the value passed by the feed_dict

a_val = sess.run(a, feed_dict={a:[[1,0],[0,1]]})

#a_val is a numpy array with shape=(2,2)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-11-320.jpg)

![Input Queues

Seems too complicated? Use tf.train.batch() or tf.train.shuffle_batch() instead!

Both functions take care of creating and handling an input queue for the examples. Moreover, they automatically spawn multiple threads to keep the queue full.

image = tf.get_variable(…) #tensor containing a single image

label = tf.get_variable(…) #tensor with the label associated with the image

#create two mini-batches of 16 examples, one filled with images and one with labels

image_batch, label_batch = tf.train.shuffle_batch([image, label], batch_size=16, num_threads=16, capacity=5000, min_after_dequeue=1000)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-19-320.jpg)
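The roles of `capacity` and `min_after_dequeue` can be illustrated with a toy shuffle buffer in plain Python. This is a single-threaded sketch of the general idea, not how TensorFlow implements its queues. The helper name `shuffle_batches`, the example data, and the choice to drop a final incomplete batch are all assumptions made for illustration.

```python
import random


def shuffle_batches(examples, batch_size, capacity, min_after_dequeue, seed=0):
    """Yield shuffled batches from an iterable using a bounded buffer.

    Hypothetical helper: it mimics the idea behind a shuffling queue,
    not the actual TensorFlow implementation.
    """
    if capacity < min_after_dequeue + batch_size:
        raise ValueError("capacity must be at least min_after_dequeue + batch_size")
    rng = random.Random(seed)
    buffer = []
    source = iter(examples)
    exhausted = False
    while True:
        # Refill the buffer up to its capacity (the job of the queue threads).
        while not exhausted and len(buffer) < capacity:
            try:
                buffer.append(next(source))
            except StopIteration:
                exhausted = True
        # While input remains, leave min_after_dequeue elements behind so every
        # draw mixes over a large pool; once input ends, drain what is left.
        reserve = 0 if exhausted else min_after_dequeue
        if len(buffer) - reserve < batch_size:
            return  # only reachable at end of input: drop the incomplete batch
        batch = []
        for _ in range(batch_size):
            index = rng.randrange(len(buffer))
            # Swap the chosen element to the end so that removal is O(1).
            buffer[index], buffer[-1] = buffer[-1], buffer[index]
            batch.append(buffer.pop())
        yield batch


# Made-up (image, label) pairs standing in for real examples.
examples = [(f"image_{i}", i % 10) for i in range(100)]
batches = list(shuffle_batches(examples, batch_size=16, capacity=50,
                               min_after_dequeue=10))
print(len(batches), len(batches[0]))
```

A larger `min_after_dequeue` gives better mixing at the cost of more memory and a longer warm-up before the first batch is available.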

![Operations – Convolution2D

tf.nn.conv2d(): standard convolution with two-dimensional filters. It takes as input two 4D tensors, one for the mini-batch and one for the filters.

tf.nn.bias_add(): adds a bias to the input tensor; a special case of the more general tf.add() op.

input = … #4D tensor [#batch_example,height,width,#channels]

kernel = … #4D tensor [filter_height, filter_width, filter_depth, #filters]

strides = … #list of 4 ints, the stride along each of the 4 input dimensions

padding = … #one of "SAME"/"VALID", enables or disables zero padding of the input

bias = … #1D tensor with shape [#filters]

conv = tf.nn.conv2d(input, kernel, strides, padding)

conv = tf.nn.bias_add(conv, bias)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-26-320.jpg)
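The effect of `strides` and `padding` on the result is easiest to see through the output shape. The sketch below is a plain-Python helper, not part of TensorFlow; it applies the usual "SAME" and "VALID" size formulas to the shape layouts given on the slide. The name `conv2d_output_shape` and the example sizes are assumptions for illustration.

```python
import math


def conv2d_output_shape(input_shape, kernel_shape, strides, padding):
    """Return the [batch, height, width, channels] output shape of a 2D convolution.

    Hypothetical helper for illustration; it is not part of TensorFlow.
    """
    batch, height, width, channels = input_shape
    filter_height, filter_width, filter_depth, num_filters = kernel_shape
    if filter_depth != channels:
        raise ValueError("filter depth must match the number of input channels")
    _, stride_h, stride_w, _ = strides
    if padding == "SAME":
        # Zero padding is added so that only the stride shrinks the output.
        out_h = math.ceil(height / stride_h)
        out_w = math.ceil(width / stride_w)
    elif padding == "VALID":
        # No padding: the filter must fit entirely inside the input.
        out_h = math.ceil((height - filter_height + 1) / stride_h)
        out_w = math.ceil((width - filter_width + 1) / stride_w)
    else:
        raise ValueError('padding must be "SAME" or "VALID"')
    return (batch, out_h, out_w, num_filters)


# Example sizes are made up: 32 RGB images of 28x28 and 16 filters of 5x5.
same = conv2d_output_shape((32, 28, 28, 3), (5, 5, 3, 16), [1, 1, 1, 1], "SAME")
valid = conv2d_output_shape((32, 28, 28, 3), (5, 5, 3, 16), [1, 2, 2, 1], "VALID")
print(same, valid)
```

The last output dimension always equals the number of filters, which is also the length the bias vector must have.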

![Operations – Deconvolution

tf.nn.conv2d_transpose(): deconvolution with two-dimensional filters. It takes as input two 4D tensors, one for the mini-batch and one for the filters.

input = … #4D tensor [#batch_example,height,width,#channels]

kernel = … #4D tensor [filter_height, filter_width, output_channels, in_channels]

output_shape = … #1D tensor with the output shape of the deconvolution op

strides = … #list of 4 ints, the stride along each of the 4 input dimensions

padding = … #one of "SAME"/"VALID", enables or disables zero padding of the input

bias = … #1D tensor with shape [#filters]

deconv = tf.nn.conv2d_transpose(input, kernel, output_shape, strides, padding)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-27-320.jpg)
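Because the deconvolution needs an explicit `output_shape`, it can help to compute a consistent one from the other arguments. The following plain-Python sketch does that. The helper name `conv2d_transpose_output_shape` and the example sizes are assumptions; for "VALID" padding it returns the smallest shape whose forward convolution gives back the input size, which is one consistent choice rather than the only one.

```python
def conv2d_transpose_output_shape(input_shape, kernel_shape, strides, padding):
    """Return one output shape consistent with a transposed 2D convolution.

    Hypothetical helper for illustration; it is not part of TensorFlow.
    """
    batch, height, width, in_channels = input_shape
    filter_height, filter_width, output_channels, kernel_in_channels = kernel_shape
    if kernel_in_channels != in_channels:
        raise ValueError("last kernel dimension must match the input channels")
    _, stride_h, stride_w, _ = strides
    if padding == "SAME":
        # A forward "SAME" convolution divides the size by the stride,
        # so the transposed op multiplies it back.
        out_h = height * stride_h
        out_w = width * stride_w
    elif padding == "VALID":
        # Smallest size whose forward "VALID" convolution yields the input size.
        out_h = (height - 1) * stride_h + filter_height
        out_w = (width - 1) * stride_w + filter_width
    else:
        raise ValueError('padding must be "SAME" or "VALID"')
    return (batch, out_h, out_w, output_channels)


# Example sizes are made up: upsample 14x14 maps with 16 channels to 3 channels.
same = conv2d_transpose_output_shape((32, 14, 14, 16), (5, 5, 3, 16), [1, 2, 2, 1], "SAME")
valid = conv2d_transpose_output_shape((32, 14, 14, 16), (5, 5, 3, 16), [1, 2, 2, 1], "VALID")
print(same, valid)
```

Note that the kernel layout swaps the roles of the last two dimensions compared with the ordinary convolution, as the slide's comment indicates.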

![Operations – BatchNormalization

tf.contrib.layers.batch_norm: batch normalization layer with trainable parameters.

input = … #4D tensor [#batch_example,height,width,#channels]

is_training = … #Boolean, True if the network is in training

#apply batch norm to input

normed = tf.contrib.layers.batch_norm(input, is_training=is_training)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-28-320.jpg)
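What the layer computes during training can be sketched in NumPy: each channel is normalized with the mean and variance of the current mini-batch, then scaled and shifted by the trainable parameters. This is an illustration only, not the library's implementation. It leaves out the moving averages used at inference time, and the names `batch_norm_train`, `gamma`, `beta` and the value of `epsilon` are assumptions.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, epsilon=1e-3):
    """Training-mode batch normalization of a [batch, height, width, channels] array.

    Hypothetical helper; gamma (scale) and beta (shift) play the role of the
    trainable parameters, with one value per channel.
    """
    # Statistics are computed per channel, over batch and spatial dimensions.
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + epsilon)
    return gamma * x_hat + beta


rng = np.random.default_rng(0)
# Made-up input: 8 examples of 4x4 with 3 channels, far from zero mean / unit variance.
x = rng.normal(loc=5.0, scale=3.0, size=(8, 4, 4, 3))
normed = batch_norm_train(x, gamma=np.ones(3), beta=np.zeros(3))

# With gamma=1 and beta=0 each channel ends up with roughly zero mean and unit std.
print(normed.mean(axis=(0, 1, 2)), normed.std(axis=(0, 1, 2)))
```

At inference time the batch statistics are replaced by averages accumulated during training, which is why the layer needs to know whether the network is in training.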

![Operations – fully connected

tf.matmul(): fully connected layers can be implemented as a matrix multiplication between the input values and the weight matrix, followed by bias addition.

input = … #2D tensor [#batch size, #features]

weights = … #2D tensor [#features, #nodes]

bias = … #1D tensor [#nodes]

#operator overloading to add bias (syntactic sugar)

fully_connected = tf.matmul(input, weights) + bias](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-29-320.jpg)

![Operations - pooling

tf.nn.avg_pool(), tf.nn.max_pool(): pooling operations. Each takes a 4D tensor as input and performs spatial pooling according to the parameters passed to it.

input = … #4D tensor with shape [batch_size,height,width,channels]

k_size = … #list of 4 ints with the size of the pooling window in each dimension

strides = … #list of 4 ints, the stride along each of the 4 input dimensions

padding = … #one of "SAME"/"VALID", enables or disables zero padding of the input

max_pooled = tf.nn.max_pool(input, k_size, strides, padding)

avg_pooled = tf.nn.avg_pool(input, k_size, strides, padding)](https://image.slidesharecdn.com/tensorflow2017-170302111547/85/Tensorflow-Intro-2017-31-320.jpg)
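The pooling window and stride can be made concrete with a small NumPy sketch. It is not TensorFlow code. The helper `pool2d` is hypothetical, it assumes no zero padding (the windows must fit entirely inside the input), and the 4x4 example input is made up.

```python
import numpy as np


def pool2d(x, k_size, strides, reduce_fn):
    """Pool a [batch, height, width, channels] array without zero padding.

    Hypothetical helper; reduce_fn is np.max for max pooling or np.mean
    for average pooling.
    """
    batch, height, width, channels = x.shape
    _, k_h, k_w, _ = k_size
    _, s_h, s_w, _ = strides
    out_h = (height - k_h) // s_h + 1
    out_w = (width - k_w) // s_w + 1
    out = np.empty((batch, out_h, out_w, channels), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            # Window covering rows i*s_h .. i*s_h+k_h and columns j*s_w .. j*s_w+k_w.
            window = x[:, i * s_h:i * s_h + k_h, j * s_w:j * s_w + k_w, :]
            out[:, i, j, :] = reduce_fn(window, axis=(1, 2))
    return out


# One 4x4 single-channel image holding the values 0..15, row by row.
x = np.arange(16, dtype=np.float64).reshape(1, 4, 4, 1)
max_pooled = pool2d(x, [1, 2, 2, 1], [1, 2, 2, 1], np.max)
avg_pooled = pool2d(x, [1, 2, 2, 1], [1, 2, 2, 1], np.mean)

print(max_pooled[0, :, :, 0])  # [[ 5.  7.] [13. 15.]]
print(avg_pooled[0, :, :, 0])  # [[ 2.5  4.5] [10.5 12.5]]
```

The first and last entries of the window and stride lists are 1 because pooling is applied per example and per channel, only across the two spatial dimensions.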