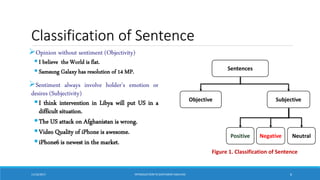

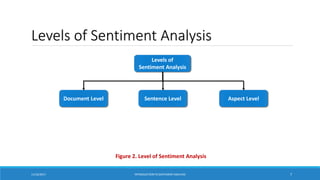

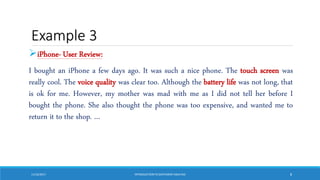

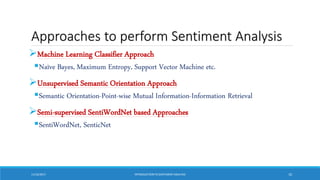

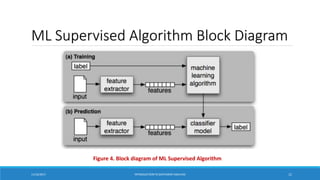

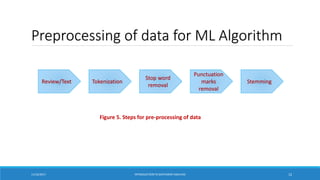

The document provides an introduction to sentiment analysis. It defines sentiment analysis as classifying text as positive, negative, or neutral according to the sentiment it expresses. Sentiment analysis can be performed at the document, sentence, or aspect level. Supervised machine learning algorithms such as Naive Bayes are commonly used for the classification step: the text is first preprocessed into feature vectors, and a model is then trained on labeled examples. The document outlines this process and gives examples that illustrate sentiment analysis techniques.
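As a concrete illustration of the preprocessing step, here is a minimal sketch that turns raw text into bag-of-words count vectors. It assumes a simple lowercase regex tokenizer and raw term counts as features; the function names and the tiny training set are hypothetical and are not taken from the slides.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def build_vocabulary(docs):
    """Map each distinct token in the training documents to a column index."""
    vocab = {}
    for doc in docs:
        for tok in tokenize(doc):
            if tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab


def to_feature_vector(text, vocab):
    """Bag-of-words count vector; tokens outside the vocabulary are ignored."""
    vec = [0] * len(vocab)
    for tok, n in Counter(tokenize(text)).items():
        if tok in vocab:
            vec[vocab[tok]] = n
    return vec


# Hypothetical labeled training data.
train = [("great movie, great cast", "positive"), ("boring and too long", "negative")]
vocab = build_vocabulary(text for text, _ in train)
X = [to_feature_vector(text, vocab) for text, _ in train]  # feature vectors
y = [label for _, label in train]                           # class labels
```

Here `X[0]` is `[2, 1, 1, 0, 0, 0, 0]` because "great" occurs twice in the first review. A word never seen in training (for example "acting" in "Great acting!") simply gets no column, which is one of the simplifications a real pipeline would revisit.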

![NB Machine Learning Approach

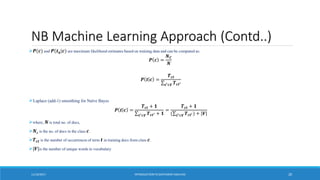

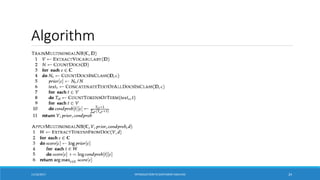

The probability of a document d being in class c is computed as

𝑷 𝒄 𝒅 ∝ 𝑷 𝒄

𝟏≤𝒌≤𝒏𝒅

𝑷( 𝒕𝒌|𝒄)

where, 𝑷(𝒕𝒌|𝒄) is the conditional probability of a term 𝒕𝒌 occurring in a document of class 𝒄.

The goal is to find the best class, i.e., Maximum A Posteriori Class as follows:

𝒄𝒎𝒂𝒑 = 𝒂𝒓𝒈𝒎𝒂𝒙𝒄∈𝑪 𝑷 𝒄 ∗

𝟏≤𝒌≤𝒏𝒅

𝑷( 𝒕𝒌|𝒄)

Which can be reframed as

𝒄𝒎𝒂𝒑 = 𝒂𝒓𝒈𝒎𝒂𝒙𝒄∈𝑪[𝒍𝒐𝒈 𝑷 𝒄 +

𝟏≤𝒌≤𝒏𝒅

𝒍𝒐𝒈 𝑷(𝒕𝒌|𝒄)]

11/10/2017 INTRODUCTIONTO SENTIMENTANALYSIS 19](https://image.slidesharecdn.com/introductiontosentimentanalysis-210607123548/85/Introduction-to-sentiment-analysis-19-320.jpg)
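The log-space MAP rule for $c_{map}$ can be sketched in a few lines of Python. The slide does not say how $P(c)$ and $P(t_k \mid c)$ are estimated, so this sketch assumes the common multinomial model: $P(c)$ is the fraction of training documents labeled $c$, and $P(t_k \mid c)$ uses add-one (Laplace) smoothing over the training vocabulary. The function names and the toy reviews are hypothetical, and test tokens not seen in training are simply skipped.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def train_nb(docs):
    """docs: list of (text, label). Returns (log_prior, log_likelihood, vocab)."""
    class_docs = Counter(label for _, label in docs)
    term_counts = defaultdict(Counter)  # term_counts[c][t] = count of term t in class c
    vocab = set()
    for text, label in docs:
        tokens = tokenize(text)
        term_counts[label].update(tokens)
        vocab.update(tokens)
    # log P(c): fraction of training documents in class c (assumed estimator).
    log_prior = {c: math.log(n / len(docs)) for c, n in class_docs.items()}
    # log P(t|c) with add-one smoothing (assumed estimator).
    log_likelihood = {}
    for c in class_docs:
        total = sum(term_counts[c].values())
        log_likelihood[c] = {
            t: math.log((term_counts[c][t] + 1) / (total + len(vocab))) for t in vocab
        }
    return log_prior, log_likelihood, vocab


def classify_nb(text, log_prior, log_likelihood, vocab):
    """Return argmax_c [log P(c) + sum_k log P(t_k|c)], skipping unseen tokens."""
    tokens = [t for t in tokenize(text) if t in vocab]
    scores = {
        c: log_prior[c] + sum(log_likelihood[c][t] for t in tokens) for c in log_prior
    }
    return max(scores, key=scores.get)


# Hypothetical toy training data.
train = [
    ("great movie, great cast", "positive"),
    ("a wonderful, moving story", "positive"),
    ("boring and too long", "negative"),
    ("a dull, boring plot", "negative"),
]
model = train_nb(train)
print(classify_nb("great story", *model))  # positive
print(classify_nb("boring plot", *model))  # negative

# Why the log form matters: the raw product of many small probabilities underflows.
probs = [1e-3] * 1000
print(math.prod(probs))  # 0.0 in double precision
print(sum(math.log(p) for p in probs))  # a finite negative number, about -6907.76
```

Working with sums of logs keeps every score finite, so the comparison between classes stays meaningful even for long documents, whereas the direct product collapses to 0.0 for every class.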